Design Voice Assistants for Phone Support That Customers Don’t Hate

Design a voice assistant for phone support that matches human CSAT. Learn a practical framework for caller psychology, flows, metrics, and safe escalation.

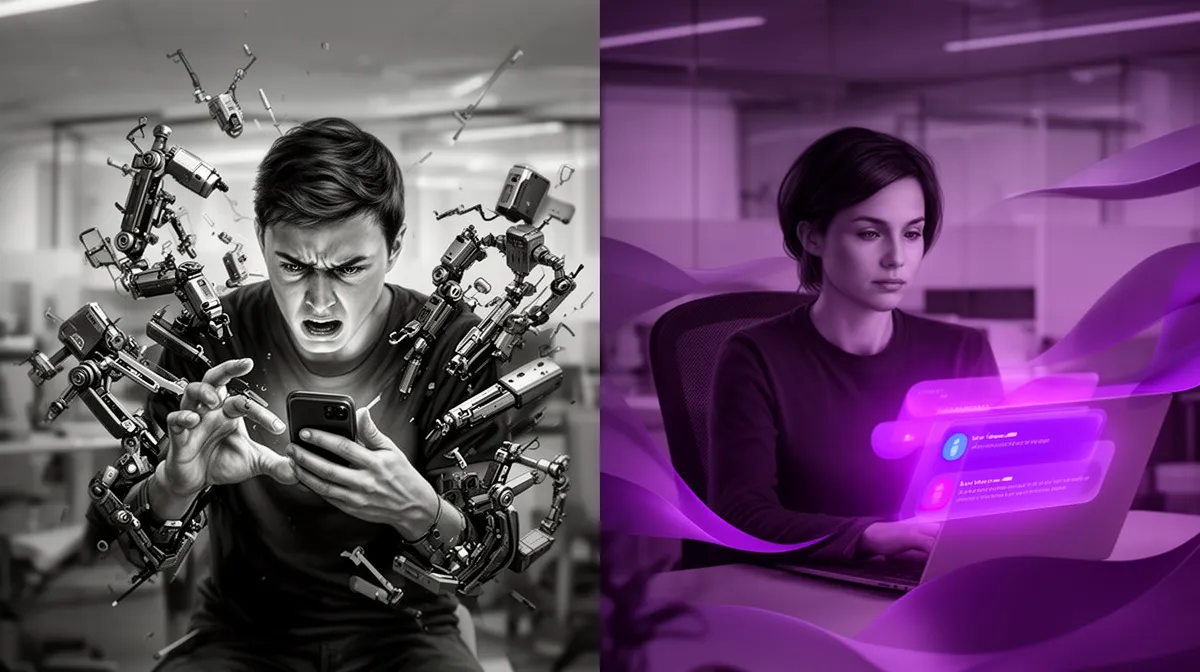

Most teams that deploy a voice assistant for phone support end up in the same place: the tech works, the metrics look fine on paper, and customers still hate calling. ASR is accurate, NLU is solid, dashboards show containment—but CSAT is nowhere near your human agents or even your web chatbot.

That gap isn’t a model problem. It’s a channel problem. We keep treating phone calls like slightly awkward chat sessions or downgraded smart speakers, instead of a channel with its own physics, psychology, and rules.

In this article, we’ll lay out a Phone Support Voice Design Framework that starts from caller behavior and phone constraints, then works up to flows, guardrails, and metrics. If you own KPIs like customer satisfaction (CSAT), FCR, containment, or call center automation ROI, this is for you.

At Buzzi.ai, we build channel-native AI voice assistants for WhatsApp and phone that actually move CX metrics, not just impress your data science team. Let’s unpack why the “phone support paradox” exists—and how to design your way out of it.

Why Voice Assistants for Phone Support Trail Chatbots on CSAT

The Phone Support Paradox: Great Tech, Bad Experience

The paradox is simple: speech recognition and natural language understanding are finally good enough for production, yet CSAT for a typical voice assistant for phone support still lags both chatbots and human agents. Containment looks healthy, average handle time may even drop, but social feeds and survey comments tell a different story.

Contact center AI vendors proudly report metrics like “40% self-service containment” or “30% call deflection.” Meanwhile, callers describe your new voice bot as “that robot wall” or “worse than the old IVR.” Quantitative success, qualitative pain.

The root cause: we port chatbot flows to phone almost verbatim. In chat, people can see a history, skim options, and click buttons. On phone support, there’s no scrollback, no visual affordances, and much higher emotional stakes. Patterns that feel efficient in chat become oppressive or confusing when spoken linearly.

Imagine this: a customer calls about a card decline. The voice bot greets them warmly, asks an open-ended question, mishears the answer, then launches a long clarification prompt. By the time the caller finally gets to “talk to an agent,” they’ve explained the issue three times. In chat, a similar issue might be resolved with a quick back-and-forth, a link, and a confirmation. Same backend intelligence, completely different customer experience.

What’s Unique About Phone Callers’ Psychology

Phone callers rarely arrive in a neutral state. They’re under time pressure (lunch break, airport queue), already frustrated (failed self-service on app or web), or anxious (billing, fraud, service outage). Their tolerance for ambiguity is low; their expectation for rapid, clear progress is high.

On a call, silence feels like failure. A long prompt feels like a lecture. Misrecognition feels like disrespect: “I just told you that.” That emotional layering is why a small latency bug or clumsy prompt can tank customer satisfaction (CSAT) far more on phone than in chat.

Contrast that with a chat session or a smart speaker. Chat is semi-asynchronous: people often multitask, tolerate some delay, and lean on the visual transcript as external memory. Smart speakers are usually used in low-stakes, domestic contexts—playing music, checking weather—where expectations are forgiving. Phone support is different: it’s where customers go when everything else has failed.

Research on IVR and automated phone systems consistently shows higher stress and lower patience among callers than in digital channels. For an overview of IVR frustration and abandonment patterns, see the ACM Digital Library: ACM research on IVR user behavior.

Structural Constraints of the Phone Channel

Then there are the hard constraints of the channel itself. Phone is a purely auditory, linear medium. Every interaction obeys strict turn-taking rules. You can’t show menus, hyperlinks, or rich cards; you have to speak them. There’s no scrollback, so everything relies on human memory and attention.

That matters for your voice user interface (VUI). Long lists of options, multi-intent prompts (“you can say… or… or…”), and nested conditions that work in chat are brutal on phone. Latency is also unforgiving: even 800 milliseconds between turns can feel broken, especially if your barge-in detection is off and callers keep talking over the bot.

Some patterns that work in chat but fail on phone:

- Rich cards with three or four options become a 20-second spoken menu that callers forget halfway through.

- Long FAQ-style responses that are skimmable in chat turn into dense monologues that no one can retain.

- Multi-step forms where you can scroll up and correct inputs become linear gauntlets—one mistake and you’re back to the start.

To succeed, you need a phone-first conversational architecture, not an omnichannel customer support design that treats phone as an afterthought.

Phone vs Chat vs Smart Speaker: Different Channels, Different Rules

Turn-Taking and Silence Tolerance

Phone calls have near-zero tolerance for dead air. Humans expect sub-second responses in most turns; 400–800 ms of latency can already feel like a technical glitch, not “the system thinking.” When that happens, callers repeat themselves, leading to double-requests and confusion.

That’s why tuning turn-taking, barge-in detection, and latency is non-negotiable. On chat, a two-second delay is barely noticeable. With smart speakers, users already expect a brief pause. On phone, the same delay can knock CSAT down several points and inflate handle time.

Picture a poorly tuned system: the bot waits 2.5 seconds after the caller speaks before responding. The caller assumes it didn’t hear, repeats the request, and the ASR captures overlapping audio. Intent confidence drops, the voice bot asks for clarification, and frustration spikes. All from a latency budget that made sense in your test lab but not in real contact center AI conditions.

Memory, Context, and Cognitive Load

On phone, callers can’t see their options. Every detail you speak has to be held in working memory, which is limited—especially under stress. That’s why the classic 6–8 option IVR menu performs so badly in the wild.

Chat, by contrast, provides a persistent transcript. People glance, scroll, and revisit earlier information. This difference should reshape how you design conversational IVR flows and how you think about call intent detection.

Instead of a single 6-option menu, a phone-specific conversational design might use two narrowing questions: first identify the broad area (billing, technical, account), then disambiguate within that. You’re trading one long high-load step for two shorter low-load steps—much easier for callers to follow.
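As a rough sketch, the two-step narrowing pattern can be written as a small lookup table plus two prompt builders. The `MENU` contents and the function names are our own illustrative assumptions, not a recommended taxonomy; the point is only that each spoken step carries three options or fewer.

```python
# Hypothetical two-level menu: spoken area label -> spoken options within it.
# Labels and options are illustrative only.
MENU = {
    "your bill": ["a charge you don't recognize", "a due date", "something else"],
    "a technical issue": ["an outage", "slow speeds", "something else"],
    "your account": ["a password reset", "a change of details", "something else"],
}


def spoken_list(options):
    """Join options the way a prompt would read them aloud: 'a, b, or c'."""
    options = list(options)
    if len(options) == 1:
        return options[0]
    if len(options) == 2:
        return " or ".join(options)
    return ", ".join(options[:-1]) + ", or " + options[-1]


def first_prompt():
    """Step 1: identify the broad area only."""
    return f"Briefly, what are you calling about today: {spoken_list(MENU)}?"


def second_prompt(area):
    """Step 2: disambiguate within the area the caller picked."""
    return f"Got it, {area}. Is this about {spoken_list(MENU[area])}?"
```

Calling `second_prompt("your bill")` yields a prompt close to the billing example used later in this article. A useful design check is that no entry in `MENU` grows past three options; when it does, that is usually a sign the step needs splitting again.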

Expectations of Empathy and Authority

Voice feels personal. When someone hears a voice—human or synthetic—they instinctively expect empathy, competence, and a clear sense that “someone is on this with me.” A virtual agent for phone calls can’t get away with the clipped, tool-like responses that are fine in chat.

Small script changes matter. “Processing request” sounds like a machine. “Got it, I’ll check that for you right away” sounds like a helper. Same backend action, different emotional impact—and different customer experience (CX) metrics.

Smart speakers are mostly used as tools; expectations are lower. Phone support is an escalation channel. By the time someone calls, they expect your system to act with the same authority and care as a live agent. Your voice AI for customer service needs to sound like it understands that.

The Phone Support Voice Design Framework: Overview

Four Layers of a High-Performing Phone Voice Assistant

To build the best voice assistant for phone support in call centers, you need a framework that starts from the channel, not the model. We use a four-layer structure:

- Caller Psychology – emotional state, expectations, trust.

- Interaction Mechanics – turn-taking, latency, barge-in, silence.

- Dialog Strategy – prompts, confirmations, error handling.

- Operational Guardrails – compliance, escalation, routing, monitoring.

You can picture this as a stack. Caller psychology is the foundation; interaction mechanics sit on top; dialog strategy uses those mechanics to shape conversations; operational guardrails wrap the whole thing so your voice assistant for phone support is safe, compliant, and measurable.

Most failed deployments obsess over NLU and ignore at least two of these layers. A true framework for designing phone support voice assistants assumes phone-specific constraints in every layer.

How This Framework Connects to Business Metrics

Each layer maps directly to business KPIs. Caller psychology and dialog strategy shape customer satisfaction (CSAT) and first call resolution (FCR). Interaction mechanics affect drop-off rates, abandonment, and total handle time. Operational guardrails determine self-service containment rate, quality of escalations, and compliance incidents.

The goal isn’t “high accuracy”; it’s human-like performance at scale. That means combining strong ASR/NLU with flows that reach CSAT and FCR comparable to your human agents, while still delivering automation and queue deflection.

We’ve seen contact centers move from a pure containment target (“hit 50% and declare victory”) to a balanced scorecard: containment, FCR, CSAT, and escalation quality. That mindset shift is where real call center automation ROI shows up.

Where This Fits with Existing IVR and Chat Investments

This is not a big-bang IVR replacement mandate. You can wrap existing DTMF menus with conversational IVR for high-volume intents while leaving legacy flows in place. You can reuse chat NLU models and knowledge articles but redesign prompts and decision points for phone.

Over 12–18 months, a typical path looks like: (1) add a conversational front-door to your IVR, (2) gradually migrate common journeys to full automation, (3) retire brittle DTMF trees. Along the way, you integrate with ACD, CRM, and existing chatbots, orchestrating call routing based on intent and risk.

If you’re exploring adjacent applications, you can still anchor them in this framework; the layers generalize well across inbound and outbound scenarios. For examples, see our overview of voice AI use cases beyond support.

Layer 1: Design Around Caller Psychology, Not Menu Trees

Opening Seconds: Reduce Threat, Signal Competence

The first 5–10 seconds of a call make or break trust. If your greeting sounds vague, overly branded, or evasive about reaching a human, callers assume they’re trapped in a wall of automation. That’s when hang-ups spike.

A good opening for a voice assistant for phone support does three things quickly: says who it is, states what it can do, and explains the escape hatch. For example: “You’ve reached Acme Support. I’m an automated assistant that can help with billing, orders, and account issues. You can say ‘agent’ at any time to talk with a person.”

Compare that to: “Thank you for calling Acme, the leader in innovative solutions for customers worldwide…” followed by 20 seconds of branding and legal boilerplate. One respects phone support reality; the other ignores why people call.

Framing the Interaction to Match Caller Goals

Callers don’t want a conversation; they want a job done. The art of designing a voice assistant for customer phone support is framing prompts around those jobs, not your org chart.

Instead of “How can I help you?”—which invites unfocused monologues—try: “Briefly, what are you calling about today? For example, a bill, a technical issue, or an appointment.” Then quickly confirm: “Got it, your bill. Is this about a charge you don’t recognize, a due date, or something else?”

That pattern helps call intent detection without overloading the caller. You’re steering them into solvable paths while staying conversational. The same approach works across billing, technical support, scheduling, and more.

Handling Emotion and Escalation Gracefully

Emotion is data. Repeated requests, rising volume, specific anger keywords, or long silences are all signals that the current path isn’t working. Your virtual agent for phone calls should treat those as triggers to change behavior, not just errors.

For example, after two failed attempts to capture an amount for a disputed charge, the assistant might say: “I’m sorry this is taking longer than it should. I’ll connect you to a specialist who can help, and I’ll pass along what you’ve already told me so you don’t need to repeat it.” Then it actually does pass that context.

Empathy phrases without concrete next steps don’t move CSAT. Real de-escalation combines tone (“I can see this is frustrating”) with action (shortening questions, summarizing, escalating to a human agent when needed).
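Here is a minimal sketch of treating those signals as triggers rather than errors. The keyword list, the four-second silence threshold, the two-failure limit, and the action names are all assumptions for illustration; a real system would tune them against its own calls and would likely use acoustic features such as volume as well.

```python
import re
from dataclasses import dataclass

# Hypothetical anger vocabulary; a production list would come from call review.
ANGER_KEYWORDS = {"ridiculous", "useless", "terrible", "unacceptable"}


@dataclass
class TurnSignals:
    transcript: str          # what the caller said on this turn
    repeated_request: bool   # caller restated something already given
    silence_seconds: float   # silence before the caller spoke
    failed_attempts: int     # failed captures of the current slot so far


def next_action(signals: TurnSignals) -> str:
    """Pick a behavior change based on the caller's signals."""
    words = set(re.findall(r"[a-z']+", signals.transcript.lower()))
    # An explicit request for a person, or two failed captures, ends the loop.
    if "agent" in words or signals.failed_attempts >= 2:
        return "escalate_with_summary"
    # Anger or repetition: acknowledge and shorten the next question.
    if words & ANGER_KEYWORDS or signals.repeated_request:
        return "shorten_and_acknowledge"
    # A long silence suggests the caller is unsure what to say.
    if signals.silence_seconds >= 4.0:
        return "reprompt_with_example"
    return "continue"
```

The ordering matters: escalation checks come first so that an angry caller who has already failed twice is handed off instead of being asked a shorter version of the same question.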

Layer 2: Interaction Mechanics—Turn-Taking, Barge-In, and Latency

Set Aggressive but Realistic Latency Budgets

On phone, your total latency budget per turn—ASR, NLU, dialog management, TTS—should be measured in hundreds of milliseconds, not seconds. For simple yes/no or short utterances, aim for sub-second responses; for more complex queries, keep it as close to that as possible.

Architectural choices drive this. Streaming ASR instead of chunked, local caching of common responses, prefetching likely next prompts—all of these cut latency. The payoff is directly visible in customer experience (CX) metrics: fewer interruptions, fewer double-requests, smoother turn-taking.

General usability research points the same way: roughly one second is the limit for keeping a user's flow of thought uninterrupted, and phone callers tend to be less forgiving than screen users. The Nielsen Norman Group has a helpful overview of response-time limits: Nielsen Norman on response times.
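One way to make a per-turn budget concrete is to record each stage's time and compare the sum against a target. The sketch below assumes an 800 ms budget and four stage names of our own choosing; the sample timings in the usage note are made up, and real numbers have to come from your own traces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurnTiming:
    """Hypothetical per-stage timings for one turn, in milliseconds."""
    asr_ms: int
    nlu_ms: int
    dialog_ms: int
    tts_first_audio_ms: int  # time until the caller hears the first audio

    def total_ms(self) -> int:
        return self.asr_ms + self.nlu_ms + self.dialog_ms + self.tts_first_audio_ms


def over_budget(timing: TurnTiming, budget_ms: int = 800) -> bool:
    """True when the turn exceeds the assumed end-to-end budget."""
    return timing.total_ms() > budget_ms


def slowest_stage(timing: TurnTiming) -> str:
    """Name the stage that contributes most to the turn's latency."""
    stages = {
        "asr": timing.asr_ms,
        "nlu": timing.nlu_ms,
        "dialog": timing.dialog_ms,
        "tts": timing.tts_first_audio_ms,
    }
    return max(stages, key=stages.get)
```

For example, `TurnTiming(300, 120, 80, 250)` totals 750 ms and stays within budget, with ASR as the largest contributor. Tracking the slowest stage per intent tends to show quickly whether streaming ASR, caching, or prefetching is the better investment.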

Smart Barge-In: Let Callers Interrupt Without Chaos

Barge-in is one of the biggest differences between phone and other channels. Callers will interrupt your AI voice assistant mid-prompt. If you don’t handle that intelligently, you’ll end up with mangled intents and “I didn’t get that” loops.

Best practices for IVR and AI voice assistants in phone support include defining barge-in windows (when interruption is allowed), updating intent mid-utterance when possible, and safely rolling back partial prompts. You also need clear exceptions: no barge-in during mandatory compliance disclosures or payment confirmations.

A good experience might look like this: the bot starts identity verification, the caller jumps in with “Yes, it’s about my last bill,” and the system pauses, acknowledges, and then weaves that new information into the flow without losing track of where it was.
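A barge-in policy along these lines can be sketched as a small rule: protected prompt types never accept interruption, and everything else does once the prompt has played for a short minimum time. The prompt categories and the 300 ms guard (to avoid triggering on line noise right at prompt start) are assumptions for illustration, not settings from any particular telephony platform.

```python
from enum import Enum


class PromptKind(Enum):
    GREETING = "greeting"
    QUESTION = "question"
    COMPLIANCE_DISCLOSURE = "compliance_disclosure"
    PAYMENT_CONFIRMATION = "payment_confirmation"


# Prompts that must be heard in full, per the exceptions described above.
PROTECTED = {PromptKind.COMPLIANCE_DISCLOSURE, PromptKind.PAYMENT_CONFIRMATION}


def barge_in_allowed(kind: PromptKind, elapsed_ms: int, min_play_ms: int = 300) -> bool:
    """Allow interruption unless the prompt is protected or has barely started."""
    if kind in PROTECTED:
        return False
    return elapsed_ms >= min_play_ms
```

Keeping the protected set explicit makes it easy for compliance reviewers to see exactly which prompts cannot be skipped.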

Silence, Overlap, and Noisy Environments

Real-world phone support isn’t quiet. People call from cars, shops, trains. Speech recognition accuracy drops, and your design has to adapt. That means higher confidence thresholds for risky actions, targeted confirmations, and sometimes a fallback to DTMF when voice is unreliable.

Silence handling also matters. Two identical “Sorry, I didn’t get that” messages feel clueless. A better sequence might shift from open question to binary choice to explicit suggestion (“If it’s loud where you are, you can use your keypad instead”).

These mechanics are invisible when things go right—and glaring when they go wrong. The difference in CSAT between a voice bot that adapts and one that stubbornly repeats itself is enormous, even if their underlying ASR models are similar.

Layer 3: Dialog Strategy—Prompts, Confirmations, and Error Handling

Prompt Design: Short, Specific, and One Cognitive Step at a Time

Prompt design is where phone-specific conversational design for AI voice support becomes tangible. On phone, every extra clause adds cognitive load. The rule of thumb: ask for one thing at a time, in 10–15 words if you can, and avoid hidden conditions.

Instead of: “For billing questions say ‘billing’, for technical support say ‘technical support’, for appointments say ‘appointments’ or press the corresponding number on your keypad,” try: “Briefly, what are you calling about today—your bill, a technical issue, or an appointment?” Follow with a quick confirmation.

This may sound like a small change, but it’s often the difference between a voice bot that feels conversational and one that feels like a legacy IVR in disguise. Conversation design here is about pacing and cognitive load more than clever wording.

Confirmations That Build Trust Without Dragging the Call

Confirmations are a lever to balance speed, safety, and trust. For low-risk actions, a “light” confirmation—restating only the key element—is enough: “Just to confirm, you said your internet is down, right?” For high-risk actions like payments, you need “strong” confirmations: amounts, dates, last four digits, and explicit consent.

Designers of the best voice assistant for phone support in call centers vary confirmation depth by risk and user signals. If the caller sounds confident and you’re in a low-risk flow, skip redundant confirmations to save time. If you detect uncertainty or noise, lean into stronger confirmation to protect FCR and avoid rework.

Think in flows, not turns: an extra two seconds of confirmation may prevent a second call, raising both FCR and CSAT.
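To illustrate varying confirmation depth by risk and caller signals, here is a minimal sketch. The two risk levels, the 0.85 confidence threshold, and the `noisy` flag are assumptions; in practice you would calibrate the threshold per intent from your own recognition data.

```python
SKIP_THRESHOLD = 0.85  # hypothetical ASR confidence above which we trust a low-risk answer


def confirmation_level(risk: str, asr_confidence: float, noisy: bool = False) -> str:
    """Return 'skip', 'light', or 'strong' for the next confirmation step."""
    if risk not in ("low", "high"):
        raise ValueError(f"unknown risk level: {risk!r}")
    # Payments and similar actions always get the full confirmation.
    if risk == "high":
        return "strong"
    # Low risk, clear audio, confident recognition: save the caller's time.
    if asr_confidence >= SKIP_THRESHOLD and not noisy:
        return "skip"
    # Low risk but uncertain or noisy: restate the key element only.
    return "light"
```

A "light" result maps to restating just the key element, while "strong" maps to reading back amounts, dates, and last four digits and asking for explicit consent.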

Error Handling That Feels Competent, Not Clueless

Error handling is where many teams realize why their phone support voice bot is frustrating customers. Repeating the same “I didn’t catch that” line three times feels like talking to a wall. Instead, design tiers of recovery.

First misrecognition: a simple paraphrase and retry. Second: narrow the question or offer examples. Third: switch modality or escalate: “It sounds like this might be easier with a person. I’ll connect you to an agent and share what I have so far.” Behind the scenes, send a concise summary so the caller doesn’t start from zero.
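The three tiers can be expressed as an ordered list indexed by how many consecutive misses have occurred. The tier names below are illustrative labels of our own; each would map to a prompt or a transfer in your dialog manager.

```python
# Recovery tiers in the order described above; names are illustrative.
RECOVERY_TIERS = (
    "paraphrase_and_retry",           # first miss
    "narrow_question_with_examples",  # second miss
    "switch_modality_or_escalate",    # third miss and beyond
)


def recovery_action(consecutive_misses: int) -> str:
    """Map the number of consecutive misrecognitions to a recovery tier."""
    if consecutive_misses < 1:
        raise ValueError("consecutive_misses must be at least 1")
    # Clamp so any miss past the third stays on the final tier.
    index = min(consecutive_misses, len(RECOVERY_TIERS)) - 1
    return RECOVERY_TIERS[index]
```

Resetting the counter after any successful turn keeps one bad moment early in the call from pushing a later, unrelated miss straight to escalation.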

Done well, this strategy protects your self-service containment rate without sacrificing dignity. Done poorly, it drives abandonment and angry callbacks.

Layer 4: Operational Guardrails—Compliance, Escalation, and Live Metrics

Safe Escalation Paths That Don’t Punish Callers

Escalation isn’t failure. For complex or sensitive issues, a fast, well-routed handoff to a human can actually improve CSAT compared to dumping callers straight into a long queue. The key is to make escalation feel like progress, not a reset.

Best practice: the AI voice assistant completes simple identity checks, gathers a short description, and tags the intent. Then it routes to the right queue—fraud, retention, technical tier 2—with a summary visible to the agent. The agent greets the caller with, “I see you’re calling about a disputed charge on your last bill; let me pull that up.”

This protects queue deflection where it makes sense and builds trust where automation should gracefully step back.
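The handoff described above comes down to a small record that travels with the call. The sketch below assumes a hypothetical intent-to-queue table and field names of our own; how the summary actually reaches the agent desktop depends on your ACD and CRM integration.

```python
from dataclasses import dataclass

# Hypothetical routing table; intents and queue names are illustrative.
QUEUE_BY_INTENT = {
    "disputed_charge": "fraud",
    "cancel_service": "retention",
    "connection_fault": "technical_tier_2",
}


@dataclass(frozen=True)
class Handoff:
    intent: str
    queue: str
    identity_verified: bool
    description: str


def build_handoff(intent: str, identity_verified: bool, description: str) -> Handoff:
    """Tag the call with a queue and keep the caller's own words for the agent."""
    queue = QUEUE_BY_INTENT.get(intent, "general")  # unknown intents go to a general queue
    return Handoff(intent, queue, identity_verified, description.strip())


def screen_pop(handoff: Handoff) -> str:
    """One-line summary an agent could see before greeting the caller."""
    status = "verified" if handoff.identity_verified else "NOT verified"
    return f"[{handoff.queue}] {handoff.intent} | caller {status} | {handoff.description}"
```

Showing verification status in the summary lets the agent skip repeated identity questions when the bot has already completed them.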

Compliance, Consent, and Data Handling on Phone

Phone is not a compliance-free zone. If your voice AI for customer service takes payments, accesses health data, or records calls, you’re in PCI-DSS, HIPAA, or other regulated territory. You need clear consent flows and robust data handling.

The trick is to integrate consent without tanking CSAT. That might mean a concise statement right after greeting (“This call may be recorded and processed by our automated system to help with your request”) and a simple opt-out path. For payments, route sensitive keypresses via secure DTMF masking and avoid storing raw card numbers.

Standards bodies such as the PCI Security Standards Council provide guidance on phone payments: see, for example, their overview of PCI DSS requirements and how they apply to card-not-present transactions.

Metrics That Matter: Beyond Containment

If you only track containment, you’ll optimize for the wrong outcomes. A more complete scorecard for improving CSAT with phone support voice assistants includes:

- CSAT specific to bot-handled calls vs agent-handled calls.

- FCR including hybrid journeys (bot + agent).

- Transfer rates and reasons (design failure vs right escalation).

- Average handle time including the bot leg, not just agent time.

- Step-level drop-off and error hotspots.

Combine quantitative metrics with qualitative review: listen to call snippets, read transcripts, categorize why customers escalate. Over the first 90 days of a new deployment, your dashboard should show not just utilization but a trend toward human-like CSAT and FCR as you iterate.
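As a sketch of how such a scorecard might be computed from call records, the example below assumes every call starts with the bot and that a transfer marks a hybrid journey. The `Call` fields and the 1 to 5 CSAT scale are assumptions about your data, not a standard schema.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Call:
    transferred: bool             # True if the bot handed off to an agent
    resolved_first_contact: bool  # no repeat call for the same issue
    csat: Optional[int]           # 1-5 survey score, None if no survey answered
    bot_seconds: int
    agent_seconds: int


def avg_csat(calls):
    """Mean CSAT over calls that have a survey score, or None if there are none."""
    scores = [c.csat for c in calls if c.csat is not None]
    return mean(scores) if scores else None


def scorecard(calls):
    """Containment, FCR, segmented CSAT, and handle time including the bot leg."""
    bot_only = [c for c in calls if not c.transferred]
    hybrid = [c for c in calls if c.transferred]
    return {
        "containment": len(bot_only) / len(calls),
        "transfer_rate": len(hybrid) / len(calls),
        "fcr": sum(c.resolved_first_contact for c in calls) / len(calls),
        "csat_bot_only": avg_csat(bot_only),
        "csat_hybrid": avg_csat(hybrid),
        "aht_seconds": mean(c.bot_seconds + c.agent_seconds for c in calls),
    }
```

Transfer reasons and step-level drop-off need richer event data than this record holds, so they are left out of the sketch.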

Testing, Iteration, and Pricing Realities for Phone Voice Assistants

From Pilot to Production: How to Deploy Without Backlash

Deploying an AI voice assistant for inbound phone support is high-risk if you flip the switch everywhere on day one. A better path is a staged rollout: start with a few low-risk intents (e.g., balance checks, appointment confirmations) during limited hours, then expand.

Crucially, offer clear “escape hatches” to agents from the start, and measure not just containment but satisfaction among those who use the bot. Tell customers upfront: “You’ll first speak with our automated assistant which can solve common issues or get you to the right person.”

Brands that skip this stage often end up in the press for the wrong reasons—a poorly designed voice IVR that blocks customers from humans. Numerous case studies, like the widely covered backlash against a major airline’s phone bot experiment (see, for instance, reporting from The Wall Street Journal on IVR frustrations), show how fast reputational damage can spread.

A/B Testing Prompts, Flows, and Escalation Rules

Once live, treat your assistant as a living system, not a static project. Basic experimentation can unlock surprising gains in customer satisfaction (CSAT) and first call resolution (FCR).

Formulate hypotheses: “A shorter greeting will reduce early hang-ups,” or “Explicitly mentioning the ‘agent’ escape hatch will increase trust without killing containment.” Then A/B test scripts, confirmation styles, or escalation thresholds by intent or customer segment.

We’ve seen simple greeting tests deliver 10–15% CSAT uplift: version A overloaded callers with branding; version B got to capabilities and next steps faster. The difference was about 10 words—but it changed behavior.
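Before acting on a greeting test, it helps to check that the difference is larger than noise. One common approach, which we are naming here ourselves and which the tests above did not necessarily use, is a two-proportion z-test on early hang-up rates. The call counts in the usage note are invented for illustration.

```python
import math


def two_proportion_z(hangups_a: int, calls_a: int, hangups_b: int, calls_b: int):
    """Two-sided z-test for a difference in hang-up rates between two greetings."""
    rate_a = hangups_a / calls_a
    rate_b = hangups_b / calls_b
    # Pooled rate under the null hypothesis that both greetings perform the same.
    pooled = (hangups_a + hangups_b) / (calls_a + calls_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / calls_a + 1 / calls_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With 120 hang-ups in 1,000 calls for version A and 90 in 1,000 for version B, `two_proportion_z(120, 1000, 90, 1000)` returns a z-score a little above 2 and a p-value below 0.05, which would usually justify rolling out version B while continuing to monitor.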

Understanding Cost and Pricing Models Without Getting Trapped

Pricing for an AI voice assistant for call center phone support typically mixes platform fees with usage-based charges. Common models include per-minute, per-call, or per-interaction pricing. On top of that, there are integration, compliance, and ongoing optimization costs.

It’s tempting to choose the cheapest minutes and call it a win. But a poorly designed assistant with low CSAT and containment will drive repeat calls, agent escalations, and churn—more expensive than a better-designed system with higher upfront investment. Design quality is part of your AI development ROI, not a nice-to-have.

Imagine two assistants handling 100,000 minutes per month. The cheap one costs $0.02/min but has 20% containment and low FCR, resulting in 80,000 minutes of follow-up agent time. The better-designed one costs $0.04/min but reaches 50% containment and higher FCR, leaving roughly 50,000 minutes of agent time, about 30,000 fewer. The extra $2,000 a month in bot fees is small next to that agent time, so total cost of ownership tilts strongly toward the second, even before you factor in customer satisfaction and retention.
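The comparison can be worked through in a few lines. The bot rates and containment figures come from the example above; the $1.00 per minute agent cost is purely a hypothetical placeholder, and the model assumes agent minutes scale with the share of traffic that is not contained, as the 20% and 80,000-minute figures imply.

```python
def monthly_cost(total_minutes, bot_rate, containment, agent_rate):
    """Bot fees plus agent cost for the share of traffic the bot does not contain."""
    bot_cost = total_minutes * bot_rate
    agent_minutes = total_minutes * (1 - containment)
    return bot_cost + agent_minutes * agent_rate


AGENT_RATE = 1.00  # hypothetical fully loaded agent cost per minute, in dollars

cheap = monthly_cost(100_000, bot_rate=0.02, containment=0.20, agent_rate=AGENT_RATE)
better = monthly_cost(100_000, bot_rate=0.04, containment=0.50, agent_rate=AGENT_RATE)

print(f"cheap: ${cheap:,.0f}  better: ${better:,.0f}  saving: ${cheap - better:,.0f}")
```

Under these assumptions the cheap assistant comes to about $82,000 a month and the better-designed one to about $54,000. Substitute your own agent cost; the gap narrows as agent time gets cheaper, but the bot fee difference stays at $2,000.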

If you want help scoping this, an AI discovery workshop can surface where design investments will pay back fastest.

How Buzzi.ai Helps You Build a High-Performing Phone Voice Assistant

Channel-Native Voice Design, Not Just Models

At Buzzi.ai, we don’t ship generic bots. We specialize in channel-native voice AI for customer service, with deep focus on phone and WhatsApp. That means our projects start from caller psychology, device constraints, and operational realities—not just a list of intents.

Our teams handle end-to-end conversation design, latency-optimized architectures, and safe escalation and call routing—aligned to the four-layer framework in this article. The result is a voice assistant for phone support that sounds human, routes intelligently, and is actually judged “better than the old IVR” by customers.

In a recent engagement, we inherited an underperforming phone bot with decent NLU but terrible reviews. By redesigning openings, shortening prompts, tightening barge-in logic, and clarifying escalation paths, we lifted CSAT by double digits and nearly doubled effective self-service containment rate.

From Discovery to Ongoing Optimization

Our process is straightforward: start with discovery and call analysis, design high-impact pilot flows, then iterate based on real interactions. Over 4–6 weeks, we can usually identify why your current phone support voice bot is frustrating customers and prototype fixes that move the needle.

We integrate with your existing telephony, CRM, ticketing, and analytics stack so that deploying an AI voice assistant for inbound phone support doesn’t mean rebuilding your contact center from scratch. Think of us as an AI agent development partner embedded in your CX team.

If you’re considering AI voice assistant development services, we’ll help you quantify the impact on CSAT, FCR, and costs before you commit to a full rollout.

Conclusion: Phone Support Voice Assistants Customers Don’t Hate

Low CSAT in phone voice assistants is not a law of nature; it’s the result of channel-agnostic design. When you treat phone like chat with audio or a smart speaker with hold music, you inherit all the wrong patterns and none of the right ones.

The Phone Support Voice Design Framework we’ve outlined—Caller Psychology, Interaction Mechanics, Dialog Strategy, Operational Guardrails—gives you a way to design intentionally for phone. Done well, it closes the gap between automation and human agents on CSAT, FCR, and containment.

Your next step is simple: audit your current flows against these four layers. Where are you overloading memory? Where is latency too high? Where are escalations punishing callers? If you’d like a partner to do this with you, schedule an AI discovery session with Buzzi.ai and let’s design a voice assistant for phone support that your customers actually prefer.

FAQ: Designing Voice Assistants for Phone Support

Why do voice assistants for phone support often have lower CSAT than chatbots?

Phone callers are more stressed, impatient, and sensitive to friction than chat users. When we reuse chatbot patterns—long menus, dense explanations, slow responses—on phone, those design choices amplify frustration. The result is lower CSAT even if your ASR and NLU are technically strong.

How should I design the opening script of a phone support voice assistant?

Keep the opening short and focused on trust. State who the assistant is, what it can do, and how to reach a human (“You can say ‘agent’ anytime”). Avoid long branding intros or legal boilerplate up front; those can be integrated later in the flow without spiking early hang-ups.

What is a Phone Support Voice Design Framework and how does it work?

It’s a structured way to design your phone voice bot around the realities of the channel. Our framework has four layers—Caller Psychology, Interaction Mechanics, Dialog Strategy, and Operational Guardrails—that connect directly to KPIs like CSAT, FCR, and containment. Instead of optimizing just the model, you design the whole experience end to end.

Which conversational patterns from chatbots should I avoid on phone-based voice assistants?

Avoid long multi-option menus, dense FAQ-style answers, and prompts that ask for multiple things at once. These rely on visual memory and scanning, which don’t exist on phone. Instead, break flows into short, single-purpose prompts and use clarifying questions to guide callers step by step.

How can I improve CSAT and FCR with a voice assistant for phone support?

Start by tightening openings, latency, and barge-in behavior so calls feel responsive. Then redesign prompts and confirmations to match caller goals and risk levels, and add smart escalation that passes context to agents. Continuous A/B testing of scripts and flows will reveal where small wording or timing changes drive big gains in CSAT and FCR.

What are best practices for barge-in, turn-taking, and latency on support calls?

Target sub-second response times for most turns and test under real network conditions, not just in the lab. Allow barge-in on most prompts but protect compliance statements and payment confirmations. Design your VUI to handle interruptions gracefully by updating intent mid-stream and resuming the dialog without confusing callers.

How should my phone voice assistant escalate to human agents without frustrating callers?

Escalation should feel like progress. Have the bot complete basic verification, capture a concise description, and tag intent before transferring. Then pass that context to the agent so the caller doesn’t repeat everything. Clear messaging about wait times and who they’re being connected to also reduces frustration.

Which metrics matter most to judge if my phone voice assistant is successful?

Look beyond containment to CSAT specific to bot interactions, FCR across bot and agent legs, transfer rates and reasons, and step-level drop-off. Combine those with qualitative review of transcripts and call recordings. Over time, you should see trends toward human-like CSAT and FCR, along with reduced agent handle time.

How do compliance and data privacy requirements affect AI voice assistants on phone?

They shape how you handle recording consent, payments, and sensitive data. You’ll need clear consent language, secure handling of card details (often via DTMF masking), and defined retention and access policies. Getting this right protects you legally and builds trust with callers by showing that automation doesn’t mean cutting corners on privacy.

How can Buzzi.ai help redesign my current phone support voice bot to perform better?

We start with a focused review of your existing flows, transcripts, and metrics to pinpoint why customers are frustrated. Then we apply our four-layer framework to redesign openings, prompts, interaction mechanics, and escalation paths. If you’d like to explore this, our AI voice assistant development services include end-to-end design, integration, and ongoing optimization.