Choose a Real Estate AI Development Company Built for Local Markets

Partner with a real estate AI development company that builds locally grounded models on MLS data, so agents trust the valuations and act on the insights every day.

The glossy demo looked perfect—until it hit a real neighborhood.

A national valuation tool had just priced a fifth-floor walk-up the same way it priced a glassy luxury tower two blocks away. Same ZIP code, similar square footage, identical bedroom and bathroom count. The model barely blinked. Local agents, on the other hand, were furious—and the seller was confused.

This is what happens when real estate AI is built as if the whole country were a single homogeneous market. The pitch decks are full of sleek charts; the daily reality is a patchwork of micro-markets, quirky zoning, unreliable data, and unwritten local rules. That’s why so many national models quietly get sidelined by the very agents they were supposed to empower.

If you’re looking for a real estate AI development company, you’re not shopping for another generic proptech demo. You’re trying to build something your agents will actually trust: a tool that reflects the real streets, buildings, and deal dynamics in your markets. That means treating real estate AI development as a local-first problem, not just a bigger data problem.

In this article, we’ll unpack why national models so often miss the mark, what it really means to model markets locally, and how to choose a partner that won’t repeat the same mistakes. We’ll also show how Buzzi.ai works as a locally-aware, discovery-led proptech solutions partner for serious regional and national players.

Why One-Size-Fits-All Real Estate AI Fails Local Markets

National Datasets Hide Micro-Market Reality

Most automated valuation models started life on giant national datasets. That scale is impressive, but it has a hidden downside: it smooths out the very neighborhood-level differences that drive actual deals. If you average enough noise, everything starts to look the same.

Imagine two three-bedroom homes separated by a single street. On paper, they look identical: same square footage, same beds and baths, built in the same decade. But one sits inside a top-rated school district with lower crime and easy access to transit; the other falls into a weaker district, on a noisier street, with higher taxes. In many cities, that boundary can mean a 15–30% price difference.

National automated valuation models (AVMs) that don’t explicitly model this kind of micro-market segmentation end up chasing averages. That hurts AVM accuracy in specific micro-neighborhoods, which is exactly where agents and clients notice errors first. Over time, those misses compound into distorted neighborhood-level pricing and misleading signals about local housing market trends.

Generic Models Ignore Local Rules and Constraints

Real estate isn’t just about bricks and mortar; it’s also about rules. Zoning regulations, short-term rental limits, rent control, and property tax assessments vary not only by city, but sometimes by block. These factors materially change both fair value and investment risk.

Consider a city with strict rent control and aggressive tenant protections. A condo in one zone might face capped rent increases and long eviction timelines; a similar condo just outside the zone could be flexibly priced and repositioned. A naive real estate price prediction model that ignores those boundaries will systematically misvalue both units.

When a model misses obvious regulatory constraints, it’s not just a technical issue—it’s a reputational one. Agents are left explaining away clearly wrong outputs, and compliance teams worry about how those estimates are used in consumer-facing experiences. Generic models optimized on national metrics rarely capture the regional seasonality and regulatory nuance you actually operate in.

The Business Risk of "Good Enough" National Averages

On a slide, “95% accuracy nationwide” sounds great. On the ground, the 5% of estimates that miss can cluster in the very submarkets your business depends on. A few highly visible mistakes in flagship neighborhoods are enough to erode trust across an entire network of agents.

The hidden costs of relying on generic national averages include:

- Lost listings when sellers don’t trust your tool’s price.

- Reduced agent adoption when models feel disconnected from local reality.

- Brand damage if public AVM errors get surfaced in media or social channels.

- Compliance headaches when mismatched valuations touch regulated processes.

We’ve already seen this play out. High-profile criticism of national AVMs, including debates around Zillow’s Zestimate and the fallout from Zillow’s exit from home flipping, shows how public and painful accuracy issues can become when local markets move against a model’s assumptions [1]. For any brokerage or proptech founder, the question isn’t whether to use AI. It’s whether your chosen real estate AI development company understands why national real estate AI models fail in local markets, and whether it has a plan to avoid repeating those mistakes.

Competitive pressure is only increasing. Consulting firms like McKinsey are already documenting how analytics and AI are reshaping real estate investment and valuation at scale [2]. The winners will be the ones who blend that sophistication with truly local insight.

What Makes Real Estate Fundamentally Local in Data and Modeling

Local Supply, Demand, and Inventory Dynamics

Every market has its own rhythm. Days on market, inventory levels, and market absorption rate can look healthy in aggregate while masking fragile submarkets underneath. If your model assumes uniform dynamics within a metro, it will misjudge urgency, pricing power, and negotiation strategies.

Think of a fast-moving urban core where desirable condos receive multiple offers in days, versus a slow-moving exurban submarket where large lots sit for months. The same asking price strategy that works downtown may fail 20 miles away. Any local real estate AI development company worth its fees will explicitly model these differences and tie them into pricing, timing, and lead scoring models for agents and investors.
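To make “explicitly model these differences” concrete, here is a minimal Python sketch, assuming you have active-listing and trailing-month sales counts per submarket. The `Submarket` class, the submarket names and counts, and the 3- and 6-month cut-offs for months of inventory are illustrative assumptions (rules of thumb, not a standard), and absorption rate uses one common definition. It shows how a metro-wide roll-up can look balanced while its two halves sit in opposite market conditions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Submarket:
    name: str
    active_listings: int  # homes currently for sale
    monthly_sales: int    # closed sales over the trailing month


def absorption_rate(m: Submarket) -> float:
    """Share of current inventory that sold in the last month (one common definition)."""
    return m.monthly_sales / m.active_listings if m.active_listings else 0.0


def months_of_inventory(m: Submarket) -> float:
    """Months needed to clear current inventory at the current sales pace."""
    return m.active_listings / m.monthly_sales if m.monthly_sales else float("inf")


def market_label(m: Submarket, tight: float = 3.0, loose: float = 6.0) -> str:
    # Rule-of-thumb thresholds (assumptions); calibrate them with local agents.
    moi = months_of_inventory(m)
    if moi < tight:
        return "seller's"
    if moi > loose:
        return "buyer's"
    return "balanced"


def roll_up(name: str, parts: List[Submarket]) -> Submarket:
    """Aggregate submarkets the way a metro-level dashboard would."""
    return Submarket(
        name,
        sum(p.active_listings for p in parts),
        sum(p.monthly_sales for p in parts),
    )


# Hypothetical counts for two submarkets of one metro.
core = Submarket("urban core condos", active_listings=40, monthly_sales=32)
exurb = Submarket("exurban large lots", active_listings=120, monthly_sales=15)
metro = roll_up("metro aggregate", [core, exurb])

for m in (core, exurb, metro):
    print(f"{m.name}: absorption {absorption_rate(m):.0%}, "
          f"{months_of_inventory(m):.1f} months of inventory, {market_label(m)}")
```

With these numbers the urban core reads as a seller’s market and the exurbs as a buyer’s market, while the metro aggregate (about 3.4 months of inventory) reads as balanced. Guidance built only on the aggregate would mislead agents in both submarkets.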

Overlooking this is how you end up with AI guidance that tells agents to “hold firm” on price in a soft submarket, or to discount aggressively in an already hot pocket of demand. Those are the moments when trust evaporates.

Micro-Neighborhood Features That Move Prices

Beyond classic attributes like square footage or bedroom count, modern pricing is driven by micro-environmental features. Walkability score, transit access, school district data, noise levels, and proximity to amenities can all swing neighborhood-level pricing meaningfully. The catch: these fields rarely show up cleanly in national datasets.

Local-first modeling uses geospatial analytics to engineer these signals. Instead of a raw address, your feature set might include “distance to nearest metro station,” “within top-decile school catchment,” or “street-level traffic noise proxy.” Combined with modern comparable sales analysis, this unlocks more accurate and more explainable valuations.
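Here is a minimal sketch of two such signals in plain Python, assuming you already have geocoded coordinates for each home, a list of transit stations, and a school catchment boundary as a polygon. The coordinates are made up and the function names are hypothetical. The ray-casting test treats latitude and longitude as planar, which is a reasonable approximation only for small areas; a production pipeline would more likely rely on a GIS library or a spatial database.

```python
import math
from typing import Dict, List, Tuple

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

LatLon = Tuple[float, float]


def haversine_m(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def in_polygon(point: LatLon, polygon: List[LatLon]) -> bool:
    """Ray-casting point-in-polygon test, treating lat/lon as planar (small areas only)."""
    lat, lon = point
    inside = False
    for i in range(len(polygon)):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % len(polygon)]
        if (lat1 > lat) != (lat2 > lat):  # edge straddles the point's latitude
            lon_cross = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < lon_cross:
                inside = not inside
    return inside


def location_features(home: LatLon, stations: List[LatLon],
                      catchment: List[LatLon]) -> Dict[str, object]:
    """Hypothetical feature names matching the examples in the text."""
    return {
        "dist_to_metro_m": min(haversine_m(home, s) for s in stations),
        "in_top_school_catchment": in_polygon(home, catchment),
    }


# Hypothetical inputs: two stations and a rectangular top-decile school catchment.
STATIONS = [(40.7200, -73.9900), (40.7500, -73.9800)]
CATCHMENT = [(40.70, -74.02), (40.70, -73.98), (40.74, -73.98), (40.74, -74.02)]

print(location_features((40.72, -74.00), STATIONS, CATCHMENT))
```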

If a vendor claims deep local expertise but can’t explain how they engineer and maintain this kind of feature set, they’re not the local real estate AI development company you’re looking for.

Regulations, Seasonality, and Culture by Region

Markets also differ in time, not just space. Regional seasonality shapes listing cycles and pricing strategies: snow markets may hibernate in winter and surge in spring, while sunbelt markets stay active year-round. A single nationwide seasonality factor can’t capture those patterns.
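A minimal way to let seasonality vary by region is to compute a separate monthly index for each market instead of one national factor. The sketch below assumes you have new-listing counts by region and month; the two regions and their counts are invented for illustration. Each index is the month’s average divided by that region’s average across all months, so 1.0 means a typical month.

```python
from collections import defaultdict


def seasonal_indices(rows):
    """rows: iterable of (region, month, new_listings).

    Returns {region: {month: index}}, where index is the month's average count
    divided by the region's average over all observed months.
    """
    by_region = defaultdict(lambda: defaultdict(list))
    for region, month, count in rows:
        by_region[region][month].append(count)

    indices = {}
    for region, months in by_region.items():
        month_avg = {m: sum(v) / len(v) for m, v in months.items()}
        overall = sum(month_avg.values()) / len(month_avg)
        indices[region] = {m: avg / overall for m, avg in month_avg.items()}
    return indices


# Hypothetical new-listing counts for January..December in two regions.
snow_belt = [40, 45, 90, 150, 170, 160, 140, 120, 100, 80, 50, 35]
sun_belt = [95, 100, 110, 115, 110, 105, 100, 100, 95, 95, 90, 85]
rows = [("snow_belt", m + 1, c) for m, c in enumerate(snow_belt)]
rows += [("sun_belt", m + 1, c) for m, c in enumerate(sun_belt)]

idx = seasonal_indices(rows)
print(f"January index: snow belt {idx['snow_belt'][1]:.2f}, sun belt {idx['sun_belt'][1]:.2f}")
```

With these numbers January runs at well under half of a typical month in the snow-belt market but close to normal in the sun-belt market, a difference a single nationwide factor would average away.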

On top of that come variations in zoning regulations, building codes, and local cultural preferences. A layout that’s prized in one city might be a non-starter in another. Effective local market modeling requires explicit regional parameters and segmentation: clear boundaries, not just a global fit.

Academic work on real estate price prediction and AVMs has consistently shown that incorporating local features and spatial dependence improves accuracy [3]. The research is clear: if your models ignore local context, you’re choosing lower performance.

Inside a Local-First Real Estate AI Development Company

Integrating MLS, Public Records, and Proprietary Local Data

The first test of a real estate AI partner is how seriously they treat data integration. Local-first platforms start with robust MLS data integration, but they don’t stop there. They bring together tax rolls, permitting data, zoning layers, and brokerage systems into a unified, continuously updated dataset.

A typical pipeline for MLS-centered custom real estate AI software looks like this: ingest MLS feeds and public records, normalize schemas, resolve entity conflicts (for example, the same parcel appearing in several sources), and load everything into a feature store. On top of that, you layer brokerage CRM data, off-market leads, and internal deal notes through solid real estate CRM integration. Data quality checks and SLAs keep feeds fresh in near real time.
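The normalize-and-resolve steps can be sketched in a few lines of Python. Everything here is an assumption for illustration: the source names, the raw field names in `FIELD_MAPS` (the MLS ones are loosely modeled on RESO Data Dictionary names), the parcel-ID cleanup rule, and the conflict policy, in which the most recently updated record wins field by field. Real feeds will have their own schemas, and many teams prefer per-field source priorities over a single recency rule.

```python
from datetime import date

# Hypothetical mappings from each source's raw field names to one canonical schema.
FIELD_MAPS = {
    "mls": {
        "ListingId": "listing_id",
        "ParcelNumber": "parcel_id",
        "LivingArea": "sqft",
        "ListPrice": "list_price",
        "ModificationTimestamp": "updated",
    },
    "tax_roll": {
        "apn": "parcel_id",
        "bldg_sqft": "sqft",
        "assessed_value": "assessed_value",
        "as_of": "updated",
    },
}


def normalize(source, raw):
    """Rename fields to the canonical schema and clean the parcel ID used as the join key."""
    mapping = FIELD_MAPS[source]
    rec = {canon: raw[field] for field, canon in mapping.items() if field in raw}
    rec["parcel_id"] = "".join(ch for ch in str(rec["parcel_id"]) if ch.isalnum()).upper()
    return rec


def resolve(records):
    """Merge records per parcel; when fields conflict, the most recently updated record wins."""
    merged = {}
    for rec in sorted(records, key=lambda r: r["updated"]):
        merged.setdefault(rec["parcel_id"], {}).update(rec)
    return merged


mls_row = {"ListingId": "L-881", "ParcelNumber": "012-345-67", "LivingArea": 1850,
           "ListPrice": 725000, "ModificationTimestamp": date(2024, 5, 1)}
tax_row = {"apn": "012 345 67", "bldg_sqft": 1800, "assessed_value": 410000,
           "as_of": date(2024, 1, 15)}

parcels = resolve([normalize("mls", mls_row), normalize("tax_roll", tax_row)])
print(parcels["01234567"])
```

Here the MLS record is newer, so its 1,850 sq ft wins over the tax roll’s 1,800, while the assessed value, which only the tax roll carries, is kept in the merged record.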

A truly local-first real estate AI development company treats this engineering as a first-class problem, not something tacked on at the end of a modeling exercise.

Feature Engineering and Geospatial Modeling for Local Nuance

Once the data is unified, the real work begins: turning raw records into signals agents actually care about. That’s where geospatial analytics and micro market segmentation come in. Instead of thinking in terms of ZIP codes, a local-first team discovers and models the micro-neighborhoods agents already talk about.

Engineers might compute distances to amenities, commute times, and school boundaries; they might use clustering to identify zones where price dynamics behave similarly. These features can meaningfully improve AVM accuracy, and they’re easier to explain. Agents can see, for example, why being 200 meters closer to a metro station or inside a specific school catchment adds value.
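To illustrate the clustering step, here is a small, dependency-free k-means sketch in Python that groups homes by location and price per square foot. The six homes are invented, features are z-scored so that degrees and dollars are on comparable scales, and the deterministic farthest-point initialization is a simplification of k-means++. In practice you would likely use a library implementation and richer inputs such as price trends over time.

```python
import math


def standardize(rows):
    """Z-score each column so latitude, longitude, and $/sqft are on comparable scales."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def dist2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iters=50):
    # Deterministic farthest-point initialization (a simplified stand-in for k-means++).
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist2(p, c) for c in centroids)))

    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each home joins its nearest centroid.
        labels = [min(range(k), key=lambda j: dist2(p, centroids[j])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            new_centroids.append([sum(col) / len(members) for col in zip(*members)]
                                 if members else centroids[j])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical homes: (latitude, longitude, price per sq ft).
homes = [
    (40.710, -74.010, 1200), (40.711, -74.011, 1250), (40.712, -74.009, 1180),
    (40.730, -73.990, 640), (40.731, -73.991, 660), (40.729, -73.989, 700),
]
labels, _ = kmeans(standardize(homes), k=2)
print(labels)
```

On this toy data the three high-priced homes form one cluster and the three lower-priced homes a few blocks away form the other; the cluster numbers themselves are arbitrary.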

Technical blogs from GIS leaders like Esri have documented how spatial clustering and location intelligence transform real estate decisions [4]. This isn’t theory anymore; it’s table stakes.

Handling Sparse or Unique Local Markets

Not all markets are data-rich. Small towns, luxury pockets, or unusual property types can have sparse transaction histories. A naive model will either overfit on a handful of comps or revert to generic citywide averages that ignore local nuance.

A mature real estate AI development company designs for this from day one. Techniques like hierarchical models, transfer learning, and Bayesian approaches let you “borrow strength” from nearby markets while preserving local idiosyncrasies. For example, a rural town’s model might share some parameters with a nearby regional hub but keep its own coefficients where local behavior diverges.
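The simplest form of “borrowing strength” is partial pooling: shrink a thin market’s local estimate toward a regional one, trusting local data more as sales accumulate. The sketch below assumes a normal-normal hierarchical model with a known variance ratio, summarized in one hypothetical `prior_strength` parameter (roughly, the number of local sales at which local and regional evidence carry equal weight). A full hierarchical or Bayesian model would estimate that ratio from the data instead of fixing it.

```python
from typing import Sequence


def pooled_estimate(local_values: Sequence[float], regional_mean: float,
                    prior_strength: float = 10.0) -> float:
    """Partial-pooling estimate of a local mean (e.g. price per sq ft).

    weight = n / (n + prior_strength): with few local sales the result stays close
    to the regional mean; with many, it approaches the local average.
    """
    n = len(local_values)
    if n == 0:
        return regional_mean  # no local evidence: fall back to the region
    local_mean = sum(local_values) / n
    weight = n / (n + prior_strength)
    return weight * local_mean + (1 - weight) * regional_mean


# Hypothetical $/sq ft figures: a rural town next to a regional hub averaging 260.
hub_mean = 260.0
thin_town = [180.0, 200.0, 220.0]  # three recent sales, local mean 200
deep_town = [200.0] * 60           # sixty sales, same local mean

print(round(pooled_estimate(thin_town, hub_mean), 1))
print(round(pooled_estimate(deep_town, hub_mean), 1))
```

With three sales the estimate stays near the hub (about 246); with sixty sales at the same local average it moves close to the town’s own figure (about 209).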

This is especially critical for multi-market deployment where your platform must serve both dense urban cores and thin rural markets without breaking either.

Designing for Explainability Agents Can Use

Even the best model fails if agents don’t trust it. That’s why explainability isn’t a nice-to-have; it’s core product design. A local-first AI valuation platform for local agents should show not just “the number,” but the local story behind it.

In practice, that looks like simple, agent-facing explanations: top factors driving a price, relevant comparable sales analysis within a small radius, and highlighted local drivers such as school zone, transit access, or recent renovations. This level of transparency reduces friction in pricing conversations and supports broader broker workflow automation—because agents can see when to trust the model and when to override it.
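For an additive pricing model, the “top factors” view can be computed directly: each feature’s contribution is its coefficient times how far the home sits from a reference home. The coefficients, reference values, and baseline price below are hypothetical and only illustrate the mechanics. For non-linear models such as gradient-boosted trees, attribution methods like SHAP are a common way to produce a comparable breakdown.

```python
# Hypothetical additive model: price = BASELINE + sum(coef * (value - reference)).
COEFS = {
    "sqft": 450.0,                       # $ per extra sq ft
    "in_top_school_catchment": 60_000.0,
    "dist_to_metro_km": -25_000.0,       # $ per extra km from the nearest station
    "renovated_last_5y": 35_000.0,
}
REFERENCE = {"sqft": 1500, "in_top_school_catchment": 0,
             "dist_to_metro_km": 1.0, "renovated_last_5y": 0}
BASELINE_PRICE = 600_000.0  # predicted price of the reference home

LABELS = {
    "sqft": "living area",
    "in_top_school_catchment": "top-rated school catchment",
    "dist_to_metro_km": "distance to metro",
    "renovated_last_5y": "recent renovation",
}


def explain(home, top_n=3):
    """Return the predicted price and the top_n features by absolute dollar impact."""
    contributions = {f: COEFS[f] * (home[f] - REFERENCE[f]) for f in COEFS}
    price = BASELINE_PRICE + sum(contributions.values())
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    return price, top


home = {"sqft": 1600, "in_top_school_catchment": 1,
        "dist_to_metro_km": 0.5, "renovated_last_5y": 0}
price, top = explain(home)
print(f"Estimated price: ${price:,.0f}")
for feature, dollars in top:
    print(f"  {LABELS[feature]}: {dollars:+,.0f}")
```

For this home the school catchment (+$60,000) leads, followed by the extra living area and the shorter walk to the metro, which is the kind of local story an agent can repeat to a seller.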

Explainable AI here isn’t an abstract principle; it’s how you turn math into sellable narratives.

Collaborating with Local Brokers and Agents Throughout the AI Lifecycle

Co-Design Workshops and Local Market Mapping

Local-first AI starts with local voices. The best real estate AI company for brokers and agents doesn’t disappear into a lab and come back with a finished product; it runs discovery sessions with top-producing agents, local managers, and analysts.

In these workshops—often framed as an AI discovery process—you map how the market actually works: submarkets, property archetypes, rules of thumb, and edge cases. You co-create a shared language for micro-neighborhoods and pricing tiers. That’s how a real estate AI development company with local market expertise encodes tacit knowledge before touching a line of code.

A sample half-day agenda might include whiteboarding neighborhood boundaries, reviewing recent tricky deals, identifying data gaps, and defining what “good” predictions should look like in different segments.

Human-in-the-Loop Labeling and Validation

After co-design comes co-labeling. Local agents are uniquely qualified to flag tricky comps, annotate exceptions, and correct valuations where the model doesn’t yet understand context. A robust AI valuation platform for local agents bakes this feedback loop into the product itself.

Imagine a valuation screen where an agent can flag, “Upcoming zoning change: density will double here in 18 months,” or “This property backs onto a noisy rail line—discount is justified.” Those inputs can be used in human-in-the-loop workflows to update training data and improve locally-appropriate AI performance over time.
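One way to make those flags usable downstream is to capture them as structured records, not just free text. The sketch below is a hypothetical schema, not any particular product’s API: a reason code for filtering, the agent’s note, an optional suggested price, and an approval flag set by whoever reviews feedback. Only approved flags that carry a price become candidate training labels; the rest remain annotations that can still inform features or review queues.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Dict, List, Optional


class Reason(Enum):
    ZONING_CHANGE = "zoning_change"
    NOISE_OR_NUISANCE = "noise_or_nuisance"
    BAD_COMP = "bad_comp"
    OTHER = "other"


@dataclass
class AgentFlag:
    property_id: str
    agent_id: str
    reason: Reason
    note: str
    suggested_price: Optional[float] = None
    approved: bool = False  # set by a reviewer, never by the flagging agent
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def candidate_labels(flags: List[AgentFlag]) -> Dict[str, float]:
    """Approved flags that carry a price become candidate labels for the next retrain."""
    return {f.property_id: f.suggested_price
            for f in flags if f.approved and f.suggested_price is not None}


flags = [
    AgentFlag("P-123", "agent-7", Reason.NOISE_OR_NUISANCE,
              "Backs onto a noisy rail line; discount is justified.",
              suggested_price=540_000.0, approved=True),
    AgentFlag("P-456", "agent-2", Reason.ZONING_CHANGE,
              "Upcoming zoning change: density will double here in 18 months."),
]
print(candidate_labels(flags))
```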

The payoff is measurable: better AVM accuracy where it matters most, and faster agent adoption because people see their expertise reflected in the system.

Measuring "Local Fit" Beyond Generic Accuracy

Generic metrics like overall RMSE are necessary, but they’re not sufficient. A truly local real estate AI development company will measure “local fit” explicitly. That means looking at error distributions by neighborhood, price band, and property type—not just averages.
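Here is a minimal sketch of that breakdown, assuming you have predicted and actual sale prices tagged with a neighborhood. It reports the median absolute percentage error per segment next to the overall figure. The neighborhoods and prices are invented, and median APE is just one reasonable metric to track alongside RMSE or error percentiles.

```python
from collections import defaultdict
from statistics import median


def abs_pct_error(predicted, actual):
    return abs(predicted - actual) / actual


def median_ape_by_segment(rows, overall_key="(all)"):
    """rows: iterable of (segment, predicted, actual).

    Returns {segment: median absolute percentage error}, plus an overall entry.
    """
    errors = defaultdict(list)
    for segment, predicted, actual in rows:
        e = abs_pct_error(predicted, actual)
        errors[segment].append(e)
        errors[overall_key].append(e)
    return {segment: median(errs) for segment, errs in errors.items()}


# Hypothetical closed sales: (neighborhood, model estimate, sale price).
sales = [
    ("Riverside", 505_000, 500_000),
    ("Riverside", 396_000, 400_000),
    ("Riverside", 612_000, 600_000),
    ("Hillcrest", 900_000, 750_000),
    ("Hillcrest", 880_000, 800_000),
]
for segment, err in median_ape_by_segment(sales).items():
    print(f"{segment}: median APE {err:.1%}")
```

On this toy data the overall median error is 2%, which looks excellent, while Hillcrest sits at 15%: the kind of cluster a single headline number hides.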

You can also track qualitative KPIs: What percentage of agents say they would quote the model’s price to a client? How much time is saved on pricing discussions? How often do overrides cluster in the same submarkets?

Executives care about outcomes. Tying these metrics to listing win rate, days on market, time saved through broker workflow automation, and margin impact creates a clear business case for continued investment in local-first modeling.

Governance and Escalation for Edge Cases

No model gets every case right—and that’s okay, as long as you have governance. Local offices need clear processes to handle edge cases and override AI outputs when needed. That includes who reviews disputes, how overrides are logged, and how those decisions feed back into training data.
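A minimal Python sketch of the logging and escalation piece follows. The `Override` record, the 5% gap that counts as a material override, and the 15% override rate that triggers review are hypothetical thresholds a local committee would set for itself. The idea is that override patterns per submarket become a routine input to governance rather than anecdotes.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Override:
    property_id: str
    submarket: str
    model_price: float
    final_price: float
    agent_id: str
    reason: str  # free-text justification kept for audit and retraining


def submarkets_for_review(overrides: Iterable[Override],
                          valuations: Dict[str, int],
                          material_gap: float = 0.05,
                          max_override_rate: float = 0.15) -> List[str]:
    """Submarkets where material overrides exceed max_override_rate of all valuations."""
    material = Counter(
        o.submarket for o in overrides
        if abs(o.final_price - o.model_price) / o.model_price > material_gap
    )
    return sorted(s for s, n in material.items() if n / valuations[s] > max_override_rate)


# Hypothetical override log for two submarkets.
log = [Override(f"H-{i}", "Harbor", 500_000, 560_000, "agent-3", "waterfront premium")
       for i in range(4)]
log += [Override(f"O-{i}", "Old Town", 400_000, 440_000, "agent-9", "historic district")
        for i in range(3)]
log.append(Override("O-9", "Old Town", 400_000, 404_000, "agent-9", "minor condition"))

print(submarkets_for_review(log, valuations={"Harbor": 20, "Old Town": 50}))
```

Harbor is escalated (4 material overrides out of 20 valuations), while Old Town stays below the bar (3 out of 50, plus one small adjustment that does not count as material).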

Good governance frameworks echo emerging standards like the NIST AI Risk Management Framework, which emphasizes human oversight and clear documentation of AI-assisted decisions [5]. A strong AI governance approach ensures your local committees or lead analysts can review flagged valuations weekly and update rules without disrupting operations.

This is what responsible AI looks like in practice: transparent feedback loops, local office governance, and clear boundaries for when humans—not models—have the final say.

Scaling Local-First Real Estate AI Across Multiple Regions

Core Platform, Local Configuration

At scale, you can’t maintain a completely bespoke stack for every region. The hallmark of top real estate AI development companies for regional markets is a smart balance between shared infrastructure and local flexibility.

In practice, that means a core platform with shared services, data pipelines, and baseline models—plus per-region configuration layers and data stores. Agents in different metros experience a consistent UI and workflow, while local rules, features, and data sources vary underneath. This is how sophisticated enterprise AI solutions handle multi-market deployment without fragmenting into chaos.
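The “shared core, local configuration” pattern often comes down to layering a per-region override on top of a base configuration. The sketch below assumes configurations are plain nested dictionaries; the keys, region names, and thresholds are hypothetical. Nested dictionaries merge recursively, while lists and scalar values in the regional layer replace the base value outright, so a region that adds a feature restates the full feature list.

```python
import copy
from typing import Any, Dict

BASE_CONFIG: Dict[str, Any] = {
    "model": {"family": "gradient_boosting", "error_alert_threshold": 0.10},
    "features": ["sqft", "beds", "baths", "dist_to_metro_m"],
    "ui": {"top_factors_shown": 3},
}

# Hypothetical regional layers: only what differs from the core is stated.
REGION_OVERRIDES: Dict[str, Dict[str, Any]] = {
    "coastal_metro": {
        "model": {"error_alert_threshold": 0.08},
        "features": ["sqft", "beds", "baths", "dist_to_metro_m", "flood_zone"],
    },
    "rural_north": {"model": {"error_alert_threshold": 0.15}},
}


def deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict: nested dicts merge recursively, other values replace the base."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def region_config(region: str) -> Dict[str, Any]:
    """Unknown regions simply get the core configuration."""
    return deep_merge(BASE_CONFIG, REGION_OVERRIDES.get(region, {}))


print(region_config("coastal_metro")["model"])
```

Because `deep_merge` copies rather than mutates, the shared core stays untouched no matter how many regions are layered on top of it.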

Picture a brokerage operating in three regions: each has different model thresholds and local features, but everyone logs into one platform. That’s the goal.

Regional Model Variants and Testing

As data volume allows, you can go further and maintain separate models or fine-tuned variants per metro, state, or country. This supports meaningful regional variation while still leveraging a shared codebase. Robust MLOps services are critical here to manage versioning, deployment, and rollback safely.

You might, for example, launch a new local model in one city and compare its error metrics and agent adoption against the previous generic model over 60 days. If locally-appropriate AI performance improves, you scale it; if not, you iterate. Continuous A/B testing becomes part of your operating cadence, not a one-off experiment.
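The comparison itself can start simple. This sketch assumes both models priced the same set of closed sales during the pilot window; it summarizes the median absolute percentage error for each and how often the local model was closer. The one-point improvement bar and the majority win-rate rule are hypothetical promotion criteria. With real pilot data you would also want a significance check, such as a bootstrap over sales, before scaling.

```python
from statistics import median
from typing import Dict, List, Tuple


def compare_models(sales: List[Tuple[float, float, float]],
                   min_improvement: float = 0.01) -> Dict[str, object]:
    """sales: (actual, generic_estimate, local_estimate) for the same closed sales."""
    generic_err = [abs(g - a) / a for a, g, _ in sales]
    local_err = [abs(loc - a) / a for a, _, loc in sales]
    win_rate = sum(le < ge for ge, le in zip(generic_err, local_err)) / len(sales)
    generic_mdape = median(generic_err)
    local_mdape = median(local_err)
    return {
        "generic_median_ape": generic_mdape,
        "local_median_ape": local_mdape,
        "local_win_rate": win_rate,
        # Hypothetical promotion rule: clear improvement AND closer on most homes.
        "promote": (generic_mdape - local_mdape >= min_improvement) and win_rate > 0.5,
    }


# Hypothetical pilot results: (sale price, generic estimate, local estimate).
pilot = [
    (500_000, 550_000, 510_000),
    (400_000, 380_000, 404_000),
    (600_000, 630_000, 588_000),
    (300_000, 303_000, 309_000),
]
print(compare_models(pilot))
```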

Monitoring Drift and Local Market Shifts

Real estate markets are not stationary. Sudden economic changes, new developments, or regulatory shifts can break previously accurate models. Effective platforms monitor data drift and performance drift by region and even by neighborhood.

Consider a new transit line opening or a major employer leaving a region. Within months, housing market trends and local price dynamics can shift dramatically. If you’re not monitoring drift at a local level, you’ll discover the problem when agents complain, not when dashboards light up.
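One common way to watch for this is the Population Stability Index (PSI), computed per region or neighborhood, which compares a recent window of an input or prediction (here, price per square foot) with a baseline window. The bin edges and samples below are hypothetical, and the 0.1 and 0.25 cut-offs are widely used rules of thumb, not a standard. The small floor on empty bins keeps the log term finite.

```python
import bisect
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], recent: Sequence[float], edges: List[float]) -> float:
    """Population Stability Index between two samples, binned on sorted edges.

    Values below the first edge or above the last fall into the outer bins.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor empty bins at a tiny share so the log term stays finite.
        return [max(c / len(values), 1e-4) for c in counts]

    expected, actual = shares(baseline), shares(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_status(score: float) -> str:
    # Common rule-of-thumb cut-offs; tune them per market.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "watch"
    return "investigate"


# Hypothetical $/sq ft samples for one neighborhood near a newly opened transit line.
EDGES = [325, 350, 375, 400]
last_year = [310, 320, 330, 340, 350, 360, 370, 380]
last_quarter = [380, 390, 400, 410, 420, 360, 395, 405]

score = psi(last_year, last_quarter, EDGES)
print(f"PSI {score:.2f}: {drift_status(score)}")
```

Running the same check for every region and neighborhood on a schedule is what lets a dashboard light up before agents start complaining.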

Again, this is where a mature real estate AI development partner shines: continuous improvement baked into the contract, not a one-and-done delivery.

Change Management with Local Offices

Technology is only half the battle; the other half is culture. Successful rollouts follow clear playbooks: pilot in a few offices, identify champions among agents, train managers, and set up feedback channels. This is classic AI transformation work, tuned for broker networks.

A typical rollout might start with one pilot office using the platform for internal pricing only. Once trust is built and workflows stabilize, you expand to a cluster of offices, then to full-network multi-market deployment. Along the way, measurable gains in broker workflow metrics such as time-to-list and conversion rates reinforce buy-in.

The key is incrementalism: introduce local-first AI in ways that help agents win more business without disrupting their core routines.

How to Choose a Real Estate AI Development Company with Local Expertise

Questions to Test for Genuine Local Market Understanding

When you evaluate vendors, your goal is to distinguish true local-first experts from generic providers. The fastest way is to ask pointed questions. For example:

- “Which local data sources will you integrate beyond MLS—zoning, tax, permit, or school district data?”

- “How do you handle micro-neighborhoods that don’t align with ZIP codes?”

- “Show us an example of local market data integration work you’ve done before.”

A weak vendor will respond with vague statements about “big data” and “machine learning models that generalize well.” A strong local real estate AI development company will walk you through specific pipelines, feature engineering examples, and collaboration patterns with local agents.

Red Flags for Generic, National-Only Vendors

There are also clear warning signs. Be wary if a vendor:

- Insists on one global model for all markets with no regional variants.

- Relies only on national datasets with no local enrichment.

- Never mentions working directly with agents or local managers.

- Can’t explain why national real estate AI models fail in local markets.

These generic real estate AI solutions may look cheaper initially, but the hidden costs—mispricing, agent rejection, compliance issues—tend to surface quickly. You want a real estate AI development company with local market expertise, not just a team that can build a model in a notebook.

Designing a Pilot That Proves Local Fit

Before you bet the farm, design a pilot. Focus on one or two key markets with strong local agent involvement and clear success metrics. The pilot’s goal is to prove that your partner can deliver accurate property valuations in your markets, not just on benchmarks.

A 90-day plan might look like this: weeks 1–3 for discovery and data access, weeks 4–6 for integration and modeling, weeks 7–9 for agent validation and feedback, and weeks 10–12 for refining and presenting results. Success metrics could include lower pricing errors, fewer days on market, higher listing win rates, and agent trust scores for the model’s local performance.

Structure contracts so that both sides are aligned on learning, not just delivery.

Budgeting and ROI for Local-First Real Estate AI

Local-first work does add cost versus a generic model—but it also adds leverage. Your budget will typically include data engineering, model development, integration, and change management. Think of these as components of a broader real estate AI development investment, not isolated line items.

On the return side, the gains compound: more accurate pricing, faster transactions, and higher agent adoption. Over a 2–3 year horizon and across multiple markets, improvements in margin and capital efficiency can dwarf initial expenses. In many cases, you’re reallocating spend from underperforming tools to targeted, high-ROI proptech solutions.

If you want a structured way to quantify that upside, look for partners who can connect valuation improvements to deal outcomes—similar to how Buzzi.ai approaches its predictive analytics and forecasting services.

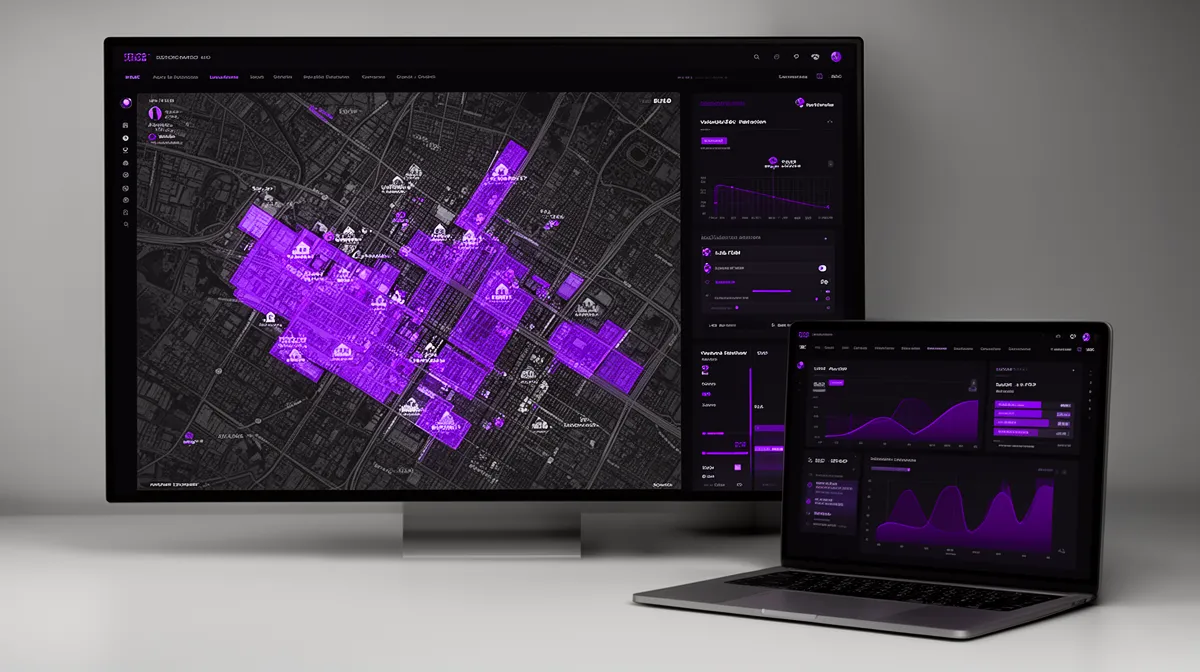

How Buzzi.ai Builds Locally-Aware Real Estate AI Platforms

Discovery and Local Market Mapping with Your Teams

At Buzzi.ai, we start every engagement with structured discovery, not code. Our AI discovery sessions bring together local brokers, agents, and analysts to map submarkets, decision patterns, and data realities. We don’t assume we know your city better than your top producers do.

In practice, this looks like an AI discovery and market-mapping workshop where we define what “good” locally-appropriate AI performance means for your brand. We document how agents actually price, which factors they trust, and where current tools fall short. That understanding becomes the blueprint for a local-first architecture.

This is also where we identify early candidate markets and use cases—so we can design a first project that is ambitious enough to matter, but constrained enough to succeed.

Technical Approach: Local-First Architecture and Tooling

Technically, we build on modular architectures that separate shared services from local configuration. For each region, we implement tailored MLS data integration pipelines and local enrichment layers. On top of that, we deploy automated valuation models, lead scoring models, and broker workflow automation tools that read from the same local feature store.

Every AI valuation platform we build for local agents includes explainability and human-in-the-loop feedback by default. That’s how we turn models into daily companions for agents instead of black-box calculators that live in a corner of the intranet.

Because we also focus on multi-market deployment, we design for portability from day one: what we learn in one metro can inform another, without forcing them into the same mold.

Engagement Models and First Projects to Start With

We’ve found that the best way to start is with focused, high-impact projects. Common first steps include locally-tuned pricing assistants for listing agents, listing optimization tools that surface the right talking points, or regional investment scouting dashboards for capital deployment. Each is a contained but powerful use of AI for real estate.

A typical roadmap might start with upgrading one metro’s AVM, then layering on recommendation tools or investor dashboards as data and trust grow. Over time, we extend the platform to new regions, always keeping a local lens. By the time your network calls us their real estate AI development company, they already think of the tools as part of how they work—not as an experiment.

Why Buzzi.ai Over Generic Real Estate AI Vendors

What differentiates Buzzi.ai is simple: we’re obsessed with local reality. We combine local-first methodology, deep collaboration with agents, and technical excellence in geospatial modeling and MLS integrations. We measure success not just in RMSE, but in agent trust and business outcomes.

In one anonymized engagement, a regional brokerage came to us after a generic vendor’s valuation tool had been quietly abandoned by agents. Within six months of a locally-tuned rebuild, listing agents were using the new platform in over 80% of pricing conversations, and median pricing error in their flagship neighborhoods dropped by double digits. That’s what working with the best real estate AI company for brokers and agents is supposed to feel like.

If you’re ready to move beyond generic models and work with a real estate AI development company with local market expertise, the next step isn’t another demo—it’s a conversation. Let’s map your priority markets and design a local-first roadmap that fits your business.

Conclusion: Make Local-First Your Default

Real estate is, and always will be, fundamentally local. Any AI that ignores micro-markets, regulations, and culture is a liability, not an asset. The companies that win will be the ones that make local-first modeling a default assumption, not an exotic feature.

We’ve seen that national one-size-fits-all valuation models create real business risk when agents and clients see obvious mistakes. The antidote is a real estate AI development company that integrates rich local data, collaborates with your agents throughout the lifecycle, and measures local fit alongside generic accuracy. That’s how you turn AI from a slide in a strategy deck into a daily tool your network actually uses.

If you’re considering your next move, the answer isn’t to buy another generic national model. It’s to design a local-first AI roadmap, starting with the markets that matter most to you. To explore what that could look like, we invite you to schedule a discovery conversation with Buzzi.ai and chart a pragmatic first project together.

FAQ

Why do national real estate AI models often fail when applied to specific local markets?

National models are usually trained on large, aggregated datasets that smooth over neighborhood-level differences. They underweight factors like school district boundaries, zoning quirks, and hyperlocal inventory dynamics. As a result, error tends to cluster in precisely the micro-markets where agents and clients care most, eroding trust and making the tools hard to adopt.

What makes real estate fundamentally local from a data and modeling perspective?

Real estate values are shaped by a dense web of local drivers: micro-neighborhood amenities, regulations, seasonality, and culture. Two properties with similar physical specs can diverge sharply in value based on walkability, transit, school zones, or tax regimes. Modeling that complexity requires local data sources, geospatial analytics, and region-specific parameters rather than a single global fit.

Which local factors—like zoning, school districts, or micro-neighborhoods—most affect AI valuation accuracy?

The heaviest hitters are often school district quality, proximity to transit and amenities, and zoning or usage constraints. Noise levels, views, and block-level safety can also meaningfully move prices within the same broader area. Good AVMs explicitly encode these through features like distance-to-metro, school catchment flags, micro-neighborhood clusters, and regulation-aware adjustments.

How should a real estate AI development company integrate MLS, public records, and proprietary local data?

Integration starts with robust MLS data ingestion and schema normalization, then enrichment from tax rolls, permits, zoning layers, and school district data. On top of that, you connect brokerage CRM, off-market leads, and deal notes to capture local context. A strong partner will build a unified feature store with quality checks and near real-time updates, so models and agent-facing tools always reflect current conditions.

What metrics beyond generic accuracy should we use to judge whether AI valuations are locally appropriate?

Look at error distributions by neighborhood, price band, and property type, not just overall RMSE or MAE. Track adoption metrics such as how often agents rely on or override the model, and whether they would confidently quote its prices to clients. Finally, connect those signals to business KPIs like listing win rate, days on market, and margin impact within each micro-market.

How can our brokers and agents participate in designing and validating real estate AI tools?

Involve them early through co-design workshops that map submarkets, pricing rules, and edge cases. Then embed feedback tools directly into your valuation platform, so agents can flag mispriced properties, annotate local nuances, and label tricky comps. This human-in-the-loop approach both improves model performance where it matters and builds trust by showing that local expertise is central, not peripheral.

What are the risks and hidden costs of deploying a generic, national-scale real estate AI solution?

The biggest risk is loss of trust: once agents see consistent local errors, they stop using the tool, turning your investment into sunk cost. There are also reputational and compliance risks if bad valuations surface in consumer contexts or regulated workflows. Hidden costs accumulate in the form of workarounds, manual overrides, and future replatforming to a more local-first approach.

How can we evaluate whether a real estate AI development company truly understands our local markets?

Ask for concrete examples: which local data sources they’ll use, how they handle micro-neighborhoods, and how they’ve worked with agents in similar markets. Probe their understanding of your region’s regulations, seasonality, and typical deal dynamics. A strong partner will discuss specific integration plans and discovery processes, not just generic model performance claims.

How can we roll out locally-grounded AI across multiple regions without losing local nuance?

The key is a core platform with shared services and standards, plus regional configuration layers and models. You pilot locally, refine with agent feedback, then scale patterns that work to new markets while still tuning for local data. Over time, you maintain a family of regional models under one roof, supported by strong MLOps and change management practices.

How does Buzzi.ai’s local-first approach to real estate AI development differ from other vendors?

Buzzi.ai leads with structured discovery and local market mapping, then builds modular, geospatially-aware platforms tuned to your regions. We integrate MLS, public records, and proprietary data into explainable tools that agents can critique and improve over time. If you want to explore what that looks like in your markets, you can start with an AI discovery engagement or reach out via our contact page to design a tailored roadmap.