Design Production‑Grade AI Solutions That Survive Real Use

Most “production‑grade AI solutions” are just polished demos. Learn the operational standards, architecture patterns, and monitoring needed for real reliability.

Most so‑called production-grade AI solutions are just expensive demos wearing production clothes. They ace a scripted walkthrough, survive a small pilot, and then quietly fall apart the first time real users, messy data, and weekend traffic hit them. The problem isn’t the model’s accuracy; it’s that no one designed for monitoring, failover, on‑call, or the dozens of operational details that define real systems, not experiments.

This is the core gap: teams optimize for functional correctness (“does the model predict well in a notebook?”) but ignore operational completeness (“does the system still behave when Kafka lags, GPUs run hot, or a dependency changes its API?”). In other words, we confuse “works in a demo” with “works at 3 a.m. during a partial outage.” To run AI in production at scale, you need both model quality and the boring reliability characteristics your other mission‑critical services already have.

In this article, we’ll walk through what truly operationally complete AI looks like: the non‑functional requirements, architecture patterns, SLOs, and observability stack you should insist on. We’ll use concrete examples and checklists you can drop into RFPs or internal design docs. And we’ll share how we at Buzzi.ai approach production ml systems so that they behave like any other core service in your stack—predictable, observable, and supportable.

What Makes an AI Solution Truly Production-Grade?

Production-Grade vs Proof of Concept: A Working Definition

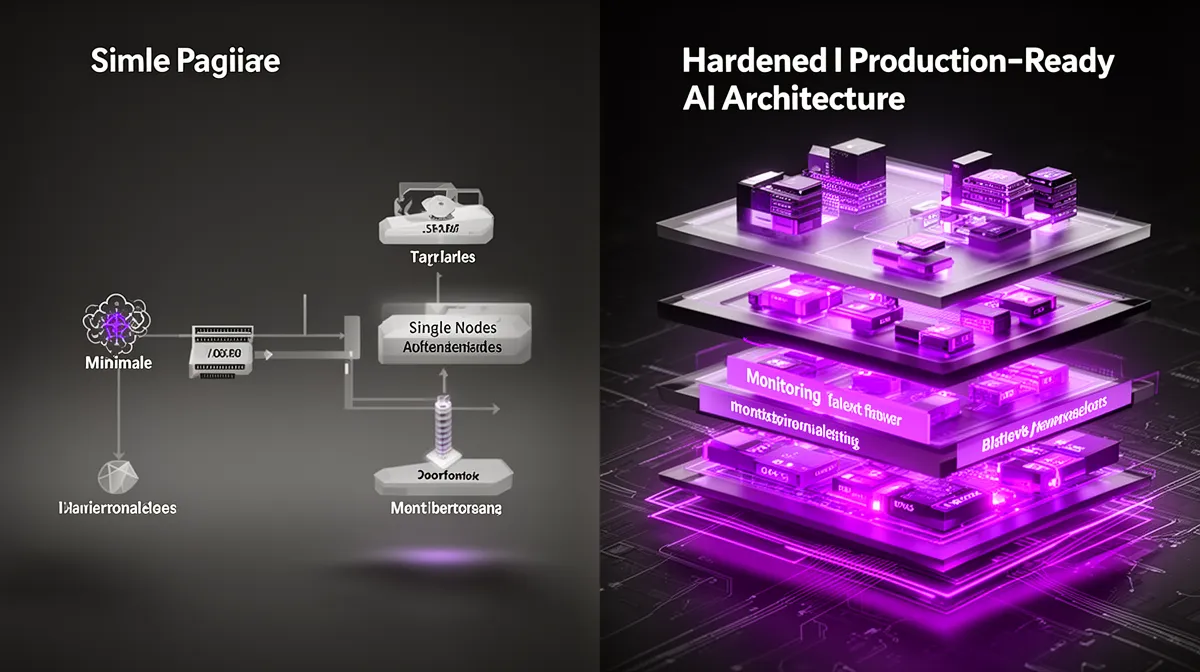

Most AI journeys start with a proof of concept. A small team gets access to data, hacks together a model, wires up a quick UI, and proves there’s signal. That’s valuable. But it tells you almost nothing about whether the result is a production-grade AI system.

A proof of concept answers: “Is there enough predictive power to be useful?” A production-grade AI solution must answer a very different set of questions: Can it meet reliability targets, handle real-world traffic patterns, integrate with security and compliance controls, and be operated by your existing teams? The POC is about feasibility; production is about durability.

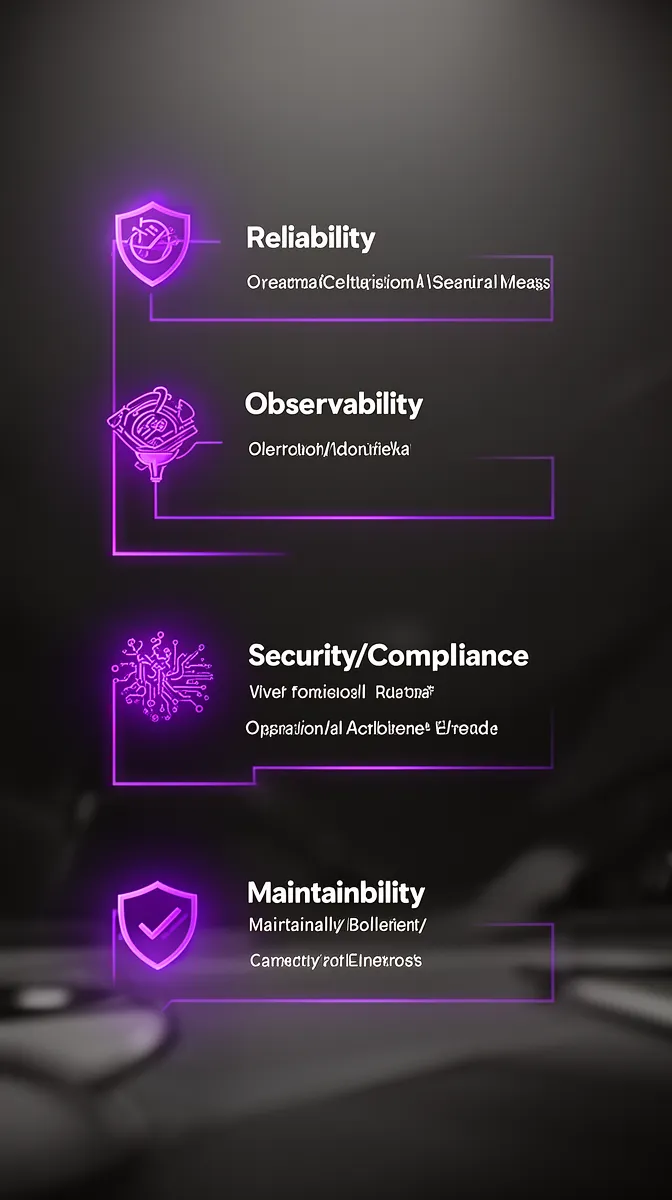

Think in dimensions:

- Functional accuracy: Does the model perform well on representative data?

- Reliability and scalability: Does the system stay up and responsive under peak load and partial failures?

- Observability: Can you see what's happening—infra, data, and model behavior—in real time?

- Security and compliance: Does it meet your auth, encryption, logging, and regulatory needs?

- Maintainability: Can new engineers understand, modify, and safely deploy it?

- Governance: Are changes auditable? Are risks (bias, misuse) managed?

A system that checks these boxes is not just accurate; it’s operationally complete. It’s a superset of a successful POC. Industry surveys back this up: McKinsey and others routinely find that most AI initiatives stall in pilot, with only a minority making it to scaled, reliable deployment.

We’ve seen this pattern repeatedly: a recommendation model works great in a lab, but when traffic spikes on a sales campaign, the unmonitored feature store lags, timeouts cascade, and the “AI experience” disappears. The issue wasn’t “bad AI”—it was the absence of rigor around AI in production as a first‑class operational concern.

Core Non-Functional Requirements for AI in Production

Non‑functional requirements (NFRs) are where “production‑grade” is actually defined. For AI, these NFRs look a lot like those for any critical service—but with added wrinkles for data and models. If you ignore them, your model’s ROC curve won’t save you.

Some of the most important NFRs for production ml systems and AI services:

- Uptime and high availability: Target 99.9%+ availability with clear SLAs and redundancy.

- Latency bounds: P99 and P50 latency targets appropriate to your UX (e.g., <200ms for a real‑time API).

- Throughput: Sustained and burst QPS targets with headroom.

- Security & privacy: AuthN/Z, encryption in transit and at rest, least privilege for services and pipelines.

- Auditability & explainability: Where needed, store inputs/outputs and explanations for key decisions.

- Observability stack: Metrics, logs, traces, plus model‑specific signals like drift and quality.

- Maintainability & supportability: Clear ownership, documentation, runbooks, and on‑call processes.

These are not “nice‑to‑haves.” They are the difference between a helpful tool and a liability that drags on your SRE and security teams. They should show up explicitly in your design docs and in your ML operations best practices, not just in someone’s head.

Operational Completeness as a Vendor Selection Criterion

When you evaluate vendors, accuracy and cost are the obvious axes. But if you don’t evaluate operationally complete AI solutions for enterprises as a separate dimension, you’re inviting trouble. A vendor that demos well but has no incident process is demo‑ware, not a partner.

When assessing enterprise ai implementation partners, ask questions like:

- What SLOs do you support, and how are SLIs measured and surfaced?

- How do you integrate with our monitoring stack? Can we get metrics into Prometheus, Datadog, or Splunk?

- What does your incident response playbook look like? Who is on call, and how are we involved?

- How do you handle rollbacks for bad model releases or data issues?

- What AI governance controls (access, audit trails, approvals) are built into the platform?

These questions separate vendors who think in terms of operationally complete AI from those who just want to ship a demo. At Buzzi.ai, we deliberately design production-grade AI solutions from day one: architecture docs, SLOs, monitoring hooks, and runbooks are deliverables, not optional extras. If you want to go deeper on how to implement LLMs in enterprise without high-profile failures, we’ve written about that separately.

The Non-Functional Checklist: Operational Requirements for AI

Once you accept that the real work of AI in production is operational, you need a concrete checklist. This is where many teams move from hand‑wavy “we’ll monitor it later” to explicit commitments around service level objectives, security, runtime observability, and ml ops processes. Think of this section as a template you can paste into your next design doc or RFP.

Reliability, Availability, and Error Budgets for AI Services

Reliability for AI services starts with the same math as any API. A 99.9% availability target means at most about 43 minutes of downtime per month; 99.99% shrinks that to roughly 4 minutes. Those numbers force clarity: are you really prepared to treat this AI service as mission‑critical?

Define SLIs (service level indicators) and SLOs for your AI endpoints. Typical SLIs include:

- Request success rate (e.g., non‑5xx responses).

- Latency (P50, P95, P99) for inference calls.

- Model quality metrics (precision/recall, click‑through rate, fraud catch rate) measured on recent traffic.

- Freshness of models and features (time since last update).

From here, you define error budgets. Suppose you set an SLO of 99.9% success rate for a recommendation API that serves 10M requests per month. Your error budget is 0.1% of 10M, or 10,000 failed requests per month. If a new model version burns through half that budget in two days, you halt risky changes and prioritize stability. This is where ai reliability becomes a quantitative discipline, not a vibe.

Observability, Monitoring, and Alerting for ML Workloads

Infrastructure metrics alone aren’t enough for ai in production. You need a complete observability stack—metrics, logs, traces—augmented with model‑specific signals. That’s how you detect when a dependency slows down, a feature distribution shifts, or your model’s precision degrades.

Key components:

- Metrics: QPS, latency, error rates, GPU/CPU utilization, queue depth.

- Logs: Request IDs, user IDs (where allowed), truncated inputs/outputs, error details.

- Traces: End‑to‑end traces across app, API gateway, model service, and databases.

- Model monitoring: Drift metrics, quality metrics on labeled feedback, anomaly scores.

Most enterprises already run tools like Prometheus, Grafana, or Splunk. The right move is to plug AI metrics into those systems, not create yet another silo. Prometheus and Grafana’s own docs on metrics and alerting best practices are good baselines for structuring this layer for ML workloads.

Security, Compliance, and Governance Expectations

AI governance is not purely an ethics conversation; it’s also about basic security and compliance hygiene. Models and pipelines access valuable data, sometimes regulated (GDPR, HIPAA, PCI). If your AI solution skirts your existing controls, your CISO will (rightly) object.

At minimum, enterprise ai implementation should include:

- Strong authentication and authorization around AI APIs and admin tools.

- Encryption in transit (TLS everywhere) and at rest.

- Least‑privilege access for model services, feature stores, and data pipelines.

- Audit logs for admin actions, model deployments, and significant predictions in sensitive domains.

- Data minimization and retention policies that align with GDPR/CCPA and, where applicable, HIPAA.

A bank or insurer, for example, might require that every model decision affecting credit or pricing be logged with features used, model version, and a traceable ID. That’s how you turn probing questions like “is this gdpr compliant ai development?” into confident answers.

Maintainability and Runbook-Level Support

Without maintainability, your AI stack becomes a fragile black box only the original team understands. Production‑grade AI solutions need clean deployment pipelines, versioning, and documentation that make ai system maintenance routine rather than heroic.

Every critical AI service should have:

- CI/CD pipelines with automated tests (unit, integration, data validation).

- Versioned models, data schemas, and configuration.

- Runbooks and playbooks for common failure modes (drift, bad data, upstream API changes).

- Defined on‑call rotations and escalation paths for incident response.

A model drift incident runbook, for instance, might include: confirm alert validity, inspect recent feature distributions, check for upstream schema changes, temporarily route traffic to a backup model, and, if needed, roll back to a previous version. That’s what ai operations best practices look like when written down instead of improvised.

Architecture Patterns for Resilient Production-Grade AI

Architecture is where many AI projects lock in their future reliability—for better or worse. Embedding a model deep in a monolith with no clear API boundary is fast initially but painful later. The best architecture for production-grade machine learning solutions treats models as services: isolated, observable, and replaceable.

Decoupled Microservices and Sidecar Patterns for ML

Isolate model inference behind a well‑defined API or microservice. This keeps your core app logic clean and your ai system architecture flexible. You can scale the model independently, change runtimes, or even swap model providers without rewriting the whole application.

In a typical setup, a recommendation model runs as a separate microservice. The web app calls it via gRPC/HTTP, passing user and context features. A sidecar container handles logging, metrics, and model monitoring—emitting latency, error rates, and selected feature stats to your observability stack. This way, you can evolve production ml systems while keeping the boundary with the rest of your microservices clear.

High Availability and Automated Failover for AI Endpoints

Once the model is decoupled, the next step is high availability. Run active‑active across zones or regions, fronted by a load balancer with health checks. If one region’s AI cluster degrades, traffic automatically shifts to another—this is automated failover in practice.

For truly critical services, you should also implement graceful degradation. If both regions are struggling, fall back to a simpler rules engine or cached responses. When you think about how to build production-grade ai systems with failover, the answer is layered defenses: redundant infra, smart traffic routing, and sensible fallbacks that preserve core user value even when the fancy model is offline.

Safe Deployment: Canary, Blue-Green, and Rollback Strategies

Shipping a new model into production should never be an all‑or‑nothing gamble. Use canary releases: route 1–5% of traffic to a new model version, compare metrics (latency, error rate, business KPIs), and then gradually increase if everything looks good. If metrics degrade, roll back automatically.

Blue-green deployment takes this further by running two identical production environments (“blue” and “green”) and switching traffic between them. This simplifies rollback strategies: if the new model or pipeline misbehaves, switch back instantly. All of this should be wired into a disciplined deployment pipeline so that every change is versioned, reviewed, and monitored.

Resilience Patterns: Circuit Breakers, Timeouts, and Retries

Even with solid infra, individual requests will fail. Resilience patterns from traditional microservices matter just as much for ai in production. A circuit breaker around the AI API prevents cascading failures if latency spikes or error rates climb.

Thoughtful timeouts and retry policies are crucial. In many user‑facing flows, it’s better to time out and fall back to cached results than to hang waiting for an overloaded GPU. Integrated with your API gateway or service mesh, these patterns ensure your ai system architecture behaves predictably—even when parts of the system are struggling.

Designing Observability for AI: From Metrics to Model Drift

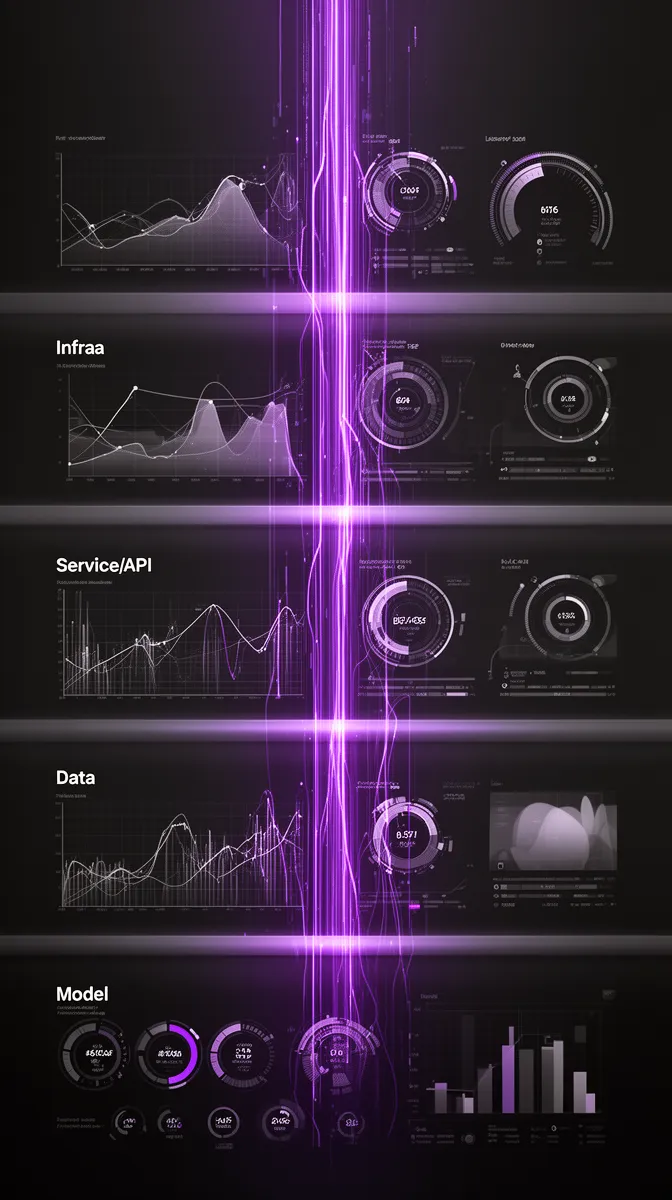

Most organizations have strong observability for web apps and databases, but AI adds two new layers: data and model behavior. An enterprise production-grade ai platform with observability has to make all four layers—infra, service, data, and model—visible. If you miss one, you’ll be flying partially blind.

Four Layers of Observability: Infra, Service, Data, and Model

A practical runtime observability strategy for AI covers:

- Infrastructure: CPU/GPU utilization, memory, disk, network—standard SRE metrics.

- Service/API: Latency, error rates, throughput, saturation on the AI endpoints.

- Data: Volume, freshness, schema validity, missing values—core data pipeline reliability metrics.

- Model: Performance metrics (precision, recall, CTR), drift indicators, bias checks where needed.

A traditional web service might only track the first two. Production ml systems that ignore data and model signals end up with nasty surprises: the infra looks fine, the API is up, but predictions quietly get worse because the input distribution changed. That’s how mean time to resolution (MTTR) stretches from minutes to days.

Integrating Model Monitoring with Existing APM and Logging

The right move for most enterprises is not to create a separate monitoring island for AI. Instead, pipe model metrics into existing APM/logging stacks like Prometheus/Grafana, Datadog, New Relic, or Splunk. That way, infra, API, and model behaviors share dashboards, alerts, and on‑call rotations.

For example, you might export per‑model metrics—latency, error rate, precision on recent labeled samples—to Prometheus and visualize them in Grafana alongside cluster utilization. Correlation IDs and tagging conventions let you trace a user request from the app through the model service to the database. Datadog’s own guidance on integrating ML workloads into APM illustrates this pattern, and New Relic provides similar examples.

The benefit is cultural as much as technical: SREs, data scientists, and product managers all look at the same dashboards. That’s what real ai system maintenance and apm integration feel like when done well.

Real-Time Detection of Model Drift and Data Quality Issues

Drift is how models fail in slow motion. Model drift detection is about spotting when the world your model sees no longer looks like its training data. In practice, we talk about three kinds: data drift (inputs change), concept drift (the relationship between inputs and outputs changes), and label drift (output distributions shift).

Operationally, you monitor distributions of key features and outputs, comparing current windows (e.g., last 24 hours) to reference windows (e.g., training data or last month). When statistical distances exceed alerting thresholds, you fire alerts. Automated responses might include triggering retraining, shifting some traffic to a backup model, or paging humans with enough context to investigate.

An e‑commerce recommendation system is a classic example. During a seasonal event, user behavior changes dramatically. If you aren’t watching click‑through rates, conversion rates, and feature distributions in near real time, your “personalization” can quietly degrade. With solid ml operations best practices around drift, you detect the change early and adapt—rather than discovering months later that your AI stopped adding value.

From Prototype to Operationally Complete AI Lifecycle

So how do you move from “we have a cool model” to an operationally complete ai solution for enterprises? The answer is to treat AI as a product with a lifecycle, not a project that ends at deployment. This is where ml ops, governance, and software engineering meet.

An End-to-End Lifecycle for Production-Grade AI

A robust ai development lifecycle typically follows these stages:

- Discovery and use‑case selection: Clarify the business problem, success metrics, and constraints.

- Data readiness: Assess data availability, quality, and lineage; establish pipelines.

- Model development: Experiment, train, and validate models against business metrics.

- Pre‑production hardening: Design ai system architecture, security, observability, SLOs, and failure modes.

- Deployment: Use a disciplined deployment pipeline (canary, blue‑green) to go live.

- Monitoring and operations: Continuous ai in production monitoring, incident handling, and drift management.

- Continuous improvement: Incorporate feedback, retrain, and refine both models and processes.

Ownership shifts across stages: data science may lead early, platform/ML ops teams own deployment and reliability, and product teams own outcomes. The key is explicit handoffs and gates. Before a model enters production, it must satisfy operational checklists, not just accuracy thresholds. That’s how enterprise ai implementation turns from a series of bets into a repeatable capability.

If you’re at the beginning of this journey, engaging in structured AI discovery and architecture-first scoping can save months of rework and avoid painting yourself into an architectural corner.

Ownership, Runbooks, and Incident Response for AI Services

One of the most painful anti‑patterns we see is AI systems with no clear owner. SRE assumes “data science owns it”; data science assumes “platform owns it.” In production, that ambiguity becomes pager noise. Mature teams define ownership and incident response up front.

Every AI service should have a directly responsible team for uptime and performance. That team should maintain runbooks covering: typical symptoms (e.g., rising error rate, degraded CTR), likely causes (infra vs data vs model), diagnostics (dashboards, logs, data checks), mitigations (rollback, traffic routing, disabling features), and escalation paths. That’s how you turn ai operations from best‑effort heroics into a predictable process.

Versioning, Rollback, and Change Management

Versioning is deceptively simple: tag models with versions. In reality, you need synchronized versioning across models, datasets, feature definitions, and code. Without that, rollback strategies break: you can’t truly restore a previous state if the data or features differ.

Good practice includes:

- Immutable model artifacts with semantic or commit‑based version IDs.

- Dataset snapshots and feature store versions linked to each model version.

- Deployment manifests capturing config, thresholds, and dependencies.

- Change management processes for approvals, risk assessment, and post‑deployment reviews.

In a well‑designed deployment pipeline, a bad release is annoying, not catastrophic. You detect issues quickly, flip back to a known‑good version, and analyze what went wrong. That’s what real ai governance looks like in practice.

Estimating and Managing the Cost of Operating AI in Production

Operating AI is not free. Ongoing costs include compute for inference (and retraining), data storage, monitoring tooling, on‑call time, and regular ai system maintenance. These are part of your real AI development cost, not overhead you can wish away.

Industry reports on AI TCO and ROI consistently show that the biggest hidden costs come from instability and ad‑hoc operations. A rushed prototype pushed into production might be cheap initially but expensive over time: frequent incidents, slow diagnosis, and mistrust from stakeholders. An operationally complete service costs more up front but recoups that through fewer outages and faster, safer iteration.

Back‑of‑the‑envelope: a fragile system that triggers one major incident per quarter can easily burn dozens of engineer‑hours each time, plus opportunity cost. Multiply that by headcount and lost revenue, and the ROI of doing things right—designing operationally complete ai solution for enterprises from day one—starts to look obvious.

How Buzzi.ai Delivers Production-Grade, Operationally Complete AI

Everything above is the standard we hold ourselves to. At Buzzi.ai, we don’t just ship models; we build production-grade AI solutions that your ops team can live with. That means architecture‑first design, integrated observability, and support structures that make AI feel like any other well‑run service in your environment.

Architecture-First Approach: Designing for Operations Upfront

We start every engagement with operational requirements, not just model performance targets. What SLOs do we need? How will this plug into your existing ai system architecture, security model, and observability stack? What are the acceptable latency bounds and failure modes?

From there, we design reference architectures tuned to your environment—cloud‑native, hybrid, or on‑prem‑heavy—without forcing a particular vendor. Architecture docs, SLO definitions, and runbooks are core deliverables. When we’ve taken fragile pilots and hardened them, the work often looks like this: extract models from ad‑hoc scripts, wrap them as services, add monitoring sidecars, define ml operations best practices, and deploy using canary/blue‑green patterns.

Integrated Monitoring, Governance, and Support

We design enterprise production-grade ai platform with observability by default. That means wiring model monitoring into your existing tools (Datadog, Prometheus/Grafana, Splunk, etc.) instead of deploying a disconnected dashboard. You get unified runtime observability across infra, service, data, and model layers.

On the governance side, we implement versioning, audit trails, and access controls aligned to your policies. And we don’t disappear after launch: we focus on knowledge transfer, shared runbooks, and getting your teams ready for on‑call. We can also support AI readiness assessments and phased rollouts to reduce organizational risk.

From Pilot to Scaled Platform: Standardized Playbooks

Our goal isn’t just to make one model work. It’s to help you build an internal capability—a reusable platform and playbooks that support multiple AI use cases. We standardize components like feature stores, CI/CD patterns, and observability templates so you can go from single pilot to a broader AI transformation.

That’s how we help enterprises move from “we launched one chatbot” to a portfolio of reliable AI services in sales, operations, and customer support. Under the hood, it’s the same pattern: operationally complete AI, scaled via repeatable practices. If you’re looking for production-grade AI agent development with this mindset baked in, that’s exactly our focus.

Conclusion: Audit Your AI Before Reality Does

By now the pattern should be clear: production-grade AI solutions are defined less by clever models and more by operational completeness. Reliability, observability, governance, and maintainability turn prototypes into durable systems. Without them, “AI in production” is just a fragile demo waiting for the wrong night to fail.

Architectural discipline—microservices, failover, canary/blue‑green deployments, circuit breakers—provides the backbone. Observability that spans infra, service, data, and model layers gives you the eyes. A lifecycle with clear ownership, runbooks, versioning, and cost awareness gives you the muscles and nerves. Together, they turn operationally complete from a buzzword into a practical standard.

If you’re unsure how your current systems stack up, start by auditing them against the checklists in this article. Where you find gaps, we’re here to help. Talk with Buzzi.ai about hardening existing pilots or designing new, production‑grade AI solutions from day one—for systems that don’t just demo well, but survive real use.

FAQ

What makes an AI solution truly production-grade rather than just a proof of concept?

A production-grade AI solution goes beyond model accuracy to meet operational standards for reliability, security, observability, and supportability. It behaves like any core service: it has SLOs, monitoring, incident processes, and governance. A proof of concept validates feasibility; production-grade AI delivers durable value under real-world conditions.

Which operational requirements must every production-grade AI solution satisfy?

At a minimum, every production-grade AI solution needs clear SLOs and SLIs, high availability targets, and latency bounds that match the user experience. It must integrate with your security model (auth, encryption, least privilege) and your observability stack (metrics, logs, traces, model monitoring). Maintainability, documented runbooks, and defined on-call ownership complete the operational picture.

How should I architect an AI system for high availability and automated failover?

Architect AI services as decoupled microservices, deployed across multiple zones or regions behind a load balancer with health checks. Use active-active or active-passive setups with shared or replicated models and feature stores, plus automated failover when health checks fail. Add graceful degradation paths—rules engines or cached responses—so the user experience degrades gracefully if all AI endpoints struggle.

What monitoring and observability tools are needed for AI in production?

You can usually extend the tools you already use for other services, such as Prometheus/Grafana, Datadog, New Relic, or Splunk. The key is to add AI-specific metrics—model performance, drift, data quality—alongside standard infra and API metrics. A unified observability stack reduces blind spots and makes AI incidents visible to your existing operations teams.

How do you integrate model monitoring with existing APM and logging stacks?

Expose model metrics (latency, error rates, quality, drift scores) via endpoints or sidecars and scrape or ship them into your APM/monitoring tools. Use consistent correlation IDs and tagging so you can trace requests end-to-end and segment metrics by model version or tenant. Teams like ours at Buzzi.ai typically wire these metrics into Grafana, Datadog, or similar systems so SRE and data science share the same dashboards.

What are common failure modes of AI systems in production and how do you mitigate them?

Common failure modes include data drift, upstream schema changes, resource exhaustion (GPU/CPU), and silent model regressions after updates. You mitigate these with layered defenses: data validation, drift monitoring, SLOs with alerting, canary releases, and robust rollback strategies. Runbooks and incident response processes tailored to AI shorten diagnosis and recovery times.

How do you define SLOs, SLIs, and error budgets for AI-driven services?

Start with user and business expectations—response times, reliability, and acceptable error rates. Define SLIs such as request success rate, latency percentiles, and model quality metrics; then set SLO targets (e.g., 99.9% success, P95 < 300ms). Error budgets quantify how much unreliability you can tolerate; if a new model or feature burns through the budget, prioritize stability over further changes.

How should versioning and rollback be handled for models and data pipelines?

Version models, datasets, feature definitions, and deployment configs together so you can reconstruct any deployed state. Use immutable artifacts, tagged snapshots, and a deployment pipeline that supports blue-green or canary strategies with one-click rollback. This synchronized versioning and rollback capability is essential for safe iteration and for maintaining trust in AI systems.

What does an operationally complete AI development lifecycle look like end-to-end?

An operationally complete lifecycle covers discovery, data readiness, model development, pre-production hardening, deployment, and ongoing monitoring and improvement. Each stage has clear owners, gates, and deliverables, from architecture designs and SLOs to runbooks and governance artifacts. Instead of ending at “model shipped,” the lifecycle treats operations and continuous learning as first-class phases.

How does Buzzi.ai ensure its AI solutions are operationally complete for enterprise environments?

Buzzi.ai starts with architecture and operational requirements—including SLOs, security, observability, and governance—before writing models. We design integrations with your existing stacks, implement model monitoring and drift detection, and produce runbooks and documentation as standard deliverables. Our goal is to deliver AI systems your ops team can trust, not just demos that look good in a meeting.