Predictive Analytics Services That Actually Change Decisions

Redefine predictive analytics services around operationalization: embed models into decision workflows, applications, and MLOps for real business impact.

Most predictive analytics services quietly fail—not because the models are bad, but because nothing in the business actually changes. Predictions sit in notebooks, dashboards, or slide decks while frontline teams keep making decisions the old way. The real gap isn’t in data science; it’s in operationalization.

If you’ve ever sponsored a "successful" analytics project that produced a beautiful model and then… nothing happened, you already know this pain. The ROI story falls apart not at the algorithm, but at the point where predictions should drive behavior in sales, operations, risk, or support. That’s where most predictive analytics consulting work stops—and where value was supposed to start.

In this article, we’ll reframe predictive analytics services for business decision making around one non-negotiable: value only appears when predictions are wired into decision workflows, applications, and MLOps practices that keep them alive in production. We’ll unpack what operationalization-inclusive services look like, show concrete integration patterns, and give you criteria to evaluate vendors. Along the way, we’ll share how we at Buzzi.ai approach implementation as an AI partner focused on decisions, not just models.

Predictive Analytics Services Are Broken Without Operationalization

Beyond Models: What Predictive Analytics Services Should Include

Ask ten vendors what their predictive analytics services include, and you’ll hear a familiar checklist: data exploration, a feature engineering pipeline, model training, some validation metrics, maybe a dashboard. Occasionally, there’s a shiny demo in a BI tool. On paper, this looks like an end-to-end analytics service.

What’s usually missing is everything that connects those predictions to real-world decisions. The model lives in a Jupyter notebook or a model registry; the dashboard lives in a browser tab that managers open once a month. But the systems and people who actually make daily decisions—CRM users, operations teams, call center agents—are untouched.

An operationalization-ready predictive analytics service looks different from day one. It explicitly scopes the decision workflows that will consume predictions, the systems that must be integrated, the form factors users will see, and the deployment and monitoring strategy. In other words, it treats machine learning deployment and decision change as parts of the same project, not as an optional phase two.

Consider a classic churn prediction project. A vendor builds a strong model, delivers a list of at-risk customers, and shows a lift curve in the deck. Everyone nods. But nobody changes the retention team’s daily routine or updates the CRM to surface these scores at call time. Three months later, the "churn model" is a static report in someone’s inbox. That is proof that even sophisticated predictive analytics consulting, model deployment included, is incomplete without workflow design.

Why So Many Predictive Projects Fail to Change Decisions

When predictive initiatives fail, people often blame the model: "The accuracy wasn’t high enough," or "The data wasn’t good." In reality, the more common failure modes are organizational and architectural, not mathematical. The hand-off from data science to the rest of the business is where projects quietly die.

Typical issues include: hand-off at model delivery with no budget for integration; vague assumptions that IT will "productionize" the model later; and unclear ownership between data, IT, and business teams. No one owns model operationalization as a discipline. The result is a graveyard of promising proofs-of-concept that never reach production.

There are also structural gaps. Many organizations lack the MLOps capabilities to support production deployment, monitoring, and retraining. Decision owners aren’t clearly identified, so no one is accountable for changing workflows or incentives. Experimentation is politically risky, so teams cling to existing decision support systems even when those systems are clearly suboptimal.

None of this is hypothetical. Industry surveys routinely show that only a minority of AI and analytics models make it into production or drive broad adoption. For example, McKinsey has reported that only a small fraction of companies achieve material, scaled value from AI despite significant investments (McKinsey AI survey). That’s not a failure of algorithms. It’s a failure to design and wire up the decision workflows in which predictions actually drive analytics ROI.

So when you see a predictive project that "worked" technically but didn’t move KPIs, look first at change management for analytics, not at the ROC curve. Did anyone redesign the process? Did any system change? Were frontline teams given new tools, or just new numbers? Until we correct these gaps, predictive analytics services will keep overpromising and underdelivering.

What Operationalization-Inclusive Predictive Analytics Services Look Like

From Predictions to Decisions: The Missing Middle Layer

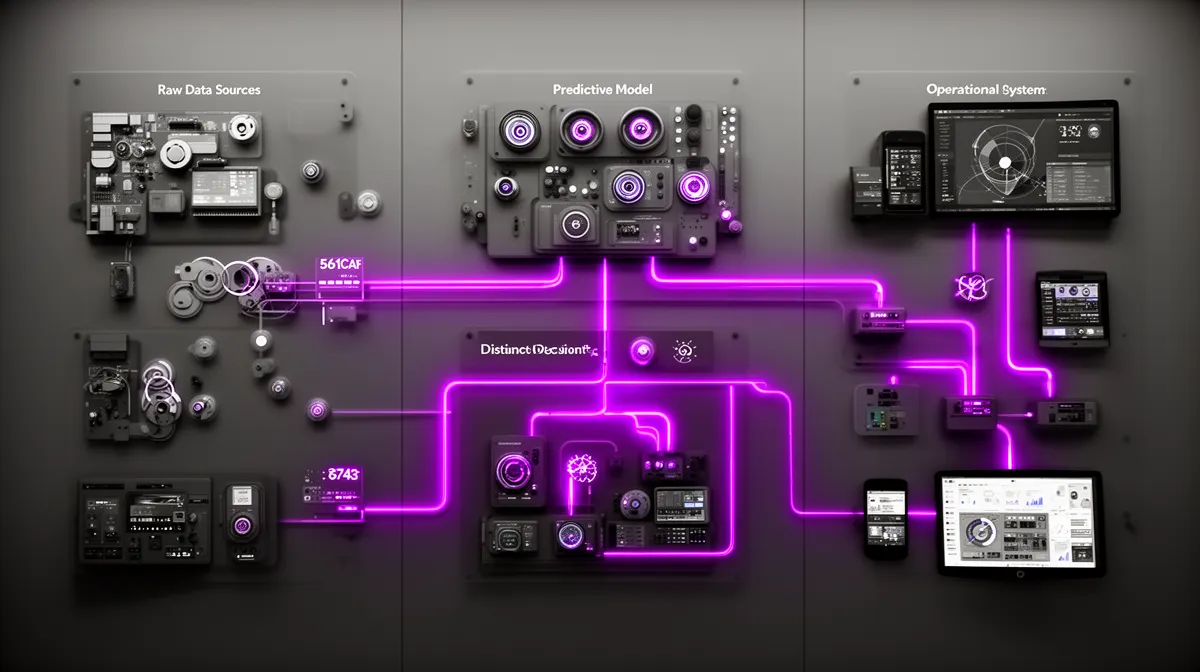

The way out is to treat predictions as raw material, not as the finished product. Between models and applications sits a missing middle layer: the decision layer. That’s where decision workflows, business rules, and human judgment come together to turn scores into actions.

Think of the model as an oracle that whispers, "This customer has a 72% chance of churning." On its own, that’s interesting but not actionable. The decision layer answers the next questions: What should we do? Under what conditions? Who approves it? Which channel executes it? That is decision automation and decision support in practice.

In a credit risk example, a risk model might output a default probability for each loan application. A decision engine then combines that probability with business rules (e.g., regulatory thresholds, policy constraints, profitability caps) to decide whether to auto-approve, auto-decline, or route to manual review. A human-in-the-loop queue handles borderline cases. This is predictive analytics as a service with decision orchestration, not just a score in a spreadsheet.
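A minimal Python sketch of that decision layer follows. It assumes the model has already produced a default probability. The `CreditPolicy` thresholds and the loan-to-income rule are hypothetical placeholders, not recommended values. Hard policy rules run before the score is consulted, so the model can never approve something the policy forbids.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CreditPolicy:
    # Hypothetical values for illustration; real ones come from credit policy.
    auto_approve_below: float = 0.02   # default probability under which we auto-approve
    auto_decline_at: float = 0.15      # default probability at or above which we decline
    max_loan_to_income: float = 5.0    # hard business rule, independent of the model

def decide(default_prob: float, loan_amount: float, annual_income: float,
           policy: CreditPolicy = CreditPolicy()) -> str:
    """Turn a model score plus business rules into an action."""
    if not 0.0 <= default_prob <= 1.0:
        raise ValueError("default_prob must be in [0, 1]")
    # Policy constraints are checked first; the score cannot override them.
    if annual_income <= 0 or loan_amount / annual_income > policy.max_loan_to_income:
        return "auto_decline"
    if default_prob >= policy.auto_decline_at:
        return "auto_decline"
    if default_prob < policy.auto_approve_below:
        return "auto_approve"
    # Everything in between goes to a human-in-the-loop review queue.
    return "manual_review"

print(decide(0.01, 20_000, 60_000))  # auto_approve
print(decide(0.08, 20_000, 60_000))  # manual_review
```

The thresholds live in a policy object rather than in the model. Risk teams can therefore adjust them through their own approval process without retraining anything.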

This is also where the emerging discipline of decision intelligence comes in. Decision intelligence blends analytics, UX, and operations design into a single practice focused on improving decision quality. It treats the model, the business rules engine, and the user interface as a cohesive system. And it’s exactly the layer missing from most predictive analytics consulting engagements.

Core Capabilities of Operationalized Predictive Services

If we start from this framing, the capabilities of mature, operationalized predictive services look very different from a modeling-only pitch deck. Yes, you still need data exploration, feature engineering, and model development. But that’s only one slice of the work.

First, there’s requirements discovery around decisions: mapping who decides what, using which inputs, under what constraints, and with what service-level expectations. Then comes integration framework design: which systems will consume predictions, through what interfaces, and with which production deployment patterns, balancing real-time scoring against batch scoring.

Next, there’s model lifecycle management: monitoring, retraining, rollback, and A/B testing. Model monitoring isn’t just about technical metrics—it’s also about business KPIs. Finally, there’s change management: training users, updating SOPs, and aligning incentives so people actually trust and use the new decision support.

Delivering all this requires cross-functional teams. A credible AI implementation partner will put data scientists, MLOps engineers, integration engineers, UX designers, and domain experts on the same engagement. That’s what real end-to-end analytics services look like: not just a model, but a living decision capability embedded in the business.

Designing Decision Workflows That Consume Predictive Scores

Start from the Decision, Not the Data

Most organizations start predictive projects by asking, "What data do we have?" The better question is, "Which decision are we trying to improve?" Decision-backwards design forces clarity and dramatically increases the odds that predictive analytics services for business decision making actually change behavior.

To design a decision workflow, you map the basics: Who is the decision maker? What exactly are they deciding? How often? What inputs do they use today? What systems do they work in? What’s the SLA—seconds, minutes, days? What level of error is tolerable, and what are the escalation paths when the model is uncertain?

This mapping has direct implications for architecture. A decision that happens in a customer-facing UI within seconds likely requires real-time scoring via API. A monthly planning decision might be fine with batch scoring. Decisions that are legally sensitive may require explainability and human review; others can be fully automated. In all cases, we’re designing decision support systems, not just prediction engines.
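One way to keep this mapping honest is to capture the answers as data, so that architecture choices follow from them. This is a hypothetical Python illustration. The `DecisionSpec` fields and the 60-second cut-off for real-time serving are our assumptions, not a standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DecisionSpec:
    """Answers to the decision-mapping questions (fields are illustrative)."""
    name: str
    decision_maker: str
    sla_seconds: float       # how fast the decision must be made
    legally_sensitive: bool  # e.g., credit or insurance eligibility

# Hypothetical cut-off: decisions needed within a minute get an online API.
REAL_TIME_SLA_SECONDS = 60

def architecture_for(spec: DecisionSpec) -> dict:
    """Derive serving and review patterns from the decision, not from the data."""
    scoring = "real_time_api" if spec.sla_seconds <= REAL_TIME_SLA_SECONDS else "batch"
    review = "human_review" if spec.legally_sensitive else "automated"
    return {"decision": spec.name, "owner": spec.decision_maker,
            "scoring": scoring, "review": review}

quote = DecisionSpec("price quote", "checkout service", sla_seconds=0.2,
                     legally_sensitive=False)
print(architecture_for(quote))
```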

Take a customer retention example. Instead of "build a churn model," we define the decision as: "Each week, which customers should receive which retention treatment, through which channel?" From that, we derive requirements: churn scores at weekly cadence, integration into the campaign tool, constraints on discount costs, and reporting on uplift. The model becomes one component in a designed workflow.
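The weekly retention decision can be sketched as a budget-constrained assignment. The sketch below assumes that churn scores already exist and that each treatment has a known cost and an assumed "save rate". Those rates, the treatments, and the customer values are hypothetical. Selection uses a simple greedy heuristic by expected net value, which is not an optimal knapsack solution.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Treatment:
    name: str
    channel: str
    cost: float
    save_rate: float  # assumed share of would-be churners this treatment retains

def plan_week(customers, treatments, budget):
    """customers: iterable of (customer_id, churn_prob, customer_value).
    Returns (assignments, total_cost) using a greedy budget heuristic."""
    candidates = []
    for cid, churn_prob, value in customers:
        def net(t):
            # Expected value saved minus treatment cost.
            return churn_prob * t.save_rate * value - t.cost
        best = max(treatments, key=net)
        if net(best) > 0:  # skip customers where no treatment pays for itself
            candidates.append((net(best), cid, best))
    plan, spent = [], 0.0
    # Highest expected net value first; skip anyone who would break the budget.
    for _, cid, t in sorted(candidates, key=lambda c: c[0], reverse=True):
        if spent + t.cost <= budget:
            plan.append((cid, t.name, t.channel))
            spent += t.cost
    return plan, spent

treatments = [Treatment("discount_10", "email", 20.0, 0.30),
              Treatment("retention_call", "phone", 50.0, 0.50)]
customers = [("a", 0.8, 1000.0), ("b", 0.1, 200.0), ("c", 0.5, 600.0)]
print(plan_week(customers, treatments, budget=80.0))
# ([('a', 'retention_call', 'phone')], 50.0)
```

Each weekly run produces a list of customers, treatments, and channels that the campaign tool can consume directly. Logging the same list is also the starting point for the uplift reporting mentioned above.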

Patterns for Human-in-the-Loop Decision Support

Full decision automation can be tempting, but it’s often risky—especially early on, or in high-stakes contexts. Human-in-the-loop patterns strike a balance between efficiency and control. They also build trust in predictive analytics over time.

One common pattern is ranked work queues. For example, in collections, agents might see a queue of delinquent accounts ordered by predicted likelihood of repayment if contacted today. The model affects which cases agents see first, but humans still decide how to handle each case. Over time, we can use feedback to improve both the model and the workflow.
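A ranked queue is little more than a sort on the model's score, plus a record of what agents actually did. In this Python sketch the field names and the tie-break on balance are hypothetical choices, and `feedback_log` stands in for whatever table would later feed retraining.

```python
import heapq

def _priority(account):
    # Predicted repayment-if-contacted-today first; larger balance breaks ties.
    return (account["repay_prob"], account["balance"])

def ranked_queue(accounts, top_n=None):
    """Order delinquent accounts for agents, highest priority first."""
    if top_n is None:
        return sorted(accounts, key=_priority, reverse=True)
    return heapq.nlargest(top_n, accounts, key=_priority)

feedback_log = []

def record_outcome(account_id, action, paid):
    """Capture what the agent chose and what happened, for later retraining."""
    feedback_log.append({"account_id": account_id, "action": action, "paid": paid})

accounts = [
    {"account_id": "x", "repay_prob": 0.20, "balance": 500},
    {"account_id": "y", "repay_prob": 0.70, "balance": 100},
    {"account_id": "z", "repay_prob": 0.70, "balance": 900},
]
print([a["account_id"] for a in ranked_queue(accounts)])  # ['z', 'y', 'x']
```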

Another pattern is approval workflows triggered by scores. In insurance claims, a low-risk, low-value claim might be auto-approved, while medium-risk claims get fast-track human review with suggested actions, and high-risk claims go to a specialist queue. Here, human-in-the-loop design and model governance go hand in hand: we define thresholds, override rights, and logging up front as part of change management for analytics.
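The three-tier claims routing can be written as a small, explicit rule function. This is a Python sketch with placeholder thresholds. The text doesn't say where low-risk but high-value claims belong; routing them to fast-track review is our assumption.

```python
def route_claim(risk_score: float, claim_value: float,
                low_risk: float = 0.2, high_risk: float = 0.7,
                auto_value_limit: float = 1_000.0) -> str:
    """Three-tier claims routing; every threshold here is a placeholder."""
    if risk_score >= high_risk:
        return "specialist_queue"        # high risk: specialist investigation
    if risk_score < low_risk and claim_value <= auto_value_limit:
        return "auto_approve"            # low risk and low value: straight through
    return "fast_track_review"           # everything else: quick human check

print(route_claim(0.05, 400.0))   # auto_approve
print(route_claim(0.90, 400.0))   # specialist_queue
```

Keeping the thresholds as named parameters makes them easy to document, approve, and log alongside each routing decision.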

The key is to align confidence thresholds with business risk and operational capacity. Early in a deployment, you might keep thresholds conservative and manually review more cases. As model operationalization matures and trust grows, you can expand the automated segment. This incremental approach makes adoption politically and practically feasible.
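Aligning thresholds with capacity can be made concrete. The Python sketch below assumes a sample of recent risk scores, such as one day's volume, a reviewer capacity, and a `risk_ceiling` set by the risk owner; all names are hypothetical. It picks the most conservative automation threshold whose manual-review volume still fits capacity.

```python
def choose_auto_threshold(scores, review_capacity, risk_ceiling):
    """Return (threshold, review_volume) for a score-based automation rule.

    Cases with score < threshold are automated; the rest go to reviewers.
    We pick the lowest (most conservative) threshold whose review volume
    fits capacity, and never let the threshold exceed risk_ceiling.
    """
    candidates = sorted(t for t in set(scores) | {risk_ceiling} if t <= risk_ceiling)
    for t in candidates:
        review_volume = sum(1 for s in scores if s >= t)
        if review_volume <= review_capacity:
            return t, review_volume
    # Even at the ceiling, reviewers are over capacity: keep the ceiling
    # and surface the shortfall instead of automating riskier cases.
    return risk_ceiling, sum(1 for s in scores if s >= risk_ceiling)

daily_scores = [0.05, 0.1, 0.1, 0.3, 0.6, 0.8]
print(choose_auto_threshold(daily_scores, review_capacity=3, risk_ceiling=0.5))
# (0.3, 3)
```

Raising `risk_ceiling` as trust grows is one way to expand the automated segment gradually, as the paragraph above describes.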

Embedding Predictions Into Daily Tools and Journeys

Even the best-designed decision workflow fails if it lives in a tool nobody uses. Operationalizing predictive analytics means meeting users where they already work: CRM, ERP, ticketing systems, and internal portals. That’s where many projects stall, because it demands integration engineering and product thinking, not just data science.

In a CRM like Salesforce or HubSpot, this might mean adding inline risk indicators next to each customer, along with next-best-action suggestions. In an ERP like SAP, it could be dynamic safety stock levels or lead-time adjustments powered by forecasts. In support tools like Zendesk, tickets might be tagged with predicted priority and recommended macros for resolution.

These are concrete ways to integrate predictive analytics into business workflows. They often require custom UI components, API calls to scoring services, and changes to routing logic or SLAs. When done well, users don’t talk about "the model." They talk about how their queue feels smarter, or how they’re spending more time on the right work.

There is a growing number of case studies of predictive models embedded in CRM and ERP flows that drive measurable uplift. Examples include predictive lead scoring improving conversion rates and demand forecasting reducing stockouts (Salesforce AI case examples). But the pattern is always the same: the impact doesn’t come from a dashboard alone; it comes from reshaping day-to-day journeys. This is exactly where strong workflow and process automation capabilities become a competitive advantage.

Integration Patterns to Connect Models to Real Systems

API-First Model Serving and Microservices Integration

Once decision workflows are clear, we can design the plumbing. For user-facing or near-real-time use cases, the dominant pattern is API-first model serving. The model is wrapped in a REST or gRPC service behind an authentication and observability layer. Multiple applications can then request real-time scoring as needed.

For example, a pricing optimization model might sit behind an API that web, mobile, and call center channels all call when they need a price quote. Each call passes relevant features, and the API returns a recommended price and confidence interval within tens of milliseconds. This is where API integration, versioning, and performance SLAs become core parts of any predictive analytics consulting service that includes model deployment.
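To show the shape of such a service, here is a minimal sketch using only Python's standard library. The route `/v1/price`, the feature names, the `MODEL_VERSION` tag, and the linear "pricing model" are all hypothetical. Authentication, TLS, and metrics are omitted. The Python docs also caution that `http.server` is not meant for production, so a real deployment would use a proper serving stack. The point is the contract: validated features in, a versioned price and interval out.

```python
import json
from http.server import BaseHTTPRequestHandler

MODEL_VERSION = "pricing-v3"   # hypothetical version tag returned with every response
REQUIRED_FEATURES = ("base_cost", "demand_index")

def score(features: dict) -> dict:
    """Stand-in for a trained pricing model (a made-up linear rule)."""
    missing = [f for f in REQUIRED_FEATURES if f not in features]
    if missing:
        raise ValueError(f"missing features: {missing}")
    price = features["base_cost"] * (1.2 + 0.1 * features["demand_index"])
    half_width = 0.05 * price   # placeholder uncertainty band
    return {"price": round(price, 2),
            "interval": [round(price - half_width, 2), round(price + half_width, 2)],
            "model_version": MODEL_VERSION}

class ScoringHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/v1/price":
            self.send_error(404)
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            body = json.loads(self.rfile.read(length))
            result, status = score(body), 200
        except (ValueError, TypeError) as exc:  # includes malformed JSON
            result, status = {"error": str(exc)}, 400
        payload = json.dumps(result).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)
```

For local experimentation, the handler can be served with `HTTPServer(("127.0.0.1", 8080), ScoringHandler).serve_forever()` after importing `HTTPServer` from `http.server`. Every channel then calls the same endpoint, and returning `model_version` in each response is what makes canary releases and rollbacks traceable later.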

This approach also fits microservices architectures, where different services (checkout, inventory, recommendations) can consume the same central model serving layer. It’s the backbone of many cloud-based predictive solutions. But it comes with operational requirements: MLOps practices for monitoring latency and errors, rollbacks, and security hardening.

Batch Scoring and Data Pipeline Integration

Not every decision needs or benefits from real-time scores. For many planning, targeting, and ranking use cases, batch scoring is both sufficient and more efficient. Here, data pipelines and feature engineering pipelines feed regularly scheduled scoring jobs.

Consider a churn risk use case where you update scores nightly. An ETL/ELT pipeline extracts fresh behavioral data, builds features, and writes them into a feature store or data warehouse. A batch scoring job then runs against this dataset and writes results back to tables that downstream systems can consume.
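The scoring step of such a nightly job might look like this Python sketch. An in-memory SQLite database stands in for the warehouse or feature store. The table names, columns, and logistic weights are hypothetical. In practice, `run_nightly_scoring` would be the body of a scheduled task in whatever orchestrator you already run; we deliberately don't show any orchestrator's API here.

```python
import math
import sqlite3
from datetime import datetime, timezone

def churn_model(days_since_login: float, tickets_30d: float) -> float:
    """Stand-in for a trained model: a logistic function with made-up weights."""
    z = -3.0 + 0.08 * days_since_login + 0.5 * tickets_30d
    return 1.0 / (1.0 + math.exp(-z))

def init_demo_db() -> sqlite3.Connection:
    """In-memory stand-in for the warehouse tables the pipeline would populate."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE churn_features (customer_id TEXT PRIMARY KEY, "
                 "days_since_login REAL, tickets_30d REAL)")
    conn.execute("CREATE TABLE churn_scores (customer_id TEXT PRIMARY KEY, "
                 "churn_prob REAL, scored_at TEXT)")
    conn.executemany("INSERT INTO churn_features VALUES (?, ?, ?)",
                     [("c1", 2, 0), ("c2", 40, 3)])
    return conn

def run_nightly_scoring(conn: sqlite3.Connection) -> int:
    """Score every customer in the feature table and upsert results for downstream tools."""
    scored_at = datetime.now(timezone.utc).isoformat()
    rows = conn.execute(
        "SELECT customer_id, days_since_login, tickets_30d FROM churn_features").fetchall()
    results = [(cid, churn_model(days, tickets), scored_at) for cid, days, tickets in rows]
    # INSERT OR REPLACE keeps one current score per customer, so reruns don't duplicate rows.
    conn.executemany("INSERT OR REPLACE INTO churn_scores VALUES (?, ?, ?)", results)
    conn.commit()
    return len(results)
```

Downstream CRMs or campaign tools then read `churn_scores` exactly as they would any other reporting table.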

In an enterprise, these workflows are typically built with transformation tools like dbt and orchestrated with Airflow or cloud-native schedulers. CRMs or marketing platforms then read the updated scores to build campaigns or lists. This is a core pattern in enterprise predictive analytics implementations: integrate the model into the same data pipelines that already power reporting, with added steps for scoring and actioning.

Cloud providers have published extensive best practices on this kind of integration—for example, Google Cloud’s MLOps design patterns and AWS’s guidance on integrating SageMaker models into data pipelines (Google Cloud MLOps guide). Mature MLOps embraces both API and batch patterns and chooses based on decision needs, not technology fashion.

Event-Driven and Workflow Engine Patterns

A third integration style sits between pure API calls and batch jobs: event-driven architectures. Here, business events ("order created," "claim filed," "ticket opened") trigger workflows that may include model scoring, rules evaluation, and human tasks. This is particularly powerful for decision orchestration in complex processes.

Imagine a smart support ticket routing and triage flow. When a new ticket comes in, an event fires. A workflow engine calls a language model to classify the issue, a priority model to predict urgency, and then a business rules engine to decide which queue the ticket goes to. Agents see prioritized tickets with context and suggested replies.
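The Python sketch below shows the structure of that flow. It makes several simplifying assumptions. An in-process publish/subscribe registry stands in for a message broker or workflow engine. A keyword rule stands in for the language-model classifier. The urgency heuristic, queue names, and thresholds are all hypothetical. What it does show is the separation of the pieces: models and rules are independent functions, and the workflow that composes them is defined in one place.

```python
from collections import defaultdict

_handlers = defaultdict(list)   # in-process stand-in for a message broker

def on(event_type):
    """Register a function to run whenever event_type is published."""
    def register(fn):
        _handlers[event_type].append(fn)
        return fn
    return register

def publish(event_type, payload):
    for handler in _handlers[event_type]:
        handler(payload)

def classify_issue(ticket):
    # Stub for a language-model classifier.
    text = ticket["body"].lower()
    if "refund" in text or "charge" in text:
        return "billing"
    if "error" in text or "crash" in text:
        return "technical"
    return "general"

def predict_urgency(ticket):
    # Stub for a priority model returning a score in [0, 1].
    score = 0.2
    if ticket.get("tier") == "enterprise":
        score += 0.4
    if "down" in ticket["body"].lower():
        score += 0.3
    return min(score, 1.0)

def route(category, urgency):
    # Business rules kept separate from the models so each can change independently.
    return "priority_queue" if urgency >= 0.8 else f"{category}_queue"

@on("ticket.opened")
def triage(ticket):
    category = classify_issue(ticket)
    urgency = predict_urgency(ticket)
    ticket.update(category=category, urgency=urgency, queue=route(category, urgency))

ticket = {"body": "Checkout is down, error 500", "tier": "enterprise"}
publish("ticket.opened", ticket)
print(ticket["queue"])  # priority_queue
```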

Workflow engines like Camunda, Temporal, or cloud-native workflow services can orchestrate these steps without rewriting core transactional systems. The key for integration framework design is to make models and rules addressable services and to centralize the definition of workflows. Done well, this architecture makes it much easier to evolve decision logic over time without destabilizing operational systems.

MLOps and Governance for Production-Grade Predictive Services

Monitoring, Drift Detection, and Model Lifecycle Management

Getting a model into production is just the beginning. Once predictions start shaping real decisions, model monitoring becomes non-negotiable. Data distributions shift, customer behavior evolves, regulations change—drift detection is how you avoid flying blind.

On the technical side, we track metrics like input feature distributions, prediction histograms, and performance against ground truth when it becomes available. On the business side, we watch KPIs: are conversion rates, churn, fraud losses, or SLA adherence moving in the expected direction? If a model’s behavior or impact deteriorates, alerts should fire in the same way they would for any critical production system.
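The text doesn't prescribe a drift metric. As one common example, here is a Population Stability Index (PSI) check in Python. Equal-width bins over the reference range are a simplification, and quantile bins are a frequent alternative. The 0.25 alert threshold is a widely cited rule of thumb, not a universal standard.

```python
import math

def psi(reference, live, bins=10, eps=1e-6):
    """Population Stability Index of `live` against `reference`.

    Equal-width bins over the reference range; out-of-range live values
    fall into the edge bins, and empty bins are floored at eps to avoid log(0).
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

def drift_alert(feature, reference, live, threshold=0.25):
    """Flag a feature whose PSI exceeds the chosen threshold."""
    score = psi(reference, live)
    return {"feature": feature, "psi": round(score, 4), "alert": score > threshold}
```

Running the same check on the prediction histogram, not just on the inputs, catches cases where the features look stable but the model's output has shifted.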

Robust model lifecycle management also includes A/B testing of models, canary releases, and rollbacks. You might run an experimental model on 10% of traffic, compare its impact to the incumbent, and only promote it when results are clearly better. This is standard practice in modern production deployment of software; MLOps brings those same disciplines to machine learning.
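A canary split needs stable assignment: the same customer should hit the same model on every request. One way to get that is deterministic hashing, sketched here in Python. The salt string and the 10% default are illustrative assumptions.

```python
import hashlib

def assign_variant(entity_id: str, canary_share: float = 0.10,
                   salt: str = "churn-model-v2-canary") -> str:
    """Deterministically send a fixed share of entities to the challenger model.

    Hashing (salt + id) keeps each customer on the same variant across
    requests, which a clean comparison against the incumbent needs.
    """
    digest = hashlib.sha256(f"{salt}:{entity_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000   # 0..9999 basis points
    return "challenger" if bucket < canary_share * 10_000 else "incumbent"
```

Setting `canary_share` to 0 acts as an immediate rollback. Changing the salt reshuffles which customers land in the canary for the next experiment.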

Governance, Compliance, and Risk Controls

As predictive analytics influences more consequential decisions, model governance and compliance move from "nice to have" to front-and-center. Governance spans documentation, approvals, audit trails, access control, and model registries. It also covers fairness, explainability, and alignment with internal policies and external regulations.

In financial services, for example, credit models must be documented, approved by risk committees, and explainable to regulators. Overrides must be tracked. Audit logs must show who changed what, when, and why. NIST and other bodies have begun publishing AI risk management frameworks to guide these practices (NIST AI RMF).

Governance ties directly back to decision workflows. Who is allowed to override a model’s recommendation? Under what conditions? How are those overrides logged and reviewed? A serious AI governance or responsible AI practice answers these questions within the same operationalization framework as deployment and monitoring. Governance that lives in a separate binder, disconnected from real systems, is just another form of non-operationalized analytics.
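Override rights and logging can be enforced in code rather than left in policy documents. The Python sketch below is hypothetical throughout: the role names, record fields, and list-based "audit log" stand in for your actual identity system and write-once storage. It refuses overrides from unauthorized roles and requires a reason. Every accepted override is recorded with the model version and recommendation it replaced.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

# Hypothetical policy: only these roles may override a model recommendation.
OVERRIDE_ROLES = frozenset({"senior_underwriter", "credit_risk_manager"})

@dataclass(frozen=True)
class OverrideRecord:
    case_id: str
    model_version: str
    model_recommendation: str
    final_decision: str
    user_id: str
    role: str
    reason: str
    recorded_at: str

def record_override(audit_log, *, case_id, model_version, recommendation,
                    decision, user_id, role, reason):
    """Validate an override against policy, then append it to an audit log."""
    if role not in OVERRIDE_ROLES:
        raise PermissionError(f"role {role!r} may not override model decisions")
    if not reason.strip():
        raise ValueError("an override reason is required")
    record = OverrideRecord(case_id, model_version, recommendation, decision,
                            user_id, role, reason.strip(),
                            datetime.now(timezone.utc).isoformat())
    # Serialized, append-only entries are easy to ship to a write-once store.
    audit_log.append(json.dumps(asdict(record)))
    return record
```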

Measuring Decision and ROI Impact from Predictive Analytics

Linking Predictions to Business KPIs and SLAs

We can’t talk about analytics ROI without connecting predictions to business outcomes. That starts before deployment, by defining clear success metrics tied to the decisions we’re changing. Are we trying to lift conversion by 10%, cut churn by 5 points, reduce fraud losses by 20%, or improve SLA adherence by 15%?

Then we instrument the decision workflow to capture the right signals. For a predictive targeting use case, we might compare response rates between a model-selected group and a business-as-usual group. For a fraud model, we track chargebacks, false positives, and investigation times. When feasible, we use controlled experiments—A/B tests or holdout groups—to get causal evidence of impact.
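For the targeting comparison, a two-proportion z-test is a standard way to check whether the model-selected group's response rate differs from the business-as-usual group's. This Python sketch assumes customers were randomly assigned to the two groups and that samples are large enough for the normal approximation. The conversion counts are invented for illustration.

```python
import math

def uplift_test(conv_model, n_model, conv_control, n_control):
    """Two-proportion z-test: model-selected group vs. randomized business-as-usual holdout."""
    p_model, p_control = conv_model / n_model, conv_control / n_control
    pooled = (conv_model + conv_control) / (n_model + n_control)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_model + 1 / n_control))
    if se == 0:
        raise ValueError("no variation in outcomes; cannot test")
    z = (p_model - p_control) / se
    return {
        "rate_model": p_model,
        "rate_control": p_control,
        "absolute_uplift": p_model - p_control,
        "relative_uplift": (p_model - p_control) / p_control if p_control else None,
        "z": z,
        "p_value": math.erfc(abs(z) / math.sqrt(2)),  # two-sided
    }

result = uplift_test(conv_model=600, n_model=5000, conv_control=500, n_control=5000)
print(round(result["absolute_uplift"], 4), round(result["p_value"], 4))
```

The absolute uplift, multiplied by the value of a conversion, is what turns a model experiment into an ROI figure.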

The key is that the unit of measurement is the decision, not the model. ROC AUC is a model metric; uplift in revenue or reduction in cost is a business metric. By building measurement plans into decision support systems themselves, we create a feedback loop: better data on decisions drives better models and processes.

Creating a Repeatable Operationalization Playbook

Once you’ve successfully operationalized one predictive use case, the goal should be to turn it into a repeatable playbook. That’s how enterprises scale predictive analytics internally instead of treating each project as a bespoke adventure.

A simple playbook might look like this: (1) Decision discovery and mapping; (2) Data and model design aligned to that decision; (3) Integration architecture for APIs, batch, or events; (4) MLOps setup for production deployment and monitoring; (5) Measurement and experimentation design; (6) Iteration and governance review. You can templatize artifacts at each step.

Over time, this becomes a core part of your operating model for end-to-end analytics services. It also shapes how you work with an AI implementation partner: instead of buying isolated models, you co-develop a portfolio of operationalized decision workflows, each with clear ROI and governance. That’s how predictive analytics stops being a series of experiments and becomes an engine of transformation.

How Buzzi.ai Delivers Operationalized Predictive Analytics Services

Decision-First Discovery and Design

At Buzzi.ai, we’ve built our predictive analytics consulting approach around one principle: start from the decision, not the dataset. Our engagements begin with discovery focused on mapping decision points, workflows, and KPIs—not just profiling tables in a warehouse.

We run alignment workshops with business stakeholders, operations leads, and tech teams to define who decides what, how often, and with what constraints. We identify candidate use cases where predictive analytics services for business decision making could materially shift outcomes. And we’re explicit about the systems and channels where decisions live so we can design integration from day one.

This work is formalized in our AI discovery and decision design workshops, which help clients move from "we need a churn model" to "we need a reactivation and retention decision workflow with clear metrics." It’s a subtle shift in language that has a huge impact on whether models ever leave the lab.

Full-Stack Deployment, Integration, and Continuous Improvement

From there, we deliver full-stack implementation: model development, deployment, and integration into CRMs, ERPs, support tools, and custom systems. Our teams combine data science with engineering and automation expertise so that predictive analytics services ship as functioning decision capabilities, not as standalone artifacts.

We leverage our strengths in AI agents, workflow automation, and predictive analytics and forecasting services to build solutions that fit your existing tools. That might mean API-first real-time scoring for a digital channel, batch pipelines for campaign targeting, or event-driven flows for support ticket triage. Under the hood, we implement robust MLOps practices so models are monitored, retrained, and governed over time.

A typical engagement moves through phases: (1) Decision and workflow discovery; (2) Data and feature engineering pipeline setup; (3) Model development and validation; (4) Integration into business systems via APIs, batch, or workflow engines; (5) Go-live with shared dashboards, SLAs, and alerting; (6) Continuous improvement based on KPI movement and user feedback. In short, our enterprise predictive analytics implementation services are designed around operationalization from day zero.

Conclusion: Make Predictions Serve Decisions, Not the Other Way Around

When predictive analytics services stop at model delivery, they almost never change real decisions. The PowerPoint looks good; the business doesn’t move. To escape that trap, we have to treat operationalization—not accuracy—as the core of the service.

Operationalization-inclusive services design and wire predictions into decision workflows, systems, and journeys. They build on solid integration patterns (APIs, batch, events) and robust MLOps and governance to keep models healthy in production. And they close the loop by measuring decision impact and ROI, feeding that learning back into both models and processes.

If you’re planning or running an analytics initiative today, a practical next step is to audit one project for operationalization gaps: Where do predictions enter the workflow? Who sees them? What actually changes in the tools and decisions? If those answers are fuzzy, that’s exactly where an implementation-focused partner like Buzzi.ai can help. Explore our predictive analytics and forecasting services to start a decision-first discovery session and design a path to production that actually changes outcomes.

FAQ

What are predictive analytics services beyond basic model development?

Predictive analytics services go beyond just building models and delivering accuracy metrics. At their best, they include decision discovery, workflow design, integration into real systems, and ongoing MLOps. The goal is not a score in a notebook, but a change in how your business makes and executes decisions every day.

What does it mean to operationalize predictive analytics in practice?

Operationalizing predictive analytics means embedding predictions into production decision workflows, applications, and processes. It involves wiring models into CRMs, ERPs, support tools, or custom systems via APIs, batch jobs, or workflow engines. It also includes monitoring, retraining, governance, and change management so that models remain accurate and trusted over time.

Why do many predictive analytics projects fail to impact real business decisions?

Most failures stem from a lack of focus on decision workflows and integration, not from poor algorithms. Projects often stop at model delivery, with no budget or ownership for getting predictions into frontline tools and processes. Without clear decision owners, MLOps capabilities, and incentives aligned to using the model, the organization simply keeps making decisions the old way.

How can we integrate predictive analytics into existing business workflows and tools?

Start by mapping where decisions happen today—who makes them, in which systems, and under what constraints. Then choose appropriate integration patterns: inline indicators or recommendations in CRM, batch-scored lists for campaigns, or event-driven routing in support tools. Many organizations work with an implementation-focused partner like Buzzi.ai, leveraging our AI discovery and decision design workshops to design these workflows and integrations end-to-end.

What are common patterns for connecting predictive models to CRM, ERP, and support systems?

Common patterns include API-first real-time scoring, where business applications call a model-serving API on demand; batch scoring pipelines that write results back into data warehouses or application databases; and event-driven workflows that trigger scoring and routing based on business events. In each case, the prediction is embedded directly into the user’s existing tools, rather than sitting in a separate dashboard.

Which MLOps practices are essential for production-grade predictive analytics services?

Essential MLOps practices include robust model monitoring (both technical metrics and business KPIs), drift detection, structured model lifecycle management, and automated retraining pipelines. You also need strong observability around latency, errors, and usage, along with processes for A/B testing models and safe rollbacks. Together, these practices ensure that models remain reliable components of your production environment.

How should we monitor predictive models and detect performance or data drift?

Monitoring should track input distributions, prediction distributions, and downstream performance against ground truth when available. Sudden shifts in these patterns may indicate data or concept drift. In parallel, monitor business metrics tied to the model’s decisions; unexpected changes there can be early signals that a model’s real-world impact is degrading and that retraining or redesign is needed.

How can we measure ROI and decision impact from predictive analytics services?

Define clear business KPIs and SLAs before deployment, such as conversion lift, churn reduction, fraud loss reduction, or SLA improvements. Instrument decision workflows to compare model-influenced decisions against baselines, ideally using A/B tests or control groups. By quantifying changes in these metrics, you can attribute value to predictive analytics and guide future investments.

What should we look for when evaluating predictive analytics service providers?

Look for providers who talk as much about decisions, workflows, integration, and MLOps as they do about algorithms. They should offer end-to-end capabilities: decision discovery, system integration, deployment, monitoring, and governance. Strong references for operationalized projects, not just proofs-of-concept, are a good sign that they can deliver real business impact.

How are Buzzi.ai’s predictive analytics services different from traditional modeling-focused vendors?

Buzzi.ai focuses on operationalizing predictive analytics from day one, not just building models. We start with decision-first discovery, design workflows that consume predictions, and integrate models into your existing tools and systems. Our teams then provide ongoing MLOps, monitoring, and optimization so that predictive analytics remains a living, high-impact capability rather than a one-off experiment.