Design Cloud AI Platforms That Keep Your Multi‑Cloud Options Open

Design your cloud AI platform for multi-cloud flexibility, not lock-in. Learn the architectures, abstractions, and patterns that keep your options open.

The biggest hidden cost in cloud AI platform development isn’t your monthly bill—it’s the loss of strategic options when your entire AI stack quietly fuses to a single hyperscaler. By the time finance notices the spend, engineering has already wired data, models, and pipelines into proprietary services that are painful and expensive to undo.

If you’re building serious AI capabilities—recommendations, forecasting, personalization, automation—you’re not just deploying apps. You’re creating an AI platform: shared data, ML, serving, and operations that every digital product depends on. When that platform is accidentally designed as a single-vendor product, your multi-cloud strategy becomes a slide in a board deck, not something you can actually execute.

In this article, we’ll break down how to design and evolve cloud AI platforms that stay portable. We’ll look at architectures, abstractions, and patterns that preserve options: which layers must remain neutral, where you can safely lean on native services, and how to avoid deep vendor lock-in while still shipping fast. And we’ll share how we at Buzzi.ai approach multi-cloud AI so enterprises gain leverage instead of losing it.

What a Cloud AI Platform Really Is—and Why Lock-In Sneaks In

From Cloud Apps to Cloud AI Platforms: What’s Different

Most teams underestimate how different a cloud AI platform is from a traditional cloud application. A typical web app might be a monolith talking to a single database, plus some background jobs and a CDN. The lifecycle is relatively simple: deploy new versions, scale horizontally, back up the database.

In contrast, a cloud AI platform is a shared nervous system for every intelligent capability in your business. It pulls in raw data from many systems, transforms it into features, trains models, stores them, serves them in production, and monitors their behavior. You’re doing AI platform engineering, not just app development.

Concretely, that means your platform usually includes:

- Data ingestion and transformation for cloud-native AI workloads.

- A feature store for reusable, governed features across teams.

- Experiment tracking and model registry for lineage and reproducibility.

- MLOps pipelines for training, evaluation, and deployment.

- Model serving infrastructure for low-latency inference.

- Monitoring, drift detection, and governance controls.

Unlike a single app with a single database, a cloud AI platform is a long-lived, multi-tenant asset. Dozens of products and teams depend on it. That longevity means every architectural choice compounds over time, and reversing those choices—like shifting providers—is far harder than it looks early on.

How Vendor Lock-In Quietly Accumulates in AI Platforms

Lock-in doesn’t arrive with a big announcement. It accumulates through a series of small, reasonable decisions that add up. A team chooses a proprietary managed database because it’s fast to set up. Another uses the cloud provider’s feature store and managed ML pipeline service. Someone else plugs directly into the provider’s AI APIs and serverless functions.

Each decision improves short-term velocity. But each one also embeds assumptions about that provider’s APIs, IAM model, event formats, and operational tooling. Over time, your codebase and data flows become deeply coupled to one cloud’s semantics, and your cloud vendor neutrality quietly disappears.

Common sources of lock-in in cloud AI platform development include:

- Proprietary managed databases and analytics engines.

- Vendor-specific messaging and streaming systems.

- Cloud-native feature stores and model registries with closed formats.

- Serverless runtimes and function platforms wired directly into core workflows.

- Identity and access systems embedded through every component.

The compounding effect is brutal. The more of these services you wire together, the harder it becomes to change any single piece. Migration stops being a technical exercise and becomes a full-blown cloud migration strategy project—expensive, risky, and easy to postpone until it’s too late.

That’s where business risk shows up: limited leverage in price negotiations, regions that don’t match your growth, and “surprise” regulatory constraints that your current provider can’t meet. What started as a fast path to value now constrains your evolution path.

Why Optionality Matters More Than Cloud Spend in AI

The Strategic Cost of Single-Cloud AI Platforms

When executives talk about cloud, they usually start with cost. For AI, the more important metric is optionality—your ability to change direction quickly when prices, regulations, or technology change. A single-cloud AI platform erodes that optionality.

Today, AI platforms are becoming the central nervous systems of digital businesses. They drive recommendations, dynamic pricing, fraud detection, personalization, and automation. If that platform is locked to one provider, the risk is bigger than a typical line-of-business app. You’re effectively outsourcing the pace and direction of your AI roadmap to your cloud of choice.

External pressures amplify this risk. Boards and regulators increasingly ask about multi-cloud resilience and hybrid cloud AI strategies. Data residency rules multiply, and sovereignty concerns and geopolitical tensions make “just pick a region” an incomplete answer. In some markets, the ability to move workloads is no longer optional; it is a condition of entering or staying.

There are real-world examples where companies have delayed product launches for months because their AI stack couldn’t legally run in a given country or provider region. Others have paid large premiums for specialized hardware on a single cloud because their models and pipelines couldn’t burst to another provider. These are not theoretical problems; industry reports from analysts such as Gartner on multi-cloud strategy and lock-in describe the same patterns.

Optionality as Financial and Negotiation Leverage

Optionality is not just a technical virtue; it’s financial leverage. If your workloads can credibly run on multiple clouds, your procurement negotiations change. Hyperscalers respond very differently to a customer who can—and occasionally does—move AI workloads elsewhere.

A platform designed for multi-cloud flexibility lets you adopt new accelerators, services, or regions without rewriting half your stack. When a new GPU generation arrives on another provider, or a local cloud offers better regulatory fit, you can reallocate workloads the way a CFO reallocates capital. Portability is liquidity for compute.

For CFOs and CIOs, this matters more than purely technical elegance. It’s the difference between using committed spend discounts as a lever and being forced to accept whatever pricing and roadmap your current provider offers. Enterprises increasingly look to cloud AI platform consultancies not just for architecture, but for this kind of negotiating position.

In short, multi-cloud capability is a business-level option. You don’t have to exercise it constantly. You just need it to be credible.

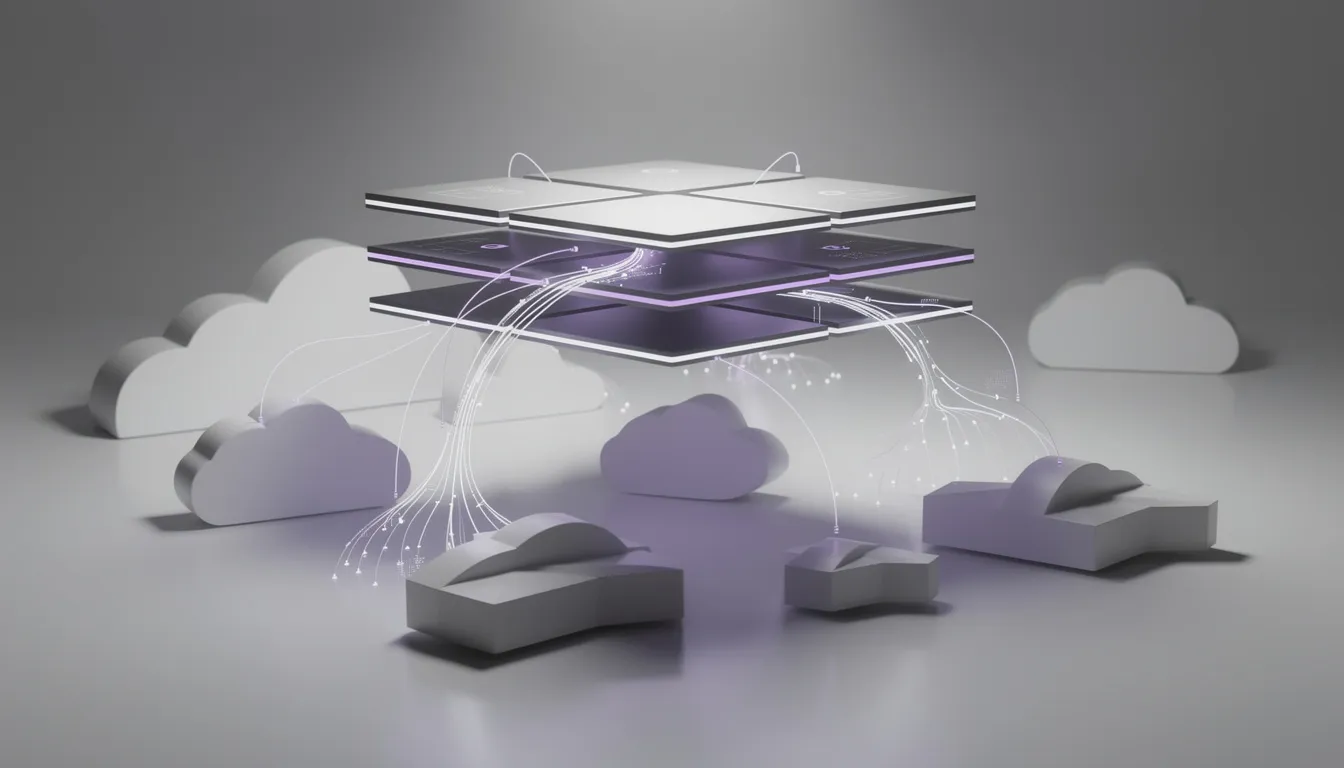

Core Design Principle: Separate Data, Control, and Compute Planes

The Three-Plane Model for Multi-Cloud AI Platforms

If we want optionality, we need structure. The most useful mental model we’ve found for how to design a portable cloud AI platform is to separate three planes: data, control, and compute. Each plane has its own concerns, technology choices, and portability strategy.

The data plane covers storage, feature stores, and the pipelines that move and transform data. The control plane handles orchestration, configuration, governance, and policy: what runs where, when, and under what rules. The compute plane is where training and inference workloads execute.

When these planes are cleanly separated, you reduce coupling to any specific cloud service. You can pursue a cloud-agnostic design at the plane boundaries, even if inside each plane you pragmatically use native services. This is how you move toward a vendor-neutral architecture without becoming dogmatic.

For example, your data plane might standardize on S3-compatible object storage and open table formats across clouds, while your compute plane runs on a Kubernetes-based AI platform. The control plane then uses GitOps and infrastructure as code to describe the desired state, regardless of which provider is executing it. The cloud becomes an interchangeable substrate underneath.

Abstraction Patterns That Keep Each Plane Portable

Once you adopt the three-plane model, you can design deliberate abstractions per plane.

For the data plane, data plane abstraction means:

- Using S3-compatible APIs and open formats (Parquet files, Delta Lake or Apache Iceberg tables) instead of proprietary tables.

- Choosing feature stores that can run on multiple clouds and support pluggable storage backends.

- Designing pipelines in portable tools rather than single-cloud-only services.
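As a minimal sketch of the data plane idea, pipeline code can write against a small storage interface rather than a provider SDK. Everything named here (`ObjectStore`, `InMemoryStore`, `LocalDirStore`, `publish_snapshot`, the key layout) is an illustrative assumption; a real deployment would plug an S3-compatible client into the same interface and write actual Parquet bytes.

```python
import tempfile
from pathlib import Path
from typing import Protocol


class ObjectStore(Protocol):
    """The only storage surface pipeline code is allowed to see."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend, useful for unit tests."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class LocalDirStore:
    """Stand-in for a bucket; an S3-compatible client would slot in here."""

    def __init__(self, root: Path) -> None:
        self._root = root

    def put(self, key: str, data: bytes) -> None:
        path = self._root / key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self._root / key).read_bytes()


def publish_snapshot(store: ObjectStore, dataset: str, day: str, payload: bytes) -> str:
    """Write one partition under a hypothetical date-partitioned key layout."""
    key = f"{dataset}/dt={day}/part-0000.parquet"
    store.put(key, payload)
    return key


# The same pipeline function runs unchanged against either backend.
stores = (InMemoryStore(), LocalDirStore(Path(tempfile.mkdtemp())))
keys = [publish_snapshot(s, "orders", "2024-01-01", b"placeholder bytes") for s in stores]
```

The design choice is that only the backend classes know where bytes physically live, so adding a second cloud means adding a backend, not editing pipelines.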

For the control plane, the goal is portability of intent. Instead of wiring everything into vendor-specific pipeline tools, use cross-cloud orchestrators and GitOps workflows. Define infrastructure and ML workflows via infrastructure as code and portable microservices patterns. Your MLOps pipelines should be able to execute on different substrates with configuration changes, not major rewrites.
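One way to read "portability of intent" is that pipeline steps are declared once and only a small substrate config changes per cluster. The sketch below renders steps as Kubernetes `batch/v1` Job manifests in plain dictionaries; the `Step` and `Substrate` classes, image names, namespaces, and node labels are hypothetical, and a real orchestrator would add far more detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One pipeline step, declared without reference to any cloud."""
    name: str
    image: str
    command: tuple[str, ...]


@dataclass(frozen=True)
class Substrate:
    """Per-cluster settings; the values used below are hypothetical."""
    namespace: str
    node_selector: dict[str, str]


PIPELINE = (
    Step("validate", "registry.example/ml/validate:1.0", ("python", "validate.py")),
    Step("train", "registry.example/ml/train:1.0", ("python", "train.py")),
)


def to_job_manifest(step: Step, substrate: Substrate) -> dict:
    """Render one step as a Kubernetes batch/v1 Job manifest."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": step.name, "namespace": substrate.namespace},
        "spec": {
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "nodeSelector": dict(substrate.node_selector),
                    "containers": [
                        {
                            "name": step.name,
                            "image": step.image,
                            "command": list(step.command),
                        }
                    ],
                }
            }
        },
    }


cloud_a = Substrate("ml-pipelines", {"pool": "general-a"})
cloud_b = Substrate("ml-pipelines", {"pool": "general-b"})
jobs_a = [to_job_manifest(step, cloud_a) for step in PIPELINE]
jobs_b = [to_job_manifest(step, cloud_b) for step in PIPELINE]
```

Here the two sets of manifests differ only in the node selector, which is the kind of difference that belongs in configuration rather than in pipeline code.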

For the compute plane, AI workload portability comes from containers and orchestration. Standardize on Kubernetes (including managed Kubernetes services where useful), use a service mesh where needed, and keep your model serving infrastructure decoupled from any one provider’s serving product. Serverless can play a role, but treat serverless AI workloads as implementations behind an internal API gateway abstraction, not as your primary interface.

Concrete Abstraction Patterns for Portable AI Workloads

Portability for Data Pipelines and Feature Stores

Let’s make this concrete. For data pipelines, the main choice is between provider-native integration services and portable orchestrators running on compute you control. To keep cloud-native AI workloads portable, lean toward the latter: containerized schedulers and workflow engines that can run on any Kubernetes cluster or VM pool.

In practice, this means designing ingestion and ETL/ELT so that the “brains” live in code and portable tools, not in a proprietary UI or managed-only pipeline engine. That way, when you extend to a second cloud, you redeploy the same pipelines near new data sources rather than rewriting them from scratch.

Feature stores deserve particular attention. They sit at the intersection of data plane abstraction and online serving. A portable feature store design typically includes:

- Offline store in object storage using open formats.

- Online store in a database or cache that has equivalents on each cloud.

- Feature computation pipelines in a portable orchestrator.

- Clear separation between logical feature definitions and physical storage.

This design lets you move or replicate your feature store across clouds with minimal application changes. When data residency and sovereignty rules require per-country storage, a useful pattern is “local storage, global metadata and logic”: keep raw data and features local, but centralize definitions and governance.
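The “local storage, global metadata and logic” pattern can be sketched as a catalog that holds feature definitions once and resolves them to region-specific storage on request. The class names, URIs, and region codes below are assumptions made for illustration and do not describe any particular feature store product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureDefinition:
    """Logical definition: shared globally, contains no storage details."""
    name: str
    entity: str
    dtype: str
    owner: str


@dataclass(frozen=True)
class PhysicalBinding:
    """Physical storage for one region; data stays where it is bound."""
    region: str
    offline_uri: str   # object storage prefix holding open-format files
    online_store: str  # identifier of the regional key-value store


class FeatureCatalog:
    """Central metadata; raw data and feature values never pass through it."""

    def __init__(self) -> None:
        self._definitions: dict[str, FeatureDefinition] = {}
        self._bindings: dict[str, PhysicalBinding] = {}

    def register(self, definition: FeatureDefinition) -> None:
        self._definitions[definition.name] = definition

    def bind_region(self, binding: PhysicalBinding) -> None:
        self._bindings[binding.region] = binding

    def offline_path(self, feature: str, region: str) -> str:
        """Resolve a logical feature to its regional offline location."""
        definition = self._definitions[feature]
        binding = self._bindings[region]
        return f"{binding.offline_uri}/{definition.entity}/{definition.name}"


catalog = FeatureCatalog()
catalog.register(FeatureDefinition("avg_basket_7d", "customer", "float", "growth-team"))
catalog.bind_region(PhysicalBinding("eu", "s3://features-eu", "kv-eu"))
catalog.bind_region(PhysicalBinding("in", "s3://features-in", "kv-in"))

eu_path = catalog.offline_path("avg_basket_7d", "eu")
in_path = catalog.offline_path("avg_basket_7d", "in")
```

Adding a country under this sketch means adding one binding; the feature definition and its ownership stay untouched.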

Portability for Training Workloads Across Clouds

Training workloads are often the most compute-intensive part of cloud AI platform development. They’re also where capacity constraints (especially GPUs) can hurt most. Portability here is a hedge against regional shortages and pricing swings.

The baseline pattern is simple: containerize your training jobs and run them on managed Kubernetes or batch compute services on each cloud. Use framework-agnostic pipelines—TensorFlow, PyTorch, or others—wrapped in containers, with resource specs externalized in configuration. This supports multi-cloud architecture where the same pipeline can run in multiple regions or providers.

GPU and accelerator differences are handled by configuration and IaC. You describe clusters in code, including node types, autoscaling, and storage. When you need to burst to a second cloud, your cloud migration strategy is “apply the same IaC with provider-specific tweaks,” not “figure it out again from scratch.”
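“The same IaC with provider-specific tweaks” can be approximated as a base job spec plus small per-provider overrides. This is a sketch under assumptions: the field names, provider labels, and pool names are invented for illustration, and real infrastructure-as-code tools have their own layering mechanisms.

```python
from copy import deepcopy

# Base spec shared by every provider (hypothetical field names).
BASE_TRAINING_JOB = {
    "image": "registry.example/ml/train:1.0",
    "args": ["--epochs", "10"],
    "resources": {"cpu": "8", "memory": "64Gi", "accelerators": 1},
    "node_selector": {},
}

# Only the differences live here.
PROVIDER_OVERRIDES = {
    "cloud-a": {"node_selector": {"pool": "gpu-a"}},
    "cloud-b": {"node_selector": {"pool": "gpu-b"}, "resources": {"accelerators": 2}},
}


def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict where nested override keys replace base keys."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def training_job_for(provider: str) -> dict:
    """Base spec plus that provider's overrides; unknown providers get the base."""
    return deep_merge(BASE_TRAINING_JOB, PROVIDER_OVERRIDES.get(provider, {}))


job_a = training_job_for("cloud-a")
job_b = training_job_for("cloud-b")
```

Keeping overrides this small makes it obvious in review when a provider-specific assumption is creeping into the shared spec.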

For experiment tracking and model registry, favor tools that can run on any cloud and store data in open formats. That way, your training history and artifacts don’t become a hidden source of lock-in. Open-source stacks and published research on multi-cloud architecture patterns give good guidance here.

Portability for Inference and Real-Time Serving

Inference is where AI meets the real world, and where outages and latency directly hit revenue. For AI workload portability at serving time, start with a simple rule: keep your front door neutral.

Expose models through a stable, provider-neutral API gateway. Behind that, run your model serving infrastructure on Kubernetes, using containers for each model or model family. A service mesh for AI gives you cross-cloud traffic management, canarying, and blue/green deploys using the same patterns everywhere.

This design makes cross-cloud deployment straightforward. You can deploy the same model to multiple regions and clouds, routing traffic based on latency, cost, or regulatory needs. During an outage in one provider, you shift traffic to another with DNS or gateway-level changes, not a mad scramble to port models.
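The routing decision described here can be illustrated with a small selection function: filter endpoints by health and jurisdiction, then pick the lowest latency. It is a simplified sketch with made-up endpoint names and latency figures; in practice this logic would live in a gateway or DNS policy and would also weigh cost.

```python
from dataclasses import dataclass, replace
from typing import Optional, Sequence


@dataclass(frozen=True)
class Endpoint:
    name: str
    provider: str
    jurisdiction: str
    latency_ms: float
    healthy: bool = True


def choose_endpoint(
    endpoints: Sequence[Endpoint], required_jurisdiction: Optional[str] = None
) -> Endpoint:
    """Pick the lowest-latency healthy endpoint that meets the residency rule."""
    candidates = [
        e
        for e in endpoints
        if e.healthy
        and (required_jurisdiction is None or e.jurisdiction == required_jurisdiction)
    ]
    if not candidates:
        raise LookupError("no healthy endpoint satisfies the routing constraints")
    return min(candidates, key=lambda e: e.latency_ms)


endpoints = [
    Endpoint("eu-a", "cloud-a", "EU", 40.0),
    Endpoint("eu-b", "cloud-b", "EU", 55.0),
    Endpoint("us-a", "cloud-a", "US", 20.0),
]

# Simulate an outage across one provider by marking its endpoints unhealthy.
during_outage = [
    replace(e, healthy=False) if e.provider == "cloud-a" else e for e in endpoints
]

normal_choice = choose_endpoint(endpoints, "EU")
failover_choice = choose_endpoint(during_outage, "EU")
```

Because the same model is already deployed behind both EU endpoints, failover in this sketch is only a change in which endpoint is selected.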

Serverless has a place, especially for bursty or low-traffic serverless AI workloads. The key is wrapping these in internal APIs so your applications don’t know or care whether a model runs on functions, containers, or a specialized managed service. If you later need to move that workload, you reimplement the function behind the same interface.
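A minimal sketch of that wrapping, assuming a hypothetical internal `Predictor` interface: application code depends only on `predict`, while one implementation stands in for a container-hosted model and another for a function with an event-style handler. Both compute a toy linear score locally so the example runs; neither calls a real serving product.

```python
from typing import Callable, Protocol, Sequence


class Predictor(Protocol):
    """Internal interface; applications never see the runtime behind it."""

    def predict(self, features: Sequence[float]) -> float: ...


class ContainerPredictor:
    """Stand-in for a container-hosted model; scores locally in this sketch."""

    def __init__(self, weights: Sequence[float]) -> None:
        self._weights = list(weights)

    def predict(self, features: Sequence[float]) -> float:
        return sum(w * x for w, x in zip(self._weights, features))


class FunctionPredictor:
    """Stand-in for a model behind a serverless-style event handler."""

    def __init__(self, handler: Callable[[dict], dict]) -> None:
        self._handler = handler

    def predict(self, features: Sequence[float]) -> float:
        response = self._handler({"features": list(features)})
        return float(response["score"])


def example_handler(event: dict) -> dict:
    """Hypothetical function body using the same toy weights."""
    weights = [0.5, 2.0]
    return {"score": sum(w * x for w, x in zip(weights, event["features"]))}


def is_high_risk(predictor: Predictor, features: Sequence[float], threshold: float = 1.0) -> bool:
    """Application code: unchanged whichever runtime serves the model."""
    return predictor.predict(features) >= threshold


via_container = is_high_risk(ContainerPredictor([0.5, 2.0]), [1.0, 0.5])
via_function = is_high_risk(FunctionPredictor(example_handler), [1.0, 0.5])
```

Moving the workload later means writing a new class that satisfies `Predictor`, while `is_high_risk` and its callers stay as they are.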

Balancing Native Cloud AI Services with Portable Components

A Decision Framework: Where Native Services Make Sense

Being portable doesn’t mean avoiding native cloud services. It means using them with intent. Native managed services are fantastic for speed—and sometimes, speed is the competitive edge that matters most.

The right question isn’t “native vs portable?” but “what is the migration cost if we ever need to move this?” For hybrid cloud AI platforms, the criteria include:

- Is this capability differentiating or a commodity?

- Where does data gravity sit, and how hard is it to move?

- What are the compliance and residency constraints?

- What’s the expected lifetime of this service in our architecture?

Use native services where they clearly accelerate differentiation or rely on specialized hardware, but design with an exit plan. That might mean ensuring bulk export paths exist, wrapping APIs in thin adapters, or keeping domain logic outside of proprietary DSLs. This is how you sustain cloud vendor neutrality while still shipping fast.

When to Insist on Open-Source or Portable Alternatives

Some layers are too central to leave entirely to one provider. In our experience, it’s worth insisting on portable or open-source options for:

- Feature stores and model registries.

- Pipeline orchestration and workflow engines.

- Observability and monitoring (metrics, logs, traces).

- Core security policy models and AI governance and compliance tooling.

The ecosystem here is mature. Open projects like Kubeflow or MLflow can run across clouds and integrate with different storage and compute options. Similarly, CNCF observability stacks give you a common lens on multi-cloud operations.

From a risk perspective, portable tools simplify audits and regulatory expansion. They also align with guidance from groups like the Cloud Security Alliance and NIST’s cloud security recommendations, which emphasize avoiding concentration risk. The goal isn’t anti-cloud—it’s pro-optionality. You mix and match with intent.

Refactoring an Existing Single-Cloud AI Platform Toward Portability

Assessing Your Current Lock-In and Setting Priorities

Many teams aren’t starting from a blank slate. They already have a single-cloud AI platform and a nagging sense that it’s too tightly coupled. The good news: you can move toward portability without a big-bang rewrite.

The first step is an honest inventory. List every managed service, proprietary API, and provider SDK in your AI stack. Include storage systems, databases, messaging, ML services, IAM integrations, monitoring, and anything tied to your MLOps pipelines. This AI readiness assessment gives you a map of your current vendor lock-in.

Next, classify things along two axes: business criticality and “escape difficulty.” A low-criticality, easy-to-replace service can wait. A core model registry with proprietary formats is both high-criticality and high escape difficulty. These are the places where help from a consultancy experienced in enterprise cloud AI platforms can be especially valuable.
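The two-axis classification can be kept as a simple, reviewable table in code. In this sketch the 1 to 5 scales, the threshold of 4, the service names, and the labels for the two mixed quadrants are assumptions added for illustration; only the "can wait" and high/high cases come from the discussion above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceDependency:
    name: str
    criticality: int        # 1 (low) to 5 (high), scored by the team
    escape_difficulty: int  # 1 (easy to replace) to 5 (hard to replace)


def priority(dep: ServiceDependency, threshold: int = 4) -> str:
    """Map a dependency to a quadrant of the criticality/difficulty grid."""
    critical = dep.criticality >= threshold
    hard = dep.escape_difficulty >= threshold
    if critical and hard:
        return "address first"
    if critical:
        return "wrap behind an internal API"  # assumed label for this quadrant
    if hard:
        return "watch and avoid deepening"    # assumed label for this quadrant
    return "can wait"


inventory = [
    ServiceDependency("proprietary model registry", 5, 5),
    ServiceDependency("managed inference endpoint", 5, 2),
    ServiceDependency("vendor analytics notebook", 2, 4),
    ServiceDependency("image thumbnail function", 1, 1),
]

# Highest combined score first, so the review starts with the riskiest items.
ranked = sorted(
    inventory, key=lambda d: d.criticality * d.escape_difficulty, reverse=True
)
plan = {dep.name: priority(dep) for dep in ranked}
```

The scores themselves are judgment calls; the value of writing them down is that the team can argue about specific numbers rather than a general sense of being locked in.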

It’s also useful to establish a maturity model—from fully single-cloud, deeply locked-in, to multi-cloud-ready with separated planes and portable interfaces. Your goal isn’t perfection in one step; it’s to identify the highest-leverage changes that unlock future flexibility.

A Pragmatic Step-by-Step Refactoring Path

With the map in hand, you can plan a realistic refactoring path. Think in phases, not a single migration event.

Phase 1 is to decouple interfaces. Introduce internal APIs and service layers between your applications and provider-specific services. This alone reduces risk because it gives you a place to swap implementations later. It also nudges your architecture toward portable microservices.

Phase 2 introduces portable components where feasible. Migrate observability and logging first—they’re usually easier to move and beneficial across the board. Then containerize model serving, shift pipelines into portable orchestrators, and use infrastructure as code to codify your new patterns. At this point, you’re building a real multi-cloud architecture.

Phase 3 is to pilot cross-cloud deployment for non-critical workloads: maybe a secondary inference service or a batch training job. Use this to validate your security, observability, and governance across clouds. Phase 4 is consolidation: turn the working patterns into templates, platform tooling, and documentation teams can reuse.

Along the way, having access to multi-cloud AI platform development services can accelerate the transition and de-risk early pilots. You don’t have to invent the playbook from scratch.

How Buzzi.ai Designs Cloud AI Platforms for Strategic Optionality

Our Architecture Principles for Vendor-Neutral AI Platforms

At Buzzi.ai, we treat cloud AI platform development as a strategic exercise, not just an implementation project. Our architecture principles center on the three-plane model, abstraction-first design, open standards wherever practical, and observability and governance as first-class concerns.

Every engagement starts with discovery: your business goals, regulatory and sovereignty constraints, existing cloud commitments, and AI roadmap. Only then do we recommend specific patterns for AI platform engineering and AI strategy. The objective isn’t to uproot your current cloud provider; it’s to increase your leverage and freedom.

In practice, this often means designing a portable core with flexible edges. For example, we may recommend an open feature store and registry, with native managed Kubernetes beneath it, and a portable observability stack across clouds. For organizations scaling advanced analytics, we also help build cloud AI platforms for predictive analytics at scale.

Where Buzzi.ai Fits in Your Multi-Cloud AI Journey

Our role depends on where you are in your journey. For some enterprises, we start with an assessment and roadmap: mapping lock-in, defining target maturity, and prioritizing moves. For others, we co-design the platform architecture, then help implement the critical abstractions—data plane, serving layer, and orchestration.

Because we build real systems—like AI voice bots and agents for WhatsApp in emerging markets—we understand how AI agent development on top of portable cloud AI platforms has to cope with network realities, local regulations, and diverse cloud options. That pragmatism carries over into everything we do.

Ultimately, we position ourselves as a long-term partner. As AI and cloud markets evolve, we help you adapt: adding new providers, regions, and capabilities without destabilizing what already works. If you want an enterprise AI solution that keeps your multi-cloud options truly open, that’s exactly what we’re here to build with you.

Conclusion: Build Cloud AI Platforms That Keep Options Open

Cloud AI platforms are not just another IT system. They’re long-lived strategic assets that increasingly determine how fast your business can learn and adapt. Designing them as single-vendor products is a hidden but massive risk—and one that becomes obvious only when it’s expensive to fix.

Separating data, control, and compute planes—and deliberately abstracting them—gives you real multi-cloud flexibility. Portability for data pipelines, training, and serving doesn’t happen by accident; it’s the result of specific architectural choices about formats, orchestrators, and compute substrates.

Balancing native services with portable components is a strategic decision, not an afterthought. You can move quickly with managed offerings while preserving your ability to change providers, regions, or accelerators when the environment shifts.

If you’re serious about keeping your options open, now is the moment to evaluate how locked-in your current AI platform really is. When you’re ready to design or refactor a platform that stays flexible by default, we’d love to talk—reach out to Buzzi.ai via our services or contact page and let’s map a path to a multi-cloud future.

FAQ

What is a cloud AI platform and how is it different from traditional cloud applications?

A cloud AI platform is shared infrastructure that handles data ingestion, feature engineering, model training, model storage, and model serving for many use cases at once. Traditional cloud apps are usually single applications talking to one or a few databases with a simpler lifecycle. Because AI platforms become long-lived, central assets, architectural choices like portability and vendor dependence matter much more over time.

Why do most cloud AI platforms end up locked into a single cloud provider?

Most teams optimize for speed in the early stages, adopting proprietary managed databases, ML services, and serverless runtimes from their primary cloud. Each choice is locally rational but collectively creates deep coupling to one provider’s APIs, IAM model, and operational tools. Over time, reversing those decisions requires a costly, risky migration project that’s easy to postpone.

What are the main business risks of building a single-vendor cloud AI platform?

The biggest risks are loss of bargaining power, limited regional and regulatory flexibility, and slower adoption of new technologies. If your AI workloads can run only in one provider’s regions, you may be blocked from entering markets with strict data residency rules or pay a premium for scarce resources like GPUs. A single-vendor platform also creates concentration risk: outages, policy changes, or pricing shifts hit your entire AI estate at once.

How can I design my cloud AI platform for multi-cloud flexibility from day one?

Start by separating the data, control, and compute planes, then define clear abstractions at each boundary. Use open formats and S3-compatible storage in the data plane, portable orchestrators and infrastructure as code in the control plane, and containers on Kubernetes in the compute plane. Wrap any native cloud services behind internal APIs so you can swap implementations later without rewriting your entire stack.

Which abstraction layers are most important to keep AI workloads portable across clouds?

The most critical layers are storage formats and feature stores in the data plane, orchestration and CI/CD in the control plane, and model serving in the compute plane. If you keep those portable, you can usually adapt other components over time. Common patterns include neutral API gateways, open model registries, and observability stacks that span multiple clouds.

How do Kubernetes and containers help create a cloud-agnostic AI platform?

Containers standardize how your applications and models run, while Kubernetes provides common primitives for deployment, scaling, and resilience across providers. By building a Kubernetes-based AI platform, you decouple your workloads from specific VM types or proprietary PaaS offerings. Managed Kubernetes services from major clouds then become interchangeable substrates instead of unique snowflakes.

How should data storage and feature stores be designed to avoid cloud-provider lock-in?

Design storage around S3-compatible object stores and open formats, such as Parquet files organized as Delta Lake or Iceberg tables, and choose feature stores that support pluggable backends. Keep feature definitions and metadata independent of any single provider’s services. This makes it far easier to replicate or move feature stores across clouds to meet latency, cost, or sovereignty requirements.

What security and compliance challenges arise in multi-cloud AI platforms, and how can they be managed?

Multi-cloud AI platforms must handle multiple IAM models, diverse logging formats, and varying regional compliance regimes. The key is to centralize policy and monitoring as much as possible, using portable security controls and observability stacks that work across clouds. Guidance from frameworks like the Cloud Security Alliance and NIST, combined with strong governance processes, helps keep risk under control.

Can I refactor an existing single-cloud AI platform toward portability without a complete rewrite?

Yes. A pragmatic approach is to first decouple internal interfaces from provider SDKs, then gradually introduce portable components in observability, serving, and pipelines. Pilot dual-cloud deployments for a subset of workloads to validate your patterns before expanding. Many organizations work with partners like Buzzi.ai to accelerate this transition and avoid common pitfalls.

How does Buzzi.ai’s approach to cloud AI platform development preserve strategic optionality for enterprises?

Buzzi.ai focuses on three-plane separation, open standards, and abstraction-first design so that your AI workloads are not trapped on a single provider. We combine platform engineering with services like AI agent development on top of portable cloud AI platforms to prove portability in real use cases. Our goal is to give you a flexible foundation that can adapt as AI, cloud, and regulatory landscapes evolve.