Choose a Machine Learning Development Company Built for 2026

Most machine learning development companies are already obsolete. Learn how to pick a foundation-model-native partner that will still matter in 2026.

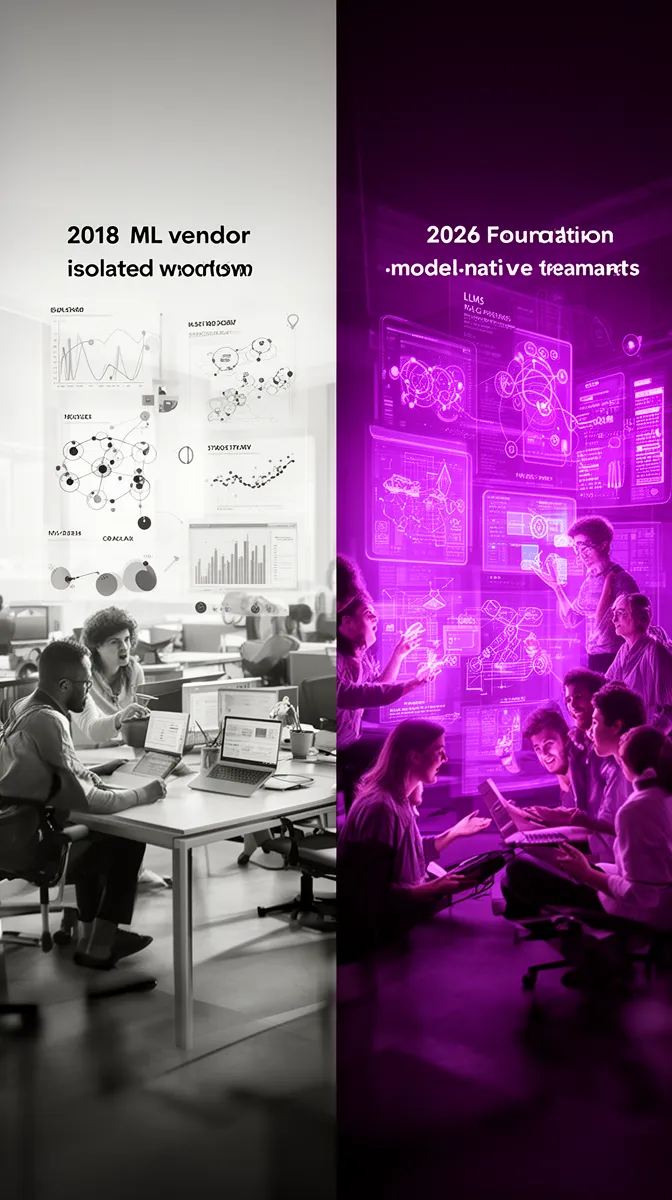

By 2026, most offerings from machine learning development companies that look impressive today will be effectively obsolete. Not because ML stopped working, but because the center of gravity has moved from training bespoke models to integrating, governing, and productizing foundation models at scale.

You can already feel the risk. You sign a multi-year agreement with an AI and machine learning development company, only to watch their “cutting-edge” skills get commoditized by the next wave of APIs from OpenAI, Anthropic, or open-source ecosystems. The slideware still looks good, but the value curve is flat.

This article is designed to de-risk that decision. We’ll give you a practical, opinionated checklist for telling classical-ML-era vendors apart from foundation-model-native partners—those who understand large language models, multimodal foundation models, retrieval augmented generation, and the new stack around them. Along the way, we’ll use Buzzi.ai as a reference point for what a future-ready machine learning development company focused on AI agents and voice bots actually looks like, without turning this into a product brochure.

What a Machine Learning Development Company Means in 2026

Until recently, a machine learning development company meant “a team that can clean your data, engineer features, train models, and deploy them.” In 2026, that definition is incomplete. The default building blocks are now foundation models—large language models and other general, pretrained models that can be adapted instead of trained from scratch.

That shift sounds subtle but it’s as big as the move from on-prem servers to cloud. The competitive frontier is no longer who can squeeze another 1% AUC out of a churn model; it’s who can design robust systems on top of foundation models that plug into your data, workflows, and governance.

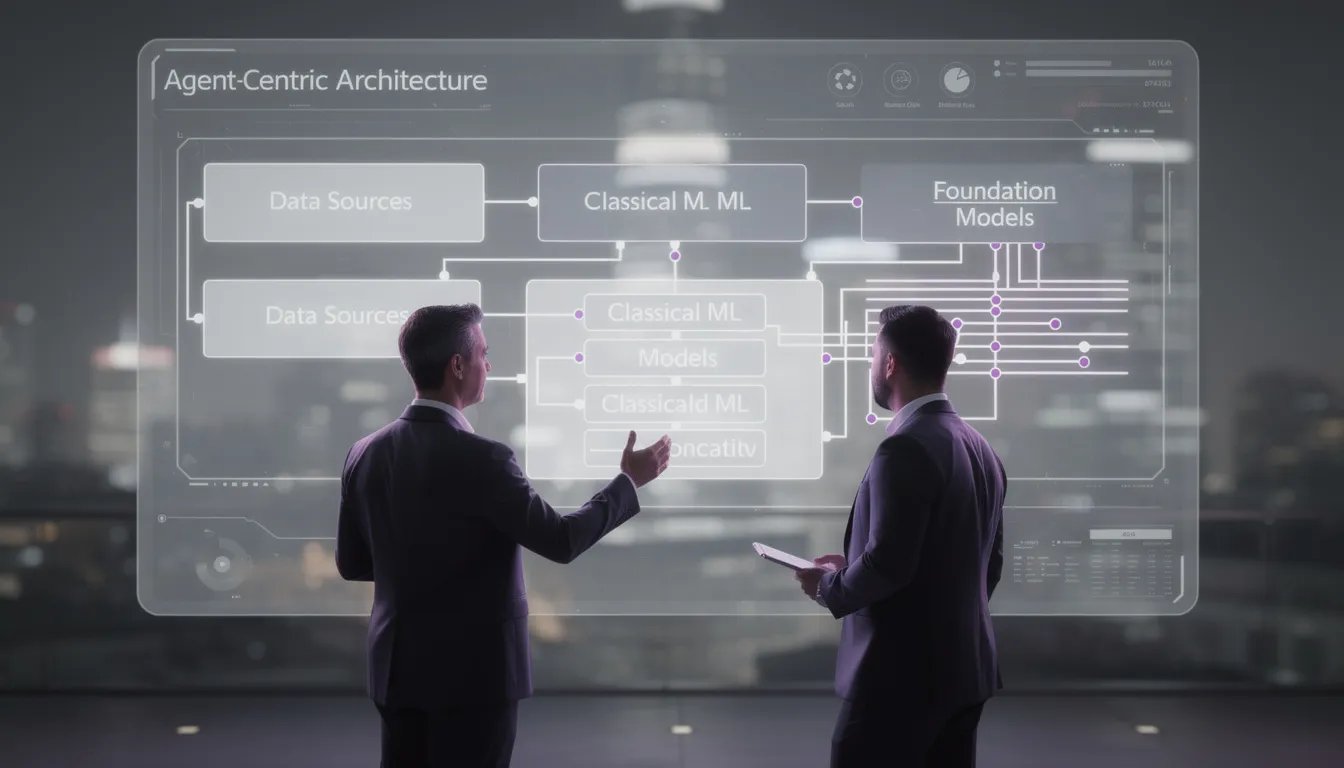

From model builders to foundation-model system integrators

In the pre-2020 world, machine learning development services were mostly about bespoke models. You hired a team to build a churn prediction model: they’d assemble a dataset of customer events, engineer features (tenure, recency, frequency, etc.), try a bunch of classical machine learning algorithms, and finally deploy one behind an API. The work was difficult but discrete.

Fast forward to 2026. Instead of a single churn score, your support experience might be a contract-aware assistant. It runs on top of large language models orchestrated with your CRM, billing system, and knowledge base via RAG. The assistant doesn’t just predict churn; it explains risk drivers in natural language, proposes retention offers, drafts outbound messages, and logs everything back into your systems.

In this world, the most valuable machine learning development company work looks less like handcrafting individual models and more like foundation model integration plus AI product development. The hard parts are data access, retrieval quality, prompt and tool orchestration, safety, and cost management—not training yet another classifier.

That’s the difference between a classical-ML-native organization and a foundation-model-native one. Classical shops optimize for pipelines of small bespoke models. Foundation-model-native partners treat foundation models as the substrate and build governed, integrated systems around them.

Defining a foundation-model-native ML partner

A foundation-model-native machine learning development company starts from a simple assumption: if a high-quality foundation model can reasonably solve the problem, that’s the first resort. Classical ML is still in the toolbox—but used surgically for specific latency, scale, or cost constraints.

This kind of partner offers machine learning consulting services that sound different. They talk about your enterprise AI strategy and AI roadmap, not just models and metrics. They are comfortable with generative AI solutions across text, voice, and other modalities, and understand when to fine-tune, when to use RAG, and when to simply integrate an API.

Under the hood, persistent capabilities still matter: data engineering, evaluation, MLOps, and AI governance. Buzzi.ai, for example, approaches projects by first mapping your data and workflows, then designing agents and voice bots that orchestrate foundation models with your systems. The “ML” is there, but it’s embedded in a broader product and integration mindset.

That’s the bar for a 2026-ready partner.

Why Classical-Only ML Companies Are Becoming Obsolete

Classical machine learning isn’t dead; it’s just not where most of the marginal value is anymore. The skills that once differentiated vendors—training classifiers, basic NLP, recommendation systems—are increasingly available behind an API with a credit card.

A machine learning development company that only sells those skills is stuck competing on hourly rate and slide decks, not strategic impact. The top machine learning development companies moving beyond classical ML are reinventing themselves as foundation-model system integrators; everyone else is drifting toward commodity status.

API-accessible intelligence is commoditizing legacy skills

Think about a standard text-classification pipeline from 2018. You’d collect labeled tickets, tokenize, train a model (maybe an LSTM or transformer), evaluate, deploy, monitor drift, and retrain periodically. That’s months of work from a seasoned data science team.

In 2026, you can often replace this entire system with an LLM plus prompt engineering plus light rules and caching—perhaps with a small supervised layer on top if you need strict guarantees. That’s why LLM development services and generative AI solutions feel so disruptive: they turn what used to be projects into configuration.
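To make "LLM plus prompt engineering plus light rules and caching" concrete, here is a minimal sketch in Python. It is an illustration under assumptions, not a reference implementation: the label set, the keyword rules, the prompt wording, and the `call_llm` function are all hypothetical, and `fake_llm` stands in for whatever provider client you actually use.

```python
from functools import lru_cache
from typing import Callable

# Hypothetical label set for illustration.
LABELS = ("billing", "technical", "account", "other")

# Light rules: cheap, deterministic shortcuts that skip the model entirely.
RULES = {
    "refund": "billing",
    "invoice": "billing",
    "password": "account",
}

PROMPT = (
    "Classify the support ticket into exactly one of: {labels}.\n"
    "Reply with the label only.\n\nTicket: {ticket}"
)


def make_classifier(call_llm: Callable[[str], str]) -> Callable[[str], str]:
    """Build a ticket classifier around any text-in, text-out LLM client."""

    @lru_cache(maxsize=10_000)
    def classify_normalized(ticket: str) -> str:
        for keyword, label in RULES.items():
            if keyword in ticket:
                return label
        reply = call_llm(PROMPT.format(labels=", ".join(LABELS), ticket=ticket))
        label = reply.strip().lower()
        # Never trust free-form output: fall back if the label is unknown.
        return label if label in LABELS else "other"

    def classify(ticket: str) -> str:
        # Normalize so trivially different tickets share a cache entry.
        return classify_normalized(" ".join(ticket.lower().split()))

    return classify


# Stand-in for a real provider call (hypothetical; replace with your client).
calls = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return " Technical \n"


classify = make_classifier(fake_llm)
print(classify("I need a REFUND"))         # answered by a rule, no model call
print(classify("App crashes on launch"))   # answered by the (stubbed) model
print(classify("app  crashes on launch"))  # answered from the cache
```

The rules and the cache are the cost and latency knobs; the label validation is the "strict guarantee" layer in its simplest form.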

This doesn’t mean it’s trivial. It means the bottleneck has moved. Your biggest challenge is not “can we classify tickets?” but “can we integrate this into our AI infrastructure, route sensitive data correctly, control behavior, and measure outcomes?” Vendors selling only classical machine learning expertise are simply solving yesterday’s hard problems.

The real bottleneck has shifted to integration and governance

The truly hard problems today live at the intersection of data, orchestration, and control. You need robust retrieval augmented generation pipelines, production-grade vector databases, tool-calling patterns, safety layers, and governance frameworks that regulators can live with.

Companies that stayed in the classical ML world often underinvested in these capabilities. They may have decent pipelines for small models but nothing equivalent for LLM prompts, retrieval schemas, or complex tool-use. When they “wrap an LLM” around your problem, you get a demo that works in a sandbox and falls apart in production.

We’ve seen this story: an enterprise launches an LLM-based support bot in a rush. It calls a foundation model API directly, without robust data access controls, observability, or feedback loops. Within weeks, hallucinations creep up, costs spike, and legal gets nervous because there’s no auditable trail. The issue isn’t the LLM; it’s the missing governance and integration layer.

Red flags your vendor lives in 2018

You don’t need to be an ML expert to spot a vendor stuck in the past. You just need the right questions and a sense of what’s missing from their story.

Here are concrete warning signs to look for in proposals and discussions about AI vendor selection and AI partner evaluation:

- The proposal centers on training models from scratch, with little mention of foundation models, RAG, or tool-calling.

- They never mention retrieval augmented generation, vector databases, or structured knowledge bases in contexts where they obviously apply.

- Their MLOps story is only about model training pipelines and accuracy metrics, not about prompts, evaluation harnesses, or model observability.

- They describe a “black-box” data science culture—models go in, predictions come out—with no clear pathway for business teams to understand or influence behavior.

- There’s no explicit model lifecycle management plan for foundation models: no versioning of prompts, no rollback strategy, no safe experimentation process.

- Security and governance are an afterthought; they wave at “we’re GDPR-compliant” but can’t articulate specific AI risk management practices.

- Case studies are all from 2018–2020-era projects (classifiers, basic forecasting), with no real production LLM systems.

Strong resumes can hide obsolete operating models. Your goal is not to assess whether their data scientists are smart; it’s to test whether the company has truly retooled for the foundation model era.

Which Classical ML Capabilities Still Matter—And Where

If foundation models are the new default, does classical machine learning still matter? Absolutely—but the context has changed. Classical ML is now the specialized component inside a larger, foundation-model-centric architecture.

The right machine learning development company will know when to lean on FMs and when to deploy classical models for predictive analytics, computer vision models, or ultra-low-latency scoring. The wrong one will try to apply the same playbook everywhere, either overusing FMs or clinging to old patterns.

Where bespoke models still beat foundation models

There are domains where bespoke models remain the rational choice. If you’re running real-time bidding, high-frequency trading, or embedded systems in industrial equipment, you care about microseconds and cents per million predictions. Here, tightly optimized classical models still shine.

The same holds for some predictive analytics scenarios in tabular data: think credit risk scoring, demand forecasting, or certain anomaly detection tasks. In these cases, a well-regularized gradient-boosted tree can outcompete a general-purpose FM on both cost and latency.

What changes is the framing. Instead of being the whole product, these models become services inside a broader system. For example, a tabular risk-scoring model might remain classical, while an LLM layer handles explanations, customer communication, and workflow orchestration. Your data engineering for ML work is still critical, but it feeds into a richer interaction layer.

How a modern partner blends classical ML with foundation models

A modern AI and machine learning development company treats existing models as “tools” for agents to call. The LLM handles reasoning and language; the classical model handles domain-optimized scoring; other tools (search, databases, APIs) provide context.

Imagine a customer retention agent. An LLM sits at the top, orchestrating calls to an existing churn model, your CRM, and your knowledge base via RAG. When an at-risk customer contacts support, the agent retrieves relevant policy documents, calls the classical churn model for a probability score, generates a personalized retention offer, and drafts an email—then logs actions back into your systems.

This blended architecture preserves your legacy investments while embracing new capabilities. A foundation-model-native machine learning development company will help you wrap existing models with APIs, design model deployment patterns that support tool-calling, and implement the surrounding interaction flows. You don’t need a big-bang rewrite; you need thoughtful integration.

That’s also where personalized customer experience solutions become practical: classical ML for scoring and segmentation, FMs for real-time messaging and conversation.
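The retention agent described above can be sketched as a small tool registry. This is a simplified illustration: the tool names, the stubbed churn scores, and the policy text are hypothetical, and the sequence of calls is fixed in code, whereas a model-driven agent would choose which tools to call.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Agent:
    """Holds named tools and records every call for auditability."""
    tools: dict[str, Callable[..., Any]]
    audit_log: list[dict] = field(default_factory=list)

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self.tools:
            raise KeyError(f"unknown tool: {name}")
        result = self.tools[name](**kwargs)
        self.audit_log.append({"tool": name, "args": kwargs, "result": result})
        return result


# Hypothetical stand-ins for the real systems.
def churn_score(customer_id: str) -> float:
    # Would wrap the existing classical churn model behind an API.
    return {"c-1": 0.82}.get(customer_id, 0.10)


def search_policies(query: str) -> list[str]:
    # Would be a RAG lookup over the knowledge base.
    return ["Retention policy: up to 20% discount for at-risk accounts."]


def draft_email(customer_id: str, score: float, context: list[str]) -> str:
    # In a real system this is where the LLM would generate the text.
    offer = "a 20% loyalty discount" if score >= 0.5 else "a check-in call"
    return f"Hi {customer_id}, we would like to offer you {offer}."


def handle_contact(agent: Agent, customer_id: str) -> str:
    # Fixed sequence for clarity; an LLM planner would pick these calls.
    score = agent.call("churn_score", customer_id=customer_id)
    context = agent.call("search_policies", query="retention offers")
    return agent.call(
        "draft_email", customer_id=customer_id, score=score, context=context
    )


agent = Agent(tools={
    "churn_score": churn_score,
    "search_policies": search_policies,
    "draft_email": draft_email,
})
print(handle_contact(agent, "c-1"))
print([entry["tool"] for entry in agent.audit_log])
```

Note that the classical churn model is untouched; it is simply exposed as one more tool, and every call lands in an audit log.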

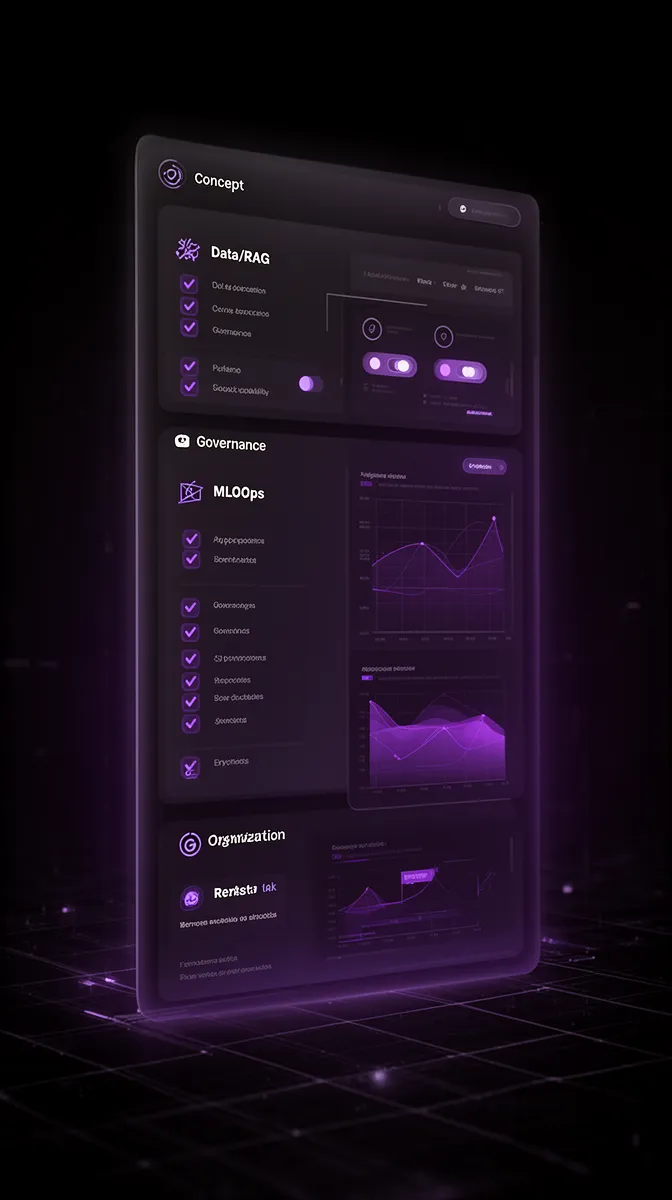

Capability Checklist for a Foundation-Model-Native ML Partner

If you remember nothing else, remember this: the best machine learning development company for foundation model integration is defined by its system capabilities, not its model zoo. You want a partner that is excellent at data and retrieval, FM-specific MLOps, governance, and organizational design.

Use this section as a concrete checklist for RFPs and vendor interviews, especially when you’re choosing a machine learning development company for generative AI and LLM projects.

Data, retrieval, and knowledge fabric competence

Foundation models are only as good as the context you feed them. That’s why mastery of retrieval augmented generation, vector databases, and knowledge base design is now a first-class capability. Your partner needs to treat your unstructured content—docs, tickets, chats, emails—as infrastructure, not an afterthought.

Look for real experience with enterprise content sources: SharePoint, Confluence, ticketing tools, CRMs, data warehouses. They should have opinions about schema strategy (chunking, metadata, hierarchies) and clear methods for evaluating retrieval quality and guarding against vector store drift.

Here are concrete checklist items you can drop into RFPs for enterprise AI platforms and data integration:

- Describe your approach to designing and maintaining knowledge bases for RAG, including content ingestion, chunking, and metadata strategy.

- Which vector databases have you used in production, and how do you monitor retrieval quality over time?

- How do you evaluate the impact of retrieval on overall system performance and user experience?

- How do you handle permissions and access control across multiple content systems in RAG pipelines?

- Provide an example of a retrieval failure you detected and how you fixed it.

A strong answer sounds like a playbook, not a science project. For context, McKinsey’s work on generative AI’s economic potential highlights just how much value is unlocked by combining foundation models with domain-specific data and workflows, not by models alone (source).
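Two of the checklist items above, permissions in RAG pipelines and monitoring retrieval quality, are easy to picture in code. The sketch below is deliberately simplified: keyword overlap stands in for vector similarity, and the chunks, groups, and evaluation queries are made up for illustration. What matters is the shape: filter by permission before ranking, and track a metric such as recall@k on a fixed evaluation set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]


def retrieve(query: str, chunks: list[Chunk], user_groups: set[str],
             k: int = 3) -> list[Chunk]:
    # Filter by permission BEFORE ranking so restricted text never
    # reaches the prompt, even as a low-ranked candidate.
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    terms = set(query.lower().split())
    # Keyword overlap is a stand-in for embedding similarity.
    scored = [(len(terms & set(c.text.lower().split())), c) for c in visible]
    ranked = sorted((s for s in scored if s[0] > 0),
                    key=lambda s: s[0], reverse=True)
    return [c for _, c in ranked[:k]]


def recall_at_k(eval_set: list[tuple[str, set[str], str]],
                chunks: list[Chunk], k: int = 3) -> float:
    """Share of evaluation queries whose expected document is in the top k."""
    hits = 0
    for query, groups, expected_doc in eval_set:
        results = retrieve(query, chunks, groups, k)
        hits += any(c.doc_id == expected_doc for c in results)
    return hits / len(eval_set)


# Hypothetical content and evaluation queries.
CHUNKS = [
    Chunk("refund-policy", "refunds are issued within 14 days of purchase",
          frozenset({"support", "finance"})),
    Chunk("salary-bands", "salary bands for engineering roles",
          frozenset({"hr"})),
    Chunk("sla", "enterprise sla guarantees 99.9 percent uptime",
          frozenset({"support"})),
]
EVAL_SET = [
    ("how many days for refunds", {"support"}, "refund-policy"),
    ("what uptime does the sla guarantee", {"support"}, "sla"),
]

print(recall_at_k(EVAL_SET, CHUNKS))
print(retrieve("engineering salary bands", CHUNKS, {"support"}))
```

Rerunning the same evaluation set after every ingestion or chunking change is one simple way to catch retrieval regressions before users do.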

Foundation-model-specific MLOps and observability

Classical ML MLOps focused on data pipelines, training, deployment, and monitoring metrics like AUC or RMSE. In the foundation model era, you still need those—but you also need prompt management, evaluation harnesses for free-form outputs, and detailed cost/latency observability.

Your partner should talk about versioning prompts and configurations, not just models; running offline and online evaluations on LLM outputs; and tracking metrics like hallucination rates, coverage, deflection, and user satisfaction. They should have dashboards for monitoring token consumption, latency, and error rates at the conversation level.

When you ask about MLOps and model observability, probe with questions like:

- How do you manage versions of prompts, retrieval configs, and toolchains across environments?

- What metrics do you track for an LLM-based customer service assistant in production? How do you detect regressions?

- How do you simulate user behavior and run A/B tests on prompts or retrieval strategies?

- How do you handle rollbacks when a new model or prompt configuration behaves unexpectedly?

- What is your approach to model lifecycle management for foundation models (including periodic re-evaluation and possible switching of providers)?

Industry surveys from observability vendors like Arize or Weights & Biases show that most enterprises still underinvest in FM-specific monitoring, despite clear evidence that issues surface quickly post-launch (example). You want a partner in the top decile here.
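As a rough picture of what "versioning prompts" and "handling rollbacks" can mean in practice, here is a minimal in-memory sketch. The `PromptRegistry` class, the pass-rate metric, and the 0.02 tolerance are assumptions for illustration; a production setup would persist versions and tie them to an evaluation harness.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Append-only prompt history with a pointer to the active version."""
    versions: dict[str, list[str]] = field(default_factory=dict)
    active: dict[str, int] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        history = self.versions.setdefault(name, [])
        history.append(template)
        self.active[name] = len(history) - 1
        return self.active[name]

    def get(self, name: str) -> str:
        return self.versions[name][self.active[name]]

    def rollback(self, name: str) -> int:
        if self.active[name] == 0:
            raise ValueError("no earlier version to roll back to")
        self.active[name] -= 1
        return self.active[name]


def should_rollback(baseline_pass_rate: float, candidate_pass_rate: float,
                    tolerance: float = 0.02) -> bool:
    # Regression gate: the new version must not fall more than
    # `tolerance` below the baseline on the same evaluation set.
    return candidate_pass_rate < baseline_pass_rate - tolerance


registry = PromptRegistry()
registry.publish("support", "You are a support assistant. Cite sources.")
registry.publish("support", "You are a friendly assistant.")

# Pass rates would come from an offline evaluation run (hypothetical values).
if should_rollback(baseline_pass_rate=0.91, candidate_pass_rate=0.84):
    registry.rollback("support")

print(registry.get("support"))
```

The same pattern extends to retrieval configs and tool definitions: anything that changes behavior gets a version, a gate, and a way back.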

Governance, security, and compliance in the FM era

Foundation models introduce new risks—prompt injection, data exfiltration, jailbreaks—that classical ML rarely faced. At the same time, regulators and frameworks like NIST’s AI Risk Management Framework are becoming more explicit about expectations for responsible AI (source).

Your partner must be able to discuss data residency, PII handling, logging, and access control with as much fluency as they talk about models. They should be able to describe concrete defenses against prompt injection and jailbreaks, audit trails for user interactions, and patterns for safe tool use.

When evaluating AI governance, AI security, and AI compliance, ask:

- How do you prevent sensitive data from leaking into prompts, logs, or external model providers?

- What controls do you implement to mitigate prompt injection and jailbreak attempts?

- How do you ensure auditability and traceability of model decisions and user interactions?

- What frameworks or standards (e.g., NIST, ISO, SOC 2, EU AI Act guidance) do you align with?

- How do you involve risk, legal, and compliance teams in solution design?

In regulated industries like financial services and healthcare, this isn’t optional. It’s the difference between a nice demo and something your CISO will actually sign off on.
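One concrete answer to the first question above is a redaction step that runs before any text reaches a prompt, a log, or an external provider. The sketch below is illustrative only: the two regular expressions are simplistic assumptions that catch obvious emails and card-like numbers, not a complete PII detector.

```python
import re

# Illustrative patterns only; real deployments need broader PII coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Mask sensitive values and report how many of each were found."""
    text, n_email = EMAIL.subn("[EMAIL]", text)
    text, n_card = CARD.subn("[CARD]", text)
    return text, {"email": n_email, "card": n_card}


message = "Contact jane.doe@example.com, card 4111 1111 1111 1111, order 12345."
safe_text, counts = redact(message)
print(safe_text)
print(counts)
```

Returning the counts alongside the masked text gives you something to log and alert on without storing the sensitive values themselves.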

Organizational signals of a truly evolved partner

Finally, look beyond decks and into how the organization is structured. A truly evolved machine learning development company will have dedicated foundation model teams, strong experimentation frameworks, and productized integration patterns—like reusable agent frameworks or LLM-based workflow components.

Cross-functional squads are a strong signal: product managers, ML engineers, infra, and design working together, rather than data scientists handing models over the wall. They should be able to show you internal tools for rapid FM experimentation and deployment, not just ad-hoc scripts.

Buzzi.ai’s focus on AI agents and WhatsApp voice bots is an example of this productized mindset. Instead of treating each project as a one-off, we build reusable AI agent development frameworks—tool-calling, orchestration, monitoring—that can be adapted to different domains. That’s the kind of partner you want: one who can turn your FM investments into repeatable products, not just prototypes.

If you want a structured way to explore this with your team, consider an AI discovery and strategy engagement that explicitly maps your enterprise AI strategy to foundation-model-native capabilities.

How to Evaluate Vendors: Questions, Red Flags, and Scoring Rubric

Now we bring it all together. Knowing how to choose a machine learning development company in the foundation model era is mostly about asking the right questions, listening for the right patterns, and using a simple rubric to align stakeholders.

This section gives you those questions, a scoring framework, and a short list of red flags—so you can separate top machine learning development companies moving beyond classical ML from those still living in the past.

RFP and interview questions that reveal real depth

Here are questions you can use directly in RFPs or interviews for enterprise AI solutions and machine learning consulting services. The goal isn’t to quiz vendors; it’s to surface their operating model.

- Foundation model strategy: “Describe your approach to choosing between off-the-shelf FMs, fine-tuning foundation models, and training bespoke models. Please include at least one recent project example.”

- Integration patterns: “Walk us through an architecture where an LLM orchestrates calls to existing systems (CRM, ERP, ticketing) and classical models. How do you manage data flow and latency?”

- RAG and retrieval: “How do you design and evaluate RAG pipelines, and what’s your strategy for handling permissions and vector store drift?”

- MLOps and observability: “What does your monitoring stack look like for an LLM-based assistant? Which metrics are most important, and how do you act on them?”

- Governance and risk: “How do you embed AI governance and AI risk management into your delivery process from day one?”

- Migration from classical ML: “Give us an example of how you migrated or augmented existing classical ML models with foundation models without a big-bang rewrite.”

- Cost control: “How do you control and forecast foundation model costs (e.g., tokens, inference), and what knobs do you typically expose to clients?”

- Experimentation: “Describe your experimentation framework for prompts, retrieval strategies, and model versions. How quickly can you iterate?”

Strong answers will reference concrete architectures, tools, and processes; weak ones will stay at the buzzword level. That’s your signal.
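For the cost-control question in particular, a strong vendor should be able to walk you through arithmetic like the following. This is a back-of-the-envelope sketch: the traffic figures and per-million-token prices are hypothetical placeholders, not any provider's actual pricing.

```python
def monthly_cost(conversations: int, turns: int,
                 input_tokens_per_turn: int, output_tokens_per_turn: int,
                 price_in_per_million: float,
                 price_out_per_million: float) -> float:
    """Estimate monthly spend from traffic volume and token prices."""
    total_in = conversations * turns * input_tokens_per_turn
    total_out = conversations * turns * output_tokens_per_turn
    return (total_in * price_in_per_million
            + total_out * price_out_per_million) / 1_000_000


def within_budget(cost: float, monthly_cap: float) -> bool:
    # A simple cap check; real systems would alert well before the cap.
    return cost <= monthly_cap


# Hypothetical traffic and prices, for illustration only.
estimate = monthly_cost(
    conversations=50_000, turns=6,
    input_tokens_per_turn=800, output_tokens_per_turn=200,
    price_in_per_million=1.00, price_out_per_million=4.00,
)
print(estimate, within_budget(estimate, monthly_cap=600.0))
```

The inputs double as the knobs a vendor can expose: context length per turn, response length, and which model (and therefore which price) handles which traffic.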

Simple scoring rubric for shortlisting partners

To avoid decision-by-vibes, use a simple four-pillar rubric. Score each vendor from 1 to 5 on each pillar: Foundation-model capabilities, Integration/MLOps, Governance/Security, and Organizational maturity. Then decide minimum thresholds for shortlisting.

For example, you might require at least a 4 on FM capabilities and Integration/MLOps, and at least a 3 on Governance/Security and Organizational maturity. Vendors below that bar don’t proceed, regardless of price.

Imagine two firms: Vendor A scores 5-5-3-4 and is 25% more expensive; Vendor B scores 3-3-4-3. Vendor B looks safer on paper (cheaper, with a slightly better governance score), but under the example thresholds above it falls short on both FM capabilities and Integration/MLOps, so only Vendor A makes the shortlist. Vendor A is also far likelier to deliver differentiated outcomes. In the foundation model era, choosing the cheapest machine learning development company often means choosing slower learning and higher hidden risk.
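If you want to apply the rubric consistently across a long list of vendors, it fits in a few lines of Python. The pillar names, thresholds, and scores below come straight from the example in this section; the function and variable names are just illustrative.

```python
PILLARS = (
    "fm_capabilities",
    "integration_mlops",
    "governance_security",
    "org_maturity",
)

# Minimum score per pillar, matching the example thresholds above.
THRESHOLDS = {
    "fm_capabilities": 4,
    "integration_mlops": 4,
    "governance_security": 3,
    "org_maturity": 3,
}


def failed_pillars(scores: dict[str, int]) -> list[str]:
    """Return the pillars where a vendor scores below the threshold."""
    return [p for p in PILLARS if scores[p] < THRESHOLDS[p]]


vendor_a = dict(zip(PILLARS, (5, 5, 3, 4)))
vendor_b = dict(zip(PILLARS, (3, 3, 4, 3)))

for name, scores in (("Vendor A", vendor_a), ("Vendor B", vendor_b)):
    failed = failed_pillars(scores)
    status = "shortlist" if not failed else f"reject ({', '.join(failed)})"
    print(f"{name}: {status}")
```

Listing the failed pillars, rather than a single pass/fail flag, gives stakeholders a concrete reason for each rejection.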

Use this rubric to bring IT, product, and risk into the same conversation about enterprise AI strategy, instead of debating individual buzzwords.

Obvious and subtle red flags to walk away from

Some red flags are blatant; others are only obvious once you know to look. Both matter when you’re evaluating an AI and machine learning development company.

- No production LLM references—only PoCs or hackathon-style demos.

- Heavy emphasis on models and algorithms, light on integration, orchestration, and AI scalability.

- Vague answers to governance or security questions (“we’ll work with your security team”) without specific patterns or frameworks.

- No clear story for cost observability; they can’t tell you how they’d monitor or cap FM usage.

- They say “LLM is just another model type” and propose treating it like any other ML artifact.

- They rely heavily on vendor marketing materials instead of their own experience and playbooks.

- Contracts and SLAs focus on delivery timelines, not on post-launch performance, monitoring, and improvement cycles.

- No dedicated experimentation process; changes to prompts or retrieval configs happen manually and ad-hoc.

If you see several of these together, walk away. There are enough evolved vendors today that you don’t need to accept a 2018 operating model for a 2026 problem.

Migrating from Classical ML to a Foundation-Model-Centric Stack

Most enterprises aren’t starting from zero. You already have classical ML models in production—demand forecasting, churn prediction, fraud detection—and an existing data and app landscape. The goal isn’t to throw this away; it’s to modernize and integrate.

The right machine learning development services for migrating classical ML to foundation models will help you inventory, triage, and prioritize; design new architectures; and manage organizational change. It’s an AI transformation, not just a tech upgrade.

Inventory, triage, and prioritize your existing models

Start with a structured inventory. Catalog your models, what they do, where they live, who owns them, and how they’re used. Then categorize them by business value, technical debt, and “FM-fit potential.”

You’ll generally find four buckets, especially across ML model modernization scenarios like demand forecasting and churn prediction:

- Keep as-is: High-value, low-debt models that are cost-efficient and stable (e.g., a robust fraud detection model).

- Wrap with FMs: Models you keep but surround with LLM-based explanation, summarization, or decision support (e.g., churn model plus narrative explanations for agents).

- Augment with RAG: Areas where adding retrieval augmented generation over documents and historical interactions can dramatically increase usefulness (e.g., claims processing support).

- Retire: Low-value or redundant models better replaced entirely by FM-based systems.

The decision should be driven by business impact and cost–benefit, not technology fashion. A good partner will bring both technical and product perspectives to these calls, aligning them with your overall AI roadmap.
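The four buckets can be turned into a first-pass triage rule that you then review by hand. The sketch below is one possible mapping, not a standard: the 1-to-5 scales, the cutoffs, and the `document_heavy` flag are assumptions introduced for illustration, and the example models and scores are made up.

```python
from dataclasses import dataclass


@dataclass
class ModelRecord:
    name: str
    business_value: int  # 1 (low) to 5 (high)
    tech_debt: int       # 1 (low) to 5 (high)
    fm_fit: int          # 1 (poor fit) to 5 (strong fit)
    document_heavy: bool = False  # relies on documents or past interactions


def triage(m: ModelRecord) -> str:
    # Illustrative cutoffs; tune them with your business and risk owners.
    if m.business_value <= 2 or (m.tech_debt >= 4 and m.fm_fit >= 4):
        return "retire"
    if m.fm_fit >= 3 and m.document_heavy:
        return "augment with RAG"
    if m.fm_fit >= 3:
        return "wrap with FMs"
    return "keep as-is"


# Hypothetical inventory entries.
inventory = [
    ModelRecord("fraud-detection", business_value=5, tech_debt=1, fm_fit=1),
    ModelRecord("churn-score", business_value=4, tech_debt=2, fm_fit=3),
    ModelRecord("claims-support", business_value=4, tech_debt=3, fm_fit=4,
                document_heavy=True),
    ModelRecord("legacy-ticket-tagger", business_value=3, tech_debt=5, fm_fit=5),
]

for record in inventory:
    print(f"{record.name}: {triage(record)}")
```

Treat the output as a conversation starter for the cost-benefit discussion, not as the decision itself.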

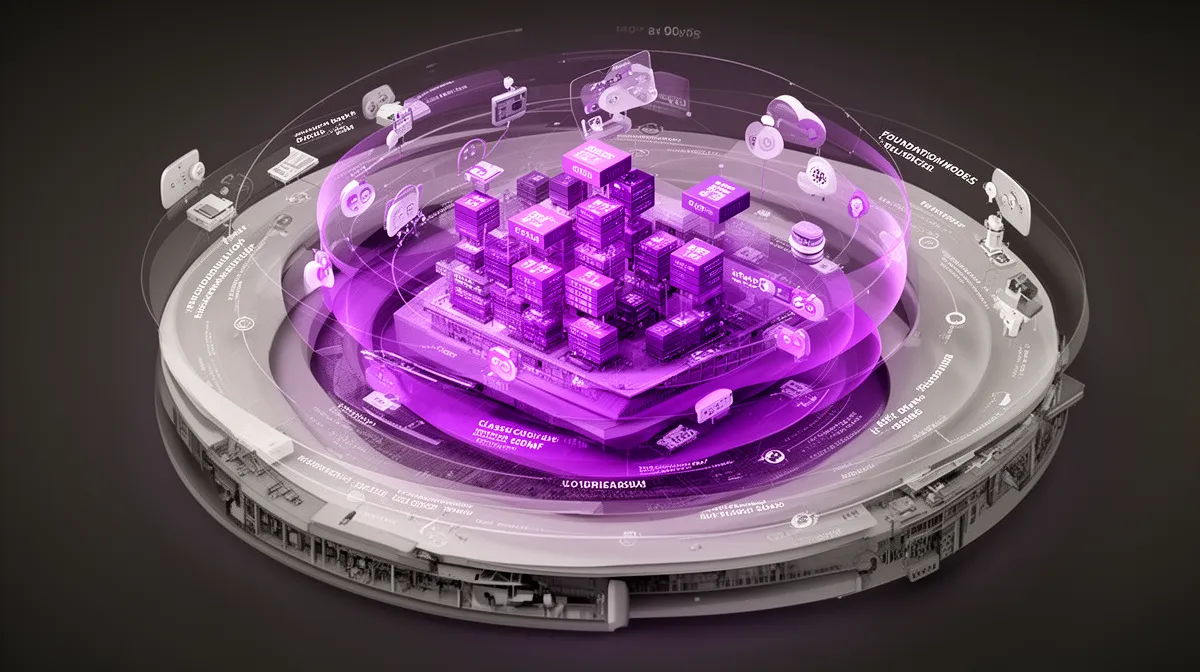

Designing foundation-model-centric reference architectures

Next, you need reference architectures where foundation models sit at the reasoning and interaction layer over your data and tools. Think of an LLM as the orchestration brain, not the source of truth.

Consider an enterprise support stack. At the bottom, you have systems of record: CRM, ticketing, ERP, knowledge bases. On the side, you have existing classical models: ticket routing, churn scores, demand forecasts. In the middle, you build RAG pipelines into vector databases that index your content. At the top, one or more LLM-based agents handle conversations across channels—including WhatsApp, email, and voice.

When a customer calls or sends a message, the agent retrieves relevant documents, calls the ticket-routing model, consults the churn model, and generates an appropriate response—then logs the interaction back into your systems. This is where foundation model integration, AI infrastructure, and thoughtful model deployment come together.

A partner like Buzzi.ai, as an AI and machine learning development company specializing in multimodal foundation models and voice, will co-design these architectures with you. We’ve seen patterns where WhatsApp AI voice bots become the front door to such stacks, especially in emerging markets. For vendor-agnostic RAG and vector best practices, resources like the Pinecone engineering blog are a useful complement (example).

At this stage, it’s also worth considering how AI agent development services can turn these reference architectures into reusable capabilities across business units.

Change management, skills, and operating model

Finally, this migration is as much about people and process as it is about tech. Your data scientists need to learn prompt design, RAG, and tool orchestration; your engineers need to adapt MLOps to FMs; your risk and legal teams need updated governance processes.

The safest path is phased. Start with a pilot—say, an internal agent for support teams in one region—measure it carefully, then expand. Use each phase to refine your architectures, guardrails, and operating model for enterprise AI implementation.

A partner that understands AI strategy consulting and AI transformation services will work alongside your teams, not in a black box. They’ll leave behind capabilities and playbooks, not just code. That’s ultimately what makes the investment durable.

Conclusion: Choose Partners Who Will Still Matter in 2026

The machine learning development company you choose today is effectively a bet on your AI trajectory for the next 3–5 years. In the foundation model era, the winning bet is on partners who focus on integration, governance, and MLOps more than on training one-off models.

Classical ML still matters—but as a specialized component inside foundation-model-centric architectures, not the star of the show. Your job is to use concrete questions, checklists, and rubrics to expose which vendors have truly evolved and which are just rebranding yesterday’s skills.

If you’d like a structured way to apply this to your context, audit your current or prospective partners against the capability checklist and rubric above. Then, when you’re ready to design a foundation-model-centric roadmap and architecture tailored to your business, reach out to Buzzi.ai for a discovery session and we’ll explore what a future-ready stack could look like for you.

FAQ

What is a machine learning development company in the foundation model era?

In the foundation model era, a machine learning development company is less about training individual models from scratch and more about integrating, governing, and productizing foundation models. It designs systems where large language models, retrieval pipelines, and classical ML components work together. The focus shifts from pure modeling to data, orchestration, and safe operation at scale.

How are foundation models changing the role of machine learning development companies?

Foundation models turn many traditional ML tasks—classification, basic NLP, recommendation—into API calls. This forces ML firms to move up the stack and specialize in architecture, integration, governance, and user experience. The most valuable partners now help you align foundation models with your data, workflows, and strategy, not just build isolated models.

Why are classical machine learning development companies becoming obsolete by 2026?

Classical-only vendors are optimized for problems that are increasingly commoditized by cloud FMs and LLM APIs. If a company’s capabilities stop at training bespoke models and don’t extend to RAG, FM-specific MLOps, and AI governance, its differentiation erodes quickly. By 2026, buyers will expect foundation-model-native capabilities as table stakes, not nice-to-haves.

Which classical ML capabilities are still valuable when foundation models are widely available?

Classical ML still excels where tight latency, cost efficiency, or domain-specific optimization is critical, such as real-time bidding, embedded systems, and some tabular predictive analytics. These models often continue running at the scoring layer, while FMs handle reasoning, explanation, and interaction. The key is using classical ML selectively, inside architectures that are otherwise foundation-model-centric.

How can I evaluate whether a machine learning development company is truly foundation-model-native?

Look for concrete experience with RAG, vector databases, prompt management, and FM-specific observability, not just LLM demos. Ask how they version prompts, manage retrieval quality, handle AI governance, and migrate classical models into FM-centric architectures. A foundation-model-native partner will answer with detailed playbooks and references, not just buzzwords.

What specific skills should a modern ML development company have for LLMs and generative AI?

They should be strong in prompt engineering, tool-calling, retrieval system design, FM selection and fine-tuning, and generative UX design. On the engineering side, they need FM-tailored MLOps, evaluation harnesses for free-form outputs, and granular cost/latency monitoring. They should also be fluent in security and governance topics unique to generative AI, such as prompt injection and data leakage.

How do I assess a vendor’s ability to integrate foundation models into my existing systems and data?

Probe their integration patterns: ask for architectures where LLMs call existing CRMs, ERPs, and classical models, and how they handle permissions and data residency. Look for familiarity with your tech stack and concrete stories of integrating across multiple systems of record. Strong vendors will also discuss change management and how they collaborate with your internal teams during rollout.

What are clear red flags that a machine learning development company is stuck in the classical ML era?

Red flags include proposals focused primarily on training models from scratch, no mention of RAG or FM-specific MLOps, and vague responses on security or governance. Lack of production LLM references, absence of cost observability plans, and treating LLMs as “just another model type” are also warning signs. If their case studies stop at 2018-style analytics projects, they likely haven’t truly modernized.

How should enterprises plan to migrate or augment their existing classical ML models with foundation models?

Start by inventorying your models and categorizing them by business value, technical debt, and FM-fit potential. Decide which to keep, wrap with FMs for explanation and interaction, augment with RAG, or retire. Then design reference architectures and run phased pilots, using each iteration to refine governance, MLOps, and organizational practices.

How does Buzzi.ai position its machine learning practice for the post-classical ML landscape?

Buzzi.ai is built as a foundation-model-native AI and machine learning development company, with a strong focus on AI agents and AI voice bots for channels like WhatsApp. We emphasize data and retrieval systems, FM-specific MLOps, and governance as much as we do model selection. If you’re exploring a roadmap for this shift, our AI discovery and strategy engagement is a good starting point to align stakeholders and identify high-ROI opportunities.