Design Insurance AI Analytics That Survive Real Loss Development

Design insurance AI analytics that stay accurate as claims mature by embedding loss development patterns, triangles, and actuarial methods into every model.

Most insurance AI analytics look brilliant in year one and embarrassing by year three—because they quietly assume today’s incurred or paid losses are close to final. In a world where many P&C lines take five, ten, or even twenty years to fully develop, that assumption isn’t just wrong; it’s structurally dangerous.

If your models treat immature incurred or paid losses as if they were ultimate, they will systematically understate risk, flatter current performance, and then drift upward as claims maturation reveals the truth. Long-tail casualty, workers’ compensation, professional liability—these are exactly the portfolios where AI dashboards often show healthy loss ratios early, then require “reserve strengthening” later. Loss development patterns are not noise; they are the backbone of loss reserving and must be a first-class citizen in any serious claims analytics stack.

In this article, we’ll show how to design development-aware insurance AI analytics that remain stable as accident years age. We’ll walk through loss development triangles, how to embed them into machine learning workflows, how to avoid time-based data leakage, and how to build projections that survive real-world claims maturation. Along the way, we’ll explain how we at Buzzi.ai fuse actuarial loss development expertise with modern AI to deliver explainable, regulator-ready analytics—not just pretty charts.

Why Generic Insurance AI Analytics Break on Real Loss Development

Most off-the-shelf insurance AI analytics platforms treat loss data as if it were just another table: a row per claim, some dates, some amounts, maybe a loss ratio projection column. What’s usually missing is the most important dimension in insurance: development age. Without that axis, the models behave as if “today’s losses” are already close to ultimate, regardless of where you are in the claims lifecycle.

This is how you get AI dashboards that look great in the first couple of years, then fall apart as losses develop. The issue isn’t that machine learning is bad at predictive modeling for P&C insurers. The issue is that we are feeding these models targets and features that ignore the basic physics of claims maturation.

The Hidden Assumption: Today’s Losses Are Close to Ultimate

In many generic claims analytics tools, the core metric is incurred or paid loss to date, often expressed as a loss ratio. For a recent accident year, the model may learn relationships between premiums, exposures, and current incurred amounts, and then project future loss ratios off that immature base. Implicitly, it assumes that today’s incurred or paid losses are a good proxy for the final outcome.

This can appear to work—at first. Take a long-tail casualty portfolio where the year‑1 loss ratio on reported losses is 55%, and everything looks green in your AI-powered dashboard. Fast-forward to year 5: IBNR has emerged, case reserves have strengthened, and the same accident year’s ultimate loss projection is now 75%. The model that once looked precise is now clearly biased, because it was trained to equate early incurred with ultimate.

Executives understandably lose trust when slick AI reports are quietly revised upwards year after year. The problem wasn’t the model’s algorithm; it was the hidden assumption about development.

Data Leakage in Time: When the Future Sneaks into Your Training Data

There’s a more subtle failure mode: data leakage in time series. This happens when we train models on fully developed losses (say, 10-year-old accident years) but then deploy them to predict outcomes for very recent cohorts, without adjusting for the difference in maturity. In effect, the training set knows the “future” that the production setting does not.

Imagine we train a model in 2024 using accident year 2010 data where losses have nearly fully developed. The target is ultimate loss ratio, and all features are meant to be measured as of 12 months after inception. But we inadvertently include variables that embed knowledge of later development, for example case reserve levels at 24 or 36 months, or underwriting actions taken after early warning signs appeared. Now we apply this model to accident year 2023, using only information at 12 months. We’ve built a model that learned from a richer information set than it will have in production.

This kind of claims lifecycle analytics leakage is especially problematic in regulated environments. You may be effectively “peeking into the future” during training, producing overly optimistic performance metrics that won’t replicate in production. Published research on leakage in time-series machine learning highlights how common this pitfall is when temporal structure is ignored.
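One way to guard against this is to stamp every feature with the date it became known and filter on development age before training. The sketch below is a minimal illustration under assumed names: the `snapshots` records, the `known_on` field, and `point_in_time_features` are hypothetical, and age is counted in months from the start of the accident year.

```python
from datetime import date

# Hypothetical feature snapshots: each value carries the date it became known
snapshots = [
    {"accident_year": 2010, "feature": "incurred_12m", "value": 5.5e6, "known_on": date(2010, 12, 31)},
    {"accident_year": 2010, "feature": "case_reserve_36m", "value": 2.1e6, "known_on": date(2012, 12, 31)},
    {"accident_year": 2010, "feature": "claim_count_12m", "value": 410, "known_on": date(2010, 12, 31)},
]

def point_in_time_features(records, accident_year, max_age_months):
    """Keep only features observable within max_age_months of the accident year start."""
    start = date(accident_year, 1, 1)
    kept = {}
    for r in records:
        if r["accident_year"] != accident_year:
            continue
        # Development age in months at the moment the value became known
        age = (r["known_on"].year - start.year) * 12 + (r["known_on"].month - start.month) + 1
        if age <= max_age_months:
            kept[r["feature"]] = r["value"]
    return kept

features_2010 = point_in_time_features(snapshots, 2010, 12)
print(sorted(features_2010))  # the 36-month case reserve is excluded
```

Applying the same age filter to training cohorts and to production cohorts keeps the two information sets aligned.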

Short-Tail vs Long-Tail Lines: Where Naïve Models Fail Fastest

Not all lines punish naïve insurance AI analytics equally. In short-tail businesses—personal auto physical damage, standard property—the lag between occurrence and payment is relatively small. The runoff patterns are tight, and reserve adequacy is usually resolved within a few years.

Long-tail lines are a different universe. General liability, workers’ compensation, medical malpractice, professional liability: these portfolios can take a decade or more to mature. Late-reported claims, reopened claims, and reserve adequacy reviews all conspire to shift the triangle upwards over time. Here, ignoring runoff patterns and treating year‑1 losses as “good enough” is a recipe for chronic underestimation.

Even nominally short-tail lines can mislead if catastrophe events or legal changes disrupt patterns. A single major CAT season or a court ruling that expands coverage can elongate development. Models that don’t explicitly account for short-tail vs long-tail characteristics end up blending very different development behaviors, making their loss ratio projections unstable as the portfolio mix changes.

Loss Development Patterns 101: Foundations for AI Claims Analytics

If we want AI models that survive real-world maturation, we have to start where actuaries start: with loss development patterns. These are not dusty artifacts from a pre-AI era; they’re compressed representations of how claims actually emerge over time. They are the essential context for any serious claims analytics effort.

Loss development is how we translate from “what we know now” to “what this accident year will ultimately cost.” For AI, triangles and development factors become structured inputs rather than mere compliance exercises. To see how, we need to understand the basic building blocks.

From Accident Year to Ultimate Loss: How Claims Really Mature

In P&C, we usually analyze development on an accident year basis, not underwriting year. Accident year groups all claims arising from occurrences within a calendar year, regardless of when the policy was written. That makes it the natural unit for tracking how losses evolve as they age.

Consider a workers’ compensation claim. The accident occurs in March 2024, but the claim might not be reported until April. An initial case reserve is set based on early medical assessments. Over the next few years, payments are made, case reserves are adjusted, maybe the claimant returns to work—or doesn’t. The incurred loss at each point (paid plus case reserves) is a moving target, reflecting the best estimate at that development age.

The gap between what’s reported and what will ultimately be paid is handled through IBNR (incurred but not reported) estimation, which in practice also covers further development on claims that are already reported. For an AI system doing claims lifecycle analytics, this maturation path is the reality it must respect. Accident year versus underwriting year distinctions, reporting lags, and the slow emergence of severity all shape the data the model sees.

Loss Development Triangles: The Actuarial Map of Maturation

Loss development triangles are simply tables that organize losses by accident year (rows) and development period (columns). You can build them for incurred losses, paid losses, claim counts, or severities. The idea is to line up each accident year’s experience at 12 months, 24 months, 36 months, and so on.
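To make the layout concrete, here is a minimal sketch that builds a cumulative paid triangle from payment records. The record shape, the amounts, and the `build_cumulative_triangle` helper are assumptions for illustration; it also assumes annual development periods and calendar-year valuations.

```python
from collections import defaultdict

# Hypothetical payment records: (accident_year, payment_year, paid_amount)
payments = [
    (2020, 2020, 100.0), (2020, 2021, 60.0), (2020, 2022, 30.0),
    (2021, 2021, 110.0), (2021, 2022, 70.0),
    (2022, 2022, 120.0),
]

def build_cumulative_triangle(records, ages=(12, 24, 36)):
    """Return {accident_year: {age_in_months: cumulative_paid}} for observed cells only."""
    incremental = defaultdict(lambda: defaultdict(float))
    latest_year = max(pay_year for _, pay_year, _ in records)
    for acc_year, pay_year, amount in records:
        age = (pay_year - acc_year + 1) * 12
        incremental[acc_year][age] += amount
    triangle = {}
    for acc_year in sorted(incremental):
        running, row = 0.0, {}
        for age in ages:
            # A cell exists only if its valuation year has already been reached
            if acc_year + age // 12 - 1 > latest_year:
                break
            running += incremental[acc_year][age]
            row[age] = running
        triangle[acc_year] = row
    return triangle

triangle = build_cumulative_triangle(payments)
for year, row in triangle.items():
    print(year, row)
```

Older accident years fill more columns than recent ones, which is what gives the table its triangular shape.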

From these grids, actuaries derive age-to-age development factors: how much losses grow from 12 to 24 months, 24 to 36, etc. Cumulatively, those factors tell us what proportion of ultimate has typically emerged by a given age. The runoff patterns in the triangle—how quickly rows fill in and level off—carry rich signals about reserve adequacy and portfolio health.
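As a sketch of that calculation, the snippet below derives volume-weighted age-to-age factors and chains them into age-to-ultimate factors. The triangle values are made up, and the tail factor of 1.0 (no development beyond 36 months) is an assumption chosen only to keep the example short.

```python
# Hypothetical cumulative paid triangle: {accident_year: {age_months: cumulative_paid}}
triangle = {
    2020: {12: 100.0, 24: 160.0, 36: 192.0},
    2021: {12: 110.0, 24: 176.0},
    2022: {12: 120.0},
}
ages = [12, 24, 36]

def volume_weighted_factors(tri, ages):
    """Age-to-age factor = sum of later-age losses / sum of earlier-age losses,
    taken over accident years observed at both ages."""
    factors = {}
    for a, b in zip(ages, ages[1:]):
        rows = [r for r in tri.values() if a in r and b in r]
        factors[(a, b)] = sum(r[b] for r in rows) / sum(r[a] for r in rows)
    return factors

def cumulative_factors(factors, ages, tail=1.0):
    """Age-to-ultimate factors, built from the oldest age backwards."""
    cdf = {ages[-1]: tail}
    for a, b in reversed(list(zip(ages, ages[1:]))):
        cdf[a] = factors[(a, b)] * cdf[b]
    return cdf

ata = volume_weighted_factors(triangle, ages)
cdf = cumulative_factors(ata, ages)
# Share of ultimate that has typically emerged by each age
pct_emerged = {age: 1.0 / f for age, f in cdf.items()}
print(ata, cdf, pct_emerged)
```

With these numbers, roughly half of ultimate has emerged by 12 months, so a 12-month snapshot would understate the final cost by a similar margin.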

Triangles are where you see shifts in reporting speed, changes in settlement practices, or case reserve strengthening. A simple 5x5 paid loss triangle for accident years 2018–2022, with development ages 12–60 months, already reveals whether your book tends to settle quickly or slowly and whether later cohorts are behaving differently from earlier ones.

Incurred vs Paid: Which Triangle Matters for AI Models?

Actuaries distinguish between incurred and paid triangles for a reason. Incurred losses (paid plus case reserves) are more responsive: they move as adjusters learn more and update reserves, including case reserve strengthening during adverse development. Paid losses are slower but more objective—cash has actually left the building.

For loss reserving, incurred triangles can react quickly to emerging information, but they’re vulnerable to changes in reserving philosophy. Paid triangles, by contrast, reflect actual settlement behavior and are less sensitive to reserving assumptions, though they lag. In AI terms, each triangle gives a different lens on claims maturation.

Rather than choosing one, robust claims analytics workflows should use both as structured inputs. Patterns in incurred versus paid losses, and the gap between them at each development age, are powerful signals. When incurred spikes but paid remains flat, for example, we might be seeing reserve strengthening, not worsening frequency. Feeding both triangles into the model preserves that nuance.
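A rough sketch of that comparison follows. The amounts are invented, and the flagging rule (case reserves growing faster than payments) is an assumed rule of thumb for illustration, not an actuarial standard.

```python
# Hypothetical cumulative values for one accident year, keyed by development age (months)
paid = {12: 40.0, 24: 75.0, 36: 80.0}
incurred = {12: 100.0, 24: 110.0, 36: 140.0}

def reserve_signals(paid, incurred):
    """Paid-to-incurred ratio per age, plus movements in payments and case reserves."""
    ages = sorted(paid)
    rows = []
    for i, age in enumerate(ages):
        case = incurred[age] - paid[age]
        row = {"age": age, "paid_to_incurred": paid[age] / incurred[age], "case_reserve": case}
        if i > 0:
            prev = ages[i - 1]
            row["paid_change"] = paid[age] - paid[prev]
            row["case_change"] = case - (incurred[prev] - paid[prev])
        rows.append(row)
    return rows

signals = reserve_signals(paid, incurred)
# Assumed rule of thumb: case reserves growing faster than payments hints at strengthening
strengthening_ages = [r["age"] for r in signals
                      if "case_change" in r and r["case_change"] > r["paid_change"]]
print(strengthening_ages)
```

In this example, incurred jumps at 36 months while paid barely moves, so only that age is flagged.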

How to Integrate Loss Development Triangles into Insurance AI Models

The good news is that integrating triangles into insurance AI analytics is more about discipline than rocket science. You’re essentially turning classical actuarial structures into well-organized features and targets. The goal is a loss development modeling stack in which every model is aware of age, cohort, and development context.

Think of loss development triangles as the scaffolding on which you hang your ML features, targets, and validations. Once you have that scaffolding, you can safely explore more advanced ideas, such as applying machine learning directly to the triangles, without tripping over basic time-structure mistakes.

Start with Cohort-Based Modeling, Not Random Rows

Most data scientists start with a flat claims table: one row per claim, with policy attributes and outcomes. That’s fine for micro-level severity or fraud models, but dangerous for portfolio-level cohort-based modeling of loss ratios or ultimates. For development-aware reserving and pricing analytics, the natural unit is the accident year (or policy year) cohort at a given development age.

Practically, this means constructing a grid where each row is an accident year and each column is a development age (12, 24, 36 months, etc.). For each cell, you align earned and written premium, exposures, claim counts, and cumulative incurred and paid losses. You also lock in an “information cut” date—say, 31 December 2023—so that all features used for modeling respect what was knowable at that time, limiting data leakage in time series.
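The grid and the information cut can be sketched as follows. Field names such as `valued_on` and the `build_panel` helper are hypothetical, and the example assumes year-end valuation snapshots.

```python
from datetime import date

INFO_CUT = date(2023, 12, 31)  # nothing valued after this date may enter the panel

# Hypothetical valuation snapshots: one row per accident year and valuation date
snapshots = [
    {"accident_year": 2021, "valued_on": date(2021, 12, 31), "earned_premium": 1000.0, "incurred": 450.0},
    {"accident_year": 2021, "valued_on": date(2022, 12, 31), "earned_premium": 1000.0, "incurred": 560.0},
    {"accident_year": 2021, "valued_on": date(2023, 12, 31), "earned_premium": 1000.0, "incurred": 610.0},
    {"accident_year": 2022, "valued_on": date(2022, 12, 31), "earned_premium": 1200.0, "incurred": 500.0},
    {"accident_year": 2022, "valued_on": date(2023, 12, 31), "earned_premium": 1200.0, "incurred": 660.0},
    {"accident_year": 2022, "valued_on": date(2024, 12, 31), "earned_premium": 1200.0, "incurred": 750.0},
]

def build_panel(rows, info_cut):
    """One record per (accident year, development age), restricted to the information cut."""
    panel = {}
    for r in rows:
        if r["valued_on"] > info_cut:
            continue  # would leak information from after the cut date
        age = (r["valued_on"].year - r["accident_year"] + 1) * 12
        panel[(r["accident_year"], age)] = {
            "earned_premium": r["earned_premium"],
            "incurred": r["incurred"],
            "incurred_loss_ratio": r["incurred"] / r["earned_premium"],
        }
    return panel

panel = build_panel(snapshots, INFO_CUT)
print(sorted(panel))
```

Rebuilding the panel with an earlier cut date reproduces what the model would have seen at that time, which is useful for later back-testing.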

Once you have this cohort-by-age table, you can safely do exposure-based modeling using earned and written premium, attach external signals, and ensure that training and validation mimic production conditions. You’ve turned messy, overlapping claim histories into a consistent panel suitable for robust predictive modeling for P&C insurers.

Feature Engineering from Triangles: Turning Patterns into Signals

With triangles and cohort tables in place, the real fun is feature engineering. You can create cumulative paid and incurred ratios at each age, incremental-to-cumulative ratios (how much emerged this year relative to what we already knew), and rolling estimates of development factors. You can also split features by claims severity and frequency—separate claim count development from average severity development.

For a workers’ compensation portfolio, useful features might include:

- Cumulative paid loss ratio at 12, 24, 36 months.

- Cumulative incurred loss ratio at 12, 24, 36 months.

- Paid-to-incurred ratios by age (how aggressive or conservative reserving is).

- Change in case reserves between ages (reserve strengthening or weakening).

- Claim count development factors (reported count at 24 vs 12 months).

- Average severity at each age and its growth rate.

- Indicators for short-tail vs long-tail segments or coverage parts.

- External inflation indices or wage growth for severity pressure.

These features translate raw loss development patterns into quantitative signals that an ML model can learn from. They also make your model more interpretable: you can trace predictions back to understandable shifts in development factors, not just black-box embeddings.
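Several of the features listed above can be derived in a few lines once the cohort history is in place. This is a sketch with invented numbers; the `cohort` layout and the feature names are assumptions, and external indices are left out for brevity.

```python
# Hypothetical cohort history for one accident year, keyed by development age (months)
cohort = {
    "earned_premium": 1000.0,
    "paid": {12: 150.0, 24: 330.0},
    "incurred": {12: 400.0, 24: 520.0},
    "reported_count": {12: 80, 24: 100},
}

def development_features(c, as_of_age):
    """Features observable at as_of_age, using only ages up to and including it."""
    ages = [a for a in sorted(c["paid"]) if a <= as_of_age]
    feats = {}
    for a in ages:
        feats[f"paid_lr_{a}"] = c["paid"][a] / c["earned_premium"]
        feats[f"incurred_lr_{a}"] = c["incurred"][a] / c["earned_premium"]
        feats[f"paid_to_incurred_{a}"] = c["paid"][a] / c["incurred"][a]
        feats[f"avg_severity_{a}"] = c["incurred"][a] / c["reported_count"][a]
    for prev, cur in zip(ages, ages[1:]):
        case_prev = c["incurred"][prev] - c["paid"][prev]
        case_cur = c["incurred"][cur] - c["paid"][cur]
        feats[f"case_reserve_change_{prev}_{cur}"] = case_cur - case_prev
        feats[f"count_dev_{prev}_{cur}"] = c["reported_count"][cur] / c["reported_count"][prev]
    return feats

features = development_features(cohort, as_of_age=24)
print(features)
```

Passing `as_of_age=12` returns only the 12-month features, so the same function serves both mature training cohorts and young production cohorts.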

Targets That Respect Development: Ultimate Loss and Loss Ratios

The next design choice is targets. If you train on immature incurred or paid losses, you’re effectively teaching your model to reproduce underdeveloped states. Instead, targets should be some form of ultimate loss projection or ultimate loss ratio at a fixed horizon, aligning with IBNR estimation and reserving objectives.

For long-tail lines, one approach is to use older accident years where ultimates are largely settled as your training set. Suppose in 2024 you take accident years 2005–2014; by now, their ultimates are mostly known. You then train models to predict those ultimates using information available at, say, 12 or 24 months. This teaches the model the mapping from early-age features to long-term outcomes.
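A minimal sketch of that split follows. The ten-year maturity threshold, the loss ratios, and the `split_by_maturity` helper are assumptions for illustration; the right threshold depends on the line of business.

```python
VALUATION_YEAR = 2024
MIN_MATURITY_YEARS = 10  # assumption: cohorts at least this old are treated as near-ultimate

# Hypothetical cohort summaries: early-age loss ratio and latest-diagonal loss ratio
cohorts = [
    {"accident_year": 2008, "incurred_lr_12": 0.52, "latest_lr": 0.74},
    {"accident_year": 2012, "incurred_lr_12": 0.55, "latest_lr": 0.71},
    {"accident_year": 2014, "incurred_lr_12": 0.50, "latest_lr": 0.69},
    {"accident_year": 2021, "incurred_lr_12": 0.57, "latest_lr": 0.63},
]

def split_by_maturity(rows, valuation_year, min_maturity_years):
    """Mature cohorts become (features, target) pairs; immature ones are scoring-only."""
    train, score = [], []
    for r in rows:
        age_years = valuation_year - r["accident_year"]
        features = {"incurred_lr_12": r["incurred_lr_12"]}
        if age_years >= min_maturity_years:
            train.append((features, r["latest_lr"]))  # latest value stands in for ultimate
        else:
            score.append((r["accident_year"], features))  # no trustworthy label yet
    return train, score

train_pairs, to_score = split_by_maturity(cohorts, VALUATION_YEAR, MIN_MATURITY_YEARS)
print(len(train_pairs), [year for year, _ in to_score])
```

The 2021 cohort is kept out of training because its latest loss ratio is still immature and would teach the model an understated target.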

There is a trade-off here. Longer history gives more mature targets but fewer cohorts; shorter history yields more cohorts but more uncertainty in ultimates. Development-aware loss ratio projection models need to strike a balance, sometimes using stochastic ranges or blending with actuarial priors to handle residual uncertainty in the training labels.

Blending Machine Learning with Chain Ladder and Bornhuetter–Ferguson

There’s a temptation to think that ML will “replace” classical actuarial methods like the chain ladder method or Bornhuetter–Ferguson. In practice, the best systems use ML to complement these frameworks, not supplant them. Actuarial techniques embody decades of domain knowledge about loss reserving and provide a clear, auditable structure.

One powerful pattern for AI-powered loss reserving is to let ML forecast development factors or segment-specific priors, which then feed into a BF or chain ladder engine. The AI learns non-linearities and interactions (by line, territory, limit, attachment, and distribution channel) that are too granular for a traditional triangle analysis, while the actuary retains control of the overall structure.

Academic and practitioner work on integrating ML with BF/CL suggests that this hybrid approach can reduce bias and volatility. Instead of throwing away the triangle, you turn it into a baseline and let ML explain the residuals at a finer level. The result is an AI-powered reserving and development analytics stack that is both powerful and explainable.
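To illustrate the hybrid pattern, the sketch below feeds a model-supplied prior loss ratio into the standard Bornhuetter–Ferguson formula and compares it with a chain ladder estimate. The `ml_prior_loss_ratio` function is a placeholder standing in for a fitted model, and all numbers are made up.

```python
def bornhuetter_ferguson_ultimate(reported, earned_premium, prior_loss_ratio, cdf):
    """BF ultimate = reported + expected unreported, where
    expected unreported = premium * prior loss ratio * (1 - 1/CDF)."""
    pct_unreported = 1.0 - 1.0 / cdf
    return reported + earned_premium * prior_loss_ratio * pct_unreported

def chain_ladder_ultimate(reported, cdf):
    """Chain ladder ultimate = reported * age-to-ultimate factor."""
    return reported * cdf

def ml_prior_loss_ratio(segment):
    """Placeholder for a fitted model's output; the values here are made up."""
    return {"small_commercial": 0.62, "large_accounts": 0.78}[segment]

reported, premium, cdf = 300.0, 1000.0, 2.0
prior = ml_prior_loss_ratio("large_accounts")
bf = bornhuetter_ferguson_ultimate(reported, premium, prior, cdf)
cl = chain_ladder_ultimate(reported, cdf)
print(f"BF ultimate: {bf:.1f}, chain ladder ultimate: {cl:.1f}")
```

The actuarial formula stays fixed and auditable; only the prior changes by segment, which is where the ML contribution is isolated.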

Development-Aware Projection Techniques That Stay Stable Over Time

Once triangles, features, and targets are development-aware, the next challenge is projection stability. It’s not enough for a model to be accurate at a single cut date; it must behave sensibly as time passes and accident years mature. This is where a development-aware insurance analytics platform differentiates itself from a generic BI tool with some ML sprinkled on top.

The key ingredients are scenario-based projections, rigorous back-testing against historical development, and drift detection on runoff patterns. Together, these make your stack look much more like modern stochastic reserving practice than point-estimate forecasting.

Scenario-Based Ultimate Projections Instead of Single-Point Bets

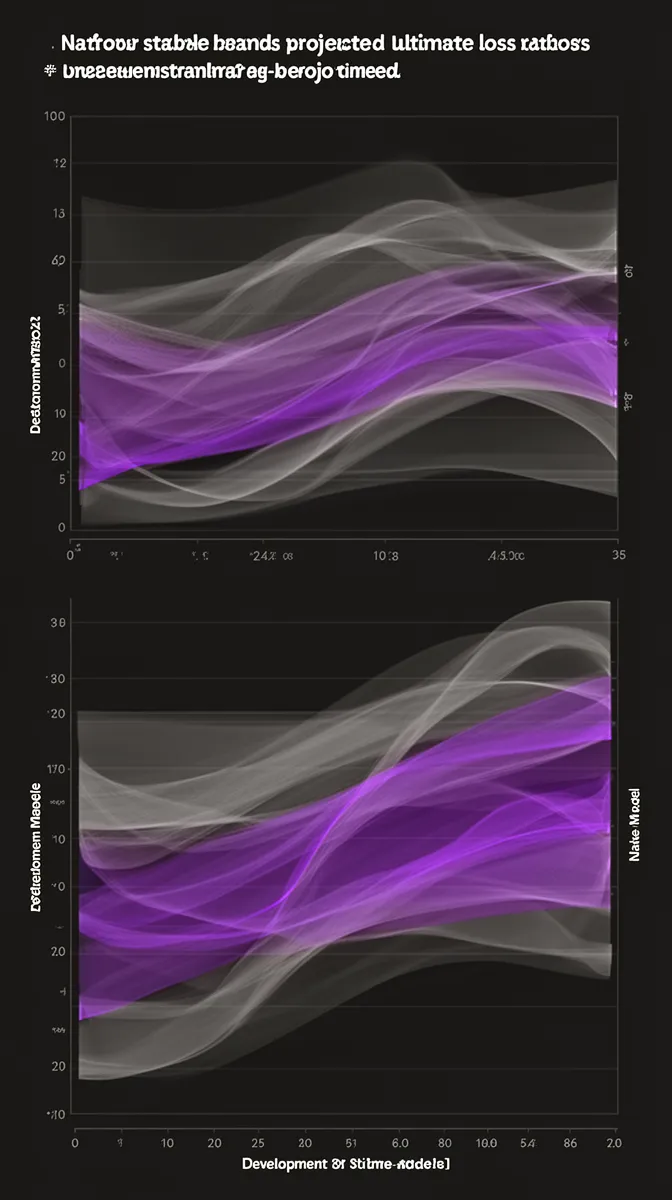

Real portfolios don’t have a single deterministic future. For reserving and capital, we care about ranges: optimistic, base, pessimistic scenarios, and full distributions. Development-aware ultimate loss projection models should output more than one number.

By layering stochastic techniques on top of ML forecasts, you can estimate distributions of possible outcomes. For example, a specialty liability book might have a base projected ultimate loss ratio of 65%, but the 90% confidence band could be 55–80%. That band is crucial for reserve adequacy reviews and capital allocation decisions, especially under Solvency II or RBC-style regimes.
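One simple way to produce such a band is to resample historical development factors and chain them forward. The sketch below is a deliberately simplified illustration, not a full stochastic reserving method: it ignores process variance and tail development, and the factors, starting loss ratio, and helper names are all assumptions.

```python
import random

# Hypothetical observed age-to-age factors per development interval, one per past accident year
observed_factors = {
    (12, 24): [1.55, 1.62, 1.70, 1.58],
    (24, 36): [1.18, 1.22, 1.25],
    (36, 48): [1.05, 1.08],
}

def simulate_ultimate_loss_ratios(current_lr, factors, n_sims=5000, seed=0):
    """Resample one historical factor per interval and chain them to an ultimate loss ratio."""
    rng = random.Random(seed)
    sims = []
    for _ in range(n_sims):
        lr = current_lr
        for interval in sorted(factors):
            lr *= rng.choice(factors[interval])
        sims.append(lr)
    return sorted(sims)

def percentile(sorted_values, q):
    """Simple rank-based percentile on an already sorted list, q in [0, 1]."""
    idx = min(len(sorted_values) - 1, max(0, round(q * (len(sorted_values) - 1))))
    return sorted_values[idx]

sims = simulate_ultimate_loss_ratios(current_lr=0.30, factors=observed_factors)
low, base, high = (percentile(sims, q) for q in (0.05, 0.50, 0.95))
print(f"5th: {low:.3f}, median: {base:.3f}, 95th: {high:.3f}")
```

In a production setting, the resampled factors would typically be replaced by segment-level distributions, with ML forecasts supplying their parameters.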

Industry reports from bodies like the CAS and IAA on stochastic reserving and reserve uncertainty provide practical frameworks you can align with. ML becomes a way to parameterize these frameworks with richer, segment-level information rather than replacing them outright.

Monitoring Stability: Back-Testing Against Historical Development

Stability isn’t something you assert; it’s something you measure. A robust development-aware system routinely back-tests models by pretending to stand at past cut dates and projecting ultimates for older cohorts, then comparing those projections to the realized ultimates today. This reveals how projections drifted as more data came in.

In a naïve setup, you might see reserves revised upward by 10–15 loss ratio points as accident years 2018–2020 mature—an indication that your models or methods were chronically underestimating. In a development-aware setup, you expect revisions, but within a narrower, explainable band: maybe within 2–3 points, with clear links to external shocks like legal changes or inflation spikes.

Metrics here include average revision magnitude, direction of bias (over- or under-reserving), and stability of projections across successive valuation dates. For predictive modeling for P&C insurers, this kind of back-testing is as important as traditional accuracy metrics like RMSE or MAPE.
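These metrics are straightforward to compute once projections are stored by valuation date. The sketch below uses invented projection histories; the `projections` layout and the metric names are assumptions.

```python
# Hypothetical history: projected ultimate loss ratio per accident year at successive
# valuation dates, plus the realized (or latest, near-final) ultimate loss ratio
projections = {
    2016: {"by_valuation": [0.58, 0.63, 0.68, 0.71], "realized": 0.72},
    2017: {"by_valuation": [0.60, 0.64, 0.66, 0.67], "realized": 0.68},
    2018: {"by_valuation": [0.66, 0.65, 0.67, 0.66], "realized": 0.66},
}

def backtest_metrics(history):
    """Average revision size, bias of the first projection, and share of upward revisions."""
    revisions, first_errors = [], []
    for h in history.values():
        path = h["by_valuation"]
        revisions += [later - earlier for earlier, later in zip(path, path[1:])]
        first_errors.append(path[0] - h["realized"])
    return {
        "mean_abs_revision": sum(abs(r) for r in revisions) / len(revisions),
        "mean_initial_bias": sum(first_errors) / len(first_errors),  # negative = under-projection
        "share_upward": sum(r > 0 for r in revisions) / len(revisions),
    }

metrics = backtest_metrics(projections)
print(metrics)
```

A negative initial bias combined with a high share of upward revisions is the signature of a model that treats immature losses as close to ultimate.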

Detecting Abnormal Shifts in Development Patterns

Even the best-trained models can be blindsided when the world changes. That’s why a modern development-aware insurance analytics platform also monitors the triangles themselves for abnormal shifts. Instead of assuming yesterday’s development factors will hold, it uses AI to detect pattern changes in near real-time.

Suppose a legal reform accelerates bodily injury settlements in a key jurisdiction. You would see paid emergence shift toward earlier ages: the proportion of ultimate paid by 12 or 24 months rises, and paid age-to-age factors move away from their historical levels (a factor rises where payments are pulled into its interval and falls at the later ages they were pulled from). A good system flags this as a structural change, not random noise, prompting actuaries and underwriting teams to reassess assumptions.

Drift detection on incurred versus paid patterns, case reserve strengthening, and claim count emergence by segment becomes a core element of claims analytics. Instead of finding out about portfolio deterioration during an annual reserve review, you get early warning signals as soon as runoff patterns deviate from history.
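A basic version of such a check compares the newest development factor with its historical baseline. The sketch below uses a z-score with an assumed threshold of three standard deviations; the factor values and function names are hypothetical, and a real monitor would also need to handle short histories and trending baselines.

```python
from statistics import mean, stdev

# Hypothetical 12-to-24 month paid age-to-age factors, oldest accident year first
history = [1.60, 1.58, 1.63, 1.61, 1.59, 1.62]
latest = 1.78

def drift_z_score(baseline, new_value):
    """Standardized distance of the newest factor from its historical baseline."""
    return (new_value - mean(baseline)) / stdev(baseline)

def is_drifting(baseline, new_value, threshold=3.0):
    """Flag the factor when it sits more than `threshold` standard deviations from history."""
    return abs(drift_z_score(baseline, new_value)) > threshold

z = drift_z_score(history, latest)
alert = is_drifting(history, latest)
print(f"z-score: {z:.1f}, alert: {alert}")
```

Running the same check per segment and per triangle type (paid, incurred, counts) helps localize where the pattern changed.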

Common Pitfalls When Training AI on Immature Loss Data

So far, we’ve focused on how to build AI claims analytics that account for loss maturation correctly. It’s equally important to recognize the traps that appear when you don’t. These pitfalls show up in almost every insurer experimenting with AI on claims and reserving data.

They usually stem from a single root cause: treating immature losses as if development doesn’t exist. Once you see them, you can’t unsee them—and you can redesign your analytics pipelines to avoid them.

Confusing Speed of Settlement with Lower Severity

One common error is misreading operational changes as risk improvements. Suppose you introduce a fast-track claims process that settles small auto claims within 30 days instead of 90. Your dashboards based on immature data now show shorter average open durations and a lower average severity on closed claims, which makes early results look attractive.

If your models don’t control for development, they may “learn” that faster settlement equals lower severity and reward teams for closing claims quickly. In reality, all you’ve done is front-load payments while the tail of more complex claims is still maturing. Proper claims lifecycle analytics must distinguish between process speed and true improvements in claims severity and frequency.

Ignoring IBNR and Late-Reported Claims

Another pitfall is forgetting about claims that haven’t appeared yet. In long-tail lines with significant late-reporting—general liability, medical malpractice, professional liability—the visible reported losses at 12 or 24 months can severely understate reality. Training on raw reported losses bakes that understatement into your models.

For example, accident year 2022 GL might show a 60% loss ratio on reported losses as of December 2023. Traditional triangulation, applying the line’s loss development patterns, might imply IBNR that lifts the ultimate loss ratio to 85%. If your AI models ignore that and focus on the 60%, they’ll project overly rosy outcomes and may even incentivize under-reserving.
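The arithmetic behind that example is worth spelling out. The loss ratios come from the example above; the premium figure and the `ibnr_amount` helper are hypothetical, and IBNR is used here in the broad sense that includes development on known claims.

```python
reported_lr = 0.60  # accident year 2022 GL, reported loss ratio at December 2023
ultimate_lr = 0.85  # ultimate loss ratio implied by the development pattern

implied_cdf = ultimate_lr / reported_lr    # age-to-ultimate factor, about 1.42
ibnr_lr = ultimate_lr - reported_lr        # unreported portion, in loss ratio points
pct_reported = reported_lr / ultimate_lr   # share of ultimate visible so far, about 71%

def ibnr_amount(earned_premium, reported_lr, ultimate_lr):
    """Currency value of the gap between reported and ultimate losses."""
    return earned_premium * (ultimate_lr - reported_lr)

# Hypothetical earned premium of 10 million
print(f"CDF {implied_cdf:.3f}, IBNR {ibnr_lr:.0%} of premium, reported share {pct_reported:.1%}")
print(f"IBNR amount: {ibnr_amount(10_000_000, reported_lr, ultimate_lr):,.0f}")
```

A model trained on the 60% figure is effectively missing about 29% of the ultimate cost for this cohort.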

Development-aware designs treat IBNR as a first-class target component. Either you train models directly on ultimate estimates that already include IBNR (e.g., from an actuarial engine), or you build explicit submodels for reporting lags and late claims in addition to severity and frequency.

Mixing Business Mix Changes with Development Signals

The third trap is conflating portfolio mix shifts with genuine development pattern changes. If you move from small commercial to large accounts, or from low to high limits, your claims will naturally develop more slowly and with larger late-emerging severities. Unsegmented models that treat all business as homogeneous will misinterpret this as a worsening of development behavior.

Robust exposure-based modeling separates these effects. You segment by line, territory, limit, attachment, and distribution channel, and you design features that distinguish portfolio mix from maturation. That way, when claims severity and frequency change because you’re writing different risks, the model doesn’t wrongly attribute it to changes in development factors themselves.

This is also where collaboration between underwriting, pricing, and reserving is critical. Analytics teams need context on strategic shifts so they don’t overfit models to transient patterns or misread deliberate business moves as data anomalies.

How Buzzi.ai Builds Development-Aware Insurance AI Analytics

Designing insurance AI analytics that survive real loss development isn’t just a modeling problem; it’s an architecture and governance problem. At Buzzi.ai, we’ve built our platform specifically to handle the realities of claims maturation, loss development triangles, and regulatory expectations around explainability.

The result is a development-aware insurance analytics platform that combines actuarial rigor with modern ML workflows, so your AI doesn’t break as accident years age. Instead of fighting with legacy reserving processes, our stack plugs into them, turning them into assets rather than obstacles.

Architecture: From Raw Policy and Claims Data to Development-Aware Features

Our starting point is always the data pipeline. We ingest policy, exposure, and claims data from core systems; reconcile them; and construct incurred and paid loss development triangles by line, segment, and legal entity. This works across short-tail and long-tail lines, and in multi-entity or multi-TPA setups.

From there, we generate development-aware cohort tables—accident-year by development age—with aligned earned and written premiums, exposures, claim counts, and cumulative losses. On top of those structures, we derive features like development ratios, reporting lags, severity trends, and segment-level risk indicators. All transformations are versioned at the data-cut level, so you have an auditable trail of what was known when.

If you’re looking to modernize reserving, pricing, or portfolio steering, our predictive analytics and forecasting services start with this foundation. We don’t shortcut the hard work of building triangles and development-aware features; we industrialize it.

Blending Actuarial Expertise with Modern ML Workflows

Buzzi.ai doesn’t try to bypass actuarial teams; we work with them. Our platform includes configurable modules for classical actuarial models—the chain ladder method, Bornhuetter–Ferguson, and related techniques—alongside ML components. Actuaries can set priors, choose tail factors, and define segmentation schemes, while data scientists experiment with more flexible learners.

In a typical engagement, we co-design development-aware models with reserving and pricing actuaries. For a specialty liability book, for example, we might use ML to forecast development factors by industry and limit band, then feed these into a BF engine. The actuaries retain interpretability and control; the AI surfaces patterns that would be invisible in aggregate triangles.

We also align with regulatory expectations for model risk management and explainability, drawing on guidance from bodies like the NAIC and EIOPA. External reports on AI governance in insurance inform our documentation standards, so your models can be defended in front of boards, auditors, and supervisors.

Operationalizing Development-Aware Analytics Across Pricing, Reserving, and Portfolio Steering

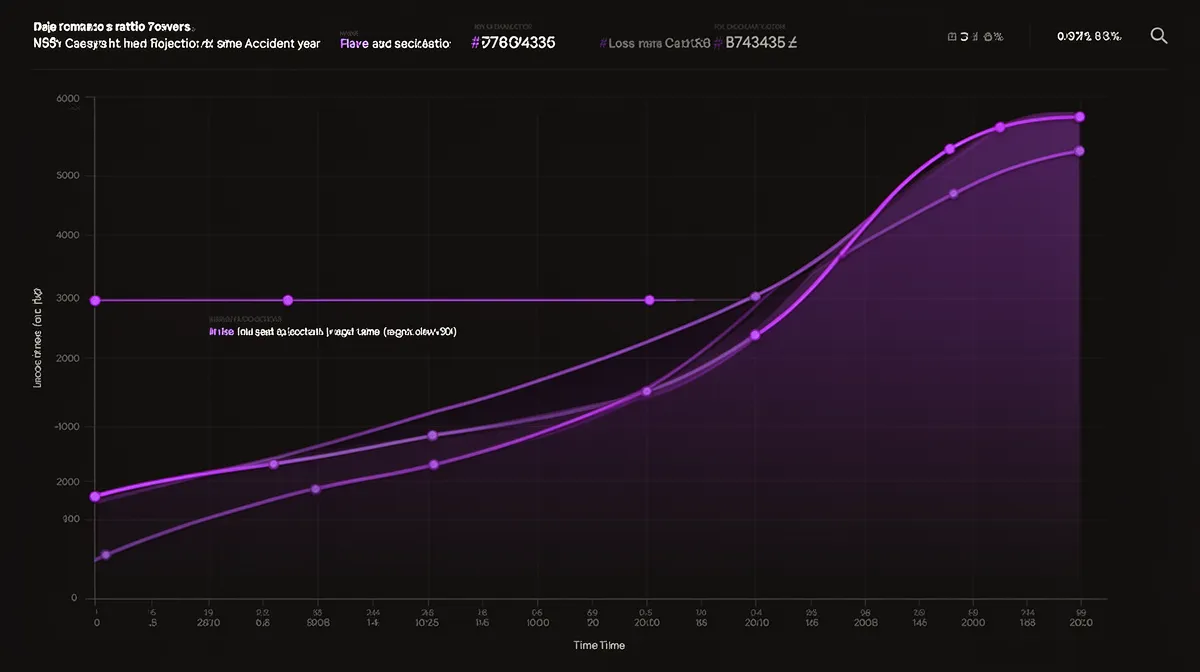

Development-aware analytics are only valuable if they show up where decisions are made. In Buzzi.ai, ultimate loss ratio projections, stability metrics, and drift alerts are exposed via dashboards and APIs that plug into quarterly reserving cycles, pricing tools, and reinsurance discussions. You can see, for each accident year and segment, how projections have evolved and why.

Reserving committees can review projections alongside historical back-tests, seeing whether models systematically under- or over-reserve. Pricing teams can adjust rate indications based on development-aware loss ratio views rather than immature snapshots. Capital and reinsurance teams can rely on ranges around development-aware loss projections, not just point estimates.

The net effect is an AI claims analytics layer that accounts for loss maturation and supports reserving and pricing rather than fighting them. We integrate with existing systems rather than demanding wholesale replacement, so you can evolve toward a more development-aware posture without a multi-year rip-and-replace.

Conclusion: Make Loss Development a First-Class Citizen in Insurance AI

When insurance AI analytics ignore loss development, disappointment is inevitable. Models look good on young accident years and then drift badly as claims mature, forcing reserve strengthening and eroding trust. The root cause is simple: treating immature incurred or paid losses as if they were ultimates.

The cure is to embed loss development triangles, development factors, and maturity-aware targets into your AI stack from day one. Treat triangles as structured inputs, not legacy artifacts; design development-aware insurance analytics that respect accident-year age; and blend actuarial methods like chain ladder and Bornhuetter–Ferguson with ML rather than replacing them. Done right, your projections stay stable, your IBNR estimates are defensible, and your dashboards support real decisions in pricing, reserving, and capital.

This is exactly the approach we’ve built into Buzzi.ai. If you’re wondering whether your current analytics will still look trustworthy as recent accident years age, it’s time to reassess. Explore a development-aware approach with us and schedule an insurance AI discovery session to review your loss development challenges and data readiness.

FAQ

Why do traditional insurance AI analytics fail when they ignore loss development?

They fail because they treat immature incurred or paid losses as if they were close to ultimate, ignoring how long it takes for claims to fully mature. This leads to underestimation of true loss ratios, particularly for long-tail lines. As accident years age, projections drift upward, eroding confidence in both models and management decisions.

What are loss development patterns and why do they matter for insurance AI models?

Loss development patterns describe how claims costs emerge over time, typically visualized through loss development triangles by accident year and development age. They show what fraction of ultimate losses has emerged at each age and how quickly portfolios run off. For AI, these patterns provide essential context, preventing models from confusing early, partial information with final outcomes.

How do incurred and paid loss triangles feed into AI and machine learning workflows?

Incurred and paid triangles become structured inputs and feature sources for development-aware models. From them, you derive cumulative ratios, development factors, reporting lags, and severity/frequency metrics at each age. Feeding both incurred and paid views lets AI distinguish between reserve movements and real changes in payment behavior, improving both accuracy and explainability.

What is the difference between predicting reported losses and ultimate losses with AI?

Predicting reported losses focuses on what has been reported or paid so far, which is inherently incomplete for immature accident years. Predicting ultimate losses aims to estimate the total cost of all claims for a cohort, including IBNR and future development. For reserving and capital decisions, ultimate-focused models are far more relevant, especially in lines with long reporting lags and tail development.

How can we integrate loss development factors into existing insurance analytics pipelines?

Start by building accident-year by development-age tables and loss development triangles from your existing policy and claims data. Then derive development factors and related features (like emergence ratios) and feed them into your current BI tools or ML models as additional fields. Over time, you can move toward a fully development-aware architecture, or work with partners like Buzzi.ai to accelerate that transition.

Which lines of business are most sensitive to ignoring loss development in AI models?

Long-tail lines—general liability, workers’ compensation, medical malpractice, professional liability—are the most sensitive because a large share of their ultimate losses emerges many years after the accident year. These portfolios often have significant IBNR and reserve volatility. Ignoring development here typically leads to chronic under-reserving and frequent reserve strengthening events.

How can machine learning work alongside chain ladder and Bornhuetter–Ferguson instead of replacing them?

Machine learning can enhance chain ladder and Bornhuetter–Ferguson by forecasting segment-specific development factors, priors, or residual adjustments based on richer feature sets. You still use the classical methods as structural backbones, ensuring transparency and regulatory comfort. ML then refines estimates at a granular level, capturing non-linearities across segments that traditional triangle analysis can’t easily see.

What data structures and features are needed for development-aware insurance AI analytics?

You need well-constructed accident-year by development-age panels, plus incurred and paid triangles by line and segment. On top of that, features such as cumulative loss ratios, emergence factors, paid-to-incurred ratios, claim count development, severity trends, and external inflation or legal indicators are critical. These structures let AI learn how early-age signals map to ultimate outcomes in a way that respects claims maturation.

How should insurers validate AI models when losses are still maturing and IBNR is significant?

Insurers should use back-testing across historical cut dates, comparing past projections to today’s realized ultimates, rather than relying only on cross-validation metrics. They should also evaluate stability (how much projections revise over time) and bias (systematic under- or over-reserving). For lines with high IBNR, scenario-based and stochastic reserving techniques can help reflect uncertainty in both targets and forecasts.

How can Buzzi.ai help implement a development-aware insurance analytics platform quickly?

Buzzi.ai provides an end-to-end platform that ingests policy and claims data, builds incurred and paid triangles, and generates development-aware features and targets out of the box. We blend actuarial models with ML workflows so you can move from spreadsheets to a scalable, explainable analytics stack. Our team works with your actuaries and data teams to deploy dashboards, APIs, and governance tailored to your portfolio, accelerating time-to-value.