Machine Vision Development That Survives the Factory Floor

Machine vision development for factories demands uptime, deterministic latency, and PLC/MES integration. Learn the industrial approach—and how Buzzi.ai builds it.

If your vision system can’t hit cycle time, survive dust and vibration, and talk to a PLC reliably, it isn’t “computer vision in a factory”—it’s downtime waiting to happen. That’s the uncomfortable truth behind a lot of machine vision development efforts: the model looks great in a lab demo, and then collapses the moment it meets real conveyors, real operators, and real changeovers.

Industrial teams don’t buy “accuracy.” They buy outcomes: fewer defects shipped, less scrap, higher OEE, fewer line stops, and better traceability. In industrial machine vision, a one-in-a-thousand glitch that would be a rounding error in a Kaggle notebook becomes a shift supervisor’s nightmare.

This article is a deployment-first guide to factory floor deployment realities: reliability requirements, environmental design (optics, lighting, enclosures), OT integration patterns (PLC/SCADA/MES), testing discipline (FAT/SAT), and how to evaluate an industrial machine vision development services partner. We’ll keep the mental model simple: treat vision as an industrial control subsystem, not a standalone AI model.

At Buzzi.ai, we build tailor-made AI systems and agents that automate work in messy, real-world conditions. The same mindset applies here: industrial automation is unforgiving, so engineering has to be.

Machine vision development vs computer vision: the real difference

Computer vision is a field. Machine vision development is a product discipline. They share tools—cameras, models, datasets—but they optimize for different things.

In practice, “computer vision” projects often stop at a trained model and a demo UI. “Industrial machine vision” projects start at the station: triggers, lighting, IO timing, operator workflows, and what happens at 2 a.m. when something goes slightly wrong.

Computer vision optimizes accuracy; machine vision optimizes outcomes

Accuracy is a means, not an end. A factory cares about a stack of KPIs that are closer to the business and closer to the line:

- Scrap rate and rework rate

- False reject rate (good parts flagged bad) and missed defect rate (bad parts flagged good)

- Cycle time constraints (does the station hit the window, every time?)

- Impact on OEE: availability, performance, quality

- Auditability: can QA explain why a part was rejected?

Costs are asymmetric in manufacturing. A false reject is annoying and expensive; a false accept can be catastrophic (warranty claims, safety incidents, recall risk). That asymmetry changes how we set thresholds, how we route borderline cases, and how we design fallback behavior.
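One way to make that asymmetry concrete is to pick the reject threshold by expected cost instead of accuracy. The sketch below is illustrative only: the scores, labels, cost figures, and the function names `expected_cost` and `pick_threshold` are assumptions, not values from a real line.

```python
from typing import Sequence


def expected_cost(threshold: float,
                  scores: Sequence[float],
                  is_defect: Sequence[bool],
                  cost_false_reject: float,
                  cost_false_accept: float) -> float:
    """Total cost on a labeled sample if parts scoring >= threshold are rejected."""
    cost = 0.0
    for score, defect in zip(scores, is_defect):
        rejected = score >= threshold
        if rejected and not defect:
            cost += cost_false_reject   # good part scrapped or reworked
        elif not rejected and defect:
            cost += cost_false_accept   # defect escapes downstream
    return cost


def pick_threshold(scores, is_defect, cost_false_reject, cost_false_accept):
    """Return the candidate threshold with the lowest total cost."""
    # Candidates are the observed scores, plus "never reject".
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates,
               key=lambda t: expected_cost(t, scores, is_defect,
                                           cost_false_reject, cost_false_accept))


# Hypothetical defect scores (higher = more likely defective) and ground truth.
scores = [0.05, 0.10, 0.30, 0.45, 0.50, 0.55, 0.70, 0.90]
is_defect = [False, False, False, True, False, False, True, True]

symmetric = pick_threshold(scores, is_defect, 1.0, 1.0)
asymmetric = pick_threshold(scores, is_defect, 1.0, 50.0)
print(f"equal costs -> threshold {symmetric}")
print(f"escape costs 50x -> threshold {asymmetric}")
```

With equal costs the sample favors a high threshold and tolerates one escape; once an escape is priced at 50 times a false reject, the threshold drops and the station accepts a couple of extra false rejects instead.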

A common failure vignette: a model shows 98–99% validation accuracy, but at shift change the lighting warms up, reflections change, and the system spikes false rejects. The line slows or stops, operators lose trust, and the “high-accuracy” model becomes a high-friction system.

Factories are adversarial environments (even when nobody is attacking you)

We tend to treat “distribution shift” like a research concept. On the factory floor, it’s Tuesday. The environment changes constantly and often invisibly:

- Dust, oil mist, coolant spray, and lens contamination

- Vibration from nearby presses; forklift bumps that change alignment

- Heat and temperature gradients; condensation after washdown

- EMI from motors and VFDs; grounding issues

- Lighting drift over shifts; bulb aging; maintenance swaps “equivalent” lights

- Specular reflections on shiny parts; glare that looks like a defect

- Product variation and changeovers that create permanent micro-shifts

In commissioning, “line realities” show up fast: a protective window gets scratched; a compressed-air cleaning nozzle is aimed slightly wrong; a bracket loosens; a cable gets tugged during maintenance. Environmental robustness isn’t a nice-to-have—it’s what keeps quality inspection stable.

Why integration is the product (not the model)

In industrial automation, the model’s output only matters if the rest of the system can act on it reliably. Vision has to trigger something: a reject gate, a robot pick, an alarm, a rework route, or a production stop. That means PLC integration and deterministic timing are part of the core product.

The OT/IT boundary makes this more complex. PLCs and robots need time-critical, deterministic signals. MES/SCADA/QA systems need traceability, images, and analytics. “Deployment” includes wiring, safety interlocks, and an operator UX that doesn’t crumble under stress.

Example: a reject station might require a deterministic OK/NOK within a 120 ms window so the PLC can actuate a gate at the correct encoder count. An API call with variable latency isn’t “modern”—it’s unreliable.

Industrial reliability requirements: design for uptime, not demos

Reliability is not something you bolt on after the model works. In industrial machine vision, reliability targets drive architecture: hardware choices, network layout, fallback paths, monitoring, and maintenance playbooks.

We’ve found that teams who specify reliability upfront ship faster, because they stop arguing about symptoms and start engineering to measurable constraints: MTBF, MTTR, and deterministic performance.

Define reliability targets before you pick a model

Start with the business need, then translate it into station-level targets. A practical template looks like this:

- Uptime target: 99.5–99.9% for non-critical inspection; higher for bottleneck stations. The right number depends on whether the station can be bypassed.

- MTTR: minutes, not hours. What can an operator fix, and what needs maintenance?

- False reject/false accept limits: tied to cost of scrap vs escape. Make it explicit.

- Operational envelope: line speed range, SKU count, changeover frequency, cleaning cycles, shift patterns.

- Failure budget: what can fail gracefully (e.g., route to manual review) vs must fail-safe (e.g., stop line if a safety-critical defect can’t be verified).

This turns the question of how to develop reliable machine vision systems for factories from guesswork into a spec. It also forces an early decision: do we optimize for throughput, for escape risk, or for labor reduction? You can’t maximize all three at once.
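It helps to translate an uptime percentage into a downtime budget the plant can reason about. The sketch below assumes a station scheduled 24/7 for a full year and a hypothetical 15-minute MTTR; the function names are ours.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: 24/7 operation, no planned shutdowns


def allowed_downtime_hours(uptime_pct: float,
                           scheduled_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of unplanned downtime permitted by an uptime target."""
    return scheduled_hours * (1.0 - uptime_pct / 100.0)


def max_incidents(uptime_pct: float, mttr_minutes: float,
                  scheduled_hours: float = HOURS_PER_YEAR) -> int:
    """How many incidents fit in the budget if each takes mttr_minutes to fix."""
    budget_minutes = allowed_downtime_hours(uptime_pct, scheduled_hours) * 60
    return int(budget_minutes // mttr_minutes)


for target in (99.5, 99.9):
    hours = allowed_downtime_hours(target)
    incidents = max_incidents(target, mttr_minutes=15)
    print(f"{target}% uptime: {hours:.2f} h/year, "
          f"about {incidents} incidents at 15 min each")
```

Under these assumptions, 99.5% allows roughly 43.8 hours of downtime per year and 99.9% allows roughly 8.76 hours, which is why MTTR matters as much as the headline uptime figure.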

Redundancy and graceful degradation patterns

Factories run on predictable behavior. When the vision system is uncertain, it should respond with a predictable, pre-agreed action—not a crash and not a mystery. Common patterns include:

- Low-confidence fallback: re-scan, slow line briefly, or route part to manual review.

- Dual-channel robustness: dual cameras or dual lighting for high-risk stations.

- Health checks: heartbeat signals to PLC, watchdog timers, and safe-state auto-restart.

A simple story that comes up often: adding a second lighting channel (different angle or polarization) reduced false rejects when one light aged and its intensity drifted. The model didn’t change; the system did.
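The low-confidence fallback can be written down as a small, pre-agreed routing rule. This is a minimal sketch: the score bands, the rescan limit, and the names `Action` and `route_part` are hypothetical and would be set with QA for a real station.

```python
from enum import Enum


class Action(Enum):
    ACCEPT = "accept"
    REJECT = "reject"
    RESCAN = "rescan"
    MANUAL_REVIEW = "manual_review"


def route_part(defect_score: float,
               rescans_used: int,
               accept_below: float = 0.2,   # assumed band edges
               reject_above: float = 0.8,
               max_rescans: int = 1) -> Action:
    """Map a defect score to a predictable action, including the uncertain band."""
    if defect_score <= accept_below:
        return Action.ACCEPT
    if defect_score >= reject_above:
        return Action.REJECT
    # Uncertain band: try another capture first, then hand off to a person.
    if rescans_used < max_rescans:
        return Action.RESCAN
    return Action.MANUAL_REVIEW


print(route_part(0.05, rescans_used=0))
print(route_part(0.50, rescans_used=0))
print(route_part(0.50, rescans_used=1))
```

The point of the design is that every score maps to exactly one action, so operators and the PLC never see an undefined outcome.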

Deterministic latency and cycle-time budgeting

In manufacturing, average latency is trivia. What matters is p95/p99 latency, because the line doesn’t care that you were fast most of the time; it cares about the time you missed the window.

Budget latency end-to-end. A useful breakdown looks like:

- Exposure + strobe timing

- Image transfer (GigE/USB3) and buffering

- Preprocessing (ROI crop, normalization)

- Real-time inference (CPU/GPU/accelerator)

- Decision logic (thresholds, rules, recheck)

- PLC handshake and actuation timing

Example: a conveyor inspection station with a 120 ms decision window might budget 10 ms exposure, 15 ms transfer, 20 ms preprocessing, 40 ms inference, 10 ms decision, and 25 ms PLC + IO margin. If you can’t make the math work, you don’t “optimize Python”—you revisit camera placement, triggering, ROI size, or whether edge AI is needed at all.
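The budget above is easy to check mechanically, and the same script can show why p99 matters more than the mean. The stage values come from the example; the timing samples are invented for illustration, and `percentile` uses a simple nearest-rank definition.

```python
import math

# Stage budget from the example above, in milliseconds.
BUDGET_MS = {
    "exposure": 10,
    "transfer": 15,
    "preprocessing": 20,
    "inference": 40,
    "decision": 10,
    "plc_io_margin": 25,
}
WINDOW_MS = 120


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_window(samples_ms, window_ms=WINDOW_MS, pct=99):
    """True if the chosen percentile of measured latency fits the window."""
    return percentile(samples_ms, pct) <= window_ms


# Hypothetical end-to-end measurements: 98 fast cycles and 2 slow ones.
samples = [80.0] * 98 + [150.0] * 2
mean_ms = sum(samples) / len(samples)

print("budget total:", sum(BUDGET_MS.values()), "ms")
print("mean:", mean_ms, "ms, p99:", percentile(samples, 99), "ms")
print("meets window at p99:", meets_window(samples))
```

In this made-up sample the mean sits comfortably inside the window while p99 misses it, which is exactly the failure an average hides.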

Edge compute vs server compute is a determinism decision. Servers are great for analytics; edge compute is often essential for meeting cycle-time constraints with predictable latency. Sometimes a GPU is overkill; sometimes it’s the only honest way to hit p99.

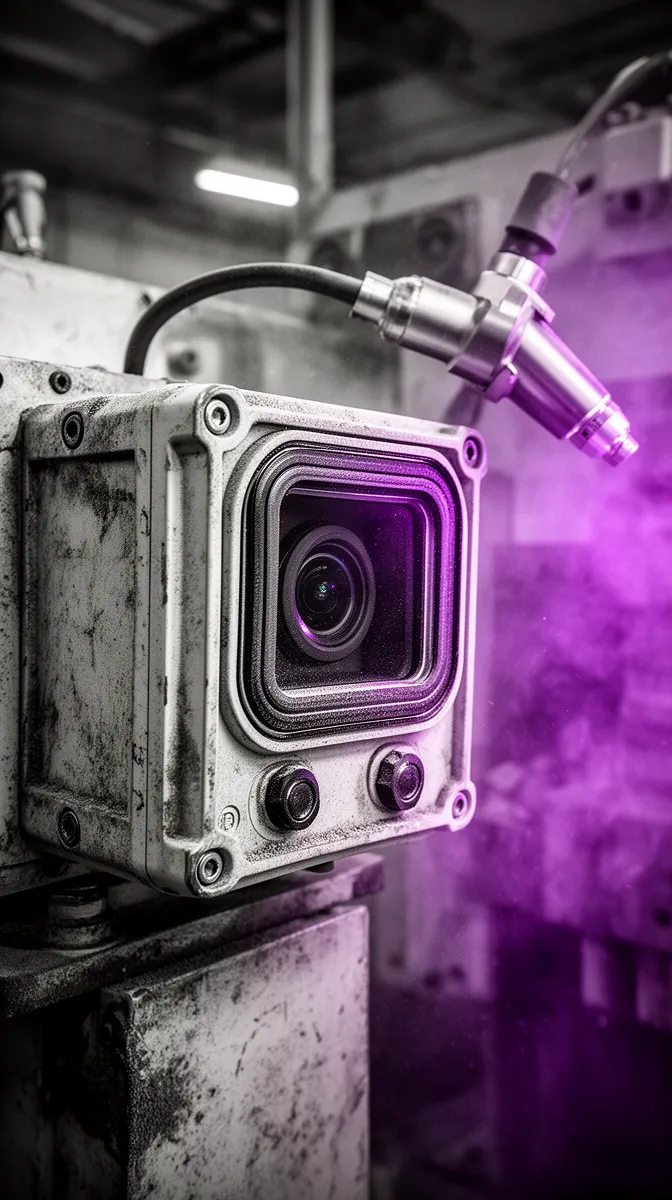

Environmental robustness: optics, lighting, enclosures, and the ‘physics layer’

Most machine vision failures aren’t ML failures; they’re physics failures. If the photons are unstable, the model becomes a very expensive random number generator.

That’s why environmentally robust machine vision solutions for harsh factories start with optics, lighting control, and mechanical design. The model is downstream.

Lighting is a control system, not a checkbox

Lighting has the same status as a sensor in industrial control: you control it, monitor it, and replace it predictably. For reliable defect detection, you usually want illumination to be more stable than the product.

In practice, that means:

- Strobes to overpower ambient light and freeze motion (minimizing motion blur)

- Diffusers to reduce harsh shadows

- Polarization (often cross-polarized) for shiny or specular surfaces

- Synchronization with motion: encoder/trigger so capture happens at the right time

Concrete example: specular metal parts often produce glare that looks like a scratch. Adding polarization can eliminate glare-driven “defects,” stabilizing inspection without touching the model. If you’re serious about industrial-grade computer vision for quality inspection, you’ll spend real time here.
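For the strobe and synchronization items in the list above, a quick calculation shows how exposure (or strobe pulse) time turns into motion blur. The belt speed, pixel scale, and pulse widths below are assumed numbers, not measurements from any station.

```python
def motion_blur_px(speed_mm_s: float, exposure_us: float, mm_per_px: float) -> float:
    """Blur in pixels: distance travelled during the exposure, divided by pixel scale."""
    return speed_mm_s * (exposure_us / 1_000_000) / mm_per_px


def max_exposure_us(speed_mm_s: float, mm_per_px: float,
                    max_blur_px: float = 1.0) -> float:
    """Longest exposure (in microseconds) that keeps blur within max_blur_px."""
    return max_blur_px * mm_per_px / speed_mm_s * 1_000_000


# Assumed setup: 500 mm/s conveyor, 0.1 mm per pixel at the part surface.
SPEED_MM_S = 500.0
MM_PER_PX = 0.1

print("2 ms exposure blur (px):", motion_blur_px(SPEED_MM_S, 2000, MM_PER_PX))
print("100 us strobe blur (px):", motion_blur_px(SPEED_MM_S, 100, MM_PER_PX))
print("max exposure for 1 px blur (us):", max_exposure_us(SPEED_MM_S, MM_PER_PX))
```

Under these assumptions a 2 ms exposure smears a part across about 10 pixels, while a 100 µs strobe keeps it to about half a pixel. That gap is usually easier to close with lighting than with a model.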

Two external references worth bookmarking: Edmund Optics’ overview of illumination fundamentals (illumination in machine vision) and Cognex’s broader machine vision resource library (Cognex resources).

Camera, lens, and mounting choices that hold calibration

Industrial cameras are easy to buy and hard to keep stable. The trick is to treat camera placement like a mechanical fixture: rigid, repeatable, and maintainable.

Key tradeoffs show up immediately:

- Lens selection: depth of field vs resolution; distortion vs field of view

- Mounting: rigid mounts, vibration damping, and alignment features that survive maintenance

- Protection: protective windows, air knives, and access for cleaning

A weekly maintenance checklist that actually works in real factories is boring—and that’s the point:

- Inspect lens/window for residue, scratches, or fogging

- Verify mount integrity (torque marks, looseness, vibration damage)

- Check lighting intensity and uniformity (simple reference target)

- Confirm focus and alignment against a “golden part”

Notice what’s missing: “retrain the model.” Environmental robustness should reduce the frequency of model interventions.

IP-rated enclosures and thermal management for edge compute

Enclosures are where reliability gets won or lost. Dust and oil ingress cause intermittent failures that look like software bugs. Heat causes slow degradation and sudden restarts. Both show up as “random downtime,” which is the worst kind.

Design choices include:

- IP-rated enclosures aligned to the area (dusty, washdown, outdoor). If you need a refresher on IP codes, start with this IEC 60529 overview (IP rating basics).

- Fanless compute where possible; filtered air and positive pressure where needed

- Condensation control: gaskets, desiccants, heaters in some environments

- Cable management: strain relief, shielded cabling, proper grounding to reduce EMI

One real pattern: moving compute into a sealed enclosure with proper thermal design reduced unplanned reboots that were caused by dust ingress and clogged fans. The model wasn’t the culprit; the airflow was.

Integration with PLC, SCADA, and MES: where most projects break

Most machine vision development projects don’t fail because the model can’t see defects. They fail because the system can’t act reliably in an OT environment. That’s why best practices for integrating industrial machine vision with a PLC are, effectively, best practices for industrial delivery.

Integration is also where organizational complexity shows up: electrical, automation, IT security, QA, and operations all have legitimate constraints. Good system design reduces the number of times they have to negotiate at 3 a.m.

The PLC handshake: deterministic I/O beats ‘send an API call’

For time-critical stations, treat vision like a deterministic device with a state machine. The PLC should know exactly what state the vision system is in, and the vision system should know what the PLC expects.

A simple textual state machine for OK/NOK + reject gate looks like:

- READY: vision asserts “ready” signal; PLC asserts “part present” when sensor triggers.

- CAPTURE: PLC triggers camera/strobe; vision captures image and acknowledges.

- DECIDE: vision runs inference + logic; sets OK or NOK bit within the defined window.

- ACTUATE: PLC actuates reject gate at the correct encoder position.

- CONFIRM: PLC confirms actuation; vision logs result with timestamp/part ID.

- FAULT/RETRY: if no decision in time, go to safe behavior (predefined).

Discrete signals and industrial IO are boring for a reason: they are deterministic. Use higher-level APIs for reporting, images, diagnostics, and MES integration—not for the core decision loop.
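The state list above maps naturally onto a transition table. The sketch below models only the logic; it does not talk to a real PLC, and the event names (`part_present`, `decision_set`, and so on) are illustrative labels rather than signals from any specific controller.

```python
from enum import Enum, auto


class State(Enum):
    READY = auto()
    CAPTURE = auto()
    DECIDE = auto()
    ACTUATE = auto()
    CONFIRM = auto()
    FAULT = auto()


# Allowed transitions: (current state, event) -> next state.
TRANSITIONS = {
    (State.READY, "part_present"): State.CAPTURE,
    (State.CAPTURE, "image_acquired"): State.DECIDE,
    (State.DECIDE, "decision_set"): State.ACTUATE,
    (State.DECIDE, "timeout"): State.FAULT,
    (State.ACTUATE, "gate_done"): State.CONFIRM,
    (State.CONFIRM, "logged"): State.READY,
    (State.FAULT, "reset"): State.READY,
}


def step(state: State, event: str) -> State:
    """Advance one event; anything unexpected lands in FAULT (the safe behavior)."""
    return TRANSITIONS.get((state, event), State.FAULT)


def run(events, start: State = State.READY) -> State:
    """Replay a sequence of events and return the final state."""
    state = start
    for event in events:
        state = step(state, event)
    return state


normal_cycle = ["part_present", "image_acquired", "decision_set", "gate_done", "logged"]
late_decision = ["part_present", "image_acquired", "timeout"]

print(run(normal_cycle))
print(run(late_decision))
```

Defaulting unknown events to FAULT is a deliberate choice in this sketch: an out-of-sequence signal is treated as a problem to surface, not something to ignore.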

Common industrial protocols and when to use them

Protocol choice should follow site standards and security constraints, not developer preference. A practical way to think about it is: control vs reporting vs legacy.

- OPC UA for structured data and interoperability across vendors (overview from the OPC Foundation: OPC UA).

- Profinet or EtherNet/IP when you’re inside real-time control environments and need deterministic behavior aligned to PLC ecosystems (Profinet overview: PROFINET technology).

- Modbus for legacy integration and “good enough” connectivity where determinism requirements are modest (Modbus specs: Modbus protocol).

Decision guide: if it’s a time-critical handshake, stay close to PLC-native IO/protocols. If it’s traceability and reporting, OPC UA is often a clean fit. If it’s a brownfield plant with older gear, plan for Modbus and serial realities.

OT/IT convergence without governance debt

OT/IT convergence is real, but so is governance debt: every new box on the network is a security and maintenance commitment. The goal is to design the data flow so you get traceability without turning the vision station into an unmanaged IT island.

Patterns that work:

- Network segmentation and edge gateways between OT networks and IT networks

- Audit logs: who changed thresholds, lighting profiles, or model versions

- Traceability integration: part IDs, timestamps, station IDs, model/config versions

- Bandwidth discipline: store defect crops + metadata instead of full video unless truly needed

This is also where workflow matters. The inspection decision often triggers a downstream process: rework ticket, hold release, supplier claim, or a maintenance request. If you want those decisions to reliably produce business value, you need the workflow glue—this is where workflow and process automation around inspection decisions becomes part of the system, not an afterthought.

Industrial machine vision applications: inspection, robotics, and beyond

Industrial applications tend to cluster around three themes: quality inspection, vision-guided robotics, and condition monitoring. The most successful projects share one trait: they connect vision outputs to an action that someone already wants to take.

Automated quality inspection that’s auditable

Automated inspection is where machine vision system development for manufacturing most often starts, because the ROI is legible: less scrap, fewer escapes, higher throughput. But the key word is auditable.

To make inspection auditable, align with QA on a defect taxonomy and acceptance criteria. Then record the context needed for traceability:

- Part ID / batch ID (where available)

- Timestamp, line, station, and recipe/SKU

- Decision and confidence + key features (where appropriate)

- Model version and configuration profile
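Those fields can be captured as one immutable record per inspected part. The field names, example values, and JSON-lines output below are assumptions for illustration; a real schema should follow whatever the plant’s MES and QA systems already expect.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class InspectionRecord:
    timestamp_utc: str            # ISO 8601 timestamp of the decision
    line: str
    station: str
    recipe: str                   # recipe/SKU active at capture time
    decision: str                 # "OK" or "NOK"
    confidence: float
    model_version: str
    config_profile: str
    part_id: Optional[str] = None     # where available
    batch_id: Optional[str] = None    # where available
    reason_code: Optional[str] = None


def to_log_line(record: InspectionRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example values.
record = InspectionRecord(
    timestamp_utc="2024-01-15T02:13:45Z",
    line="L3",
    station="ST-07",
    recipe="SKU-1234",
    decision="NOK",
    confidence=0.93,
    model_version="1.4.2",
    config_profile="lighting-profile-B",
    batch_id="B-5567",
    reason_code="SCRATCH",
)
print(to_log_line(record))
```

Keeping the model version and configuration profile on every record is what lets QA answer "why was this part rejected?" months later.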

Mini case pattern: a plant reduced scrap by separating cosmetic vs functional defects, routing them differently. Cosmetic defects went to rework; functional defects triggered containment. Same camera, same station—different outcomes because the decision logic matched real process needs.

Vision-guided robotics: accuracy is useless without synchronization

Vision-guided robotics is seductive because the demos look magical. In production, it’s mostly about calibration and timing. If your coordinate frames drift, the robot doesn’t “miss by a bit”—it collides, drops parts, or triggers safety stops.

Core requirements include:

- Calibration between camera frame and robot frame (repeatable procedures)

- Latency compensation for moving conveyors (encoder-triggered capture)

- Safety interlocks, guarding, and predictable failure behavior

Example: a pick-and-place cell on a moving conveyor captures at a precise encoder count, transforms coordinates into robot space, and uses the known capture-to-pick latency and belt speed to project the part position forward. If you ignore determinism here, you get “random” misses that only happen at high speed.
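The forward projection in that example is simple arithmetic once the encoder is trusted. This sketch assumes the belt moves along the robot’s +x axis, that encoder counts convert linearly to millimeters, and that the robot’s remaining motion latency is known; all numbers and function names are hypothetical.

```python
def belt_travel_mm(encoder_at_capture: int, encoder_now: int,
                   mm_per_count: float) -> float:
    """Distance the belt has moved since the image was captured."""
    return (encoder_now - encoder_at_capture) * mm_per_count


def projected_pick_x_mm(x_at_capture_mm: float,
                        encoder_at_capture: int,
                        encoder_now: int,
                        mm_per_count: float,
                        belt_speed_mm_s: float,
                        robot_latency_s: float) -> float:
    """Part x-position (robot frame) at the moment the gripper will arrive."""
    travelled = belt_travel_mm(encoder_at_capture, encoder_now, mm_per_count)
    lookahead = belt_speed_mm_s * robot_latency_s  # travel during robot motion
    return x_at_capture_mm + travelled + lookahead


# Assumed values: part seen at x = 100 mm, 500 counts ago, 0.05 mm per count,
# belt at 400 mm/s, robot needs 50 ms to reach the pick point.
pick_x = projected_pick_x_mm(100.0, 1000, 1500, 0.05, 400.0, 0.05)
print(f"pick at x = {pick_x:.1f} mm")
```

Here the part has already travelled 25 mm since capture and will travel another 20 mm before the gripper arrives, so a robot aiming at the captured position would miss by 45 mm.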

Predictive maintenance signals from vision (when it makes sense)

Vision can support predictive maintenance, but it should be tied to an actionable workflow. The strongest use-cases are visual drifts that correlate with downtime:

- Belt tracking and misalignment

- Clogging and buildup

- Wear patterns on tooling or fixtures

- Leak detection in contained areas

Example: a camera detects increasing residue buildup on a chute, triggers an alarm in SCADA systems, and schedules a planned cleaning window before the clog becomes a line stop. The value isn’t in “predicting”; it’s in making the maintenance action timely and routine.

Testing and validation: FAT/SAT discipline for vision systems

Testing is where machine vision development becomes a real industrial asset. Without FAT/SAT discipline, you don’t have a system—you have a perpetual pilot.

The key shift is moving from dataset metrics to acceptance tests that a plant manager can sign, and that a maintenance team can keep passing six months later.

From dataset metrics to acceptance tests

Dataset metrics help you iterate. Acceptance tests help you deploy. For industrial-grade computer vision for quality inspection, you need both, but you should never confuse them.

An acceptance test checklist (non-technical wording) might include:

- The station runs at production speed for X hours without unscheduled stops.

- Good parts are accepted at least Y% of the time (false rejects ≤ Z%).

- Known defect panels are rejected at least A% of the time (missed defects ≤ B%).

- System produces a clear log for each rejected part (image + reason code).

- Operator can re-run a part safely and get a consistent result.

- Maintenance can clean the window and restore performance using a documented procedure.

Document test conditions: lighting settings, lens, distance, line speed, and temperature ranges. This is the baseline you’ll compare against after changes.
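The rate-based items in the checklist reduce to a few counts from a test run. In the sketch below, the limits stand in for the Z and B placeholders above, and the run counts are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class RunCounts:
    good_total: int        # known-good parts presented
    good_rejected: int     # good parts the station rejected
    defect_total: int      # known-defective parts presented
    defect_accepted: int   # defective parts the station accepted


def false_reject_rate(counts: RunCounts) -> float:
    return counts.good_rejected / counts.good_total


def missed_defect_rate(counts: RunCounts) -> float:
    return counts.defect_accepted / counts.defect_total


def passes_acceptance(counts: RunCounts,
                      max_false_reject: float,
                      max_missed_defect: float) -> bool:
    """True only if both rates are within their agreed limits."""
    return (false_reject_rate(counts) <= max_false_reject
            and missed_defect_rate(counts) <= max_missed_defect)


# Hypothetical SAT run: 5000 good parts, 200 seeded defects.
run_counts = RunCounts(good_total=5000, good_rejected=20,
                       defect_total=200, defect_accepted=1)

print("false reject rate:", false_reject_rate(run_counts))
print("missed defect rate:", missed_defect_rate(run_counts))
print("passes (0.5% / 1.0% limits):", passes_acceptance(run_counts, 0.005, 0.01))
```

A small defect sample makes the missed-defect rate a noisy estimate, so the number of defect panels should be agreed alongside the limits themselves.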

Factory Acceptance Test (FAT) vs Site Acceptance Test (SAT) for vision

FAT and SAT exist because factories are noisy and expensive places to debug. The best projects split validation into two phases:

- FAT: controlled environment testing for integration logic, PLC handshake, baseline accuracy, and deterministic performance under known conditions.

- SAT: on-line validation for environmental robustness, cycle time verification, and operator workflow fit.

A realistic timeline: one week FAT, one week SAT, then a monitored ramp-up where you watch rejects by shift and confirm the station behaves under real changeovers. This is also where change control matters: lighting swaps, camera moves, new SKUs, firmware updates—each should have a defined re-validation path.

Operational monitoring after go-live

Once the station is live, reliability becomes an operations problem. The goal is to spot drift before it becomes downtime.

Monitoring that helps on the floor includes:

- Reject rate by shift and by SKU (catch lighting drift and changeover issues)

- Confidence distribution shifts (early indicator of optics/lighting changes)

- Camera and compute health: temperature, dropped frames, storage, heartbeat

- Top defect types and “unknown” bucket frequency

Pair monitoring with a maintenance playbook: cleaning schedule, recalibration triggers, spare parts list, and a clear path for updating model + configuration versions. If you care about MTBF and uptime, this is not optional.
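Reject rate by shift is the easiest of these signals to automate. The following is a minimal sketch with made-up data: the baseline, tolerance, and record format are assumptions, and a real deployment would read from the station’s inspection log.

```python
from collections import defaultdict


def reject_rate_by_shift(records):
    """records: iterable of (shift_name, rejected) pairs -> {shift: reject rate}."""
    totals = defaultdict(int)
    rejects = defaultdict(int)
    for shift, rejected in records:
        totals[shift] += 1
        rejects[shift] += int(rejected)
    return {shift: rejects[shift] / totals[shift] for shift in totals}


def drifting_shifts(rates, baseline: float, tolerance: float):
    """Shifts whose reject rate differs from the baseline by more than tolerance."""
    return sorted(shift for shift, rate in rates.items()
                  if abs(rate - baseline) > tolerance)


# Hypothetical day: 100 parts per shift, 2 rejects on days, 9 on nights.
records = ([("day", False)] * 98 + [("day", True)] * 2
           + [("night", False)] * 91 + [("night", True)] * 9)

rates = reject_rate_by_shift(records)
flagged = drifting_shifts(rates, baseline=0.02, tolerance=0.03)
print(rates)
print("investigate:", flagged)
```

A flagged shift is a prompt to check lighting, the protective window, and recent changeovers before anyone reaches for retraining.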

How to choose an industrial machine vision development company

Buying a vision system is not like buying a SaaS subscription. You’re installing a cyber-physical system that must behave deterministically in the presence of dust, vibration, and human stress. That’s why selecting a machine vision development company for industrial automation should feel closer to selecting an automation integrator than selecting a model vendor.

The ‘industrial-grade’ vendor checklist

If you’re evaluating industrial machine vision development services, ask questions that force station-level thinking. Here’s a buyer’s checklist you can use in an RFP or vendor call:

- What are the cycle-time budget and the p99 latency target for this station?

- How will you measure deterministic performance (not just average latency)?

- What uptime/MTTR targets do you propose, and what design choices support them?

- What is your PLC handshake/state machine design? Can you describe it?

- Which protocols will you use (OPC UA/Profinet/Modbus/IO), and why?

- What is the safe default behavior if the system can’t decide in time?

- How are lighting control and drift management handled?

- What enclosure/IP rating and thermal plan do you recommend for this area?

- What is the plan for SKU changeovers and recipe management?

- How will you handle borderline parts (recheck, manual review, hold)?

- What does the FAT/SAT plan look like, and what are the acceptance criteria?

- What monitoring and maintenance support do you provide post go-live?

A vendor who can answer these crisply is likely building industrial systems, not demos.

Red flags that signal lab-grade computer vision

Red flags usually sound like “innovation” and behave like downtime. Watch for:

- Only talks about model accuracy; ignores false reject costs and missed defect risk.

- No plan for lighting control, optics, mounting, or cleaning access.

- Integration plan is “we’ll call your API” for a time-critical station.

- No monitoring, no versioning, no maintenance playbook.

What happens next when these show up? The line starts “randomly” rejecting parts, operators start bypassing the station, QA starts re-inspecting manually, and the project quietly becomes shelfware. Deterministic performance and integration discipline are how you avoid that ending.

Where Buzzi.ai’s approach is different

At Buzzi.ai, we treat machine vision development as industrial systems engineering. That means we don’t start with the model—we start with the station constraints: cycle time, integration points, environment, and what failure looks like on the line.

Practically, our approach looks like:

- Deployment-first discovery: line survey, constraints mapping, and risk assessment before committing to architecture.

- Co-design: hardware, optics, software, and OT integration engineered together.

- Validation-driven delivery: FAT/SAT, then monitored rollout with change control.

- Outcome metrics: scrap reduction, OEE impact, traceability, and operator trust.

If you’re building for 24/7 manufacturing, that mindset matters more than any single architecture choice.

Conclusion

Machine vision on the factory floor is the opposite of a demo environment. The line is fast, the lighting drifts, the air is dirty, and integration constraints are real. That’s why machine vision development needs to be treated as industrial systems engineering: models are only one component of a larger reliability story.

Build the system around explicit targets—uptime, MTBF, and p99 latency—then engineer optics, lighting, enclosures, and IO handshakes to meet them. Make integration with PLC/SCADA/MES the centerpiece, not a “phase two,” and validate with FAT/SAT so the station is maintainable, not magical.

If you want a practical plan tied to your line constraints, cycle time, and integration needs, the next step is a discovery workshop. Start with AI Discovery for machine vision feasibility and station design, and we’ll map station-level requirements, risks, and an implementation path that’s built for uptime.

FAQ

What is the difference between machine vision development and computer vision?

Computer vision is primarily about extracting meaning from images—often optimized around model accuracy and benchmark metrics. Machine vision development is about delivering a production system that improves manufacturing outcomes under real constraints.

That includes deterministic latency, environmental robustness, and integration with PLCs and downstream automation. In other words: computer vision ships a model; machine vision ships a station that operators trust.

Why do machine vision projects fail when moved from lab to factory floor?

Most failures come from physics and operations, not from ML theory. Lighting drift, lens contamination, vibration, and product variation create constant distribution shifts that lab datasets rarely capture.

The other big culprit is integration: if the PLC handshake isn’t deterministic, the line will miss timing windows, reject good parts, or stop unexpectedly. When the system causes downtime, adoption dies fast—regardless of “accuracy.”

What uptime and MTBF targets should an industrial machine vision system meet?

Targets depend on whether the station is a bottleneck and whether it can be bypassed safely. For many inspection stations, teams aim for 99.5–99.9% uptime with an MTTR measured in minutes, not hours.

More important than the number is the engineering plan behind it: spare parts, watchdog/heartbeat design, cleaning access, and a defined safe state. If a vendor can’t discuss MTBF and uptime concretely, they’re likely selling a demo.

How do you budget cycle time and guarantee deterministic latency for inspection?

Start with the station window (for example, 120 ms between sensor trigger and reject gate). Then budget exposure, transfer, preprocessing, inference, decision logic, and PLC handshake with margin.

Focus on p95/p99 latency, not averages, and test under realistic line speeds and peak load. Deterministic performance often requires edge compute and a PLC-friendly state machine rather than cloud calls.

Which industrial protocols are best for PLC integration (OPC UA, Modbus, Profinet)?

Use PLC-native IO or real-time fieldbus protocols for time-critical decisions, and reserve OPC UA for structured reporting and interoperability. Profinet is common in Siemens ecosystems; EtherNet/IP is common in Rockwell environments; Modbus often appears in legacy plants.

The best protocol is usually the one that matches existing site standards, IT security posture, and maintenance capability. A good integrator will propose a split: deterministic control path + richer diagnostic/data path.

How should machine vision integrate with MES and SCADA for traceability?

Think in layers: the PLC needs immediate OK/NOK signals, while MES/SCADA systems need logs, images, defect codes, and versioned configuration for audits. Good designs store metadata and defect crops with timestamps, station IDs, and recipe/SKU context.

If you’re unsure what data to keep and where to keep it, start with a station-focused feasibility step like Buzzi.ai’s AI Discovery so traceability requirements are captured before you build. That prevents expensive rework when QA asks for audit trails after go-live.

What enclosure and IP rating do you need for dusty or wet factory areas?

It depends on exposure: dust, oil mist, washdown, and chemical spray each change requirements. IP-rated enclosures help prevent ingress-related downtime, but thermal management matters just as much—heat is a common silent killer.

Choose an enclosure aligned to the area’s cleaning process, add strain relief and proper grounding, and design for maintenance access. A sealed box that can’t be cleaned or serviced quickly becomes its own reliability problem.

How do you design lighting for reliable defect detection on reflective parts?

Reflective parts often fail inspection due to glare and specular highlights that look like scratches or dents. The fix is usually optical: cross-polarization, diffused dome lighting, or changing the lighting angle to control reflections.

Synchronizing strobes with encoder triggers helps freeze motion, which minimizes blur, and overpowers ambient light changes. When lighting is stable, the model becomes simpler and more reliable.

What does a good FAT/SAT plan look like for machine vision systems?

A good plan separates controlled validation from on-line validation. FAT proves integration logic, baseline performance, and deterministic timing in a controlled setup; SAT proves environmental robustness and operator workflow fit on the actual line.

Both phases should have written acceptance criteria, golden parts/defect panels, and documented test conditions. Change control should specify what requires re-validation (lighting swaps, camera moves, new SKUs, firmware).

How do you handle SKU changeovers and product variation without constant re-training?

First, treat changeovers like a first-class requirement: recipes, lighting profiles, and station parameters should be versioned and selectable, not hidden in code. Second, use robust imaging (consistent lighting and optics) so variation is reduced before the model sees it.

For borderline cases, design routing rules—re-scan, manual review, or slow-line modes—so production doesn’t stop every time the distribution shifts. Retraining should be a tool, not the only lever.