Choose an Industrial AI Development Partner Built for Harsh Sites

Most industrial AI dies on the factory floor. Learn how an industrial AI development company can engineer environment‑hardened, rugged edge AI that survives.

Most industrial AI that looks brilliant in a slide deck dies the first month it meets dust, vibration, and heat. Not because the algorithms are bad—but because the systems were never engineered for the world they’re supposed to live in.

If you’ve watched a beautiful predictive maintenance demo crumble once it hits the factory floor, you’re not alone. Studies on Industry 4.0 show that while digital pilots are everywhere, only a small fraction scale beyond a few lines or plants (McKinsey). The pattern is consistent: the industrial AI models are fine; it’s the environment that wins.

In the lab, industrial AI behaves like enterprise software—stable power, stable network, stable climate. On the plant floor, edge devices overheat, sensors drift, cabinets fill with dust, and models quietly degrade. This is why so many enterprise projects stumble when they become factory floor AI.

This article is a practical blueprint for how to develop industrial AI that survives harsh environments. We’ll walk through why enterprise AI fails in industrial environments, what it really takes to ruggedize systems, and how to choose an industrial AI development company that actually understands dust, vibration, and heat. Along the way, we’ll share the exact questions to ask vendors and how we at Buzzi.ai approach environment-hardened deployments.

Why Enterprise AI Fails the Moment It Meets Dust and Vibration

The Lab Works. The Plant Floor Doesn’t.

In the lab, the setup looks perfect. A clean test rig, shielded cables, a server on a stable UPS, climate control at 21°C, and a fat fiber link to the cloud. Under those conditions, most industrial AI demos perform beautifully.

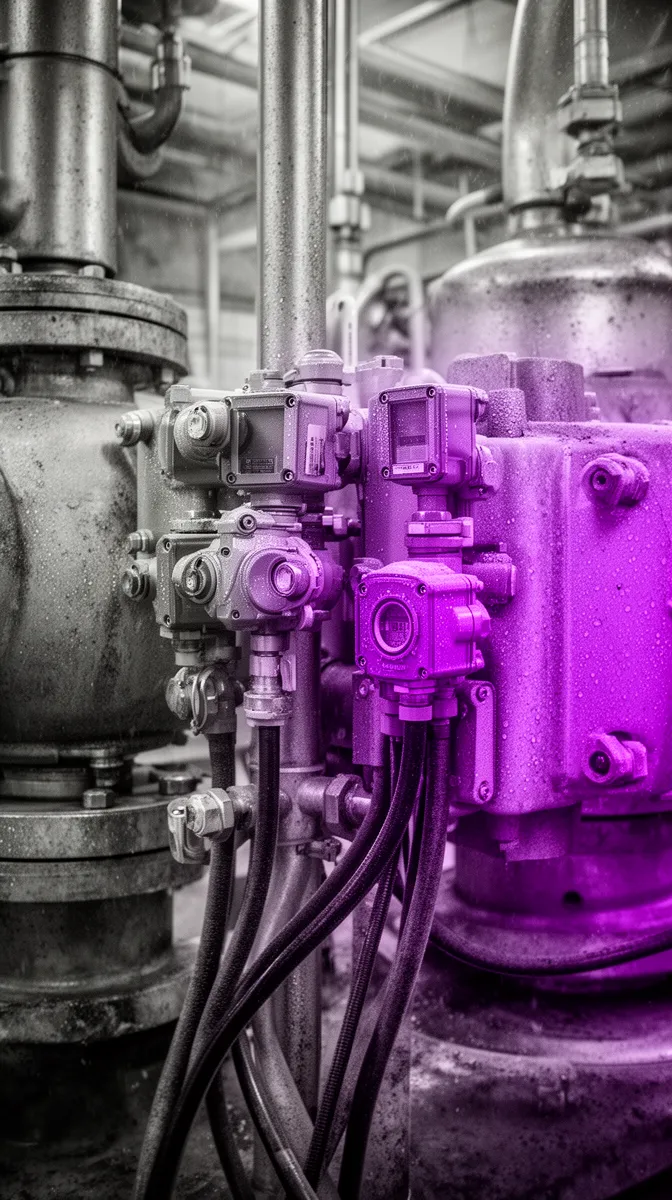

Now move the same system to a harsh environment: a compressor room, a steel mill’s hot strip, or a pump skid on an offshore platform. Suddenly you’re dealing with dust and oil mist in the air, constant low-frequency vibration, ambient temperatures pushing 45°C, intermittent Wi‑Fi, and electricians pulling power from whatever circuit is closest. It’s a different universe entirely.

We’ve seen predictive maintenance pilots that nailed shaft misalignment and bearing faults in a test stand, then miss obvious anomalies once installed next to a vibrating pump in a hot, dusty corner. Sensors clog, mounts loosen, accelerometers pick up structural noise instead of machine signatures, and condition monitoring models start to drown in garbage data. On paper the AI is “the same”—but functionally, it’s a different system.

The key point: most failures aren’t algorithmic. They’re environmental and systemic. If your vendor treats edge AI as “just another server” in a climate‑controlled rack, you’ll discover why enterprise AI fails in industrial environments the hard way.

Typical Failure Modes of Non-Hardened AI Systems

When a system designed for an office meets a harsh environment, it tends to fail in predictable ways. Understanding these failure modes is the first step to designing rugged industrial AI solutions for dust and vibration.

Consider a few common patterns across manufacturing, energy, and utilities:

- Overheating CPUs and GPUs: A fan‑cooled gateway mounted in a sealed cabinet hits thermal limits in the afternoon heat. It reboots randomly, dropping real-time inference and leaving blind spots in your predictive maintenance coverage.

- SSD and connector failures from vibration: A box bolted directly to a vibrating frame sees constant micro‑shocks. Over months, SSDs develop bad sectors and connectors loosen, creating intermittent data gaps that quietly destroy your condition monitoring data quality.

- Dust and moisture ingress: Non‑sealed enclosures inhale cement dust or corrosive vapors. Fans clog, boards corrode, and cameras fog, leading to blurry images and frequent field replacements that OT teams quickly grow to hate.

- Electrical noise and dirty power: In a harsh environment with large motors and drives, EMI spikes ride on power and signal lines. Sensors glitch, analog readings jump, and your models chase phantom anomalies because electrical noise isn’t filtered or accounted for.

- Cloud dependency in low‑connectivity sites: A system that relies on constant cloud connectivity for real-time inference fails outright in a mine or remote substation. Latency-sensitive workloads like shutdown advisories or trip prevention simply can’t tolerate long round‑trips or offline windows.

- Unmanaged model drift from dirty data: Sensors drift out of calibration, maintenance crews change bearings or operating modes, and cleaning routines alter signal patterns. Without explicit strategies to detect data drift, models silently degrade, triggering more false alarms and eroding operator trust.

Operationally, these show up as nuisance alarms, missed failures, and dashboards OT quietly stops looking at. The root cause is nearly always the same: nobody captured environmental requirements early, and the supplier treated this as IT, not industrial engineering.

Know Your Environment: The Industrial Conditions AI Must Survive

Five Environmental Forces That Quietly Kill AI Deployments

To build industrial AI for harsh environments, you have to start with physics, not Python. Real sites are defined by five environmental forces that quietly kill deployments over months: dust and particulates, vibration and shock, temperature extremes, moisture and condensation, and electrical noise on power and signal lines.

Dust and particulates are everywhere in cement, mining, metals, and many process plants. They block fans, coat lenses, clog optical sensors, and act as an abrasive layer inside connectors. Over time, your once‑clear factory floor AI vision system becomes a blurry, unreliable observer.

Vibration and shock come from pumps, compressors, presses, and rotating equipment. They don’t just affect vibration sensors; they also fatigue solder joints, loosen terminals, and stress SSDs. A device that looks fine in week one can become a flaky mess by month six.

Temperature extremes—and temperature cycling—are even more insidious. A cabinet that sees −10°C nights and +50°C days experiences expansion and contraction on every component, accelerating aging. Wide‑temperature industrial PCs exist for a reason; consumer hardware can’t survive this workload.

Moisture and condensation show up in food plants with washdowns, coastal facilities, and outdoor installations. Condensation forms during temperature swings, slowly corroding boards and connectors. Without proper ingress protection and enclosure design, sensors and edge devices will inevitably fail.

Finally, electrical noise and dirty power—harmonics, surges, ground loops—inject chaos into analog signals and crash poorly protected equipment. In industrial IoT deployments, ignoring power quality is a reliable way to turn your edge AI into a random number generator.

These forces don’t usually kill systems in days. They degrade sensors, hardware, and models over months, often just after the vendor has declared the pilot a success. Understanding them is table stakes for developing industrial edge AI that has to cope with temperature extremes and other demanding conditions.

Translating Conditions into Engineering Specs

Knowing that a plant is "hot, dusty, and loud" is not enough. To build environment-hardened systems, you need to translate qualitative descriptions into quantitative engineering requirements: temperature ranges, vibration levels, ingress protection ratings, and EMI/EMC compliance targets.

For temperature, that means specifying something like: operating range −20°C to +60°C, with defined temperature cycling rates. For vibration, you might define acceptable levels in g RMS over a given frequency band, based on ISO vibration standards or site measurements. For moisture and particulates, ingress protection ratings matter: an IP65 enclosure is dust‑tight and protects against low‑pressure water jets; IP67 is dust‑tight and tested for temporary immersion (IEC 60529). An immersion rating does not by itself imply protection against water jets, so a washdown area may call for a jet rating or a dual‑rated enclosure.

Ingress protection rating isn’t just a checkbox. An IP65 sensor in a washdown food plant might still be at risk if cable glands are mis‑specified or if cleaning chemicals attack seals. Similarly, EMI/EMC compliance with standards like IEC/EN 61000 tells you whether devices can survive common industrial interference (IEC 61000‑6‑2 overview).

Here’s what this looks like in practice. For an edge AI box in a food plant washdown area, a reasonable spec snippet might be:

- Operating temperature: 0°C to +50°C with defined temperature cycling during sanitation.

- Enclosure: stainless IP66 cabinet with appropriate cable glands and condensation management.

- Vibration: compliant with a relevant vibration testing standard for wall‑mounted industrial PCs.

- Power: filtered 24 VDC supply with surge protection and brown‑out tolerance.

- EMI/EMC: certified to IEC/EN 61000‑6‑2 for immunity in industrial environments.

Crucially, you don’t invent these in a conference room. You involve OT, maintenance, and reliability engineers who understand shift schedules, cleaning practices, and real exposure profiles. A serious industrial AI development company will insist on this step before quoting anything material.
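As a rough illustration, a spec like the one above can be captured as data and checked against a candidate device's datasheet before anything is ordered. The sketch below is a minimal example under stated assumptions: the class names, fields, and the two example enclosures are hypothetical, not taken from any vendor catalogue. It treats the water digits up to 6 as cumulative and the immersion digits (7 and 8) as a separate track, which is how IEC 60529 handles jet versus immersion ratings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvSpec:
    """Requirement for one installation point (hypothetical field names)."""
    temp_min_c: float
    temp_max_c: float
    ip_dust: int            # required first IP digit (6 = dust-tight)
    ip_water: int           # required second IP digit
    immunity_standard: str  # e.g. "IEC 61000-6-2"


@dataclass(frozen=True)
class DeviceRating:
    """Datasheet ratings for a candidate device or enclosure (hypothetical)."""
    temp_min_c: float
    temp_max_c: float
    ip_dust: int
    ip_water: tuple[int, ...]          # every second IP digit it is rated for
    immunity_standards: tuple[str, ...]


def water_ok(required: int, rated: tuple[int, ...]) -> bool:
    # Digits 1-6 (drips, spray, jets) are cumulative; 7 and 8 (immersion)
    # do not imply jet protection unless the device is dual-rated.
    if required <= 6:
        return any(required <= digit <= 6 for digit in rated)
    return any(digit >= required for digit in rated)


def spec_gaps(spec: EnvSpec, device: DeviceRating) -> list[str]:
    """List the requirement areas the device does not cover."""
    gaps = []
    if device.temp_min_c > spec.temp_min_c or device.temp_max_c < spec.temp_max_c:
        gaps.append("temperature range")
    if device.ip_dust < spec.ip_dust:
        gaps.append("dust ingress")
    if not water_ok(spec.ip_water, device.ip_water):
        gaps.append("water ingress")
    if spec.immunity_standard not in device.immunity_standards:
        gaps.append("EMC immunity")
    return gaps


# Example values only: a washdown spec and two made-up enclosures.
washdown = EnvSpec(0.0, 50.0, ip_dust=6, ip_water=6,
                   immunity_standard="IEC 61000-6-2")
ip67_box = DeviceRating(-20.0, 60.0, 6, (7,), ("IEC 61000-6-2",))
ip66_box = DeviceRating(-20.0, 60.0, 6, (6,), ("IEC 61000-6-2",))
```

Here `spec_gaps(washdown, ip67_box)` reports a water ingress gap even though IP67 looks like the higher number, which is exactly the kind of mismatch that is easy to miss when ratings are treated as a checkbox.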

What It Really Means to ‘Ruggedize’ Industrial AI

Ruggedization Across Hardware, Enclosures, and Power

Ruggedization is not a brand label; it’s a system property. Environment‑hardened industrial AI development starts from the assumption that everything, from silicon to mounting bracket, must be designed against those five environmental forces.

On the hardware side, that means ruggedized hardware such as fanless industrial PCs or embedded modules with wide‑temperature components, solid‑state storage, and isolated I/O. No spinning disks, no open fans pulling in dust. These devices are designed to withstand vibration and temperature cycling, not just office workloads.

Then you look at enclosures. A robust solution might place a fanless industrial PC inside an IP65 enclosure on a wall away from the worst vibration, with proper gasketing, cable glands, and drain or breather vents to handle condensation. Sensors are chosen with appropriate ingress protection and mounted with mechanical isolation where possible.

Power quality is just as important. You design for dirty power: surge protection, filters for harmonics, proper grounding, and sometimes a small UPS or DC buffer to ride through brown‑outs. Safe shutdown sequences ensure that even in a harsh environment, the system fails gracefully and doesn’t corrupt data or firmware. For many sites, an on‑premise deployment hardened this way is the only realistic choice.

Put together, this stack might look like a fanless industrial PC in a stainless IP66 cabinet, fed by a conditioned 24 VDC rail, wired to vibration and temperature sensors on nearby machines—engineered explicitly for dust, vibration, and washdowns.

Software and Model Ruggedization: Not Just Metal Boxes

Ruggedization doesn’t stop at steel and silicon. Environment-hardened AI also demands robust software and model design, especially for real-time inference on noisy, intermittently available data.

On the software side, that includes watchdog processes to restart services that hang, auto‑reconnect logic for flaky networks, local buffering of data during outages, and persistent local logging. Health monitoring for CPU temperature, disk health, and process status lets operations see issues before failures cascade.
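The auto‑reconnect logic mentioned above is often implemented as a retry loop with capped exponential backoff and some jitter, so that a fleet of devices does not hammer a broker the moment a link returns. The following is a minimal sketch, not a production client: `connect` stands for whatever function opens your link (hypothetical here), and the delay values are placeholders.

```python
import random
import time


def backoff_delays(base_s=1.0, cap_s=60.0, attempts=6):
    """Delays of base, 2*base, 4*base, ... seconds, each capped at cap_s."""
    return [min(cap_s, base_s * 2 ** i) for i in range(attempts)]


def connect_with_retry(connect, delays, sleep=time.sleep, jitter=random.random):
    """Call connect() until it succeeds or the delays run out.

    `connect` is any callable that returns a connection or raises OSError
    (ConnectionError and TimeoutError are subclasses). `sleep` and `jitter`
    are injectable so the loop can be tested without real waiting.
    """
    last_error = None
    for delay in [0.0] + list(delays):
        if delay:
            # Wait between 50% and 100% of the nominal delay.
            sleep(delay * (0.5 + 0.5 * jitter()))
        try:
            return connect()
        except OSError as exc:
            last_error = exc
    raise last_error
```

A watchdog or service supervisor would typically wrap this: if `connect_with_retry` finally raises, the process logs locally, keeps buffering data, and tries again later rather than exiting.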

At the model level, ruggedization means designing for uncertainty. You handle noisy sensor fusion by validating signal quality, using confidence thresholds, and detecting out‑of‑distribution inputs. A well‑designed condition monitoring model doesn’t just output “OK/Not OK”; it outputs a confidence score and flags when data quality is poor.

This is where fail-safe design matters. AI should have clear fallback rules: when confidence is low or data quality drops, the system downgrades to advisory‑only mode or defers to existing PLC logic. Especially for latency-sensitive workloads, you never want a flaky edge AI system making unreviewed control decisions.
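The fallback rules above can be made explicit in a few lines. This sketch assumes a model that reports a confidence between 0 and 1 and a simple signal quality measure (the share of samples that are finite and within a plausible range); the thresholds, mode names, and function names are illustrative assumptions, not values from any standard.

```python
import math
from enum import Enum


class OutputMode(Enum):
    NORMAL = "normal"                # AI output used as configured
    ADVISORY_ONLY = "advisory_only"  # shown to operators, nothing automated
    DEFER_TO_PLC = "defer_to_plc"    # AI output suppressed entirely


def signal_quality(samples, lo, hi):
    """Fraction of samples that are finite and inside [lo, hi]."""
    if not samples:
        return 0.0
    good = sum(1 for s in samples if math.isfinite(s) and lo <= s <= hi)
    return good / len(samples)


def output_mode(confidence, quality, min_confidence=0.8, min_quality=0.9):
    """Pick the most conservative mode the inputs require.

    The comparisons are written as `not x >= limit` so that a NaN
    confidence or quality also falls through to the safer mode.
    """
    if not quality >= min_quality:
        return OutputMode.DEFER_TO_PLC
    if not confidence >= min_confidence:
        return OutputMode.ADVISORY_ONLY
    return OutputMode.NORMAL
```

Checking data quality before confidence is a deliberate choice in this sketch: a model can be confidently wrong on garbage input, so bad input should override whatever score the model reports.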

Done correctly, rugged edge AI becomes a reliable advisor that keeps working through dust, heat, and glitches. It’s also where we frequently tie into broader predictive maintenance and condition monitoring solutions that span both edge and central systems.

An Engineering-First Methodology for Environment-Hardened Industrial AI

Step 1: Capture Environmental and Operational Requirements Up Front

Most industrial AI failures are baked in during the first few weeks—when requirements are treated as a formality. An engineering‑first industrial AI development company for harsh environments does the opposite: it invests heavily in discovery with OT, reliability, and AI engineers together.

A structured workshop should answer questions like: Where exactly will devices be mounted? How close are they to major vibration or heat sources? What cleaning routines, washdowns, or blast‑downs occur nearby? What contaminants—dust, oil mist, corrosive vapors—are present? What network is truly available at those points? What uptime and data loss windows are acceptable?

This is also where industrial data pipelines and process context are mapped. How often are machines started and stopped? What maintenance logs exist? How are alarms currently handled? Without this, you’re guessing at both environment and operations.

If you’re evaluating vendors, ask them:

- "Can you show me an example of environmental requirement specs (temperature, vibration, IP/EMI) from a past project?"

- "How do you decide between edge, on‑prem, and cloud for latency-sensitive workloads?"

- "Which OT stakeholders do you involve before designing the architecture?"

- "What’s your process for handling data drift and calibration changes?"

Vendors who can’t answer these concretely probably don’t know how to develop industrial AI that survives harsh environments. Our own AI discovery and requirements workshops are explicitly structured to surface these constraints before any code is written.

Step 2: Design the Edge Architecture Around Constraints, Not Ideals

Once the constraints are clear, you design architecture around them—not around a vendor’s preferred cloud or off‑the‑shelf template. For industrial automation, this often means leading with edge AI for real-time inference where connectivity is unreliable or latency budgets are tight.

You decide what runs where: models that support safety‑critical or fast control loops usually run as edge inference near the machine, possibly in an industrial PC or embedded AI module. Aggregated analytics, fleet‑level learning, and heavy retraining can live in a central on-premise deployment or the cloud, depending on data sovereignty and security policies.

Consider two architectures for a process plant. The cloud‑centric version sends vibration and process signals upstream, doing most inferencing remotely and pushing recommendations back. It works fine in a well‑connected greenfield facility but falls apart in brownfield sites with intermittent links. The edge‑centric version runs core models locally, buffering data during outages and syncing when possible—far better aligned with industrial IoT reality.
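One way to make the edge versus cloud decision less of a matter of taste is to write the constraints down as numbers and test each workload against them. The function below is a deliberately simple sketch: the parameter names are hypothetical, and a real decision would also weigh security, data sovereignty, and hardware cost.

```python
def choose_placement(latency_budget_ms, link_rtt_p99_ms, remote_infer_ms,
                     worst_outage_s, tolerable_gap_s):
    """Return ("edge" or "remote", reason) for one inference workload.

    latency_budget_ms: how quickly a result must be available
    link_rtt_p99_ms:   measured 99th-percentile round trip on the site link
    remote_infer_ms:   inference time at the remote end
    worst_outage_s:    longest link outage seen or expected at this location
    tolerable_gap_s:   how long the workload may go without any output
    """
    if link_rtt_p99_ms + remote_infer_ms > latency_budget_ms:
        return "edge", "round trip plus remote inference exceeds the latency budget"
    if worst_outage_s > tolerable_gap_s:
        return "edge", "link outages last longer than the workload can go without output"
    return "remote", "link meets both the latency budget and the outage tolerance"
```

For instance, a trip-prevention advisory with a 200 ms budget on a link with a 350 ms round trip lands on the edge regardless of outages, while a nightly fleet report with an hour of slack can stay remote.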

You also decide between industrial PCs, PLC add‑ons, and embedded modules. If the environment is extremely harsh, integrating embedded AI into existing PLC racks or using hardened PLC‑adjacent modules can be safer. Where conditions allow, a ruggedized edge gateway with good vibration and temperature specs may be ideal.

Step 3: Build Data Pipelines for Noisy, Drifting, and Intermittent Signals

Industrial data is messy by design. Sensors drift out of calibration, operators override controls, maintenance events change machine signatures, and connectivity drops packets. Industrial data pipelines must embrace this reality.

For predictive maintenance and condition monitoring, you don’t just stream raw timeseries into a model. You track calibration events, attach maintenance logs, and tag known disturbances (like washdowns or changeovers). You design preprocessing steps that detect sensor drift, outliers, and missing data—and either correct them or mark them explicitly.

Connectivity is treated as intermittent by default. That means store‑and‑forward buffering at the edge, with eventual consistency when links are restored. Models can continue running on recent local data, while non‑critical analytics update when the network allows. This is where robust sensor fusion and OT/IT convergence practices pay off.
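Store‑and‑forward buffering can be sketched with nothing more than SQLite from the Python standard library. The example below is a minimal illustration under assumptions: the `StoreAndForward` class, the table name, and the `send` callable are hypothetical, and a real deployment would add size limits and retention rules. A row is deleted only after `send` succeeds, which gives at-least-once delivery in the original order.

```python
import json
import sqlite3


class StoreAndForward:
    """Durable outbox: readings are kept locally until the uplink accepts them."""

    def __init__(self, path=":memory:"):
        # Use a file path on the edge device so the queue survives reboots.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS outbox ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)"
        )
        self.db.commit()

    def enqueue(self, reading: dict) -> None:
        self.db.execute(
            "INSERT INTO outbox (payload) VALUES (?)", (json.dumps(reading),)
        )
        self.db.commit()

    def pending(self) -> int:
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]

    def flush(self, send, batch=100) -> int:
        """Send queued readings oldest first; stop at the first link error.

        `send` is any callable that raises OSError when the link is down.
        Returns the number of readings delivered in this call.
        """
        sent = 0
        while True:
            rows = self.db.execute(
                "SELECT id, payload FROM outbox ORDER BY id LIMIT ?", (batch,)
            ).fetchall()
            if not rows:
                return sent
            for row_id, payload in rows:
                try:
                    send(json.loads(payload))
                except OSError:
                    return sent  # keep this row and everything after it
                self.db.execute("DELETE FROM outbox WHERE id = ?", (row_id,))
                self.db.commit()
                sent += 1
```

Because delivery is at-least-once, the receiving side should tolerate duplicates, for example by keying on a sequence number or timestamp carried in each reading.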

Imagine a vibration monitoring pipeline where sensors slowly drift. Periodic manual calibration checks are logged by maintenance. The pipeline correlates sudden changes in baseline with these events, updating the model and flagging potential drift when patterns don’t match. That’s environment-aware AI, not blind statistics.
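The correlation step in that scenario might look something like the sketch below. It uses a simple before/after window comparison as a stand-in for a proper change-point method, and the labels, window size, threshold, and tolerance are illustrative assumptions. A sudden baseline shift close to a logged maintenance or calibration event is treated as a reason to re-baseline; a shift with no matching event is flagged as possible drift.

```python
from statistics import mean


def largest_shift(values, window):
    """Find the index where the mean of the next `window` samples differs
    most from the mean of the previous `window` samples.

    Returns (index, delta), or (None, 0.0) if there is no shift or not
    enough data.
    """
    best_i, best_delta = None, 0.0
    for i in range(window, len(values) - window + 1):
        delta = mean(values[i:i + window]) - mean(values[i - window:i])
        if abs(delta) > abs(best_delta):
            best_i, best_delta = i, delta
    return best_i, best_delta


def classify_shift(times_s, values, event_times_s,
                   window=5, threshold=0.5, tolerance_s=3600):
    """Label the largest baseline shift using the maintenance log.

    times_s:       sample timestamps in seconds
    event_times_s: timestamps of logged maintenance or calibration events
    """
    i, delta = largest_shift(values, window)
    if i is None or abs(delta) <= threshold:
        return "stable"
    near_event = any(abs(times_s[i] - t) <= tolerance_s for t in event_times_s)
    if near_event:
        return "rebaseline_after_maintenance"
    return "possible_sensor_drift"
```

Note that this only catches step-like changes. Slow drift that never produces a sharp shift would need a separate check, such as comparing against a long-term reference baseline.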

Step 4: Validate with Environmental and Operational Testing

Finally, you test like an engineer, not like an app developer. For environment‑hardened industrial AI, this means explicit environmental and operational testing, not just functional demos.

On the environmental side, that can include temperature cycling, vibration testing, ingress tests, and EMI/EMC checks aligned with target standards (IEC/EN 61000‑6‑2 example). Highly Accelerated Life Testing (HALT) or Highly Accelerated Stress Screening (HASS) may be justified for particularly harsh environments.

On the operational side, you run soak tests in limited real locations: one production line, one substation, one mine face. You monitor MTBF, data loss windows, and model performance under real stress. Only after systems survive both environment and operations do you roll out fleet‑wide.

A disciplined industrial AI consultancy that builds ruggedized machine learning systems will define acceptance criteria up front: maximum tolerated downtime, acceptable levels of missed data, alert precision and recall under known disturbances. Then it will refuse to call the project “done” until those criteria are met in the field.
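Acceptance criteria are easier to hold people to when they are written as numbers and checked mechanically against soak-test results. The sketch below shows one way to do that; the metric names and every threshold in `CRITERIA` are hypothetical placeholders to be replaced with values agreed with OT and reliability teams.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Alert precision and recall from soak-test counts."""
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


def mtbf_hours(operating_hours, failures):
    """Mean time between failures; infinite if nothing failed."""
    return operating_hours / failures if failures else float("inf")


# Hypothetical thresholds: ("min", x) means observed >= x, ("max", x) means <= x.
CRITERIA = {
    "mtbf_hours": ("min", 2000.0),
    "data_loss_pct": ("max", 0.5),
    "precision": ("min", 0.90),
    "recall": ("min", 0.80),
}


def unmet(observed, criteria):
    """Return the names of criteria the observed results do not satisfy."""
    failed = []
    for name, (kind, limit) in criteria.items():
        value = observed[name]
        ok = value >= limit if kind == "min" else value <= limit
        if not ok:
            failed.append(name)
    return failed


# Made-up soak-test result: 180 days, 2 hardware failures, 45 true alerts,
# 5 false alerts, 15 missed events, 0.3% of samples lost.
precision, recall = precision_recall(45, 5, 15)
observed = {
    "mtbf_hours": mtbf_hours(180 * 24, 2),
    "data_loss_pct": 0.3,
    "precision": precision,
    "recall": recall,
}
```

With these made-up numbers, `unmet(observed, CRITERIA)` returns `["recall"]`: the hardware and data path pass, but the model still misses too many events to call the pilot done.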

Safe Integration with PLCs, SCADA, and Industrial Control Systems

Design AI as a Co-Pilot, Not a Rogue Controller

Even if your edge AI is perfectly ruggedized, it still has to play nicely with control systems. In real plants, the safest pattern is to design AI as a co‑pilot, not a rogue controller.

Initially, AI typically operates in advisory‑only mode. It analyzes sensor data and process trends, then surfaces alerts, recommended actions, or risk scores to operators and engineers. The existing PLC, DCS, or SIS logic remains fully in charge of control.

Over time, as confidence grows, you can move toward “soft interlocks” and more automated actions. But fail-safe design remains critical: if the AI goes offline, loses data, or reports low confidence, the system must gracefully revert to baseline control behavior. This is non‑negotiable in safety‑critical industrial automation.

For example, an AI model might flag a pump as at risk of cavitation based on sensor fusion across pressure, flow, and vibration. Initially, it only recommends a planned inspection and potential derating; the PLC logic remains unchanged. Later, after months of successful detection, you might allow the AI to trigger a soft alarm that suggests a controlled shutdown, still within PLC‑defined limits.
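The staged rollout in that example can be expressed as a small piece of display logic on the HMI or gateway side. This is a sketch under assumptions: the `Advisory` fields, the 30-second staleness limit, the 0.8 risk threshold, and the message strings are all hypothetical. The function only produces text for operators; it never writes setpoints, and a missing or stale advisory always falls back to a message that leaves PLC logic in charge.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Advisory:
    asset: str
    risk_score: float   # 0.0 (healthy) to 1.0 (failure likely)
    issued_at_s: float  # timestamp of the AI's last update, in seconds


def hmi_message(advisory: Optional[Advisory], now_s: float,
                soft_alarm_enabled: bool = False,
                max_age_s: float = 30.0, risk_alarm: float = 0.8) -> str:
    """Decide what the operator sees for one asset.

    soft_alarm_enabled stays False during the advisory-only phase and is
    switched on per asset only after the model has earned trust.
    """
    if advisory is None or now_s - advisory.issued_at_s > max_age_s:
        # AI offline or heartbeat stale: say so and change nothing else.
        return "AI advisory unavailable; PLC logic unchanged"
    if advisory.risk_score < risk_alarm:
        return "no advisory"
    if soft_alarm_enabled:
        return f"SOFT ALARM {advisory.asset}: consider controlled shutdown"
    return f"ADVISORY {advisory.asset}: plan inspection, consider derating"
```

Keeping the escalation stage as an explicit flag makes the rollout reversible: turning `soft_alarm_enabled` off returns the asset to advisory-only behaviour without touching the model or the PLC program.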

Integration Patterns That Actually Work in Brownfield Plants

In brownfield environments, you rarely get to rip and replace. Integration patterns have to respect existing PLC integration, SCADA architectures, and security constraints.

Common approaches include: reading tags from PLCs or SCADA via OPC UA, MQTT, or Modbus; writing advisory tags back for display in existing HMI screens; and using sidecar edge gateways that sit on a mirror port of control networks. This keeps AI visible but separable from core control.

Best practices from OT/IT convergence guides by ISA and NIST emphasize standard industrial protocols and observability (NIST ICS guide). You want integrations that are reversible—easy to roll back if needed—and avoid hard dependencies on proprietary protocols that increase vendor lock‑in.

Done well, AI becomes another trusted data source in your SCADA and MES stack, not a fragile side project. For many organizations, this incremental approach is the only realistic path to safe adoption.

How to Evaluate an Industrial AI Development Company for Harsh Sites

Questions That Separate Slideware from Field-Proven Vendors

If you’ve read this far, you probably already suspect that most vendors are stronger on slides than on the factory floor. The good news: a few targeted questions quickly reveal who actually knows harsh environments.

Ask prospective partners:

- “Can you walk me through a deployment you did in a dusty, high‑vibration, or high‑heat environment?”

- “What ingress protection rating (for example, IP65 or IP67) did you choose for the enclosures there, and why?”

- “How do you verify EMI/EMC compliance for your edge setups, and which standards (e.g., IEC/EN 61000‑6‑2) do you target?”

- “What temperature ranges and vibration testing specs did your hardware need to meet?”

- “Show me an example of an environmental test plan you’ve used for an industrial edge AI deployment exposed to temperature extremes.”

- “How do you detect and respond to model and data drift in deployed systems?”

- “What’s your approach to OT/IT convergence and PLC integration in brownfield plants?”

- “Tell me about a failed pilot you inherited and how you fixed it.”

Vendors who answer with specifics (numbers, standards, test methods, concrete case studies) are much more likely to deliver environment‑hardened industrial AI systems that actually survive.

Red Flags That a Vendor Treats Industrial AI Like Office IT

Just as there are positive signals, there are also clear red flags that a vendor doesn’t really understand why enterprise AI fails in industrial environments.

Watch for:

- Architectures that put all inference in the cloud for latency‑critical use cases, with no serious plan for edge AI or on‑premise deployment.

- No mention of IP ratings, vibration specs, or temperature ranges—hardware is an afterthought.

- Using cheap consumer‑grade gateways on the plant floor, often mounted directly to vibrating equipment.

- No field test phase—straight from lab demo to “let’s roll it out to ten sites.”

- Workshops that include IT and data science, but no OT, maintenance, or reliability engineers.

Each of these is a leading indicator of painful pilots: frequent hardware failures, noisy models, and operators who quietly ignore yet another dashboard. If you see several of them, your best move may be to reshape the engagement or walk away before you commit capital.

By contrast, the best industrial AI development company for factory floor deployment will talk easily about grades of dust, process areas, and environmental stress, not just GPUs and dashboards.

How Buzzi.ai’s Approach Is Different

At Buzzi.ai, we start with the environment, not the model. Our teams often begin an engagement with a plant walkdown, standing in the compressor room, in the washdown area, or next to the mill stand, and mapping out what dust, vibration, temperature extremes, and moisture really look like.

From there, we run structured discovery that combines AI engineers with OT, automation, and reliability specialists. We define environmental specs, choose appropriate industrial PCs and enclosures, design data pipelines for noisy and drifting sensors, and plan for both edge AI and central analytics. This cross‑disciplinary approach is baked into our AI implementation services.

For one manufacturing client, this meant rescuing a failing predictive maintenance pilot by moving models from a cloud‑only setup to ruggedized edge gateways inside IP66 cabinets, adding signal quality checks and confidence‑based alerts. For a utilities site, it meant designing tailor-made industrial AI agents that ran in remote substations with intermittent connectivity, using robust store‑and‑forward pipelines and conservative fail‑safe design around critical protection relays.

We position ourselves not just as an industrial AI development company for harsh environments, but as a long‑term partner for keeping environment‑hardened systems running. That includes planning for model maintenance, periodic retraining, and environmental re‑validation as processes and plants evolve.

If you’re interested in a deeper discussion, you can explore our tailor-made industrial AI agents and how we adapt them for real‑world industrial conditions.

Conclusion: Build Industrial AI That Survives the Plant, Not Just the Pilot

Most industrial AI doesn’t fail because the data scientists got the loss function wrong. It fails because dust, vibration, heat, moisture, and electrical noise were never treated as first‑class requirements—and the systems were quietly crushed by the realities of the factory floor.

To build industrial AI that survives harsh environments, you need ruggedization across the entire stack: hardware, enclosures, power, software, and models. You need an engineering‑first methodology that starts with real environmental and operational requirements, designs architectures around constraints, hardens industrial data pipelines, and validates everything under real stress.

Safe integration with PLCs, SCADA, and industrial control systems ensures AI acts as a co‑pilot, not an uncontrolled actor—respecting safety and existing industrial automation practices. And choosing a partner that lives in these realities, not just in cloud diagrams, is the difference between a flashy pilot and a fleet of stable deployments.

A practical next step: audit one of your existing or planned industrial AI initiatives against the ruggedization framework in this article. Where are the environmental specs? How is edge AI handled? What are the fail‑safe modes? If you’d like a structured harsh‑environment readiness assessment and architecture review, you can schedule an industrial AI ruggedization assessment with us before your next deployment or scale‑up.

FAQ: Industrial AI for Harsh Environments

Why do so many industrial AI pilots fail when moved from the lab to the factory floor?

Most pilots are validated under lab conditions—clean power, stable temperature, great connectivity—then deployed into dust, vibration, and heat they were never designed for. Edge devices overheat, sensors drift, and data quality collapses, so models that looked great in testing suddenly underperform. The issue is usually environmental engineering and system design, not the underlying machine learning.

What environmental conditions must an industrial AI system be designed for in heavy industry?

In heavy industry, you should plan for dust and particulates, vibration and mechanical shock, temperature extremes and cycling, moisture and condensation, and electrical noise or dirty power. Each of these has specific impacts on sensors, enclosures, and electronics over time. A serious design process quantifies all five for each installation point before selecting hardware or finalizing architecture.

How do dust, vibration, and heat affect edge AI devices and sensors over time?

Dust clogs fans, coats lenses, and abrades connectors, slowly degrading cooling and signal quality. Vibration fatigues solder joints, loosens terminals, and damages drives, leading to intermittent faults that are hard to diagnose. Heat accelerates aging of all components and can cause thermal shutdowns or performance throttling, undermining real-time inference when you need it most.

What does it practically mean to ruggedize an industrial AI solution?

Ruggedization means designing the entire stack—hardware, enclosures, power, software, and models—to survive specific environmental stresses. Practically, that can include fanless industrial PCs, IP65/IP67 enclosures, surge‑protected and filtered power, watchdogs and buffering in software, and models that account for noisy data and fail-safe operation. It’s not a single product feature; it’s a disciplined engineering approach.

How can I specify temperature, vibration, IP rating, and EMI requirements for an AI project?

You start by mapping where equipment will be installed and what it will experience—using OT and reliability engineers’ knowledge plus any available measurements. From there, you define operating temperature ranges, allowable vibration levels, ingress protection targets (e.g., IP65 or IP67), and relevant EMI/EMC standards such as IEC/EN 61000 series. Many organizations find it helpful to work with an expert partner for this step; Buzzi.ai’s AI discovery and requirements workshops are designed to capture exactly these specifications.

What testing is required to prove an AI deployment is ready for a harsh industrial environment?

Beyond basic functional tests, you should run environmental tests like temperature cycling, vibration testing, ingress checks, and EMI/EMC validation against target standards. Operationally, limited field soak tests on real lines or assets validate uptime, data loss windows, and model performance under real disturbances. Only when both environmental and operational criteria are met should you consider scaling across multiple sites.

Which hardware and enclosure options work best for rugged edge AI deployments?

Fanless industrial PCs or embedded AI modules with wide-temperature ratings and solid-state storage are a strong foundation. Pair them with appropriately rated enclosures—often stainless IP65 or IP66 cabinets—with correct gasketing, cable glands, and condensation management for the environment. The “best” option is always contextual: your dust levels, washdown practices, vibration exposure, and mounting constraints drive the right combination.

How should industrial data pipelines handle noisy, drifting, or intermittent sensor data?

Robust pipelines tag calibration and maintenance events, detect sensor drift, and distinguish genuine anomalies from artifacts like washdowns or mode changes. They use store‑and‑forward buffering and eventual consistency to cope with intermittent connectivity, ensuring models can still run locally when networks are down. The goal is to make the pipeline aware of operational context, not just raw time‑series statistics.

How do you safely integrate AI with existing PLCs, SCADA, and MES systems?

Safe integration treats AI as an advisor first, using standard industrial protocols (OPC UA, MQTT, Modbus) to read and write advisory tags. AI outputs appear as additional information on existing SCADA and MES displays while PLC logic remains authoritative for control, especially early on. Over time, you can add soft interlocks or semi‑automated actions, but with clear fail-safe behavior if AI confidence drops or systems go offline.

How can I tell if an industrial AI development company truly understands harsh environments?

Ask for concrete examples of deployments in hot, dusty, vibrating, or wet environments, and listen for specific temperature, vibration, IP, and EMI/EMC details. Check whether they run environmental tests, insist on field soak pilots, and involve OT and reliability engineers from the start. Vendors that speak fluently about ingress protection, industrial PCs, and environmental stress—not just cloud and dashboards—are far more likely to deliver reliable systems at scale.