Fine-Tune an LLM for Business Only When It Truly Pays Off

Use this pragmatic framework to decide when to fine-tune an LLM for business versus doubling down on prompts, RAG, and tooling to maximize ROI.

Most teams that decide to fine-tune an LLM for business do it for the wrong reason: it feels like the “serious” thing to do. In reality, many of those projects quietly stall or ship brittle systems that perform no better than a well-designed prompt library plus retrieval-augmented generation would have.

The pattern is usually the same. The base model is integrated with a single naive prompt, results are underwhelming, and someone suggests LLM fine-tuning as the magic fix. Months and a sizable invoice later, the business impact still looks suspiciously small.

What’s missing is a decision framework. You need a clear way to decide when to keep investing in prompt engineering, RAG, and orchestration—and when the economics and constraints finally justify custom LLM fine-tuning. That’s what we’ll walk through here.

This guide is written for product, engineering, and operations leaders who care about business ROI more than model vanity. At Buzzi.ai, we build production AI agents and workflows across industries, and we often advise clients not to fine-tune until very specific criteria are met. In the pages that follow, we’ll show you those criteria and how to apply them to your own use cases.

The Real Question: Are You Hitting the Prompt-Only Ceiling Yet?

Before you fine-tune an LLM for business, you need to know whether you’ve actually hit the ceiling of what strong prompt engineering and orchestration can deliver. Most teams haven’t. They’re comparing fine-tuning to a weak baseline rather than to a serious attempt to optimize prompts, context, and tools.

Think of it like weightlifting. If you’re still learning basic form, you don’t blame the barbell when you can’t hit big numbers; you focus on fundamentals. With LLMs, that means your first responsibility is to get strong baseline performance from off-the-shelf models with good prompts and integration patterns.

Start With a Strong Baseline Before You Touch Fine-Tuning

A mature baseline setup doesn’t look like “call GPT with this one big prompt and hope for the best.” It looks like a deliberate system: role conditioning in system prompts, templates per task, few-shot examples, and style guides embedded into your prompt libraries. You should be A/B testing prompts the same way you’d A/B test landing pages.

For a support chatbot, a naive prompt might say: “You are a helpful support agent. Answer user questions.” A structured baseline could instead have:

- A system prompt defining brand voice, escalation rules, and forbidden behaviors.

- Task-specific templates (refunds, password resets, feature explanation) with explicit required outputs.

- Few-shot examples showing ideal answers, including how to say “I don’t know” and when to escalate.

With this kind of setup, we routinely see support bots closing 70–80% of tickets correctly on a base model, before any LLM fine-tuning. Only then does it make sense to ask whether the remaining gap is worth paying to close.
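As a rough illustration of the structured baseline described above, the sketch below assembles a chat-style message list from a system prompt, a per-task template, and few-shot examples. Everything here is hypothetical: the company name, the prompt texts, the names `SYSTEM_PROMPT`, `TASK_TEMPLATES`, `FEW_SHOT`, and `build_messages`, and the role/content message shape are assumptions for illustration, not any particular vendor's API.

```python
# Hypothetical prompt-assembly helper; all names and texts are illustrative.
SYSTEM_PROMPT = (
    "You are a support agent for ExampleCo. Use a friendly, concise tone. "
    "Escalate billing disputes to a human. Never promise refunds outside policy."
)

# One template per task, each stating the required outputs explicitly.
TASK_TEMPLATES = {
    "refund": "Task: refund request.\nRequired output: eligibility, next step.\nCustomer message: {message}",
    "password_reset": "Task: password reset.\nRequired output: numbered steps.\nCustomer message: {message}",
}

# Few-shot pairs (customer message, ideal answer), including an "I don't know" case.
FEW_SHOT = {
    "refund": [
        (
            "I want my money back for an order from last year.",
            "I don't know whether that order is still eligible, so I'm escalating this to a specialist.",
        ),
    ],
    "password_reset": [],
}


def build_messages(task: str, message: str) -> list[dict]:
    """Return the system prompt, few-shot turns, then the templated user turn."""
    if task not in TASK_TEMPLATES:
        raise ValueError(f"No template for task: {task}")
    template = TASK_TEMPLATES[task]
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for user_text, ideal_answer in FEW_SHOT.get(task, []):
        messages.append({"role": "user", "content": template.format(message=user_text)})
        messages.append({"role": "assistant", "content": ideal_answer})
    messages.append({"role": "user", "content": template.format(message=message)})
    return messages
```

Keeping templates and examples in data structures rather than inline strings is what makes it practical to version them and test one variant against another.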

Critically, you must measure this baseline. Track task-level metrics like accuracy, first-contact resolution, CSAT, task completion rate, and average editing time for human reviewers. Baseline model performance isn’t a vibe; it’s a dataset you can evaluate and re-run whenever you change prompts or models.
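To make "a dataset you can evaluate and re-run" concrete, here is a minimal sketch that turns human-reviewed cases into baseline metrics. The `ReviewedCase` fields and the `summarize_baseline` function are assumed names; a real setup would likely track more metrics, such as CSAT.

```python
from dataclasses import dataclass


@dataclass
class ReviewedCase:
    correct: bool                 # did a human reviewer accept the answer?
    resolved_first_contact: bool  # was the issue closed without a follow-up?
    edit_seconds: float           # time the reviewer spent fixing the output


def summarize_baseline(cases: list[ReviewedCase]) -> dict[str, float]:
    """Aggregate reviewed cases into the task-level metrics tracked over time."""
    if not cases:
        raise ValueError("Need at least one reviewed case")
    n = len(cases)
    return {
        "accuracy": sum(c.correct for c in cases) / n,
        "first_contact_resolution": sum(c.resolved_first_contact for c in cases) / n,
        "avg_edit_seconds": sum(c.edit_seconds for c in cases) / n,
    }
```

Running the same function after every prompt or model change gives a like-for-like comparison instead of an impression.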

Prompt Engineering Techniques You Should Exhaust First

If you’re wondering how to decide between prompt engineering and LLM fine-tuning, the answer starts with exhausting a set of high-leverage techniques. Many of these can unlock dramatic quality gains without touching training pipelines or custom models.

Some of the best ways to optimize an LLM before fine-tuning include:

- Chain-of-thought reasoning: Ask the model to think step-by-step, then optionally hide the reasoning from the end user.

- Tool calling and function integration: Let the model call search, databases, CRMs, or calculators instead of hallucinating facts.

- Explicit constraints: Specify allowed formats, forbidden phrases, and strict length limits.

- Structured outputs: Require JSON schemas or fixed templates, which dramatically improve reliability in workflows.

- Guardrails and policies: Wrap prompts in policy engines that enforce content rules, escalation logic, and safety filters.
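The structured-output item in the list above can be sketched as a strict parser that rejects any reply breaking the expected contract. The field names in `REQUIRED_FIELDS` and the function `parse_structured_reply` are hypothetical; a production system might use a full JSON Schema validator instead of this hand-rolled check.

```python
import json

# Hypothetical contract: field name -> required Python type.
REQUIRED_FIELDS = {"category": str, "escalate": bool, "reason": str}


def parse_structured_reply(raw: str) -> dict:
    """Parse a model reply and reject anything that breaks the contract."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("Reply must be a JSON object")
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"Missing field: {field}")
        if not isinstance(data[field], expected):
            raise ValueError(f"Field {field} should be {expected.__name__}")
    return data
```

A rejected reply can then be retried or escalated rather than passed into a downstream workflow.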

On top of that, introduce retrieval-augmented generation for knowledge-heavy tasks. For internal knowledge assistants, policy Q&A, or support, RAG is often the single biggest upgrade: you ground model answers in a curated knowledge base without changing the model itself.
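A toy sketch of the retrieval step may help here. It ranks documents by simple word overlap, which is only a stand-in for the embedding or search index a real RAG system would use. The knowledge base contents and the names `retrieve` and `build_grounded_prompt` are invented for illustration.

```python
import re

# Toy knowledge base; in practice this would be a curated, versioned document store.
KNOWLEDGE_BASE = {
    "refund-policy": "Refunds are available within 30 days of purchase with a receipt.",
    "password-reset": "Reset your password from the login page using the Forgot Password link.",
    "shipping": "Standard shipping takes five business days.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, top_k: int = 1) -> list[str]:
    """Rank document ids by word overlap with the question."""
    q = tokenize(question)
    ranked = sorted(
        KNOWLEDGE_BASE,
        key=lambda doc_id: len(q & tokenize(KNOWLEDGE_BASE[doc_id])),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question: str) -> str:
    """Put retrieved passages in front of the question, tagged with source ids."""
    context = "\n".join(f"[{d}] {KNOWLEDGE_BASE[d]}" for d in retrieve(question))
    return (
        "Answer using only the context below and cite the source id.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Updating an entry in the knowledge base changes the next answer immediately, with no change to the model.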

Finally, treat your prompts like a product. Maintain prompt libraries, A/B test prompts on live traffic, and build experimentation into your LLM integration. For many business use cases, prompts + RAG + orchestration get you 80–90% of the way there. Fine-tuning is about whether that last 10–20% is worth serious money and complexity.
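One common way to A/B test prompts on live traffic is to assign each user to a variant deterministically, so the same user always sees the same prompt. The sketch below assumes a hypothetical `assign_variant` helper and string user ids; it covers only assignment, not the outcome analysis.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Hash user and experiment so each user always gets the same prompt variant."""
    if not variants:
        raise ValueError("Need at least one variant")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user in variant A of one test is not automatically in variant A of the next.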

A Fine-Tuning Decision Framework for Business Leaders

So when should a business fine-tune an LLM instead of just improving prompts? You need more than intuition. You need a repeatable LLM fine-tuning decision framework for companies that ties model customization to business outcomes, not just technical ambition.

We recommend evaluating four dimensions: performance gap, stability & consistency needs, data readiness, and operational capacity. Fine-tuning only makes sense when all four say “yes” at the same time. If any one of them is a hard “no,” your AI implementation roadmap should focus elsewhere.

Four Dimensions That Must Say “Yes” Before You Fine-Tune

Here’s the framework in plain language. Picture each dimension as a traffic light—green, yellow, or red.

- Performance gap: Is there a measurable model performance gap between current best prompts/RAG and your required outcome? Green means you’re close but not there (e.g., 85% vs 95% accuracy) and that closing the gap has substantial value.

- Stability & consistency: Do you need tightly controlled style, behavior, and guardrails that prompts and policies can’t reliably enforce? Green means small deviations are costly—regulatory, legal, or brand-wise.

- Data readiness: Do you have enough domain-specific data and labeled examples that reflect real production workloads? Green means thousands of high-quality, representative examples and a solid evaluation dataset.

- Operational capacity: Can your team handle model maintenance, monitoring, retraining, and rollout? Green means there’s a clear training pipeline, model monitoring, and owners for long-term upkeep.

If you see green-green-green-red, you don’t have an LLM fine-tuning problem; you have an operations problem. That’s why this is an enterprise AI strategy tool—not just a modeling checklist.
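The traffic-light logic can be written down as a small function, which also makes the "all four must be green" rule explicit. The dimension keys, the `Light` enum, and the wording of the recommendations are assumptions chosen for this sketch.

```python
from enum import Enum


class Light(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"


# Hypothetical keys for the four dimensions of the framework.
DIMENSIONS = ("performance_gap", "consistency", "data_readiness", "operations")


def recommend(scores: dict[str, Light]) -> str:
    """Fine-tune only when every dimension is green; any red is a blocker."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"Unscored dimensions: {missing}")
    blockers = [d for d in DIMENSIONS if scores[d] is Light.RED]
    if blockers:
        return "Do not fine-tune; fix first: " + ", ".join(blockers)
    if all(scores[d] is Light.GREEN for d in DIMENSIONS):
        return "Fine-tuning is justified; plan a narrow pilot"
    return "Not yet; keep improving prompts, RAG, and orchestration"
```

With three greens and a red on operations, the function names operations as the thing to fix, mirroring the green-green-green-red case above.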

Dimension 1: Is There a Material Performance Gap Worth Paying For?

First, quantify the model performance gap between your optimized prompt/RAG setup and the business target. This isn’t about BLEU scores or obscure metrics; it’s about plain numbers: error rate, escalation rate, handle time, or revenue uplift.

Suppose you have a contract summarization assistant. Today, with good prompts and RAG against your contract database, 85% of summaries are “usable with light edits” according to legal reviewers. The business requirement, however, is 95%+ usable summaries so that legal review time can be cut in half.

To measure the performance gap, build an evaluation dataset of real contracts and gold-standard summaries. Run A/B tests between prompt variants and, if possible, a vendor’s domain-tuned model or small pilot fine-tune. You want to know: can we realistically gain those 10 percentage points? And if we do, what’s the impact on lawyer hours saved and deal cycle time?
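A minimal sketch of that comparison, assuming each system's outputs have been judged as usable or not on the same eval set. The function names are hypothetical, and the standard error treats the two runs as independent samples, which is a simplification for a paired evaluation.

```python
import math


def usable_rate(labels: list[bool]) -> float:
    """Share of outputs a reviewer marked as usable."""
    return sum(labels) / len(labels)


def compare(baseline: list[bool], candidate: list[bool]) -> dict[str, float]:
    """Lift in percentage points, with a rough standard error for the difference."""
    if not baseline or len(baseline) != len(candidate):
        raise ValueError("Both runs must score the same non-empty eval set")
    n = len(baseline)
    p_base, p_cand = usable_rate(baseline), usable_rate(candidate)
    # Normal-approximation standard error, assuming independent samples.
    std_err = math.sqrt(p_base * (1 - p_base) / n + p_cand * (1 - p_cand) / n)
    return {
        "baseline_pct": 100 * p_base,
        "candidate_pct": 100 * p_cand,
        "lift_points": 100 * (p_cand - p_base),
        "std_err_points": 100 * std_err,
    }
```

On a 100-example set, an 85% versus 95% comparison yields a standard error of roughly 4 points under this approximation, so small lifts on small eval sets should be read with caution.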

As a rule of thumb, fine-tuning an LLM for business use cases should target improvements like:

- +5–10 percentage points accuracy on a meaningful metric, or

- 30% reduction in editing/review time, or

- Clear, quantifiable revenue uplift (e.g., upsell acceptance or conversion rates).

If you can’t connect the expected lift to a believable cost-benefit analysis, you’re not ready to fine-tune an LLM for business yet.

Dimension 2: Do You Need Tight Consistency, Style, and Guardrails?

Next is stability. Some use cases can tolerate variability—internal brainstorming tools, early-draft marketing copy, exploratory analysis. Others cannot. If your domain has strict governance and compliance requirements or narrow brand guidelines, consistency starts to matter as much as raw accuracy.

Consider a financial services chatbot that advises customers on loan options. It must always use precise regulatory disclosures, avoid forbidden phrases, and stay within a carefully defined brand tone. You can layer guardrails and policies around a base model, but if you still see drift in tone or phrasing, fine-tuning for style and policy adherence may become compelling.

The key question: how expensive is inconsistency? If one off-brand reply is a minor annoyance, prompts and guardrails are likely enough. If a single slip can mean regulatory trouble, customer churn, or brand damage, this dimension moves from yellow to green for fine-tuning.

Dimension 3: Do You Actually Have the Right Data to Fine-Tune?

Fine-tuning without solid data is like building an extension on a house with no foundation. You need both volume and quality of domain-specific data. That means labeled examples, diverse scenarios, and good coverage of edge cases.

For example, a ticket classification system that’s handled millions of historical support tickets with expert-labeled categories is in a strong position. You can build a robust training pipeline and a separate evaluation dataset that mimics production workloads. On the other hand, if you have 200 noisy examples and a vague labeling scheme, you don’t have a fine-tuning problem; you have a data problem.

You also need to think about model drift. If your domain changes quickly—like constantly updated product catalogs or rapidly shifting regulations—overfitting to historical data can become a liability. That doesn’t rule out fine-tuning, but it means you’ll need a clear retraining cadence and monitoring strategy.

Dimension 4: Can You Operate and Maintain a Fine-Tuned Model?

Fine-tuned models are not fire-and-forget assets; they’re ongoing products. You need MLOps capabilities: monitoring dashboards, incident response processes, versioning, and a repeatable training pipeline. Without these, you increase risk every time you ship a new custom model.

Operational readiness also includes understanding inference costs and infrastructure trade-offs. Sometimes, a managed base model with prompts and RAG is cheaper and more flexible than hosting multiple fine-tuned variants. Each new tuned model adds complexity, monitoring load, and model maintenance overhead.

We’ve seen companies fine-tune a model, deploy it, and then change their policy framework or product line months later. Without model monitoring in place, quality quietly degrades. Eventually someone notices, but by then you’re firefighting instead of executing an intentional AI implementation roadmap.

Measuring the Gap: How to Test Whether Fine-Tuning Is Warranted

Once the decision framework suggests that fine-tuning might be justified, the next step is empirical. You need to establish whether your LLM use case needs fine-tuning with data, not conviction. That means evaluation datasets, business-tied metrics, and structured experiments.

This is where teams often benefit from AI consulting services—not to push fine-tuning, but to design good tests. Before you sign a big contract, you should have a clear picture of what level of uplift justifies the spend and how you’ll measure it.

Design Evaluation Datasets That Mirror Real Workflows

An evaluation dataset is not an academic benchmark; it’s a mirror of your real production workloads. To build one, sample real user inputs from logs—customer questions, employee queries, real documents—not synthetic prompts that never occur in practice.

For an internal knowledge assistant, you might pull 500 past questions from employees along with the correct answers provided by subject-matter experts. Include common questions, edge cases, and messy real-world phrasing. Then annotate this data with ground truth outputs or scoring rubrics.

Crucially, keep your training data separate from your eval set to avoid leakage. If you later fine-tune, the eval dataset must remain untouched so you can honestly measure the model performance gap. Start with a small but representative set—200–500 examples per major use case is often enough to see patterns.
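One way to guard against leakage is to deduplicate examples before splitting, so near-identical inputs cannot land on both sides. The sketch below is an assumption-laden illustration: `fingerprint` only normalizes case and whitespace, and `split_without_leakage` is a hypothetical helper, not a standard library function.

```python
import hashlib
import random


def fingerprint(text: str) -> str:
    """Normalize whitespace and case so trivially different copies collide."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def split_without_leakage(examples: list[str], eval_size: int, seed: int = 0):
    """Deduplicate, then hold out eval_size examples that never appear in training."""
    unique: dict[str, str] = {}
    for text in examples:
        unique.setdefault(fingerprint(text), text)
    items = list(unique.values())
    if eval_size >= len(items):
        raise ValueError("eval_size must be smaller than the number of unique examples")
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    return items[eval_size:], items[:eval_size]  # (training, evaluation)
```

Fixing the seed means the held-out set stays the same across experiments, which is what allows honest before-and-after comparisons.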

Choose Metrics That Tie Directly to Business Value

Model metrics only matter if you can tie them to business value. When you fine-tune an LLM for business, you should be thinking in terms of improved KPIs, not nicer-looking outputs. That’s the only way to calculate a believable ROI.

For a ticket triage system, a 10-percentage-point accuracy improvement might mean thousands more tickets routed automatically each month instead of handled manually. Multiplying that volume by agent time per ticket and hourly cost gives you a concrete benefit. Similarly, better summarization quality can be translated into fewer minutes per document for reviewers.
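The triage arithmetic can be sketched in a few lines. The function and parameter names are hypothetical, and the calculation assumes every additional correctly routed ticket saves one manual routing step.

```python
def annual_triage_benefit(
    tickets_per_month: int,
    accuracy_lift: float,             # e.g. 0.10 for ten percentage points
    minutes_per_manual_route: float,  # agent time spent routing one ticket by hand
    agent_cost_per_hour: float,
) -> float:
    """Yearly savings from tickets that no longer need manual routing."""
    extra_auto_routed = tickets_per_month * accuracy_lift * 12
    hours_saved = extra_auto_routed * minutes_per_manual_route / 60
    return hours_saved * agent_cost_per_hour
```

For illustration only: with assumed inputs of 20,000 tickets a month, a 0.10 lift, 3 minutes per manual route, and $30 per agent hour, the function returns about $36,000 a year, which then has to be weighed against the cost of tuning and running the model.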

Pick a small set of clear metrics per use case—exact match, rubric-based scoring, human ratings, escalation rate—and map each to operational savings or revenue impact. That turns your LLM experimentation into a real cost-benefit analysis, not a beauty contest.

Run “What If” Experiments Before Committing to Fine-Tuning

Before you commit to full-blown LLM fine-tuning, run “what if” experiments that approximate what a more specialized model might achieve. This could include aggressive prompt variants, small adapter layers (where supported), or vendor-provided domain-tuned models.

For example, a company might test a vendor’s finance-specific model on their evaluation dataset alongside their best prompt-only setup. If the uplift is marginal—say, 2–3 percentage points on a metric that doesn’t move core business KPIs—they can confidently skip fine-tuning for now.

Document explicit decision thresholds: “We’ll only proceed if we see at least X% improvement on metric Y, which translates into $Z per year in benefit.” This is how you use an LLM fine-tuning decision framework for companies in practice. Be willing to walk away if the numbers don’t add up—and keep iterating on prompts, RAG, and workflow automation instead. For a deeper technical dive into the mechanics of tuning, you can later complement this framework with a detailed guide to fine-tuning LLMs for real business impact.
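Writing the threshold down as data before running the experiment makes it harder to move the goalposts afterwards. This sketch uses hypothetical names (`DecisionThreshold`, `should_proceed`) and maps X, Y, and Z from the sentence above onto fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionThreshold:
    metric: str                # Y: the metric the decision is based on
    min_lift_points: float     # X: minimum improvement, in percentage points
    min_annual_benefit: float  # Z: minimum yearly benefit, in dollars


def should_proceed(
    threshold: DecisionThreshold,
    measured_lift_points: float,
    projected_annual_benefit: float,
) -> bool:
    """Proceed only if both the lift and the projected benefit clear the bar."""
    return (
        measured_lift_points >= threshold.min_lift_points
        and projected_annual_benefit >= threshold.min_annual_benefit
    )
```

The dataclass is frozen so the agreed threshold cannot be quietly edited once results start coming in.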

Fine-Tuning vs Prompt Engineering vs RAG for Business Automation

So far we’ve treated fine-tuning vs prompt engineering as a decision. In practice, they are complementary tools inside a broader stack. For business automation, you’re usually combining three things: prompts, retrieval-augmented generation, and (sometimes) fine-tuned models.

The trick is matching each technique to the part of the workflow where it shines. This is where solid LLM integration and architecture design pay off.

When Prompt Engineering Alone Is Enough

Plenty of workflows don’t justify the complexity of fine-tuning at all. If your use case is generic writing, basic Q&A on public knowledge, or simple classification with wide tolerances, strong prompt engineering is often sufficient.

Take a marketing copy assistant for blog intros and email drafts. With good prompt templates, brand voice instructions, and a shared style guide, a base model can produce on-brand drafts that humans lightly edit. From an ROI perspective, the marginal gain from fine-tuning is small compared to the added model maintenance burden.

In these scenarios, investing in prompt libraries, experimentation workflows, and team training in prompt engineering is far more valuable than jumping into model customization. Your baseline model performance is already “good enough” for the stakes involved.

When RAG Beats Fine-Tuning for Knowledge-Heavy Tasks

When the primary challenge is missing or constantly changing knowledge, retrieval-augmented generation almost always beats fine-tuning. You want the model to pull from a living, updatable knowledge base—not memorize everything into weights.

Imagine a policy Q&A assistant in a regulated industry. Policies change monthly. If you fine-tune, you’ll be retraining constantly and still risk stale answers. With RAG over a versioned knowledge base, you can update content instantly and maintain clear audit trails of which document each answer came from.

That’s why many modern enterprise workflows pair RAG for facts with base models for language. Fine-tuning in this context is reserved for narrow tasks like classification or style adherence, not for encoding the entire knowledge base.

Scenarios Where Fine-Tuning Delivers a Step-Change

There are, however, scenarios where fine-tuning genuinely delivers a step-change. These are usually high-volume, high-value, and relatively stable domains where the model needs to internalize nuanced patterns rather than just retrieve information.

Consider an invoice classification and enrichment system. You might have millions of historical invoices and a clear schema of fields to extract: vendor, line items, tax codes, cost centers. Here, custom LLM fine-tuning beats prompt engineering when you can convert small accuracy gains into massive automation savings across millions of documents.

In one public case study, a company used fine-tuning to significantly improve support ticket triage quality and speed, reducing manual routing load by leveraging their own labeled history at scale. This is exactly the kind of high-volume, repetitive workflow where fine-tuning can pay off.

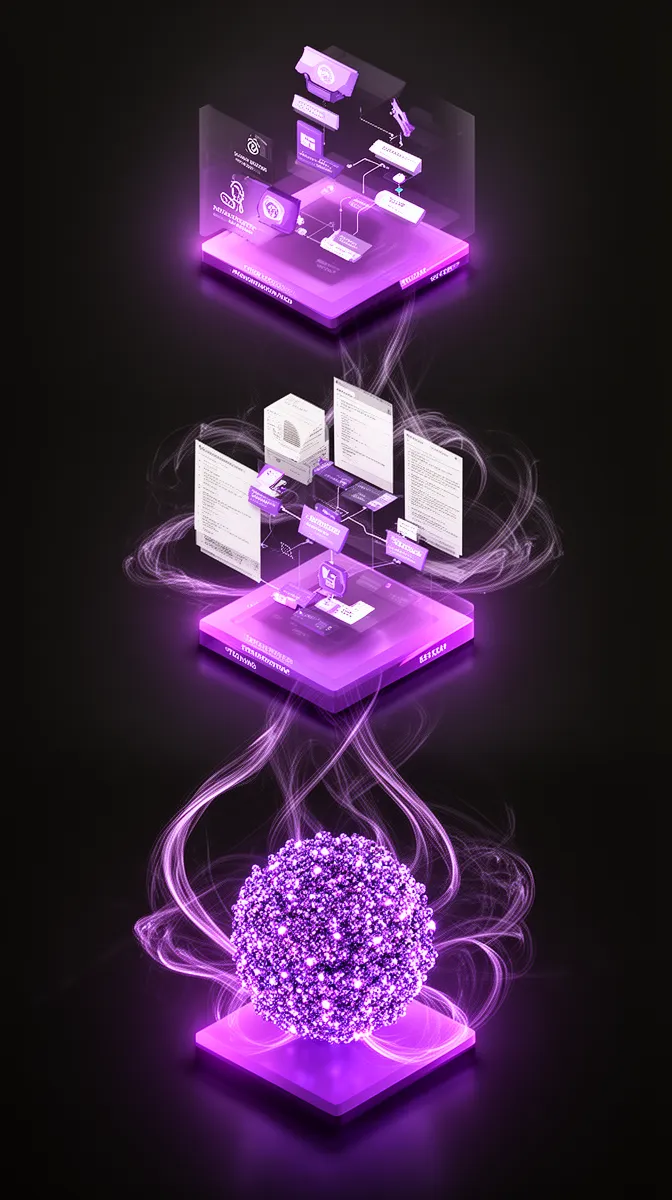

Hybrid Strategies: Orchestrating Prompts, RAG, and Fine-Tuned Models

The most powerful architectures are hybrid. Rather than arguing about fine-tuning vs prompt engineering for business automation, route different tasks to the technique that fits best.

A modern customer service AI stack might look like this:

- A fine-tuned intent classifier to route incoming messages.

- RAG over a knowledge base for factual questions, documentation, and policy lookup.

- A base conversational model, prompted for empathy and clarity, to handle the dialogue layer.

With good orchestration and AI agent design, the user never sees this complexity. The system just feels responsive and reliable. If you later upgrade one piece—a better fine-tuned classifier, a new RAG architecture—you swap components at the API level without rewriting everything. Partners like Buzzi.ai provide AI agent development services that start from this kind of modular, workflow-first thinking.
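The routing idea behind such a stack can be sketched as follows. Every function here is a placeholder: `classify_intent` uses keyword rules where a fine-tuned classifier would sit, and the two handlers return tagged strings instead of calling real models.

```python
from typing import Callable


def classify_intent(message: str) -> str:
    """Stand-in for a fine-tuned intent classifier (keyword rules here)."""
    text = message.lower()
    if any(phrase in text for phrase in ("policy", "how do i", "documentation")):
        return "knowledge"
    return "conversation"


def answer_with_rag(message: str) -> str:
    """Placeholder for retrieval plus a grounded model call."""
    return f"[RAG] grounded answer for: {message}"


def answer_with_base_model(message: str) -> str:
    """Placeholder for a prompted base conversational model."""
    return f"[BASE] conversational reply to: {message}"


# Each intent maps to a handler, so one component can be swapped without touching the rest.
ROUTES: dict[str, Callable[[str], str]] = {
    "knowledge": answer_with_rag,
    "conversation": answer_with_base_model,
}


def handle(message: str) -> str:
    """Classify the message, then dispatch it to the matching handler."""
    return ROUTES[classify_intent(message)](message)
```

Because handlers share one signature, replacing the keyword classifier with a tuned model later would only change `classify_intent`.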

The Hidden Costs: Maintenance, Drift, and Governance of Fine-Tuned LLMs

By now, it should be clear that the initial training run is only a fraction of the total cost of a fine-tuned model. The bigger question is: what are the maintenance costs of fine-tuned LLMs over time? This is where enthusiastic proofs of concept often collide with reality.

Before you green-light fine-tuning, make sure your AI implementation roadmap includes line items for ongoing costs, governance, and risk management.

Operational and Maintenance Costs You Can’t Ignore

Operationally, each fine-tuned model carries fixed costs: infrastructure, inference, monitoring, annotation for retraining, and incident response. Over a 12–24 month period, OPEX can easily exceed the initial training cost, especially if you’re paying for higher-end GPUs or premium managed services.
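A back-of-the-envelope calculation shows how quickly operating costs can overtake the training run. The function name and inputs are hypothetical, and the model assumes a flat monthly operating cost.

```python
def total_cost_of_ownership(
    training_cost: float, monthly_opex: float, months: int
) -> dict[str, float]:
    """Split total cost into the one-time training run and accumulated OPEX."""
    opex = monthly_opex * months
    total = training_cost + opex
    if total <= 0:
        raise ValueError("Total cost must be positive")
    return {
        "training": training_cost,
        "opex": opex,
        "total": total,
        "opex_share": opex / total,
    }
```

With assumed figures of $50,000 for training and $5,000 a month to operate, 24 months of OPEX comes to $120,000, about 71% of the total.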

On top of that, model drift is inevitable as your data, users, and policies change. You’ll need periodic re-evaluation against your evaluation dataset, and a defined retraining cadence when metrics slip. That means investing in a proper training pipeline, not just a one-time fine-tuning script.
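A defined retraining trigger can be as simple as the check below, which flags a model when several consecutive evaluation scores fall below the accepted baseline. The name `needs_retraining`, the 3-point tolerance, and the 3-run window are illustrative assumptions, not recommended values.

```python
def needs_retraining(
    history: list[float], baseline: float, tolerance: float = 0.03, window: int = 3
) -> bool:
    """Flag retraining when the last `window` eval scores all sit below baseline - tolerance."""
    if len(history) < window:
        return False  # not enough evaluations yet to call it a trend
    return all(score < baseline - tolerance for score in history[-window:])
```

Requiring several consecutive low scores avoids retraining on a single noisy evaluation run.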

Cloud providers have started publishing guidance on these topics, including the cost trade-offs and MLOps requirements for maintaining tuned models at scale. Treat those as input to your inference cost model when you budget.

Governance, Compliance, and Brand Risk

Fine-tuned models also raise thorny questions around governance and compliance. What data are you using for training—does it contain PII, PHI, or sensitive IP? Are you allowed to send that data to your chosen provider? Do you have audit trails for which data influenced which model versions?

Responsible AI frameworks like the NIST AI Risk Management Framework offer useful guidance on how to structure these controls. For high-risk use cases, you’ll need human-in-the-loop review, clear escalation paths, and well-documented policies—especially if the model is generating customer-facing content or decisions.

Fine-tuning can help with brand consistency and policy adherence, but it also hard-wires behaviors into the model. Changing them later may require new training runs, re-validation, and re-approval from legal or compliance. That’s fine if you plan for it; risky if you don’t.

Common Mistakes When Companies Fine-Tune Too Early

The most common mistakes are painfully consistent. Teams skip RAG and sophisticated prompts, jump straight to fine-tuning without a clear success metric, and underinvest in model monitoring. The result is a brittle system that quietly degrades and nobody feels fully responsible for.

We’ve seen startups fine-tune early for their support bots, only to realize later that every new product launch or policy change required another training cycle. Eventually they ripped out the fine-tuned model and replaced it with a well-orchestrated base model plus RAG, guardrails, and policies.

The lesson: fine-tuning should be a strategic commitment, not a badge of technical sophistication. If you don’t yet have the data, the governance, or the operations, the best way to optimize an LLM before fine-tuning is to double down on prompts, RAG, and process.

From Decision to Roadmap: How to Act on the Framework

Having a framework is useful; acting on it is where value is created. Whether your current answer to “when should a business fine-tune an LLM?” is “not yet” or “it’s time,” you need a concrete plan.

We’ll outline two paths: one if fine-tuning isn’t yet justified, and one if it is. In both cases, you should treat this as part of a broader enterprise AI strategy, not an isolated project.

If Fine-Tuning Is Not Yet Justified: What to Do Next

If your four dimensions are not all green, that’s not a failure. It’s a signal that the highest-ROI move is to invest in foundations. The best way to optimize an LLM before fine-tuning is to strengthen the parts of your stack that will pay off regardless of whether you eventually fine-tune.

For a mid-size SaaS company, a 3–6 month roadmap might look like this:

- Standardize and expand prompt templates and prompt libraries across use cases.

- Introduce or improve retrieval-augmented generation for knowledge-heavy flows.

- Build realistic evaluation datasets and basic model monitoring dashboards.

- A/B test prompts and RAG configs on a subset of workflows.

- Document why fine-tuning isn’t chosen yet and what metrics would change that decision.

This is solid use case evaluation and de-risking work. Even if you never fine-tune, you’ll end up with better workflow automation and more reliable AI behavior.

If Fine-Tuning Is Justified: Minimum Viable Fine-Tuning Plan

If your assessment says it is time to fine-tune an LLM for business, resist the urge to boil the ocean. Define a minimum viable plan that targets a narrow, high-value workflow with a clear evaluation dataset and business KPI.

For example, you might start with the highest-volume ticket classification workflow in your support organization. Phase 1: build a rock-solid eval set and baseline with prompts + RAG. Phase 2: fine-tune a model on historical labeled tickets, then compare performance and operational savings. Phase 3: define retraining cadence, monitoring, and rollout to more queues.

This is where thinking in terms of ROI, not just accuracy, pays off. Start with the smallest model and narrowest scope that can prove value, then expand. This is also a classic moment to bring in LLM fine-tuning services for enterprise workflows, provided the team behind them understands production realities, not just training scripts.

Where External Partners Add Real Value

External AI consulting services are most valuable when they help you not overbuild. A good partner will design evaluation frameworks, run experiments, and push back if fine-tuning doesn’t yet have a strong business case.

We’ve seen this play out with knowledge assistants, where clients assumed they needed custom models. After structured evaluation, prompt and RAG optimization delivered the key gains, and fine-tuning went back on the shelf—for now. The result: faster time-to-value and lower total cost of ownership.

At Buzzi.ai, our role in these engagements is to help design an AI implementation roadmap that balances prompt engineering, RAG, agents, and, when truly justified, fine-tuning. If you want that kind of partner, an AI discovery and strategy workshop is often the most efficient way to stress-test your roadmap.

Conclusion: Fine-Tune Only When the Numbers, Not the Hype, Say So

Fine-tuning is a powerful lever—but it’s also one of the most expensive and operationally demanding ones you can pull. For most organizations, the fastest gains come from strong prompts, retrieval-augmented generation, and thoughtful orchestration, not from immediate model customization.

Use the four-dimension framework (performance gap, stability and consistency, data readiness, and operational capacity) to decide when to fine-tune an LLM for business. Combine that with realistic evaluation datasets and metrics tied to real business ROI, and you transform “should we fine-tune?” from an opinion into a decision.

Don’t forget the hidden costs: model maintenance, drift, governance, and inference costs that accumulate long after the training run finishes. When you do choose to fine-tune, treat it as a long-term product with owners, processes, and budget—not a one-time project.

If you’d like a second opinion on where your current use case sits in this framework, consider running it past an experienced, neutral partner. We’re happy to help you evaluate whether prompt optimization, RAG, or fine-tuning is the right next move—and build a roadmap that makes the numbers, not the hype, work in your favor.

FAQ

When should a business fine-tune an LLM instead of just improving prompts?

You should consider fine-tuning only when you have a clear performance gap that matters to the business, strong prompts and RAG already in place, and all four dimensions—performance, stability, data, and operations—are green. If any of those are missing, focusing on prompt engineering and orchestration will usually yield better ROI. Fine-tuning is a “last-mile” optimization, not the first move.

How do I measure whether the performance gap justifies fine-tuning a model?

Start with an evaluation dataset built from real production workloads and define metrics that map directly to business outcomes—accuracy, escalation rate, handle time, or revenue uplift. Compare your best prompt/RAG baseline to candidate tuned or specialized models and quantify the improvement. If the projected gains don’t produce a meaningful cost-benefit outcome, fine-tuning isn’t justified yet.

What data volume and quality do I need to fine-tune an LLM for business use cases?

There’s no single magic number, but you generally need thousands of high-quality, labeled examples that reflect the diversity and edge cases of your domain. Those labels should be consistent, ideally created or reviewed by experts, and accompanied by a separate evaluation dataset for honest measurement. If your data is sparse or noisy, invest first in labeling, cleanup, and RAG over curated knowledge sources.

What are the hidden maintenance and governance costs of fine-tuned LLMs?

Beyond initial training, you’ll pay ongoing inference costs, monitoring and alerting overhead, retraining cycles to combat model drift, and annotation work to maintain evaluation datasets. Governance adds further requirements: audit trails, data privacy controls, legal and compliance reviews, and sometimes external audits. Over 12–24 months, these OPEX and governance costs often exceed the initial fine-tuning budget.

When is retrieval-augmented generation (RAG) better than LLM fine-tuning?

RAG is usually superior when your primary challenge is missing or frequently changing knowledge—like product docs, policies, or internal FAQs. Instead of encoding everything into the model’s weights, you serve up the right context from a searchable knowledge base at query time. This makes updates cheaper, governance easier, and behavior more transparent than repeatedly retraining a fine-tuned model.

Which prompt engineering techniques should I try before considering fine-tuning?

Before you think about fine-tuning, try chain-of-thought prompting, role conditioning, explicit constraints, structured outputs (like JSON schemas), and carefully designed few-shot examples. Combine those with tool calling and RAG for factual grounding, plus guardrails and policies to enforce safety and compliance. Many business workflows reach 80–90% of their potential with these techniques alone.

How do I build an evaluation dataset to compare prompt-only vs fine-tuned approaches?

Sample real user inputs from your logs, covering both common and tricky cases, and pair them with high-quality ground truth outputs or scoring rubrics. Keep this evaluation dataset separate from any training data to avoid leakage and use it to benchmark different prompts, RAG strategies, and tuned models. If you need help formalizing this process, an AI discovery and strategy workshop can accelerate the design.

In which business workflows does LLM fine-tuning usually deliver the biggest ROI?

The best candidates are high-volume, high-value, and relatively stable workflows where small accuracy gains translate into large savings—such as invoice processing, ticket classification, underwriting assistance, and domain-specific summarization. These tasks benefit from the model internalizing patterns across millions of examples. In contrast, highly dynamic, knowledge-heavy tasks tend to favor RAG over fine-tuning.

What organizational capabilities are required to operate fine-tuned models in production?

You’ll need MLOps fundamentals—versioning, deployment pipelines, model monitoring, and incident response—as well as clear ownership and governance processes. That includes teams responsible for training pipelines, evaluation datasets, policy updates, and retraining schedules. Without these capabilities, fine-tuned models can become brittle, opaque systems that are hard to update safely.

How can an external AI consulting partner help me decide if fine-tuning is worth it?

A strong partner will help you clarify business objectives, design evaluation datasets, and run structured experiments comparing prompt engineering, RAG, and fine-tuning. They should be willing to recommend against fine-tuning when it doesn’t meet ROI thresholds, and instead focus on improving prompts, workflows, and governance. This kind of neutral, strategy-first support is exactly what providers like Buzzi.ai aim to offer.