Design AI for Due Diligence That Hunts Exceptions, Not Pages

Learn how exception-focused AI surfaces hidden risks and red flags across M&A and compliance.

Most “AI for due diligence” tools are just faster photocopiers. They churn through data rooms, summarize mountains of contracts, and brag about pages processed—yet they regularly miss the 1–5% of documents that can kill a deal or trigger regulatory fallout.

If you lead legal, M&A, compliance, or legal-ops, you don’t care how many pages your AI due diligence tool scanned. You care whether it caught the buried change-of-control clause, the undisclosed sanctions exposure, or the data residency gap that will make the regulator’s life easy and yours very hard.

That’s the core distinction of modern due diligence AI: tools built for exception identification, risk flagging, and issue detection rather than volume metrics. In high-stakes deals and vendor reviews, missing a single critical issue is far worse than reviewing a few extra documents.

At Buzzi.ai, we build exception-focused due diligence AI agents that are explicitly designed to hunt red flags across contracts, policies, and third-party data. In this article, we’ll define what “real” AI for due diligence looks like, break down the exception patterns it must detect, walk through the architecture behind it, and show you how to evaluate and implement these platforms with the governance your board and regulators expect.

True AI for Due Diligence vs. Generic Document Processing

Most vendors selling AI for due diligence are effectively selling document review automation. They help you read faster, not necessarily see more. For low-stakes use cases, that might be enough. For M&A, vendor risk, and regulatory scrutiny, it’s not.

Throughput AI vs. Exception-Focused AI

Think of “throughput AI” as industrial-strength summarization. It ingests your data room, does basic extraction, powers search, and produces neat reports. Its success metrics are pages processed, hours saved, and how fast you can get to a first-pass summary.

Exception-focused AI for due diligence starts from a very different objective function. It is optimized for red flag identification, severity scoring, and routing high-risk content to experts. Its primary job is to drive better transaction risk analysis, not to compress reading time.

Consider two tools running over the same 20,000-document data room. The first (throughput AI) returns a dashboard of summaries by counterparty and contract type. Useful, but your team still has to spot that buried change-of-control clause in a single reseller agreement and a sanctions clause referencing a high-risk jurisdiction.

The second (exception-focused) tool clusters contracts by deal impact, then highlights a handful of documents: a reseller contract with an unusually strict change-of-control provision, a supply agreement tied to a sanctioned region, and a side letter that modifies liability caps. Those surfaced exceptions change the entire deal conversation.

In due diligence, averages don’t kill deals; outliers do. That’s why real AI for due diligence can’t just mimic junior associate summarization. It has to actively hunt the edges.

What “Real” Due Diligence AI Must Actually Do

So what does true due diligence AI need to deliver beyond document review automation? At minimum, four core capabilities:

- Detect exceptions versus a baseline (market norms, your playbook, regulatory expectations).

- Flag and prioritize red flags with clear severity levels.

- Cluster related issues across documents for coherent deal risk assessment.

- Explain why a document or clause is risky in plain language.

There is a world of difference between keyword hits and modeled exception patterns. A basic tool might highlight every occurrence of “indemnity,” “liability,” and “assignment.” A real AI system for legal due diligence learns what “normal” indemnity caps look like for your industry and deal type, then flags contracts where caps are unusually low, missing entirely, or coupled with non-standard exclusions.

The same applies to missing consent rights, unusual termination triggers, or MFN clauses that quietly reprice your entire book of business. Instead of dumping more text on already overloaded teams, a strong due diligence AI reduces cognitive load by pointing reviewers to a curated set of issues that matter for deal risk assessment.

Some examples of realistic exceptions a mature system should be able to flag:

- Undisclosed side letters that alter pricing or liability on key customer contracts.

- Change-of-control clauses that allow termination or renegotiation on your top 10 accounts.

- Unusual indemnity language that shifts regulatory or IP risk back to you.

- Missing data protection addenda or cross-border transfer clauses, given your target’s footprint.

- Termination-for-convenience rights that undercut forecasted revenue.

With compressed review cycles and inconsistent human reviewers, issue detection can’t rely on heroics. It has to be systematic.

Designing Exception-Focused AI for Due Diligence

Designing exception-focused AI for due diligence means working backwards from what can go wrong, not from what documents exist. The architecture of the AI should reflect your risk model, not your folder structure. That’s a subtle but profound design shift.

Start from Exceptions, Not from Document Types

Most projects start by listing document types: NDAs, MSAs, SOWs, licenses, policies. That’s convenient for IT, but it’s not how deals fail. Deals fail because of exceptions: a missing consent, a hidden regulatory liability, a data residency gap.

Instead, you want an exception catalog: a structured list of “things that can go wrong” in a given deal. For example, across legal, financial, compliance, and ESG domains:

- Change-of-control / assignment: consent required, termination rights, automatic assignment blocked.

- Regulatory / compliance: sanctions exposure, export controls, data protection breaches, anti-bribery/AML gaps.

- ESG: environmental liabilities, labor violations, governance weaknesses, controversial counterparties.

- Financial impact: contingent liabilities, off-balance-sheet obligations, aggressive revenue recognition.

From there, you map each risk type to how it manifests in documents: specific clauses, omissions, cross-references, and metadata. The AI doesn’t just know “this is an MSA”; it knows “this is an MSA that is missing a standard data processing addendum and has an anomalous limitation of liability.”
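One way to picture an exception catalog is as structured data that ties each risk type to the document signals that reveal it. The sketch below is illustrative only: the `ExceptionPattern` class, its field names, and the example entries are assumptions made for this example, not a schema from any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExceptionPattern:
    """One catalog entry: something that can go wrong and how it shows up."""
    key: str            # hypothetical identifier
    domain: str         # "legal", "compliance", "esg", or "financial"
    description: str
    signals: tuple      # clauses, omissions, cross-references, metadata


# Illustrative entries based on the categories listed above.
CATALOG = [
    ExceptionPattern(
        key="coc_consent",
        domain="legal",
        description="Change of control requires consent or allows termination",
        signals=("change-of-control clause", "assignment clause"),
    ),
    ExceptionPattern(
        key="sanctions_exposure",
        domain="compliance",
        description="Counterparty or jurisdiction appears on a sanctions list",
        signals=("party names", "governing law", "delivery locations"),
    ),
    ExceptionPattern(
        key="missing_dpa",
        domain="compliance",
        description="Personal data is processed without a data processing addendum",
        signals=("absence of DPA", "data categories in scope"),
    ),
    ExceptionPattern(
        key="contingent_liability",
        domain="financial",
        description="Obligation that only crystallizes on a future event",
        signals=("indemnity clause", "guarantee", "earn-out terms"),
    ),
]


def patterns_for(domain: str) -> list:
    """Return the catalog entries for one risk domain."""
    return [p for p in CATALOG if p.domain == domain]


print([p.key for p in patterns_for("compliance")])
```

Keeping the catalog as data rather than burying it in detection code is what lets legal and compliance teams review and extend it from deal to deal.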

Over time, these exception libraries become corporate assets. Every deal teaches your exception identification system something: new carve-outs, evolving regulatory expectations, shifts in market norms. You’re not just buying a tool—you’re compounding institutional memory.

From Rules to Models: How Exception Patterns Are Represented

Once you know which exceptions you care about, you need to represent them as patterns the machine can work with. Some patterns are straightforward and deterministic: “If a contract involves personal data of EU residents and there is no valid transfer mechanism, flag.” These are classic rules.

Others require nuance. Consider anomaly detection in contracts around indemnities. You rarely have a single magic phrase. Instead, you see language variations, jurisdictional quirks, and negotiated positions. This is where ML- and LLM-based contract review shines: learning from examples of “acceptable” and “unacceptable” clauses in context.

The most robust systems use a hybrid approach:

- Rule-based checks for hard regulatory or policy requirements (e.g., no business with sanctioned entities; mandatory DPA for certain data types).

- Model-based detection for softer patterns (e.g., indemnities that significantly diverge from your standard; missing references that usually appear in similar deals).

- A risk scoring model that combines multiple weak signals—an unusual cap, a missing consent, a high-risk jurisdiction—into a strong flag.

For example: a rule might require change-of-control consent in customer contracts above a certain ARR. A model then looks for atypical carve-outs (e.g., consent not required if the acquirer is in a certain region) or missing references that usually appear in your standard clause set. The combined signal feeds an overall deal risk assessment, not a binary “good/bad” label.
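The hybrid idea can be sketched in a few lines: one hard rule, one model-style score, and a combination step that turns several weak signals into a stronger one. Everything here is an assumption for illustration: the `Contract` fields, the ARR threshold, the signal weights, and the choice of a noisy-OR combination are not taken from any specific platform, and `cap_anomaly` simply stands in for the output of a trained model.

```python
from dataclasses import dataclass


@dataclass
class Contract:
    name: str
    arr_usd: float                  # annual recurring revenue
    coc_matches_policy: bool        # change-of-control clause matches the playbook
    cap_anomaly: float              # 0..1, stand-in for a model's anomaly score
    high_risk_jurisdiction: bool


ARR_THRESHOLD_USD = 1_000_000       # assumed policy threshold


def rule_coc_violation(c: Contract) -> bool:
    """Hard rule: large customer contracts must follow the change-of-control policy."""
    return c.arr_usd >= ARR_THRESHOLD_USD and not c.coc_matches_policy


def risk_score(c: Contract) -> float:
    """Combine weak signals with a noisy-OR: 1 - product(1 - s_i)."""
    signals = [
        0.6 if rule_coc_violation(c) else 0.0,   # assumed weights
        0.5 * c.cap_anomaly,
        0.4 if c.high_risk_jurisdiction else 0.0,
    ]
    remaining = 1.0
    for s in signals:
        remaining *= 1.0 - s
    return 1.0 - remaining


risky = Contract("Reseller A", 2_500_000, False, 0.8, True)
clean = Contract("Supplier B", 200_000, True, 0.0, False)
print(round(risk_score(risky), 3), round(risk_score(clean), 3))
```

With a noisy-OR, no single signal has to be decisive: three moderate signals on the same contract push the score well above any one of them alone, which is the behavior the risk scoring bullet describes.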

Jurisdictional and sector-specific pattern sets are non-negotiable. A healthcare SaaS deal in Germany has very different regulatory compliance checks than a logistics company in Southeast Asia. Your due diligence AI needs to understand both the letter and the spirit of those regimes.

Tuning for High Stakes: Avoiding False Negatives Before False Positives

All classification systems wrestle with a trade-off between false positives (too many things flagged) and false negatives (critical issues missed). In e-commerce recommendations, you tolerate false negatives. In high-stakes deals, you don’t.

For due diligence, you want to bias the system towards catching more potential issues early, then use smart triage workflows to handle the noise. You can gradually tighten thresholds and apply false positive reduction techniques once you’re confident recall on critical exceptions is high.

Consider a simple numeric example. Suppose your initial risk score threshold for “review required” is 0.7 on a 0–1 scale. On a sample of 10,000 documents, you might see:

- 800 documents flagged, including 95% of known critical issues.

- Of those 800, 400 are ultimately deemed low or no risk (false positives).

If you raise the threshold to 0.85:

- Flags drop to 400 documents—much less reviewer workload.

- But recall on known critical issues drops to 80%—you miss 1 in 5 serious problems.

An AI due diligence platform built for high-stakes work lets your legal and compliance teams adjust these thresholds, change severity weights, and add or modify rules without waiting weeks for vendor engineering. That’s how you keep control of issue detection while still benefiting from automation.
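To make the threshold trade-off concrete, the short script below reworks the figures from the example. The example gives recall as a percentage only, so the count of 100 known critical issues is an assumption added here to turn percentages into missed issues.

```python
KNOWN_CRITICAL = 100  # assumption: the example gives recall, not a count

# Figures taken from the example above.
SCENARIOS = {
    0.70: {"flagged": 800, "recall": 0.95},
    0.85: {"flagged": 400, "recall": 0.80},
}


def missed_issues(recall: float, known: int = KNOWN_CRITICAL) -> int:
    """Critical issues that never reach a reviewer at this recall level."""
    return round((1 - recall) * known)


for threshold, s in SCENARIOS.items():
    print(f"threshold {threshold:.2f}: review {s['flagged']} docs, "
          f"miss {missed_issues(s['recall'])} of {KNOWN_CRITICAL} critical issues")

# Precision at 0.70: 400 of the 800 flags were false positives.
precision_at_070 = (800 - 400) / 800
print(f"precision at 0.70: {precision_at_070:.0%}")
```

Under that assumption, raising the threshold halves reviewer workload (800 to 400 documents) but quadruples the number of missed critical issues (5 to 20). That asymmetry is why recall is tuned first and noise second.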

What Due Diligence AI Must Actually Detect: Concrete Red Flags

To separate marketing fluff from real capability, you need to know what a best-in-class due diligence AI should actually catch. Let’s walk through the concrete red flag categories it ought to handle.

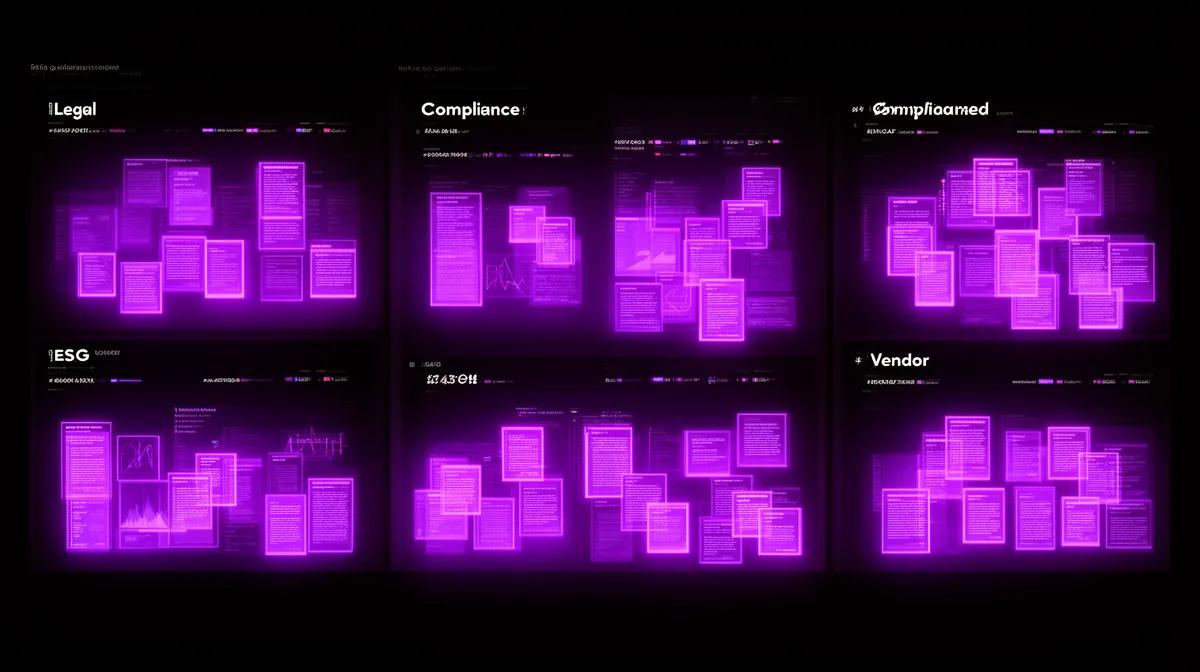

Core Legal and Contract Exceptions

On the legal side, some contract exceptions are universally high-value for M&A due diligence and vendor reviews. At a minimum, your AI contract review system should reliably surface:

- Change-of-control and anti-assignment clauses that allow termination, price renegotiation, or consent rights on key customers or suppliers.

- Unusual indemnities and liability caps that shift risk beyond your playbook (e.g., very low caps, carve-outs for specific damages, uncapped IP liability).

- Termination rights that are broader or easier to trigger than your standard terms.

- MFN clauses that silently reprice other contracts if you give better terms to someone else.

- IP ownership gaps or weak license grants on core technology.

Imagine a snippet in a standard SaaS MSA where your usual limitation of liability is 12 months of fees. In one contract, the cap reads “aggregate liability shall not exceed the fees paid by Customer in the month preceding the claim.” A robust contract analytics engine recognizes this as an anomaly, flags it, scores the risk high, and groups it with similar outliers.
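A minimal sketch of that baseline comparison follows, assuming the cap has already been extracted from each contract as a number of months of fees. The contract ids, the extracted values, and the "flag anything under half the standard" tolerance are hypothetical; a production system would learn the baseline and tolerance rather than hard-code them.

```python
PLAYBOOK_CAP_MONTHS = 12   # the standard cap from the example above
FLAG_BELOW_RATIO = 0.5     # assumed tolerance: flag caps under half the standard

# Hypothetical extracted caps, in months of fees, keyed by contract id.
# None means no cap clause was found.
extracted_caps = {
    "MSA-001": 12,
    "MSA-002": 12,
    "MSA-003": 24,
    "MSA-004": 1,      # "fees paid in the month preceding the claim"
    "MSA-005": None,
}


def cap_outliers(caps: dict) -> dict:
    """Map each outlier contract id to a short reason string."""
    outliers = {}
    for contract_id, months in caps.items():
        if months is None:
            outliers[contract_id] = "no liability cap found"
        elif months / PLAYBOOK_CAP_MONTHS < FLAG_BELOW_RATIO:
            outliers[contract_id] = (
                f"cap is {months} month(s) vs {PLAYBOOK_CAP_MONTHS} standard"
            )
    return outliers


print(cap_outliers(extracted_caps))
```

The hard part in practice is the extraction step that turns clause text into a comparable number; the comparison itself is simple once that is done.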

Cross-document consistency is equally important. A master agreement that looks fine may be undermined by an SOW or side letter that introduces conflicting SLAs or bespoke pricing. An AI system for legal due diligence should cluster related documents and highlight inconsistencies between them rather than treating each PDF as an island.

Compliance, Regulatory, and ESG Exceptions

Compliance due diligence is no longer a box-ticking exercise. Regulators explicitly expect robust third-party risk programs and sanctions screening. Guidance from agencies like the U.S. DOJ and OFAC stresses continuous monitoring, not just one-time checks.

Your system should be able to flag:

- Sanctions exposure: parties or jurisdictions appearing in contracts that match external sanctions or watchlists.

- Data protection violations: missing or weak privacy clauses, unlawful cross-border transfers, or failure to include required security commitments.

- Export controls and trade restrictions: references to controlled technologies or restricted destinations.

- Anti-bribery/AML gaps: absence of standard representations and warranties, risky payment structures, or red-flag intermediaries.

- ESG due diligence gaps: environmental liabilities, labor and human rights violations, governance weaknesses.

A sophisticated workflow combines internal contract language with external data. For example, a supplier name in a contract is matched against a sanctions list and an ESG controversy database. The system sees that the counterparty’s parent entity has recent allegations of environmental violations and a director on a PEP list, then raises a high-severity compliance flag.
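The name-matching step in that workflow can be sketched with Python's standard-library `difflib`. This is a simplified illustration, not how any particular screening product works: the watchlist entries are invented, the suffix list and the 0.85 similarity threshold are assumptions, and real sanctions screening also has to handle aliases, transliteration, and ownership structures.

```python
import re
from difflib import SequenceMatcher

# Corporate suffixes ignored during comparison (assumed, not exhaustive).
SUFFIXES = {"ltd", "limited", "llc", "inc", "gmbh", "co", "corp"}

# Invented watchlist entries for illustration only.
WATCHLIST = ["ACME TRADING LIMITED", "Northwind Shipping Co."]


def normalize(name: str) -> str:
    """Lowercase, strip punctuation, and drop corporate suffixes."""
    tokens = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    return " ".join(t for t in tokens if t not in SUFFIXES)


def screen(name: str, watchlist: list, threshold: float = 0.85) -> list:
    """Return (watchlist entry, similarity) pairs at or above the threshold."""
    target = normalize(name)
    hits = []
    for entry in watchlist:
        score = SequenceMatcher(None, target, normalize(entry)).ratio()
        if score >= threshold:
            hits.append((entry, round(score, 2)))
    return hits


print(screen("Acme Trading Ltd.", WATCHLIST))      # same entity, different suffix
print(screen("Acme Tradng Limited", WATCHLIST))    # typo in the contract
print(screen("Blue River Logistics", WATCHLIST))   # unrelated counterparty
```

A fuzzy threshold deliberately errs toward extra hits: a misspelled counterparty name in a contract should still reach a compliance reviewer, who can then confirm or dismiss the match.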

Regulatory guidance on sanctions and third-party risk management makes it clear that “we didn’t know” is not a defense if your systems could reasonably have detected issues. Embedding regulatory compliance checks into your AI due diligence pipeline is rapidly becoming table stakes.

Financial, Operational, and Vendor Risk Exceptions

Beyond pure legal and compliance risk, AI for vendor due diligence and M&A needs to support financial and operational analysis.

On the financial side, that includes flags such as:

- Aggressive revenue recognition language that conflicts with your accounting policies.

- Contingent liabilities buried in indemnities or warranties.

- Change-in-pricing or indexation provisions that undermine your quality of earnings review.

For vendor risk management, the AI should surface:

- Subcontracting without consent, especially where data or critical services are involved.

- Data residency or localization gaps relative to your policies.

- Service level carve-outs that allow outages without meaningful remedies.

- Termination-for-convenience clauses that jeopardize long-term revenue or critical dependencies.

Imagine a scenario in which your revenue model assumes a five-year term with strict termination conditions on a top customer. A single SOW contains a termination-for-convenience clause with only 30 days’ notice. Exception-focused due diligence AI should catch that clause, score it based on potential revenue loss, and elevate it to the deal team. Without that flag, your forecast is fiction.

Architecture of an Exception-Focused AI Due Diligence Platform

So how does an AI due diligence platform actually work under the hood? At a high level, it’s a pipeline from messy documents to prioritized risk flags, wrapped in governance and workflow integration.

From Data Rooms to Risk Flags: End-to-End Flow

The starting point is data ingestion. The platform connects to your Virtual Data Rooms, contract repositories, CLMs, and file shares, then pulls in documents—often tens of thousands at a time. Solid data room analysis and OCR are critical; silent failures here undermine everything downstream.

Next, documents are normalized and classified: contracts vs. policies, financial statements vs. technical specs, and so on. Document clustering groups related items (e.g., all documents associated with a major customer). Then the exception patterns and models we described earlier are applied.

The result is a set of risk flags: each with a type (e.g., sanctions risk, unusual liability cap), a severity score (driven by a risk scoring model), and links to the exact clauses and context. Flags are then clustered by counterparty, deal workstream, or theme to reduce cognitive load.

In a typical M&A data room walkthrough, the pipeline might look like this:

- Ingest 15,000 documents from the VDR.

- Identify 3,000 as contracts, 1,000 as policies, 500 as financial attachments, etc.

- Apply exception patterns and generate 1,200 flags, of which 150 are high severity.

- Group those 150 across 20 key customers, 5 high-risk jurisdictions, and 3 regulatory themes.

- Route the resulting worklists to legal, compliance, and finance reviewers.

The goal is not just automated red flag review. It’s transaction risk analysis that’s structured enough to drive decisions: walk away, renegotiate, or accept with mitigations.
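The flow above can be reduced to a toy pipeline to show how the stages connect. The `classify` and `detect` functions here are deliberately crude keyword stand-ins for the trained classifiers and exception models a real platform would use, and the document ids, counterparties, and text are made up.

```python
from collections import defaultdict

# Hypothetical ingested documents.
DOCS = [
    {"id": "D1", "counterparty": "Customer A",
     "text": "Master Services Agreement. Either party may terminate upon a change of control."},
    {"id": "D2", "counterparty": "Customer A",
     "text": "Information security policy."},
    {"id": "D3", "counterparty": "Vendor B",
     "text": "Supply agreement. Buyer may terminate for convenience on 30 days notice."},
]


def classify(doc: dict) -> str:
    """Stand-in classifier; a real system would use a trained model."""
    text = doc["text"].lower()
    if "agreement" in text:
        return "contract"
    if "policy" in text:
        return "policy"
    return "other"


def detect(doc: dict) -> list:
    """Stand-in detector returning (flag_type, severity) pairs."""
    text = doc["text"].lower()
    flags = []
    if "change of control" in text:
        flags.append(("change_of_control", "high"))
    if "terminate for convenience" in text:
        flags.append(("termination_for_convenience", "medium"))
    return flags


def run_pipeline(docs: list) -> dict:
    """Classify, detect, and group flags into per-counterparty worklists."""
    worklists = defaultdict(list)
    for doc in docs:
        if classify(doc) != "contract":
            continue
        for flag_type, severity in detect(doc):
            worklists[doc["counterparty"]].append(
                {"doc": doc["id"], "flag": flag_type, "severity": severity}
            )
    return dict(worklists)


print(run_pipeline(DOCS))
```

Each flag keeps a pointer back to its source document, which is what lets a reviewer jump from a worklist entry straight to the clause in question.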

Risk Scoring, Triage, and Escalation Workflows

Once you have risk flags, you need a way to triage them. A modern due diligence platform with risk flagging will score each flag along multiple dimensions:

- Severity (how bad is it if this risk materializes?).

- Likelihood (how likely is that, given context?).

- Regulatory impact (does it trigger specific reporting or enforcement exposure?).

- Deal materiality (does it touch top-tier customers, key assets, or strategic vendors?).

From there, you define workflows. For example:

- All high-severity compliance flags auto-escalate to the compliance team with a 24-hour SLA.

- Medium-severity legal flags go to a centralized legal-ops review queue.

- Low-severity anomalies are batch-reviewed or auto-approved based on rules.

SLA tracking and audit trails matter. If regulators or internal audit later ask, “Who saw this risk, when, and what did they do?”, your risk flagging system should give a clear answer.
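A routing table plus an audit log is enough to sketch these workflows. The queue names, the flag fields, and the in-memory `audit_log` list are assumptions for illustration; a real deployment would write to durable, append-only storage and integrate with its ticketing system.

```python
from datetime import datetime, timezone

# (domain, severity) -> (queue, SLA in hours); values mirror the examples above.
ROUTES = {
    ("compliance", "high"): ("compliance-team", 24),
    ("legal", "medium"): ("legal-ops-queue", None),
}

audit_log = []  # in practice this would be durable, append-only storage


def route(flag: dict) -> str:
    """Pick a queue for a flag and record the decision."""
    if flag["severity"] == "low":
        queue, sla_hours = "batch-review", None
    else:
        # Anything without an explicit route goes to a person, not a default bin.
        queue, sla_hours = ROUTES.get(
            (flag["domain"], flag["severity"]), ("manual-triage", None)
        )
    audit_log.append({
        "flag_id": flag["id"],
        "queue": queue,
        "sla_hours": sla_hours,
        "routed_at": datetime.now(timezone.utc).isoformat(),
    })
    return queue


print(route({"id": "F-1", "domain": "compliance", "severity": "high"}))
print(route({"id": "F-2", "domain": "legal", "severity": "low"}))
print(len(audit_log), "routing decisions logged")
```

Logging at the moment of routing, rather than reconstructing history later, is what makes the "who saw this, when" question answerable.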

Integration with VDRs, Contract Repos, and Deal Workflows

Even the best AI fails if it lives in a separate browser tab nobody opens. That’s why workflow integration is non-negotiable.

A mature AI due diligence platform integrates with:

- Virtual Data Rooms for in-context data room analysis.

- CLM systems and contract repositories for live deals and BAU vendor management.

- GRC tools and ticketing systems for remediation tasks.

- Project management tools that legal and deal teams already use.

In practice, that means a reviewer can click a flag in their deal management tool, which opens the source document in the VDR with AI annotations side-by-side. For vendor due diligence, new vendor contracts or amendments can be auto-scanned, with high-risk issues creating tickets in your GRC platform.

Security and access control have to match or exceed your existing stack. Sensitive deal data must be handled with strict permissions, encryption, and audit logging. This is where experienced vendors, and in-house teams working with specialist partners on risk scoring, stand out.

Tuning, Governance, and Trust: Making Risk Flags Reliable

For exception-focused AI to be trusted, legal and compliance leaders need to feel in control. That means collaborative tuning, transparent governance, and robust quality controls around risk flagging and false positive reduction.

Collaborative Tuning with Legal and Compliance Teams

The best results come when domain experts and data teams work together. Early in a deployment, legal and compliance reviewers look at a large sample of flags, label true/false positives, and suggest refinements to exception patterns. Those corrections feed back into both rules and models.

Over time, you develop jurisdiction- and sector-specific playbooks. For example, your EU data protection team may demand stricter thresholds on cross-border transfers than your U.S. commercial team. Your exception identification logic needs to reflect that nuance.

Crucially, changes to patterns can’t be a black box. Teams need visibility into what changed, why, and what impact it had on production performance. That transparency is at the heart of serious AI governance.

Auditability, Overrides, and Quality Controls

Governance also requires explainability. For any flagged issue, a reviewer should be able to see why it was flagged, which pattern or model triggered it, and what context was considered.

A typical explanation view might show the clause text, highlight the phrases that matched a rule (“governing law,” “termination for convenience,” “sanctions”), display model confidence scores, and reference similar historical cases. That’s how you avoid blind reliance and encourage informed judgment.

Override mechanisms, such as approving or downgrading flags, should feed back into training data. High-severity issues may require second-level review or explicit sign-off from senior counsel or the CCO. Every change, decision, and model update should be logged for later transaction risk analysis and external review. This is where AI security practices intersect with legal governance.
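As a rough sketch of how an override can serve both purposes at once, the snippet below records each reviewer decision in an audit trail and derives a training label from it. The `Override` fields and the convention that a downgrade to "none" counts as a false positive are assumptions for this example.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class Override:
    flag_id: str
    reviewer: str
    original_severity: str
    new_severity: str      # "none" means the reviewer dismissed the flag
    reason: str


audit_trail = []       # every decision, kept for later review
training_labels = []   # (flag_id, is_true_positive) pairs for retraining


def record_override(o: Override) -> dict:
    """Log the decision and turn it into a labeled example."""
    entry = asdict(o)
    entry["recorded_at"] = datetime.now(timezone.utc).isoformat()
    audit_trail.append(entry)
    # Assumed convention: a downgrade to "none" is a false-positive label.
    training_labels.append((o.flag_id, o.new_severity != "none"))
    return entry


record_override(Override("F-9", "senior.counsel", "high", "none",
                         "Clause superseded by later amendment"))
record_override(Override("F-12", "compliance.lead", "medium", "high",
                         "Counterparty operates in a sanctioned region"))
print(training_labels)
```

Requiring a reason on every override costs reviewers a few seconds, but it is what makes the audit trail useful to someone reading it months later.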

How to Evaluate and Buy AI for Due Diligence Exception Identification

Buying AI for due diligence is itself a due diligence exercise. You’re not just choosing software; you’re choosing how your organization will see risk for years.

Test on Known-Issue Deal Sets, Not Vendor Demos

The first rule of evaluating exception identification tools for due diligence: never rely on canned demos. Vendors cherry-pick data that flatters their models and hides the issues that surface in real-world chaos.

Instead, use your own history. Assemble 3–5 past deals or vendor reviews where you know what went wrong: missed indemnities, undisclosed liabilities, sanctions issues. For each, create a list of known red flags and outcomes.

Then run competing due diligence AI platforms side by side on the same documents. Measure:

- Recall on known red flags (how many did they catch?).

- Severity distribution (did they score the most important issues as high?).

- False negatives (which critical issues did they miss entirely?).

- Noise level (how many low-value flags did they generate?).

A simple evaluation plan over a few weeks will tell you far more than any scripted webinar. This is also where an AI discovery engagement can help structure the test and interpret results.
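Scoring a tool against a known-issue deal set needs very little code. The sketch below assumes each red flag can be identified by a (document id, issue type) pair; the ids and the tool's output are invented for illustration.

```python
def evaluate(known_flags: set, tool_flags: set) -> dict:
    """Compare a tool's flags with the red flags you already know about."""
    caught = known_flags & tool_flags
    return {
        "recall": len(caught) / len(known_flags) if known_flags else 0.0,
        "missed": sorted(known_flags - tool_flags),       # false negatives
        "extra_flags": len(tool_flags - known_flags),     # candidates for noise
    }


# Hypothetical known issues from a past deal.
known = {
    ("C-12", "change_of_control"),
    ("C-40", "sanctions"),
    ("C-77", "liability_cap"),
    ("C-90", "missing_dpa"),
}

# Hypothetical output from one tool under evaluation.
tool_a = {
    ("C-12", "change_of_control"),
    ("C-40", "sanctions"),
    ("C-77", "liability_cap"),
    ("C-15", "termination"),
    ("C-33", "mfn"),
}

print(evaluate(known, tool_a))
```

Extra flags are not automatically noise: some may be real issues the original review missed, so they deserve a sampled human check before being counted against the tool.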

Key Evaluation Criteria: Beyond Pages Processed

Once you’re testing on real deals, you need the right scorecard. For an AI due diligence platform, look beyond throughput and ask:

- How broad is exception coverage across legal, compliance, ESG, and vendor risks?

- How accurate is it on critical red flags, especially in M&A issue detection scenarios?

- How explainable are flags and scores?

- What tuning controls does it give your experts?

- How strong is workflow integration and security?

- What governance features does the platform offer (audit logs, change management, access control)?

Useful metrics include issues found per 1,000 documents, the severity mix of those issues, and the reduction of missed red flags over time. Different organizations will weight criteria differently: M&A teams may care more about deal risk assessment; vendor risk teams about ongoing monitoring.

The common thread is this: if a tool’s main success metric is pages processed, it’s not really AI for due diligence. It’s a nicer viewer.

Calculating ROI: From Issues Avoided to Counsel Spend

Leadership will ask about ROI, and you should have a crisp answer. The value of exception-focused AI isn’t only in hours saved; it’s in losses not incurred and leverage gained in negotiation.

Consider a numeric example. Suppose a missed indemnity clause leads to a post-closing dispute costing $5M. An ROI analysis might compare that to an annual AI platform cost of $300k, assuming the platform reduces the probability of missing such clauses by 70% across multiple deals. Even if you avoid one major issue every few years, the math is compelling.
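The example's three numbers are enough to work out a break-even point. The one input the example does not give is how likely such a miss is in a given year without the platform, so the sketch below treats that baseline probability as a parameter rather than guessing it. It also assumes a single $5M loss type and ignores other benefits such as counsel spend.

```python
LOSS_USD = 5_000_000          # cost of the post-closing dispute
PLATFORM_COST_USD = 300_000   # annual platform cost
MISS_REDUCTION = 0.70         # reduction in probability of missing the clause


def expected_net_benefit(baseline_miss_prob: float) -> float:
    """Expected annual loss avoided minus platform cost.

    baseline_miss_prob is the assumed yearly chance of such a miss
    without the platform; it is not given in the example.
    """
    avoided = baseline_miss_prob * MISS_REDUCTION * LOSS_USD
    return avoided - PLATFORM_COST_USD


# Baseline probability at which avoided loss exactly covers the cost.
break_even = PLATFORM_COST_USD / (MISS_REDUCTION * LOSS_USD)

print(f"break-even baseline probability: {break_even:.1%}")
for p in (0.05, 0.10, 0.20):
    print(f"baseline {p:.0%}: net benefit ${expected_net_benefit(p):,.0f}")
```

On these figures the platform pays for itself, on this one risk alone, once the yearly chance of a $5M miss exceeds roughly 8.6%, or about one such miss every eleven to twelve years.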

You can also quantify reduced dependence on outside counsel for first-pass review, shorter deal cycles, and more standardized playbooks. Over a year or two, trends in surfaced issues, cycle time, and external spend will tell a persuasive story about your deal risk assessment maturity.

How Buzzi.ai’s Exception-Focused Due Diligence AI Is Different

Many platforms promise automation. We focus on exception-focused due diligence AI that actually improves how you see and manage risk.

Exception Pattern Libraries Built Around Real Deals

At Buzzi.ai, we start with real-world exception catalogs and risk taxonomies, not generic NLP. Our pattern libraries span M&A, vendor risk, and compliance programs—and cover legal, financial, compliance, ESG, and operational risks.

Clients can configure severity models to match their risk appetite across ESG, compliance, and commercial positions. As regulations change and new edge cases emerge, we update patterns in collaboration with your teams so your exception identification stays current.

For example, a new ESG regulation might require additional environmental representations for certain industries. We encode that as new rules and model patterns, back-test them on prior deals, and roll them out with clear release notes and impact analysis.

Agentic Workflows, Not Just Static Reports

Instead of static reports, Buzzi.ai deploys agents that continuously monitor your VDRs and repositories. When new documents arrive, such as an updated SOW in a live deal or a fresh vendor contract, they run due diligence checks and push prioritized queues to reviewers.

Our agents integrate with your existing CLM, VDR, and workflow tools rather than forcing a rip-and-replace. Reviewers work in a UI designed for human-in-the-loop review: explanations, override controls, and audit logs are front and center.

Imagine an M&A data room that gets a late-stage upload of a key customer amendment. A Buzzi.ai agent spots a change-of-control termination right, flags it as high severity, and notifies deal counsel before signing. That’s exception-focused AI for due diligence in action, not a PDF report a week later.

Partnering on Evaluation and Governance

We don’t ask you to trust marketing slides. We run pilots against your historical deals and known issues, using the same evaluation principles outlined above. The result is a shared, evidence-based view of performance.

Beyond technology, we support governance design: playbooks, tuning rituals, and audit requirements for GCs and CCOs. Our work in AI governance consulting, AI strategy consulting, and exception-focused AI agent development gives us a front-row seat to what works in practice.

If you’re ready to move beyond summary-centric tools, we’ll help you design and run a 4–6 week pilot focused on a single deal archetype, then scale from there.

Conclusion: Move from Summaries to Exceptions

True AI for due diligence is not about reading faster. It’s about seeing what most people miss. Exception-focused platforms are built around explicit risk taxonomies, pattern libraries, and robust risk flagging workflows.

When you evaluate tools, prioritize recall on critical red flags, governance, and integration over raw speed. That’s where the real ROI lives: in issues avoided, deals repriced, and regulators satisfied, not in dashboards about pages processed.

If you want to explore what this could look like in your environment, we’d be happy to help you pilot an exception catalog and risk-flagging workflow tailored to your deals. Reach out to us to discuss a structured pilot at Buzzi.ai and move your due diligence from summaries to exceptions.

FAQ

What is the difference between true AI for due diligence and generic document processing tools?

Generic document processing tools focus on throughput—summarizing, extracting fields, and enabling faster search. True AI for due diligence focuses on detecting exceptions versus a baseline, flagging red flags, and scoring risk across deals. The key outcome isn’t pages processed, but critical issues surfaced in time to change decisions.

How does exception-focused AI identify red flags in contracts and corporate documents?

Exception-focused AI relies on explicit risk taxonomies and exception patterns, not just keyword search. It combines rule-based checks for hard requirements with ML and LLM models for nuanced anomaly detection in contracts. These models compare clauses against market norms and your playbook, then highlight deviations with severity scores and explanations.

What types of risks and exceptions should due diligence AI be able to detect?

A mature due diligence AI should cover legal, compliance, ESG, financial, and vendor risk exceptions. That includes unusual indemnities, problematic change-of-control and termination rights, sanctions exposure, data protection gaps, environmental liabilities, and subcontracting or SLA issues. The breadth of risk coverage is a core evaluation criterion when choosing a platform.

How can AI help surface hidden issues in M&A due diligence under tight deadlines?

In compressed timelines, AI can quickly ingest entire data rooms and cluster documents by counterparty, theme, and risk pattern. Instead of reading everything, deal teams focus on a prioritized set of high-severity flags, with direct links to clauses and context. This allows more rigorous M&A due diligence, even when closing windows are measured in days, not weeks.

What architecture is required for an effective due diligence AI platform with risk flagging?

An effective platform includes robust ingestion from VDRs and repositories, OCR and language detection, document classification and clustering, and layered rule+model exception detection. On top of that, you need risk scoring, triage and escalation workflows, audit logs, and tight integration with deal and GRC tools. Together, these components support end-to-end exception identification and review.

How can legal and compliance teams tune AI models to reduce false positives without missing critical issues?

Teams should start with conservative thresholds that favor recall, then iteratively adjust based on flagged results. By reviewing a sample of flags each week, labeling true/false positives, and refining rules and model weights, they can reduce noise while maintaining high sensitivity to critical issues. Platforms like Buzzi.ai support this with expert-facing tuning controls and transparent change tracking.

What should I look for when evaluating AI due diligence tools and platforms?

Look for strong performance on your own past deals, not just vendor demos. Key criteria include exception coverage, recall on known red flags, explainability, tuning controls, workflow integration, governance, and security. A structured evaluation—often as part of an exception-focused AI pilot—will give you real evidence of fit.

How can AI support legal, financial, compliance, vendor, and ESG due diligence in one workflow?

By building a unified exception catalog that spans these domains, AI can detect and score different risk types on the same documents. Reviewers can then filter by risk category while still seeing cross-domain context—for example, how an ESG controversy relates to a key commercial contract. This integrated approach avoids siloed assessments and supports more holistic deal risk assessment.

What role does anomaly detection play in finding non-obvious contract and compliance risks?

Anomaly detection models learn what “normal” looks like across your contracts, industries, and jurisdictions. They then surface deviations—like an unusually low liability cap or a missing consent right—that might not match simple keyword rules. This combination of explicit rules and learned anomalies is crucial for catching subtle but material contract and compliance risks.

How does Buzzi.ai’s exception-focused due diligence AI differ from traditional contract review solutions?

Buzzi.ai is built around exception pattern libraries, agentic workflows, and governance, not just clause extraction and summaries. Our AI agents monitor VDRs and repositories, push prioritized risk queues, and support human-in-the-loop review with explanations and audit logs. We also partner on evaluation and governance design so your exception identification improves deal by deal.