Manufacturing AI Automation That Handles Exceptions Without Stopping Lines

Manufacturing AI automation works when AI routes routine work and escalates edge cases. Learn exception-aware design, KPIs, and MES/ERP integration patterns—start here.

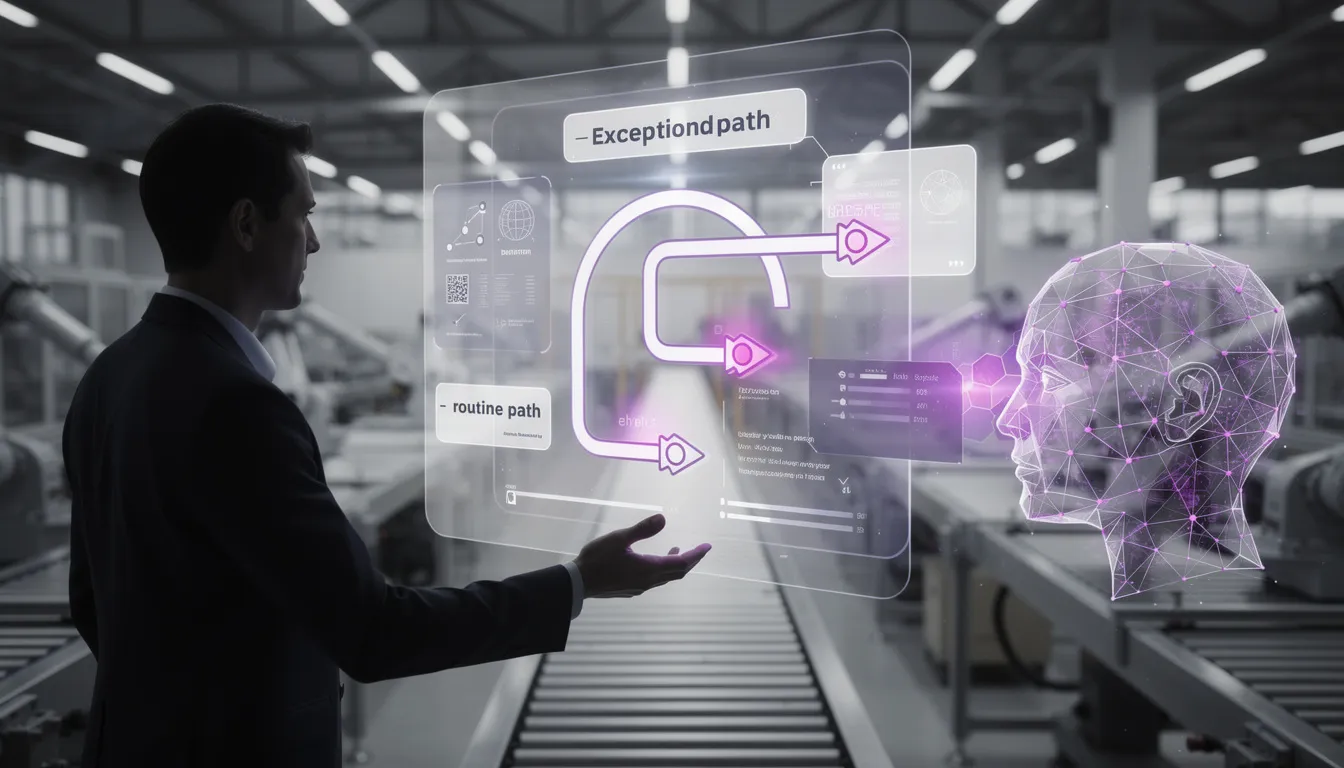

The fastest way to fail at manufacturing AI automation is to aim for 100% automation. The fastest way to win is to automate the routine and industrialize the exceptions.

If you’ve spent time on a shop floor, you already know why: reality is not deterministic. You get mixed SKUs, supplier variation, sensor drift, unplanned maintenance, and last-minute schedule changes that make yesterday’s “perfect” model look naive. The line doesn’t care that your pilot demo hit 98% accuracy; it cares whether the remaining 2% stops production, creates scrap, or forces operators into workarounds.

So the real goal of manufacturing AI automation isn’t eliminating humans. It’s reducing disruption when reality deviates from the model, and ensuring the system degrades gracefully when it’s uncertain. That’s what exception handling in manufacturing looks like when you treat it as a first-class design problem.

In this guide, we’ll lay out a practical framework for human-AI task distribution, the exception routing patterns that keep production moving, the KPIs that matter (and the vanity metrics that don’t), and the integration blueprint for MES/ERP/QMS without brittle coupling. This is the playbook we use at Buzzi.ai when we build human-in-the-loop automation that holds up after the pilot banners come down.

What “exception-aware” manufacturing AI automation actually means

Exception-aware manufacturing AI automation starts with a blunt admission: your factory is a high-variance environment. Even if your process is “stable,” it’s stable in the way a city is stable—most days look similar, but you’re always one event away from a traffic jam.

In practice, exception-aware systems assume that anomalies will happen and are designed to keep throughput high by routing edge cases to the right humans with the right context, fast. The AI isn’t just a classifier or a predictor; it’s part of an exception management workflow with queues, ownership, SLAs, and audit trails.

Define the unit of automation: decisions, not tasks

Most teams talk about “automating tasks,” but tasks are the visible layer. The real leverage is in automating decisions: approve, route, hold, release, rework, escalate. When you automate decisions, you stop arguing about whether AI “replaces” someone and start asking a better question: Which decisions can be made safely and repeatedly with the data we have?

This matters because decision boundaries are exactly where exceptions appear. Ambiguity lives there: missing context, conflicting signals, shifting constraints, or a situation that “never happens”—until it does. If your automation assumes the happy path, the first ambiguous case becomes a line stop or a workaround.

Here’s the hidden advantage: good AI acts as an exception filter that amplifies human attention rather than replacing it. It increases throughput by concentrating that attention on the few cases that actually matter.

Example: a replenishment workflow in an ERP can auto-approve standard orders (same vendor, normal lead times, stable consumption). But when the vendor’s lead time suddenly changes, or demand spikes against a constrained component, the AI flags it and routes it to a planner with the relevant context—recent usage, open POs, supplier performance, and the schedule impact. That’s manufacturing workflow orchestration in the real world: not magic, but disciplined decision support.
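The replenishment example can be sketched as a rule-based gate that either auto-approves or routes to a planner with its reasons attached. This is a minimal illustration under stated assumptions, not an ERP API: the `ReorderRequest` fields, the 20% lead-time tolerance, and the 1.5x demand-spike factor are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ReorderRequest:
    # Hypothetical fields; a real ERP would supply these from master and transactional data.
    vendor_id: str
    usual_vendor_id: str
    quoted_lead_time_days: float
    typical_lead_time_days: float
    recent_daily_usage: float
    baseline_daily_usage: float
    component_constrained: bool

LEAD_TIME_TOLERANCE = 0.20  # assumed: a >20% lead-time change counts as abnormal
DEMAND_SPIKE_FACTOR = 1.5   # assumed: usage above 1.5x baseline counts as a spike

def route_reorder(req: ReorderRequest) -> tuple[str, list[str]]:
    """Return ("auto_approve", []) or ("route_to_planner", reasons)."""
    reasons = []
    if req.vendor_id != req.usual_vendor_id:
        reasons.append("non-standard vendor")
    lead_change = abs(req.quoted_lead_time_days - req.typical_lead_time_days)
    if lead_change > LEAD_TIME_TOLERANCE * req.typical_lead_time_days:
        reasons.append("lead time changed")
    spike = req.recent_daily_usage > DEMAND_SPIKE_FACTOR * req.baseline_daily_usage
    if spike and req.component_constrained:
        reasons.append("demand spike on constrained component")
    return ("route_to_planner", reasons) if reasons else ("auto_approve", [])
```

The reasons list doubles as the first piece of the planner's context package, so the human sees why the order was not auto-approved.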

Exceptions are not bugs—they’re where margin leaks or is protected

On the floor, exceptions aren’t annoying edge cases. They’re where you either lose margin quietly or protect it deliberately.

Think about what exceptions drive:

- Scrap and rework when out-of-tolerance conditions aren’t contained early

- Expedited freight when schedule issues are discovered too late

- Unplanned downtime when drift isn’t detected until the process fails

- Quality escapes that trigger returns, chargebacks, or customer shutdowns

- Safety incidents when abnormal conditions are handled informally

That’s why exception handling in manufacturing needs to be designed as a workflow, not a panic button. You want defined owners, clear escalation paths, and measurable SLAs. Done well, it improves operational efficiency, lifts first-pass yield, and supports continuous improvement in manufacturing without pretending you can predict everything.

Five typical shop-floor exceptions you can design for immediately:

- Out-of-tolerance measurement (SPC drift, gauge issues, or process shift)

- Missing components (kitting errors, mispicks, or supplier shortages)

- Machine drift (tool wear, fixture wear, thermal variation)

- Label mismatch (wrong revision, wrong customer label, serialization issues)

- Urgent engineering change (ECN) impacting work instructions mid-run

Why traditional automation breaks on the shop floor (and how to fix it)

Traditional automation fails in manufacturing for the same reason brittle software fails anywhere: it assumes the world will politely match the requirements document. The shop floor does not cooperate.

Modern manufacturing AI automation can be a genuine step-change—but only if you treat variability as the default condition and build systems that absorb it.

The 80/20 trap: automating the easy 80% and failing on the hard 20%

Pilots often look great because they focus on the easy 80%: clean data, stable conditions, friendly operators, a narrow set of SKUs, and a workflow designed around the model rather than the plant. Then the hard 20% arrives—reflective surfaces, new packaging, a revised spec, a different shift—and the system breaks. Operators lose trust. Workarounds appear. Shadow IT becomes the real production system.

The cost of these failure modes is not “bad AI.” It’s disruption:

- Line stops or blocked shipments

- Operators bypassing checks to keep up with takt time

- Quality teams drowning in false positives

- IT teams stuck babysitting integrations instead of improving them

The fix is counterintuitive: design for the 20% first—at least the routing, escalation, and fallback. Your model can improve over time, but your exception workflow must work on day one.

Mini-case: vision-based quality inspection automation catches obvious defects on matte parts. Then a reflective finish is introduced. The model struggles; false rejects spike. Without a review queue and “unknown” outcome, you either pass bad parts or stop the line. With exception routing, the system holds uncertain parts for human review, batches similar images for fast disposition, and keeps production moving.

For a broader view of AI’s impact on manufacturing operations, and where it tends to break, see McKinsey’s operations insights: https://www.mckinsey.com/capabilities/operations/our-insights.

Brittle integrations create brittle decisions

Most AI failures in factories aren’t model failures. They’re integration failures. If the system can’t reliably answer basic questions—what part is this, which revision applies, what operation are we in, what lot is this tied to—then every downstream “decision” is suspect.

Common integration points include:

- Manufacturing execution system (MES): work orders, routings, operation status, traceability

- ERP: BOMs, inventory, purchasing, planning signals

- QMS: nonconformance, CAPA, inspection plans

- SCADA/PLC: real-time signals and machine states

- Historians: time-series context for analytics and root cause analysis

The classic failure: misaligned identifiers. A part ID in ERP doesn’t map cleanly to a routing step in MES, or a revision change updates the BOM but not the work instruction selection logic. Your AI can be perfect and still trigger the wrong action.

The fix has three parts: event-driven orchestration (react to plant events in real time), ID mapping (a shared translation layer), and a canonical decision record that stores what the system knew when it acted. That’s how you turn industrial automation software from a web of scripts into a resilient factory automation platform.
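A minimal sketch of the ID-mapping layer, assuming hypothetical identifier formats: the translation table resolves an ERP part number plus revision to an MES routing, and refuses to guess when no mapping exists, so a missing or conflicting mapping becomes a routed exception instead of a wrong action.

```python
class MappingError(LookupError):
    """Raised when an identifier cannot be translated; callers route this as an exception."""

class IdMap:
    def __init__(self) -> None:
        # (erp_part, revision) -> mes_routing_id; all identifiers here are hypothetical
        self._erp_to_mes: dict[tuple[str, str], str] = {}

    def register(self, erp_part: str, revision: str, mes_routing: str) -> None:
        key = (erp_part, revision)
        existing = self._erp_to_mes.get(key)
        if existing is not None and existing != mes_routing:
            raise MappingError(f"conflicting routing for {key}: {existing} vs {mes_routing}")
        self._erp_to_mes[key] = mes_routing

    def mes_routing(self, erp_part: str, revision: str) -> str:
        try:
            return self._erp_to_mes[(erp_part, revision)]
        except KeyError:
            # A new revision without a mapping must not silently reuse the old routing.
            raise MappingError(f"no MES routing for {erp_part} rev {revision}") from None
```

Keying on revision as well as part number is what catches the classic failure above, where a BOM revision changes but the work-instruction selection does not.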

When sensors lie: ambiguity, drift, and data gaps

Factories are physical systems. Sensors drift. Calibration schedules vary. Networks drop packets. Product mix changes. Even “good” data can become misleading when the process changes—what ML calls concept drift, and operators call “things feel off today.”

The design principle is graceful degradation: when confidence drops, move to human review; don’t guess. In practice, exception-handling automation should treat uncertainty as a normal operational state.

This is also where edge AI for manufacturing earns its keep. Latency-sensitive checks—like in-line inspection or predictive maintenance triggers—often need local validation and local fallbacks.

Example: a predictive maintenance model trained on vibration data detects early signs of bearing failure. But a network dropout or noisy signal can create phantom alerts. A robust system switches to “monitor only” mode when signal quality degrades, flags the uncertainty, and prompts a technician to confirm before scheduling downtime. That’s operator decision support, not algorithmic bravado.
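The monitor-only fallback can be expressed as a small mode switch. This sketch assumes a hypothetical signal-quality score in [0, 1] (for example, derived from packet loss or noise level) and illustrative thresholds; the point is that a degraded signal downgrades the action instead of triggering downtime.

```python
from enum import Enum

class Action(Enum):
    NONE = "none"
    MONITOR_ONLY = "monitor_only"              # keep watching and flag the uncertainty
    TECHNICIAN_CONFIRM = "technician_confirm"  # a human confirms before downtime is scheduled

MIN_SIGNAL_QUALITY = 0.8  # assumed cut-off for trusting the vibration channel
ALERT_SCORE = 0.7         # assumed model score that indicates bearing wear

def maintenance_action(failure_score: float, signal_quality: float) -> Action:
    if signal_quality < MIN_SIGNAL_QUALITY:
        # Degraded input: never act on the model alone; flag it and keep monitoring.
        return Action.MONITOR_ONLY
    if failure_score >= ALERT_SCORE:
        # Even with a good signal, downtime is scheduled only after human confirmation.
        return Action.TECHNICIAN_CONFIRM
    return Action.NONE
```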

A task distribution framework: what AI should do vs what humans should keep

Once you accept exceptions are normal, the next question is governance: Who decides what the AI is allowed to do? This is where projects either become political (“AI is replacing us”) or operational (“AI is removing our worst bottlenecks”).

The best approach we’ve seen is a simple rubric that turns subjective fear into objective design, and makes human-in-the-loop automation feel like process standardization rather than disruption.

Classify decisions by risk, reversibility, and context required

Use a three-axis framework for human-AI task distribution:

- Risk / safety impact: could a wrong decision cause harm, regulatory violation, or major customer impact?

- Reversibility: can you undo the decision cheaply (reprint, re-route) or is it irreversible (scrap, ship, safety bypass)?

- Context richness: does the decision require “tribal knowledge,” cross-system context, or nuanced judgment?

Guideline: automate decisions that are low-risk, reversible, and well-instrumented. Keep high-risk or irreversible decisions human-led, with AI providing structured context and recommendations.

A rough mapping of example decisions:

- Reprint a label: AI can auto-do it after validation

- Adjust reorder point: AI recommends; planner reviews for constrained parts

- Scrap disposition: human decision, AI provides evidence (images, measurements, history)

- Safety interlock bypass: never automate; often shouldn’t be allowed at all
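The three-axis rubric can be encoded so every new decision type gets an explicit autonomy level. This is a sketch under assumed 1-to-3 scales and assumed cut-offs; the scores used for the four decisions above are illustrative, not calibrated.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DecisionProfile:
    name: str
    risk: int           # 1 (low) .. 3 (high): safety, regulatory, or customer impact
    reversibility: int  # 1 (cheap to undo) .. 3 (irreversible)
    context: int        # 1 (well-instrumented) .. 3 (needs tribal knowledge)
    safety_critical: bool = False

def autonomy_level(d: DecisionProfile) -> str:
    if d.safety_critical:
        return "never_automate"
    if d.risk == 3 or d.reversibility == 3:
        return "human_decides_ai_provides_evidence"
    if d.risk == 1 and d.reversibility == 1 and d.context == 1:
        return "ai_auto_acts"
    return "ai_recommends_human_reviews"

# Illustrative scores for the examples above (assumptions, not calibrated values)
examples = [
    DecisionProfile("reprint_label", 1, 1, 1),
    DecisionProfile("adjust_reorder_point", 2, 1, 2),
    DecisionProfile("scrap_disposition", 2, 3, 2),
    DecisionProfile("safety_interlock_bypass", 3, 3, 3, safety_critical=True),
]
```

Writing the rubric down this way turns "should AI do this?" into a reviewable table of scores that operations, quality, and safety can argue about explicitly.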

This reframes manufacturing AI use cases around operational ownership. You’re not “deploying AI.” You’re defining which decisions are safe to standardize and which need escalation.

Human-in-the-loop patterns that actually scale

Human-in-the-loop automation fails when “human review” means “someone gets pinged randomly and drops everything.” It scales when you engineer review as a production system: queues, roles, batching, and SLAs.

Three patterns that work in practice:

- Tiered review queues: L1 operator for fast disposition, L2 engineer for technical judgment, L3 quality for containment/CAPA triggers

- Skill-matrix routing: route exceptions to certified reviewers for specific product families or processes

- Batch review vs interrupt-driven review: batch cosmetic or low-risk issues; interrupt only for safety/containment
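Tiered queues and skill-matrix routing reduce to a lookup plus a fallback. A minimal sketch with a hypothetical in-memory skill matrix and hypothetical reviewer names; in practice certifications would come from a training or HR system.

```python
TIER_BY_SEVERITY = {
    "fast_disposition": "L1_operator",
    "technical": "L2_engineer",
    "containment": "L3_quality",
}

# Hypothetical skill matrix: reviewer -> set of (tier, product_family) certifications
SKILLS = {
    "ana": {("L1_operator", "molded"), ("L1_operator", "machined")},
    "raj": {("L2_engineer", "molded")},
    "kim": {("L3_quality", "molded"), ("L3_quality", "machined")},
}

def route(severity: str, product_family: str, on_shift: set[str]) -> str:
    tier = TIER_BY_SEVERITY[severity]
    for reviewer in sorted(on_shift):  # sorted for deterministic assignment
        if (tier, product_family) in SKILLS.get(reviewer, set()):
            return reviewer
    # No certified reviewer on shift: park it in the tier queue and let SLA escalation handle it.
    return f"queue:{tier}:{product_family}"
```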

Example: a defect review queue for AI-powered quality control groups similar images (same camera, same product family, similar anomaly) so an inspector can approve or reject in batches. Throughput goes up not because the AI is “smarter,” but because you reduced cognitive load.
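Grouping for batch review can be as simple as keying on the attributes the example lists. A sketch assuming each flagged image already carries a camera ID, product family, and anomaly label from the model (all hypothetical field names).

```python
from collections import defaultdict

def batch_for_review(items: list[dict]) -> list[list[dict]]:
    """Group flagged images so an inspector can disposition similar cases together."""
    groups: dict[tuple, list[dict]] = defaultdict(list)
    for item in items:
        key = (item["camera"], item["product_family"], item["anomaly"])
        groups[key].append(item)
    # Largest batches first: they give the biggest reduction in review effort.
    return sorted(groups.values(), key=len, reverse=True)
```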

Preserve expertise: turn tribal knowledge into decision assets

The biggest long-term value of a human-in-the-loop system isn’t the first model. It’s the feedback loop.

Every time a human overrides the AI, you should capture why. Those rationales can become:

- New rules (threshold adjustments, guardrails)

- New labeled data for retraining

- New sensors or checks (because the current signals don’t capture reality)

- Better root cause analysis when patterns repeat

Example: operators explain that a “good-looking” weld is risky because fixture wear is causing subtle misalignment. That note becomes a new feature (fixture age / maintenance state) and eventually a new sensor check. This is continuous improvement in manufacturing applied to AI: kaizen for decision-making, not just cycle time.
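Capturing override reasons is mostly a data-design problem: a short list of reason codes plus free text, then a count of recurring reasons so they surface as candidate rules, labels, or sensor requests. A minimal sketch with hypothetical reason codes and an assumed recurrence threshold of 3.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Override:
    decision_id: str
    ai_outcome: str
    human_outcome: str
    reason_code: str  # hypothetical codes, e.g. "fixture_wear", "lighting", "spec_ambiguity"
    note: str = ""    # free-text rationale from the operator

REVIEW_THRESHOLD = 3  # assumed: a reason seen this often triggers an improvement review

def improvement_candidates(overrides: list[Override]) -> list[tuple[str, int]]:
    # Count only true disagreements between the AI and the human.
    counts = Counter(o.reason_code for o in overrides if o.ai_outcome != o.human_outcome)
    return [(code, n) for code, n in counts.most_common() if n >= REVIEW_THRESHOLD]
```

In the weld example, a cluster of "fixture_wear" overrides is exactly the signal that justifies adding fixture age as a feature or a new sensor check.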

Exception routing design: the patterns that keep production moving

At this point, you can see the core idea: manufacturing AI automation is less about prediction and more about routing. The best systems feel like an air traffic control tower—most flights land automatically, but the tower is ready for weather, congestion, and emergencies.

This section turns that idea into concrete production exception routing patterns you can implement.

Confidence thresholds + “unknown” as a first-class outcome

Many teams treat confidence like a UI detail. It’s not. Confidence is policy.

You need calibrated thresholds that map to actions:

- Auto-act when confidence is high and the decision is low-risk/reversible

- Ask for review when confidence is mid-range or the cost of a mistake is meaningful

- Escalate when confidence is low, signals conflict, or the exception indicates a systemic issue

The key design move is making “I don’t know” safer than “I’m guessing.” If your model is forced to pick, it will make confident mistakes—exactly the kind that cause escapes or stoppages.

Example in quality inspection automation: auto-pass when the model’s confidence that the part is good exceeds threshold X, auto-fail when its confidence in a clear defect exceeds threshold Y, and route everything in between to a review queue with the top-3 reasons/features. Tune thresholds per product family, and even per shift, based on staffing and inspector capability.
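The three-band policy from the quality-inspection example fits in a few lines. A sketch assuming the model outputs a calibrated defect probability, so "confident the part is good" means a low value; the per-family thresholds are placeholders, not recommended values.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Bands:
    auto_pass_below: float  # defect probability below this -> auto-pass
    auto_fail_above: float  # defect probability above this -> auto-fail (clear defects)

# Placeholder thresholds per product family; tune from calibration data and review capacity.
BANDS = {"matte": Bands(0.05, 0.95), "reflective": Bands(0.02, 0.98)}

def disposition(p_defect: float, family: str) -> str:
    b = BANDS.get(family)
    if b is None:
        return "review"  # unknown family: no policy exists, so never auto-act
    if p_defect < b.auto_pass_below:
        return "auto_pass"
    if p_defect > b.auto_fail_above:
        return "auto_fail"
    return "review"  # "unknown" is a first-class outcome, not a forced guess
```

Keeping the bands in data rather than in model code is what lets you tune them per product family (the tighter "reflective" band here) without retraining.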

This is also where AI agents for shop-floor decision routing become practical. An agent doesn’t just predict; it packages evidence, chooses the right queue, and triggers the next workflow step. Our AI agent development work for shop-floor decision routing shows how we approach this.

Queue design: SLAs, batching, and anti-line-stop mechanisms

Queues are how you prevent exceptions from becoming interruptions. The rule of thumb: interrupt only when containment requires it.

Design at least two classes of queues:

- Line-stopping exceptions: safety, containment, wrong part/revision, critical defect risk

- Line-continuing exceptions: cosmetic issues, documentation mismatches that can be resolved before ship, non-critical drift signals

Then add operational mechanics:

- SLAs and aging rules: if not reviewed in time, escalate automatically

- Batching: group similar low-urgency items to speed human review

- Buffers: allow WIP to continue temporarily when safe, buying time for resolution

Example: missing component detected at a station. If you have buffer WIP and containment rules allow it, let the line continue for 15 minutes while paging the material handler. If the SLA is breached, escalate to the supervisor; if a pattern repeats, escalate to planning and supplier management. This is manufacturing workflow orchestration as a control system, not a dashboard.
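The missing-component example is an aging rule combined with a recurrence rule. A sketch using the 15-minute buffer window from the example; the repeat limit and the role names are assumptions.

```python
from datetime import timedelta

BUFFER_WINDOW = timedelta(minutes=15)  # from the example: safe run time on buffer WIP
REPEAT_LIMIT = 3                       # assumed: repeats per week that count as a pattern

def handle_missing_component(age: timedelta, has_buffer_wip: bool,
                             containment_allows_run: bool,
                             repeats_this_week: int) -> tuple[bool, list[str]]:
    """Return (line_may_keep_running, who_to_notify) for an open missing-component exception."""
    run_allowed = has_buffer_wip and containment_allows_run
    # The line keeps running automatically only inside the buffer window.
    may_run = run_allowed and age <= BUFFER_WINDOW
    notify = ["material_handler"]
    if not may_run:
        notify.append("supervisor")  # SLA breached, or no safe buffer to begin with
    if repeats_this_week >= REPEAT_LIMIT:
        notify.append("planning_and_supplier_mgmt")  # recurring pattern, not a one-off
    return may_run, notify
```

Once the window expires, continuing is a supervisor decision rather than an automatic one, which keeps the buffer from quietly turning into a bypass.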

Escalation paths: from operator to engineer to supplier

Escalation should be boring. If it’s dramatic, you’re doing it wrong.

A good escalation path is tiered and context-rich:

- Operator gets a concise prompt: what happened, what to check, what’s safe to do next

- Engineer receives a context package: images, sensor traces, work order details, machine state, lot genealogy

- Quality receives structured evidence for nonconformance and CAPA when recurrence thresholds are hit

Two design requirements make this scalable:

- Decision record: who decided, when, why, with what evidence (and which model version)

- Closed loop: repeated patterns automatically trigger corrective action workflows

Example: a nonconformance is routed to a quality engineer. If the same defect pattern appears across lots, the system auto-creates a CAPA ticket in the QMS and links evidence. For a primer on CAPA and nonconformance basics, ASQ is a solid starting point: https://asq.org/quality-resources/corrective-and-preventive-action.
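The recurrence trigger can be sketched as counting distinct lots per defect pattern. Assumptions: a "pattern" is a (part, defect code) pair, three distinct lots trigger a CAPA, and `create_capa` is a hypothetical callback standing in for whatever QMS API you actually have.

```python
from collections import defaultdict
from typing import Callable

RECURRENCE_LOTS = 3  # assumed threshold: same defect seen in 3 distinct lots

class RecurrenceTracker:
    def __init__(self, create_capa: Callable[[tuple, list, list], None]) -> None:
        self._lots = defaultdict(set)       # (part, defect_code) -> lots seen
        self._evidence = defaultdict(list)  # (part, defect_code) -> evidence IDs
        self._opened = set()                # patterns that already have a CAPA
        self._create_capa = create_capa     # hypothetical QMS hook

    def record_nonconformance(self, part: str, defect_code: str, lot: str,
                              evidence_ids: list) -> None:
        key = (part, defect_code)
        self._lots[key].add(lot)
        self._evidence[key].extend(evidence_ids)
        if len(self._lots[key]) >= RECURRENCE_LOTS and key not in self._opened:
            self._opened.add(key)  # open one CAPA per pattern, not one per lot
            self._create_capa(key, sorted(self._lots[key]), list(self._evidence[key]))
```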

Integration blueprint: making exception-aware automation work with MES/ERP/QMS

Integration is where “AI projects” become production systems. If you treat MES/ERP/QMS as afterthoughts, your automation will always be a fragile overlay. If you integrate deliberately, exception-aware automation becomes part of how work actually flows.

Where the workflow should live: orchestration layer vs point solutions

Point solutions are tempting because they’re fast: a model here, a dashboard there. But factories run on cross-system workflows. The sustainable approach is an orchestration layer that coordinates actions across MES, ERP, and QMS based on events and decision rules.

In plain terms, event-driven architecture means: “When exception X happens, do Y.” Not next week. Now. And with context.

Example: MES posts “operation complete.” The orchestration layer runs a quick SPC drift check. If drift is detected, it opens a QMS nonconformance and pauses the release of the next lot until review. Importantly, you avoid hardcoding business logic into the model; you keep logic in the workflow layer where ops teams can own it.
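A sketch of that event handler, assuming a hypothetical event shape; the side effects (opening a nonconformance, holding the next lot) are stubbed as strings appended to an `actions` list rather than calls to a real MES or QMS API. The drift check is one simple run rule, N consecutive measurements on the same side of the center line, in the spirit of the classic Western Electric rules; N = 8 is an assumption.

```python
RUN_LENGTH = 8  # assumed run-rule length

def run_rule_drift(values: list[float], center: float, n: int = RUN_LENGTH) -> bool:
    """True if the last n points all sit on the same side of the center line."""
    if len(values) < n:
        return False
    tail = values[-n:]
    return all(v > center for v in tail) or all(v < center for v in tail)

def on_operation_complete(event: dict, history: list[float], center: float,
                          actions: list[str]) -> None:
    # Business logic lives here in the workflow layer, not inside the model.
    history.append(event["measurement"])
    if run_rule_drift(history, center):
        actions.append(f"open_nonconformance:{event['operation_id']}")
        actions.append(f"hold_next_lot_release:{event['work_order']}")
```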

For the integration concepts that shape these architectures, ISA-95 is the canonical reference for enterprise-control system integration: https://www.isa.org/standards-and-publications/isa-standards/isa-95.

This is also where our work at Buzzi.ai tends to start: workflow and process automation for manufacturing exceptions—building the routing, queues, and decision records that make AI safe and operational.

Traceability and auditability by design

If you want operators and quality teams to trust automation, you need traceability. Not “we can reproduce it later.” Traceability now.

Your decision record should include:

- Inputs (measurements, images, key master data references)

- Model version and configuration

- Thresholds used and resulting action

- User overrides and rationale

- Timestamps and identities (role-based, not blame-based)

Then link that record to genealogy: materials, machines, shifts, and process parameters. This supports regulated environments and makes audits survivable.
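One way to structure that record, as a sketch: an immutable dataclass whose fields mirror the list above, serialized to JSON for an append-only store. Field names and example values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    inputs: dict             # measurements, image refs, master-data references
    model_version: str
    model_config: dict
    thresholds: dict         # thresholds in force when the action was taken
    action: str
    decided_by_role: str     # role-based identity, e.g. "L1_operator" or "system"
    override_rationale: Optional[str] = None
    genealogy: dict = field(default_factory=dict)  # lot, materials, machine, shift
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)
```

Recording the thresholds alongside the model version matters for audits: the same model with a different band can make a different call on the same part.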

Example audit question: “Why did lot 24A ship despite a borderline measurement?” A well-designed system answers with the measured value, the spec, the model’s confidence, the human disposition, and the evidence used. For governance thinking that maps well to risk-based controls, NIST’s AI RMF 1.0 is worth reading: https://www.nist.gov/itl/ai-risk-management-framework.

Edge vs cloud: latency, reliability, and data gravity

Where should the AI run? The answer is usually hybrid, driven by latency and reliability.

- Edge: latency-sensitive inspection, near-real-time anomaly detection, and safety-adjacent checks

- Cloud/hybrid: analytics, model training, fleet monitoring, and cross-site benchmarking

Two non-negotiables for shop floor integration:

- Offline mode: routing must still work during connectivity loss (even if it becomes more conservative)

- Data minimization: especially for regulated products or IP-sensitive processes

Example: an edge camera model flags defects locally and routes uncertain cases to a queue. Only exception samples are uploaded for review and training, reducing bandwidth and exposure while improving the model.
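A sketch of both non-negotiables at the edge, assuming the model outputs a defect probability: when the uplink is down the auto-pass band narrows (more conservative), and only uncertain or rejected samples are queued for upload. The thresholds and the offline tightening factor are assumptions.

```python
from collections import deque

AUTO_PASS_BELOW, AUTO_FAIL_ABOVE = 0.05, 0.95  # assumed thresholds
OFFLINE_TIGHTEN = 0.5  # assumed: halve the auto-pass band while offline

upload_queue: deque = deque(maxlen=10_000)  # bounded local buffer for exception samples

def edge_disposition(sample_id: str, p_defect: float, online: bool) -> str:
    pass_below = AUTO_PASS_BELOW if online else AUTO_PASS_BELOW * OFFLINE_TIGHTEN
    if p_defect < pass_below:
        return "auto_pass"  # routine passes stay local; nothing is uploaded
    outcome = "auto_fail" if p_defect > AUTO_FAIL_ABOVE else "review_queue"
    upload_queue.append((sample_id, outcome))  # exceptions only, sent when the link is up
    return outcome
```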

Measuring ROI the right way: KPIs for exception-aware automation

“Percent automated” is a vanity metric in manufacturing AI automation. It’s like measuring a hospital by “percent of patients seen by robots.” The point isn’t robotics; the point is outcomes.

Exception-aware automation is measurable, but you need the right scoreboard—one that predicts stability and operational efficiency.

Shift from ‘% automated’ to ‘exception performance’

Track the exception system like you’d track any production process. The core metrics:

- Exception rate: exceptions per 1,000 units (or per work order)

- Exception aging: how long items sit unaddressed

- Time-to-triage: time from detection to first human disposition

- Time-to-resolution: time from detection to closure

- Reopen rate: how often issues resurface after “resolution”

A sample KPI set for a 90-day pilot line might look like:

- Cut time-to-triage from 30 minutes to under 10 minutes

- Reduce exception aging (95th percentile) below 2 hours for line-continuing queues

- Reduce reopen rate by 20% through better context packages and CAPA triggers

These KPIs make your exception management workflow visible—and therefore improvable.
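These metrics fall out of a simple exception log. A sketch assuming each exception has detection, first-disposition, and closure timestamps (plain minutes here for brevity) plus a reopened flag; the field names are hypothetical.

```python
from statistics import median, quantiles

def exception_kpis(exceptions: list[dict], units_produced: int, now: float) -> dict:
    triage = [e["triaged"] - e["detected"] for e in exceptions if e.get("triaged") is not None]
    resolved = [e for e in exceptions if e.get("closed") is not None]
    resolution = [e["closed"] - e["detected"] for e in resolved]
    open_ages = [now - e["detected"] for e in exceptions if e.get("closed") is None]
    return {
        "exceptions_per_1000_units": 1000 * len(exceptions) / units_produced,
        "median_time_to_triage": median(triage) if triage else None,
        "median_time_to_resolution": median(resolution) if resolution else None,
        # 95th percentile of open-item age; "inclusive" keeps it within observed values
        "p95_open_aging": (quantiles(open_ages, n=20, method="inclusive")[-1]
                           if len(open_ages) >= 2 else None),
        "reopen_rate": (sum(bool(e.get("reopened", False)) for e in resolved) / len(resolved)
                        if resolved else None),
    }
```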

Operational outcomes executives care about

Ultimately, executives care about a short list: OEE, first-pass yield, scrap, rework hours, downtime minutes, and expedite spend. Exception performance is how you influence those without turning your line into a science fair.

Two recommendations:

- Baseline first: measure current exception rates and resolution times before “improvement” begins

- Use comparison groups: compare similar shifts, lines, or product families with and without the change, so you don’t attribute seasonal demand changes to your model

And be careful with accuracy obsession. A slightly “worse” model that routes exceptions cleanly can outperform a “better” model that generates chaos. For many manufacturing AI use cases, stability beats brilliance.

Practical first pilots: 90-day exception-aware projects that work

Most plants don’t need a moonshot. They need a pilot that improves daily life on the floor, proves value, and creates a foundation for more autonomy later.

Here are three 90-day manufacturing AI automation pilots that work precisely because they are human-in-the-loop by design.

Pilot #1: AI-assisted quality triage (not full inspection replacement)

This is the fastest path to value for AI-powered quality control: use AI to sort and route, not to replace inspectors.

How it works:

- Capture images/measurements as you do today

- AI flags likely defects, groups similar cases, and creates a review queue

- Implement an “unknown” bucket and a sampling strategy

- Inspector feedback becomes labeled data for continuous improvement

Example: cosmetic defects on molded parts in discrete manufacturing. The AI routes obvious defects to one queue, obvious passes to another, and uncertain cases to a batch review queue. Inspectors stay in control, but they spend their time where it matters most. This is human-in-the-loop AI for quality control, done pragmatically.
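The "sampling strategy" bullet deserves a concrete shape: a small random audit of confident passes keeps measuring what the model misses. A sketch with an assumed 2% audit rate and assumed 0.1/0.9 triage cut-offs; the random generator is passed in so results are reproducible in tests. Real rates would follow your quality plan.

```python
import random

AUDIT_RATE = 0.02  # assumed fraction of confident passes sent to human audit

def triage_queue(p_defect: float, rng: random.Random) -> str:
    if p_defect >= 0.9:
        return "likely_defect_queue"
    if p_defect <= 0.1:
        # Audit a random slice of confident passes to estimate the escape rate.
        return "audit_queue" if rng.random() < AUDIT_RATE else "pass"
    return "uncertain_batch_review"
```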

Pilot #2: Exception-aware document + work-instruction automation

Document chaos is a silent quality killer. Digital work instructions help, but only if they’re tied to the right revision, product, and lot—and if exceptions are routed to the right owners.

In this pilot:

- Work instructions are dynamically assembled based on product/lot context

- Mismatch exceptions (wrong revision, missing step, conflicting spec) are flagged early

- ECN-related issues are routed to engineering—not dumped on operators

Example: a torque spec changes in a revision. The system detects that the station is still referencing the old spec and flags it before assembly proceeds. Instead of “someone noticed,” you get a traceable exception and a fast resolution path.
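The torque-spec check reduces to comparing what a station has loaded against what is released for the lot being built. A sketch with hypothetical identifiers and fields; the released revision and spec would come from your engineering or ERP system.

```python
from dataclasses import dataclass

@dataclass
class StationState:
    station_id: str
    loaded_instruction_rev: str
    loaded_torque_nm: float

def check_station(state: StationState, released_rev: str,
                  released_torque_nm: float) -> list[str]:
    """Return mismatch exceptions; an empty list means assembly may proceed."""
    issues = []
    if state.loaded_instruction_rev != released_rev:
        issues.append(f"{state.station_id}: instruction rev "
                      f"{state.loaded_instruction_rev} != released {released_rev}")
    if abs(state.loaded_torque_nm - released_torque_nm) > 1e-6:
        issues.append(f"{state.station_id}: torque {state.loaded_torque_nm} Nm "
                      f"!= spec {released_torque_nm} Nm")
    return issues  # non-empty -> route to engineering, not to the operator
```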

Pilot #3: Production scheduling “sanity checks” and escalation

Scheduling isn’t just optimization; it’s constraint management. Materials, tooling, labor, maintenance windows, and supplier uncertainty collide in real time.

In this pilot, AI runs sanity checks and proposes alternatives:

- Detect constraint violations (material shortages, tooling conflicts, labor gaps)

- Suggest resequencing options that protect throughput

- Auto-apply low-impact changes; route high-impact changes to planners

Example: a late supplier ASN triggers a schedule exception. The system suggests resequencing to keep the line running while protecting due dates on constrained orders. Integrated with a manufacturing execution system (MES) and ERP, this becomes production exception routing that feels like operational leverage rather than “AI.”
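A minimal sketch of the late-ASN case: check material coverage job by job, push jobs without material to the back, and classify the result by impact so low-impact swaps auto-apply while anything that makes a constrained order late goes to a planner. The `Job` fields, the greedy allocation, and the impact rule are all assumptions for illustration, not a scheduling algorithm recommendation.

```python
from dataclasses import dataclass

@dataclass
class Job:
    job_id: str
    material: str
    qty_needed: int
    due_slot: int      # latest acceptable sequence position (0-based)
    constrained: bool  # constrained order whose due date must be protected

def resequence(jobs: list[Job], on_hand: dict[str, int]) -> tuple[list[Job], str]:
    """Move jobs with insufficient material to the back; return (new_order, decision)."""
    stock = dict(on_hand)  # copy so the caller's inventory snapshot is not mutated
    ready, blocked = [], []
    for job in jobs:  # allocate material greedily in the current sequence order
        if stock.get(job.material, 0) >= job.qty_needed:
            stock[job.material] -= job.qty_needed
            ready.append(job)
        else:
            blocked.append(job)
    new_order = ready + blocked
    if not blocked:
        return new_order, "no_change"
    late_constrained = [j for i, j in enumerate(new_order) if j.constrained and i > j.due_slot]
    return new_order, ("route_to_planner" if late_constrained else "auto_apply")
```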

Conclusion: build for exceptions, then earn autonomy

Manufacturing AI automation succeeds when it treats exceptions as the core design problem, not an edge case you’ll “handle later.” The right goal is higher throughput and quality with fewer disruptions—not a higher “% automated.”

Human-in-the-loop automation scales when routing, SLAs, and audit trails are engineered like any other production system. Integration with MES/ERP/QMS should produce a decision record that preserves context, supports compliance, and accelerates continuous improvement. And the best AI automation platform for discrete manufacturing is the one that makes your plant more resilient under variance, not just impressive in demos.

If you’re evaluating manufacturing AI automation, start by mapping your top 10 exception types and their current resolution path. Then design the future-state workflow where AI routes routine decisions and escalates edge cases to the right humans with traceability built in.

We can help you do that at Buzzi.ai, especially if you want manufacturing workflow automation with human-AI task distribution that works in production. Start with workflow and process automation for manufacturing exceptions, or book a discovery call via our contact page.

FAQ

What is exception-aware manufacturing AI automation?

Exception-aware manufacturing AI automation is an approach where AI handles routine, low-risk decisions and routes ambiguous or high-impact cases to humans through a designed workflow. Instead of forcing “full automation,” the system treats “unknown” and escalation as normal outcomes. The result is fewer line stops, better containment, and a measurable reduction in resolution time for the issues that actually drive cost.

Why do manufacturing automation projects fail when edge cases appear?

They fail because most projects optimize for the happy path: clean data, stable conditions, and a narrow set of scenarios. When real variance shows up—new SKUs, sensor drift, reflective materials, supplier issues—the automation has nowhere to send uncertainty. Without queues, SLAs, and owners, the 20% becomes chaos: overrides, workarounds, mistrust, and eventually abandonment.

Which manufacturing decisions are safe to automate—and which should stay human-led?

A practical rule is to automate decisions that are low-risk, reversible, and supported by reliable data. Decisions that are safety-adjacent, irreversible (like scrap or ship), or heavily dependent on nuanced context should remain human-led, with AI providing evidence and recommendations. This framing keeps automation grounded in operational reality instead of ideology.

How do you design a human-in-the-loop workflow that operators will actually use?

You make review predictable and lightweight: clear queues, clear ownership, and minimal interruptions. Batch low-urgency reviews, interrupt only for containment or safety, and route by skill matrix so the right people see the right exceptions. Most importantly, capture override reasons so operators feel heard and the system improves over time.

What are the best patterns for production exception routing and escalation?

Use confidence thresholds with “unknown” as a first-class outcome, then map thresholds to actions: auto-act, ask for review, or escalate. Build tiered escalation paths (operator → engineer → quality/supplier) and always attach a context package (images, traces, work order details, genealogy). Finally, enforce SLAs and aging rules so exceptions don’t quietly become tomorrow’s downtime.

How can AI improve quality control without replacing inspectors?

AI can triage: sort likely defects, group similar cases, and route uncertain items to inspectors in a way that reduces cognitive load. Inspectors stay responsible for final disposition, while AI reduces the volume of manual review and speeds containment. Over time, inspector feedback becomes labeled data that improves the system and shifts more decisions into the “safe to automate” category.

How do confidence thresholds and review queues work in practice?

You define two or three action bands based on calibrated confidence and risk. High-confidence, low-risk outcomes can be auto-applied; mid-confidence outcomes go to a review queue; low-confidence or high-impact signals escalate immediately. If you need help designing these bands and the surrounding workflow, Buzzi.ai’s workflow process automation service is built specifically for routing and escalation in production environments.

How do you integrate exception-aware automation with MES, ERP, and QMS systems?

Start with an orchestration layer that listens to events (like operation complete, measurement recorded, material issued) and triggers workflows across systems. Keep business logic in the workflow layer, not buried inside model code, and maintain a decision record for traceability. This approach reduces brittle coupling and makes it easier to evolve rules, thresholds, and escalations as the plant learns.

What KPIs best measure the ROI of exception-aware automation?

Track exception rate, time-to-triage, time-to-resolution, exception aging, and reopen rate—these predict stability better than “% automated.” Then connect them to outcomes executives care about: OEE, first-pass yield, scrap, downtime minutes, and expedite spend. If those outcomes move while exceptions resolve faster and more consistently, you’ve found real ROI.

What are the best first pilots for manufacturing AI automation in 90 days?

AI-assisted quality triage is often the fastest, because it improves throughput without requiring full autonomy. Exception-aware digital work instructions are next, because they prevent revision-related errors and route ECN issues to engineering. Finally, scheduling sanity checks can deliver big savings by preventing constraint violations early and escalating the right decisions to planners rather than surprising the floor.