Design Machine Learning APIs That Evolve Faster Than Your Models

Learn evolution‑ready machine learning API development: stable contracts, versioning, and backward compatibility that let models change without breaking clients.

Most machine learning API development is done backwards. Teams ship a prediction endpoint wired directly to a single model snapshot, celebrate the launch, and only discover the real cost when the second or third retrain collides with a brittle API contract. The code is technically “in production,” but the interface is so tightly coupled to today’s model that tomorrow’s improvement looks like a breaking change.

An evolution‑ready ML API flips this. The API surface is designed to be stable, business‑oriented, and long‑lived, while models behind it are free to evolve aggressively—new architectures, retraining, A/B tests, even label changes—without forcing client rewrites. That’s the difference between a prototype wrapper and a real product interface.

In this article, we’ll walk through how to design evolution‑ready machine learning APIs in practice: decoupled contracts, backward compatibility, ML‑aware versioning, and rollout patterns like A/B tests and shadow deployment. We’ll also look at how MLOps pipelines and model registries slot into this picture. Along the way, we’ll share how we at Buzzi.ai approach evolution‑ready machine learning API development for enterprises that are tired of rebuilding integrations every time a model changes.

Why Traditional Machine Learning API Development Breaks at Model Two

Traditional machine learning API development works—until the first serious model evolution. The problem isn’t the model; it’s the hidden coupling between your API contract and the internals of whatever model you shipped first. By the time you retrain, you’ve accidentally turned the model’s feature space into a public interface.

The hidden coupling between models and API contracts

Most teams start with a simple rule: expose whatever the model needs as request fields and return whatever the model spits out as the response. On day one, this feels efficient. Your request/response schema is effectively a mirror of your feature engineering and prediction output.

Imagine a customer churn prediction endpoint whose request exposes fields like these:

- tenure_months

- monthly_spend

- used_feature_x_last_30_days

- feature_flag_experiment_17

- num_support_tickets_last_90_days

And in the response:

- churn_score_raw

- churn_bucket_rule_based

- model_version_hash

At first this looks fine: there is a tight mapping from the request/response schema to model behavior. But as the model lifecycle progresses, your features change. You swap in new embeddings, drop obsolete flags, and reshape inputs. Because the API exposed all of that directly, every feature tweak technically becomes an API change. That’s tight coupling in action.

Why retraining and replacement feel like ‘breaking changes’

Even when you keep the same fields, retraining can feel like pushing a new product, not an improved one. Suppose you run a fraud detection system that outputs a score from 0 to 1, with internal policies treating “block if score >= 0.8” as high risk. If your retrained model has a different calibration—maybe most legitimate transactions now land between 0.1 and 0.4—suddenly your old thresholds no longer make sense.

From the client’s perspective, the API contract didn’t change, but the meaning of the scores did. Risk teams see either a flood of false positives or a sudden drop in blocks. Stakeholders call it a regression; engineers say it’s an upgrade; product teams are stuck mediating. Without an explicit notion of behavioral guarantees in your machine learning API design, model evolution looks like a stream of breaking changes.
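To make the calibration problem concrete, here is a minimal Python sketch using invented scores for ten transactions (illustrative, not real data). The helper names `block_rate` and `threshold_for_rate` are hypothetical. It shows that a fixed 0.8 threshold blocks very different shares of traffic once the model is recalibrated, and that choosing a threshold per model version for a target block rate is one possible way (an assumption here, not the only fix) to keep the business decision stable.

```python
def block_rate(scores, threshold):
    """Share of transactions a 'block if score >= threshold' rule would block."""
    return sum(s >= threshold for s in scores) / len(scores)


def threshold_for_rate(scores, target_rate):
    """Threshold at the n-th highest score, so roughly target_rate of scores
    are blocked (ties at the cutoff can push the rate slightly higher)."""
    ranked = sorted(scores, reverse=True)
    n_block = int(len(ranked) * target_rate)
    return ranked[n_block - 1] if n_block > 0 else float("inf")


# Invented score samples for ten transactions, for illustration only.
old_model = [0.05, 0.10, 0.20, 0.30, 0.50, 0.60, 0.70, 0.82, 0.90, 0.95]
new_model = [0.02, 0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.60]

print(block_rate(old_model, 0.8))   # fixed 0.8 threshold on the old model
print(block_rate(new_model, 0.8))   # same threshold after recalibration
t = threshold_for_rate(new_model, block_rate(old_model, 0.8))
print(t, block_rate(new_model, t))  # per-model threshold restores the rate
```

In practice a per-version threshold would more plausibly be computed on a validation set and stored with the model’s metadata rather than derived from live traffic.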

API evolution vs. model evolution: two different clocks

The fundamental mistake is assuming APIs and models should evolve at the same pace. They shouldn’t. API contracts are like database schemas: they should move slowly, predictably, and with clear communication. Models are like the data inside: they change constantly, and that’s the point.

If you design your API as if it were a direct window into the current model, you tie those two clocks together. A more robust approach treats model evolution as configuration behind a stable interface: the endpoint stays the same, while a routing layer and a model registry decide which model serves it. That separation is what makes API stability and rapid model evolution compatible instead of adversarial.

What Is an Evolution‑Ready Machine Learning API?

So what is an evolution‑ready ML API in practical terms? Think of it as an interface that speaks the language of your business, not the language of your current model. It’s intentionally designed to outlive any individual model implementation.

Stable, business‑oriented contracts over model‑shaped schemas

An evolution‑ready machine learning API design starts by expressing the contract in business terms. Instead of mirroring features, you define a small number of well‑understood entities and decisions. In a credit risk context, that might mean sending a structured customer_profile and receiving a risk_assessment object.

For example, instead of dozens of raw inputs, your request could look like:

- customer_profile with validated demographics and financials

- product_context (loan type, amount, term)

And the response:

- risk_score (0–1, clearly documented)

- risk_band (e.g., low/medium/high)

- explanations (optional, model‑dependent)

This style of request/response schema helps ensure that when the underlying model changes, the interface doesn’t have to. You can keep API stability while swapping out models as your data and requirements evolve.
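A business‑oriented contract like this could be sketched with Python dataclasses. The specific profile fields, the band cutoffs, and the `_to_features` helper are all assumptions for illustration. The point is that the model only ever sees an internal feature dict derived from the contract, so a new model can change that derivation without touching the public schema.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class CustomerProfile:
    # Validated demographics and financials (illustrative subset).
    age: int
    annual_income: float
    existing_debt: float


@dataclass(frozen=True)
class ProductContext:
    loan_type: str
    amount: float
    term_months: int


@dataclass(frozen=True)
class RiskAssessment:
    risk_score: float                         # documented range: 0.0 to 1.0
    risk_band: str                            # "low" | "medium" | "high"
    explanations: Optional[List[str]] = None  # optional, model-dependent


def band_for(score: float) -> str:
    # Hypothetical cutoffs; in practice they belong to the documented contract.
    if score < 0.33:
        return "low"
    if score < 0.66:
        return "medium"
    return "high"


def _to_features(profile: CustomerProfile, product: ProductContext) -> dict:
    # Internal only: each model version may derive different features here
    # without any change to the public request schema.
    income = max(profile.annual_income, 1.0)
    return {
        "debt_to_income": profile.existing_debt / income,
        "loan_to_income": product.amount / income,
        "term_months": product.term_months,
    }


def assess(profile: CustomerProfile, product: ProductContext,
           model: Callable[[dict], float]) -> RiskAssessment:
    score = float(model(_to_features(profile, product)))
    score = min(max(score, 0.0), 1.0)  # enforce the documented 0-1 range
    return RiskAssessment(risk_score=score, risk_band=band_for(score))
```

Swapping `model` for a different callable changes nothing a client sees, as long as the score stays within the documented 0–1 range.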

Decoupling the API surface from model implementations

Once the contract speaks business language, you can fully decouple the surface from the implementation. The API exposes prediction endpoints like /v1/credit-risk:score, but internally routes to one of several models. A routing layer—or lightweight microservice—decides which concrete model version to call based on configuration.

This is where ML model deployment meets architecture. Rather than calling models directly, the API talks to a model gateway that looks up the appropriate artifact in a model registry, loads it, and serves the request. Models become plug‑ins: each has metadata (version, training dataset, performance metrics, approval status) that the gateway uses to decide eligibility for production. That’s how you get robust prediction endpoints that can absorb model churn.
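A minimal, in‑memory sketch of such a gateway follows. `ModelEntry`, `ModelGateway`, and the "highest offline AUC among approved versions" selection rule are hypothetical simplifications; a production gateway would more likely read an explicit champion binding from a real registry.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ModelEntry:
    version: str
    training_dataset: str
    auc: float                       # offline performance metric
    approval_status: str             # e.g. "approved", "pending", "rejected"
    predict: Callable[[dict], float]


class ModelGateway:
    """Public routes never name a model; the gateway resolves one per request."""

    def __init__(self, registry: Dict[str, List[ModelEntry]], routes: Dict[str, str]):
        self.registry = registry     # model name -> registered versions
        self.routes = routes         # public route -> model name

    def eligible(self, model_name: str) -> List[ModelEntry]:
        # Only approved versions may serve production traffic.
        return [m for m in self.registry[model_name] if m.approval_status == "approved"]

    def serve(self, route: str, payload: dict) -> dict:
        candidates = self.eligible(self.routes[route])
        if not candidates:
            raise LookupError(f"no approved model for {route}")
        chosen = max(candidates, key=lambda m: m.auc)  # simplistic policy
        return {"risk_score": chosen.predict(payload), "model_version": chosen.version}
```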

Supporting multiple models and rollout strategies by design

An evolution‑ready machine learning API assumes from day one that multiple model versions will coexist. It doesn’t treat A/B tests as one‑off hacks; it treats them as a first‑class capability. For example, you might configure the routing layer to send 10% of traffic from a particular region to a new model, while 90% continues to hit the current champion.

This is the natural home for A/B testing models, canary releases, and shadow deployment. Because the API surface is stable, you can run experiments behind the scenes without asking clients to change anything. Later we’ll dig into the specifics of encoding these strategies into your versioning and routing.
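The "10% of one region" split described above could be implemented with deterministic hash bucketing, sketched below. Hashing a stable key (here an assumed customer ID) keeps each customer on the same model across requests, which makes A/B results easier to interpret. The salt, region code, and percentage are illustrative parameters.

```python
import hashlib


def bucket(request_key: str, salt: str = "credit-risk-exp-1") -> int:
    """Map a stable key (e.g. a customer ID) to a bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{request_key}".encode()).hexdigest()
    return int(digest, 16) % 100


def choose_model(customer_id: str, region: str,
                 candidate_region: str = "eu", candidate_pct: int = 10) -> str:
    # Only the targeted region is eligible for the candidate; within it,
    # roughly candidate_pct percent of customers get the new model.
    if region == candidate_region and bucket(customer_id) < candidate_pct:
        return "candidate"
    return "champion"
```

Changing the salt reshuffles assignments, which is a convenient way to start a fresh experiment without reusing the previous split.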

Choosing a Versioning Strategy for Machine Learning APIs

Once you’ve decoupled contracts from models, the next challenge is versioning. Many teams learn this the hard way: they change a model, bump an API version, and then realize they’ve lost track of which dataset and training run backed that release. Good ML API versioning prevents that kind of confusion and is central to serious MLOps best practices.

Separating API, model, and data versions

The first rule of ML API versioning is: don’t overload a single version number. You need at least three distinct concepts:

- API version: the contract—request/response schema and documented behavior.

- Model version: the specific trained artifact (architecture + weights).

- Data/training run version: the dataset snapshot and training configuration.

Conflating these makes governance and debugging nearly impossible. A cleaner approach might map something like api_v1 → model_v1.3.2 → dataset_2024‑09‑01. The API stays at v1 even as you iterate models from 1.3.2 to 1.4.0, as long as you don’t introduce breaking contract changes.

Modern model registry tools (e.g., MLflow, Vertex AI Model Registry, SageMaker Model Registry) are built for this. They let you track model lifecycle metadata and bind specific versions to production slots. Google’s report on MLOps and model deployment practices highlights how this mapping is critical for regulated domains where auditability is non‑negotiable; see, for instance, their overview of MLOps pipelines and model deployment.
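Keeping the three version concepts as separate fields is easy to sketch. The `Release` record, the helper functions, and the version values below (including the second dataset date) are illustrative assumptions, not any registry’s actual data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Release:
    api_version: str      # contract version clients see, e.g. "v1"
    model_version: str    # trained artifact, e.g. "1.3.2"
    dataset_version: str  # training-data snapshot, e.g. "2024-09-01"


def lineage(history: List[Release], model_version: str) -> Release:
    """Answer 'which contract and dataset go with this model?' for audits."""
    for release in history:
        if release.model_version == model_version:
            return release
    raise KeyError(model_version)


def contract_changed(old: Release, new: Release) -> bool:
    # Retraining or swapping a model keeps the API version;
    # only a deliberate contract change moves it.
    return old.api_version != new.api_version


# Illustrative history: two models served under the same v1 contract.
history = [
    Release("v1", "1.3.2", "2024-09-01"),
    Release("v1", "1.4.0", "2024-11-15"),
]
```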

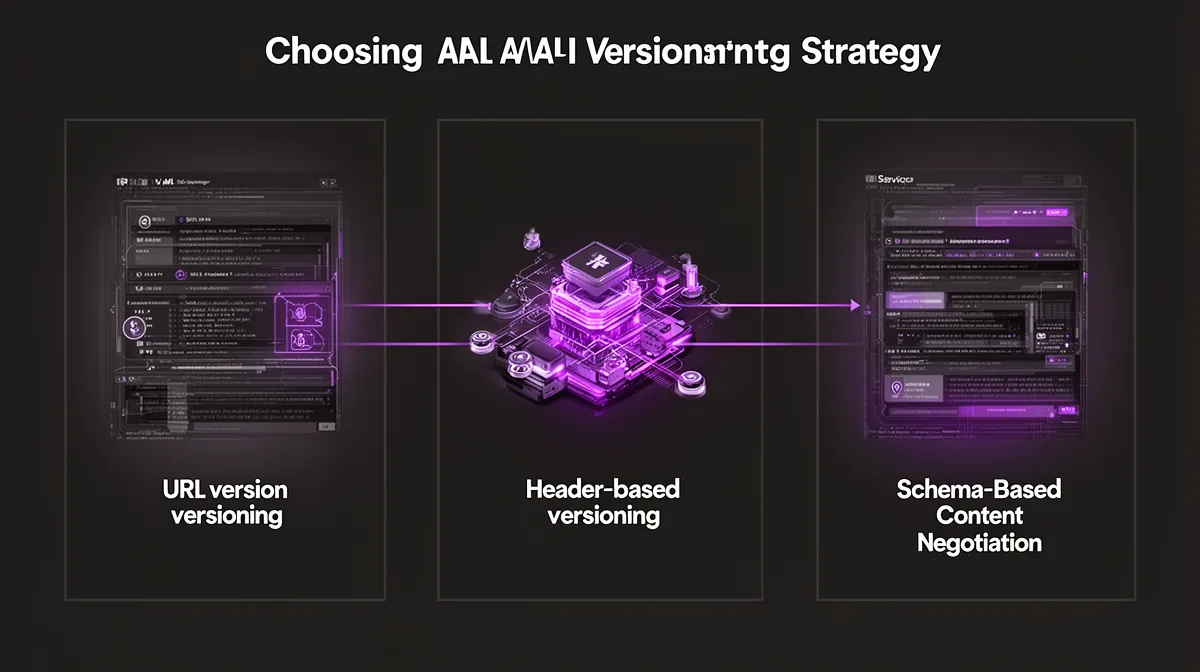

Endpoint versioning: URL vs. header vs. content negotiation

When it comes to API versions, you have a few standard options:

- URL‑based: /v1/predict, /v2/predict

- Header‑based: Accept-Version: 1

- Schema/content‑based negotiation: e.g., Content-Type: application/vnd.company.risk-v1+json

For most ML systems, URL‑based versioning is the most pragmatic choice for API stability. It’s explicit, cache‑friendly, and easy for clients to reason about. Header‑based versioning and content negotiation can be useful for internal services with strict governance, but they make debugging and logging more complex.

The key, especially for machine learning API development services, is consistency. If you choose URL‑based versioning for your major contract changes, stick with it. Use headers for auxiliary information like model version and experiment metadata, not for primary contract selection, unless your organization already standardizes on that approach.
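Here is one way the split between contract selection (URL) and auxiliary metadata (headers) might look in Python. The `X-Model-Version` and `X-Experiment-Id` header names and the set of supported major versions are hypothetical choices, not a standard.

```python
import re
from typing import Optional

_VERSION_RE = re.compile(r"^/v(\d+)/")
SUPPORTED_MAJORS = {1, 2}  # assumed: contract versions still being served


def api_major(path: str) -> int:
    """Read the contract's major version from a path such as /v1/predict."""
    match = _VERSION_RE.match(path)
    if match is None:
        raise ValueError(f"unversioned path: {path}")
    major = int(match.group(1))
    if major not in SUPPORTED_MAJORS:
        raise ValueError(f"unsupported API version: v{major}")
    return major


def response_headers(model_version: str, experiment_id: Optional[str] = None) -> dict:
    # Auxiliary metadata only; it never selects the contract.
    headers = {"X-Model-Version": model_version}
    if experiment_id is not None:
        headers["X-Experiment-Id"] = experiment_id
    return headers
```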

Applying semantic versioning to prediction APIs

Semantic versioning (SemVer) is a good mental model for versioning machine learning APIs, as long as you define what “breaking” means in your context. At a minimum, your API version should follow MAJOR.MINOR.PATCH semantics.

In an ML setting:

- PATCH (1.0.1): bug fixes, internal optimizations, doc clarifications. No schema or meaning changes.

- MINOR (1.1.0 → 1.2.0): strictly additive, non‑breaking changes such as new optional fields or extra metadata.

- MAJOR (1.x → 2.0.0): changes that remove fields, change types, or alter documented semantics.

For example, adding an optional confidence_interval field to your response would be a MINOR bump: old clients still work. Changing risk_score from a 0–100 integer to a 0–1 float with different semantics is a MAJOR bump: downstream rules and dashboards may break. When you log and expose API and model version info in headers or response metadata, A/B analysis and debugging become far easier.
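A pipeline can suggest the minimum bump from a structural schema diff. The sketch below assumes schemas are described as simple `{field: (type_name, required)}` dicts, a simplification of JSON Schema or OpenAPI. It treats required‑ness conservatively and cannot detect semantic changes such as a new score range, so those still need a human call.

```python
def required_bump(old: dict, new: dict) -> str:
    """Suggest MAJOR, MINOR, or PATCH for a schema change.

    Schemas map field name -> (type name, required?). Only structural changes
    are detected; semantic changes must be flagged by a human reviewer.
    """
    for name, (old_type, old_required) in old.items():
        if name not in new:
            return "MAJOR"  # removed field
        new_type, new_required = new[name]
        if new_type != old_type:
            return "MAJOR"  # type change
        if new_required and not old_required:
            return "MAJOR"  # optional -> required breaks callers that omit it
    added = set(new) - set(old)
    if any(new[name][1] for name in added):
        return "MAJOR"      # new required field: older callers will not send it
    return "MINOR" if added else "PATCH"
```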

Aligning API versioning with MLOps pipelines and registries

Versioning logic shouldn’t live in someone’s head or a spreadsheet. It belongs in your deployment pipeline and model registry. An ideal ML model versioning strategy for production APIs wires CI/CD so that when a new model passes evaluation gates, it is bound to an existing API version slot if it’s compatible, or to a new version if not.

Google’s “Machine Learning: The High‑Interest Credit Card of Technical Debt” famously argued that ad‑hoc ML systems accumulate debt fast. Its core insight applies here: without explicit contracts and automation, each new model silently increases system complexity. A robust MLOps pipeline counters this by automatically tagging models, running contract tests, and updating routing configs for continuous model delivery.

Backward Compatible Machine Learning API Design Patterns

Versioning alone isn’t enough. If every model change forced a new API major version, you’d quickly drown in variants. The real leverage comes from backward compatible machine learning API design—schemas and behaviors that can evolve without breaking existing clients.

Designing request and response schemas for change

The most important schema principle is additive‑first evolution. Avoid changing or removing existing fields whenever possible. Instead, add new optional fields with well‑documented semantics.

Say your current response schema is:

{
  "risk_score": 0.87,
  "risk_band": "high"
}

You want to add confidence intervals. A backward‑compatible change would be:

{
  "risk_score": 0.87,
  "risk_band": "high",
  "confidence_interval": {
    "lower": 0.75,
    "upper": 0.92
  }
}

Old clients can ignore confidence_interval. Document it as optional, include default behaviors, and if you ever need to phase something out, use explicit field‑level deprecation markers in schema docs. This pattern of additive schema versioning is at the heart of non‑breaking changes in ML APIs.
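Additive evolution only works if old clients tolerate fields they do not know. Here is a minimal "tolerant reader" sketch in Python, assuming the client models the v1 response as a dataclass (`RiskResultV1` and `parse_tolerant` are hypothetical names):

```python
import json
from dataclasses import dataclass, fields


@dataclass
class RiskResultV1:
    # The response shape an older client was written against.
    risk_score: float
    risk_band: str


def parse_tolerant(raw: str) -> RiskResultV1:
    """Keep only the fields this client knows; ignore anything newer."""
    data = json.loads(raw)
    known = {f.name for f in fields(RiskResultV1)}
    return RiskResultV1(**{k: v for k, v in data.items() if k in known})
```

If a field the client relies on disappears, constructing `RiskResultV1` raises `TypeError`, so genuine breaking changes still surface loudly instead of being silently ignored.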

Managing behavioral changes and statistical shifts

Not all breaking changes are about fields. In ML, behavior shifts are more common than schema shifts. You might recalibrate a model so scores become well‑calibrated probabilities instead of arbitrary scores. If your API just says “score,” clients can’t tell whether 0.8 means “top 20% risk” or “80% probability of default.”

The fix is to publish explicit behavioral guarantees in your API contract: score ranges, monotonicity, and intended interpretations. When you change those, treat it like a semantic breaking change. One safe pattern is to introduce a new field for the new semantics (e.g., risk_probability) while keeping the old semantics available (e.g., as risk_score_legacy) for a deprecation period. That’s how you keep up with model evolution and retraining without torching API stability.
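Serving both semantics during the deprecation period could look like the sketch below. It assumes the service can still compute the legacy score (for example, by keeping the old scoring transformation running next to the recalibrated model); `build_risk_response` and the `legacy_enabled` switch are hypothetical.

```python
def build_risk_response(probability: float, legacy_score: float,
                        legacy_enabled: bool = True) -> dict:
    """Serve new and old semantics side by side during a deprecation window."""
    response = {
        # New field with new, documented meaning: calibrated probability, 0-1.
        "risk_probability": probability,
    }
    if legacy_enabled:
        # Old semantics kept under an explicit legacy name until sunset.
        response["risk_score_legacy"] = legacy_score
        response["deprecation_notice"] = (
            "risk_score_legacy is deprecated; migrate to risk_probability"
        )
    return response
```

Flipping `legacy_enabled` from configuration at the end of the window removes the old field without a code deploy.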

Deprecation policies that don’t surprise clients

Deprecation shouldn’t feel like a trap. Mature APIs have clear policies: when a field or endpoint is marked deprecated, there’s a documented timeline, recommended migration path, and overlapping support window. For ML, that often means running both old and new semantics in parallel for a full business cycle.

Concretely, you can add a Deprecation header or embed a deprecation_notice field in the response, pointing to a changelog URL. Complement that with out‑of‑band communication—emails, dashboards, internal announcements—so that integration teams aren’t surprised. This is table stakes for backward compatibility and aligns with best practices for machine learning API versioning.
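As a sketch of the header side, the helper below emits a `Deprecation` header, a `Sunset` date, and a `Link` to the changelog. The value formats are assumed from the IETF specifications for these headers (RFC 9745 for `Deprecation`, RFC 8594 for `Sunset`); check them against what your gateway and clients actually support. The changelog URL is a placeholder.

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def deprecation_headers(deprecated_at: datetime, sunset_at: datetime,
                        changelog_url: str) -> dict:
    """Build deprecation-related response headers; both datetimes must be UTC."""
    return {
        # Structured-field date: "@" followed by Unix seconds.
        "Deprecation": f"@{int(deprecated_at.timestamp())}",
        # HTTP-date, which format_datetime produces when usegmt=True.
        "Sunset": format_datetime(sunset_at, usegmt=True),
        # Points clients at migration documentation.
        "Link": f'<{changelog_url}>; rel="deprecation"',
    }


example = deprecation_headers(
    datetime(2025, 1, 1, tzinfo=timezone.utc),
    datetime(2025, 7, 1, tzinfo=timezone.utc),
    "https://example.com/changelog/risk-api",
)
```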

Compatibility strategies for high‑risk changes

Sometimes, though, you really do need a new major version. Suppose you redefine what “fraudulent” means in your labeling pipeline—maybe due to regulatory guidance or business strategy. That’s not a small statistical shift; it’s a conceptual change. In those cases, it’s safer to introduce /v2/fraud-score rather than silently mutating /v1.

You can still roll out carefully. Use a blue‑green style deployment: keep the old version live while you bring up the new one behind the scenes, route a small percentage of traffic to it (a canary pattern), compare outcomes, and then gradually migrate clients. This is where careful endpoint versioning strategy meets operational discipline. For a sense of how badly this can go wrong, consider incidents where recommendation or ranking updates caused unexpected user‑visible behavior; outlets like The Verge have covered cases where opaque model updates triggered public backlash.

Supporting A/B Tests, Canary Releases, and Shadow Deployment via APIs

If you’ve ever bolted an experiment onto a production ML system, you know how messy it can get. Evolution‑ready APIs assume experimentation is constant. Routing control and metadata flow become core design elements, not afterthoughts.

Routing strategies for multiple model versions

The foundation is a routing layer that can direct requests to different model versions based on configuration rather than code changes. You might route by percentage (10% to candidate model), by segment (all traffic from a specific country), or by feature flag (enabled for beta customers only). The API endpoint stays the same; the routing config evolves.

This is where robust A/B testing and canary release patterns live. The routing system should log which model served each request and why. That traceability is vital for effective A/B testing of models, debugging regressions, and safe shadow deployments at the prediction‑endpoint layer.
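A config‑driven router that records why each request went where it did might look like the following sketch. The rule order (feature flag, then country segment, then percentage canary), the config keys, and the model names other than `fraud_model_v1.4.0` are assumptions.

```python
import hashlib
import logging
from dataclasses import dataclass

logger = logging.getLogger("model_router")


@dataclass
class RouteDecision:
    model_version: str
    reason: str


def route(customer_id: str, country: str, beta: bool, config: dict) -> RouteDecision:
    """Apply config-driven rules in priority order and log each decision."""
    if beta and config.get("beta_model"):
        decision = RouteDecision(config["beta_model"], "feature_flag:beta")
    elif country in config.get("segment_models", {}):
        decision = RouteDecision(config["segment_models"][country], f"segment:{country}")
    else:
        pct = config.get("canary_pct", 0)
        h = int(hashlib.sha256(customer_id.encode()).hexdigest(), 16) % 100
        if h < pct:
            decision = RouteDecision(config["canary_model"], f"canary:{pct}pct")
        else:
            decision = RouteDecision(config["champion_model"], "default:champion")
    logger.info("customer=%s model=%s reason=%s",
                customer_id, decision.model_version, decision.reason)
    return decision
```

Because the rules live in `config`, moving from a 10% canary to full rollout is a configuration change, and the logged `reason` lets offline analysis slice results by routing rule.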

Encoding experiment metadata in API responses

To make those experiments analyzable, you need metadata in your responses or headers. Typical fields include model_id, model_version, experiment_id, or bucket. With these, you can slice logs and offline metrics by experimental condition.

For example, a response might include:

{
  "risk_score": 0.87,
  "risk_band": "high",
  "meta": {
    "model_version": "fraud_model_v1.4.0",
    "experiment_group": "canary_10pct_eu"
  }
}

Balance is important: you want enough transparency for observability and model governance, but not so much that you leak sensitive implementation details or invite clients to couple themselves to specific model IDs.

Shadow deployments without impacting client behavior

Shadow deployment is one of the most powerful tools for de‑risking new models. The idea is simple: duplicate incoming requests to a candidate model, record its responses, but don’t return them to the client. This lets you evaluate a new model on live traffic without user impact.

At the API layer, this looks like a forked execution path behind the scenes. Clients keep calling /v1/predict as usual. The routing layer fans out the request to both the current champion and the new candidate, then quietly logs the candidate’s output for comparison. This pattern is especially valuable for high‑stakes domains—credit, fraud, healthcare—where you want weeks of shadow data before flipping any switch for continuous model delivery.
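The fan‑out can be sketched with a thread pool: the champion’s result is returned immediately, and the candidate is scored in the background with its output (or failure) recorded for later comparison. Using `concurrent.futures` and a plain list as the log sink are simplifying assumptions; a real deployment would more likely publish to a queue or logging pipeline.

```python
import logging
from concurrent.futures import Executor, ThreadPoolExecutor

logger = logging.getLogger("shadow")


def predict_with_shadow(payload, champion, candidate, shadow_log, executor: Executor):
    """Return the champion's prediction; score the candidate off the response path."""
    result = champion(payload)

    def run_shadow():
        try:
            shadow_log.append({
                "payload": payload,
                "champion": result,
                "candidate": candidate(payload),
            })
        except Exception:
            # A failing candidate is logged but never reaches the client.
            logger.exception("shadow candidate failed")

    executor.submit(run_shadow)  # the caller does not wait for the candidate
    return result


if __name__ == "__main__":
    log = []
    with ThreadPoolExecutor(max_workers=2) as pool:
        answer = predict_with_shadow({"amount": 120.0}, lambda p: 0.2, lambda p: 0.3, log, pool)
    print(answer, log)
```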

Taming Operational Complexity with Multiple Model Versions

By this point, you might be thinking: this sounds like a lot of moving pieces. You’re right. Once you support multiple models, experiments, and versions, the operational surface area grows. That’s exactly why you need a platform mindset, anchored in a strong model registry and disciplined pipelines.

Model registries as the source of truth

A model registry is to models what a source control system is to code. It tracks versions, metadata, lineage, approval status, and deployment history. For each model, you record when it was trained, on what data, with what hyperparameters, and how it performed.

In an ideal setup, your API routing config references logical slots (e.g., fraud_model:champion) rather than hard‑coded model IDs. The registry then binds those slots to specific model versions. Vendor docs, like the MLflow Model Registry documentation, show how powerful this abstraction is for MLOps best practices and model lifecycle governance.
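The slot abstraction, plus a simple rollback on top of it, fits in a few lines. `SlotRegistry` is a hypothetical in‑memory stand‑in, not MLflow’s API; MLflow offers a comparable idea through registered‑model aliases, so consult its documentation for the real calls.

```python
class SlotRegistry:
    """In-memory stand-in for a registry that binds logical slots to versions."""

    def __init__(self):
        self._bindings = {}  # e.g. "fraud_model:champion" -> "1.4.0"
        self._history = {}   # slot -> previously bound versions, most recent last

    def bind(self, slot: str, version: str) -> None:
        if slot in self._bindings:
            self._history.setdefault(slot, []).append(self._bindings[slot])
        self._bindings[slot] = version

    def resolve(self, slot: str) -> str:
        # Routing config only ever asks for the slot, never a hard-coded ID.
        return self._bindings[slot]

    def rollback(self, slot: str) -> str:
        previous = self._history.get(slot)
        if not previous:
            raise LookupError(f"nothing to roll back for {slot}")
        self._bindings[slot] = previous.pop()
        return self._bindings[slot]
```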

Deployment pipelines that respect API contracts

CI/CD pipelines for ML should understand API contracts explicitly. Before any model is promoted to serve a particular API version, it should pass both performance checks and contract checks. The latter verify that the model’s inputs and outputs remain compatible with the API’s schema and documented behavior.

For example, a pipeline stage might replay a snapshot of real traffic against the new model and compare responses to historical ones, ensuring that required fields are still present, types haven’t changed, and key invariants hold. If contract tests fail, the pipeline blocks promotion. This is how serious machine learning API development services prevent accidental breaking changes from ever reaching production.
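A contract‑test gate of this kind might be sketched as follows. It assumes the traffic snapshot is a list of `(request, historical_response)` pairs and that the documented invariants are a 0–1 float `risk_score` and a `risk_band` from a fixed set; both the invariants and the function names are illustrative.

```python
VALID_BANDS = {"low", "medium", "high"}


def contract_violations(response: dict) -> list:
    """List documented-invariant violations for one response (empty means OK)."""
    problems = []
    score = response.get("risk_score")
    if not isinstance(score, float):
        problems.append("risk_score missing or not a float")
    elif not 0.0 <= score <= 1.0:
        problems.append(f"risk_score out of range: {score}")
    if response.get("risk_band") not in VALID_BANDS:
        problems.append(f"invalid risk_band: {response.get('risk_band')!r}")
    return problems


def replay_gate(snapshot, candidate) -> bool:
    """Replay recorded traffic; return False (block promotion) on any incompatibility."""
    for request, historical in snapshot:
        new = candidate(request)
        for field_name, old_value in historical.items():
            # Every field clients saw before must still exist with the same type.
            if field_name not in new or type(new[field_name]) is not type(old_value):
                return False
        if contract_violations(new):
            return False
    return True
```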

Monitoring and rollback across API and model layers

Finally, monitoring has to span both API and model layers. On the API side, you watch latency, error rates, and throughput. On the model side, you monitor performance metrics, drift indicators, and calibration. When something goes wrong, you want fast, targeted rollback.

Rollback might mean reverting the model version bound to an API slot, restoring traffic to a previous model, or, in rare cases, downgrading the API version itself. Clear playbooks owned by your ML platform team ensure that these decisions are executed quickly and safely. This is what mature MLOps best practices look like for continuous model delivery in a high‑change environment.

Modernizing Brittle ML APIs into Evolution‑Ready Interfaces

If you already have brittle ML APIs in production, the goal isn’t to start over from scratch. It’s to progressively migrate toward evolution‑ready interfaces. That begins with an honest assessment of how tightly your current APIs and models are coupled.

Assessing current API and model coupling

Start with an inventory. List all your ML‑powered endpoints, their request/response schemas, and the models they depend on. Note where raw features, thresholds, label names, or internal flags have leaked into the public API.

Then, ask a few hard questions: Can we retrain or swap this model without touching clients? Do clients rely on undocumented score interpretations? Do we know which dataset and training run backs each deployed model? This kind of assessment looks a lot like an AI readiness workshop—we often run structured AI discovery and architecture workshops with engineering and data science teams to make these dependencies visible.

A pragmatic migration playbook

Once you can see the coupling, you can untangle it step by step. A typical playbook to design evolution‑ready machine learning APIs might look like:

- Introduce explicit versioning for existing endpoints, even if you only have /v1 today.

- Define business‑oriented schemas for requests and responses, independent of current model features.

- Insert a routing layer that can call different models and experiments while keeping the API surface fixed.

- Refactor models to consume internal representations derived from the business schema, not from public fields.

For example, we’ve seen legacy fraud detection APIs that directly exposed dozens of rule‑engine fields. Over a few sprints, you can wrap that in a new, cleaner contract, route old clients through a compatibility adapter, and start running a modern ML model behind the same endpoint. With careful use of backward compatibility, ML API versioning, and additive, non‑breaking changes, you can migrate without big‑bang rewrites.
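A compatibility adapter can be as small as two translation functions. The legacy field names (`cust_id`, `txn_amt`, `chan_cd`, `fraud_score`, `rule_block_flag`) and the 0.8 block threshold are hypothetical stand‑ins for whatever the old rule‑engine API exposed.

```python
def legacy_to_new_request(legacy: dict) -> dict:
    """Map an old rule-engine-shaped request onto the new business contract."""
    return {
        "customer_profile": {"customer_id": str(legacy["cust_id"])},
        "transaction": {"amount": float(legacy["txn_amt"]), "channel": legacy["chan_cd"]},
    }


def new_to_legacy_response(response: dict, block_threshold: float = 0.8) -> dict:
    """Rebuild the fields old clients still read from the new response."""
    return {
        "fraud_score": response["risk_score"],
        "rule_block_flag": 1 if response["risk_score"] >= block_threshold else 0,
    }


def handle_legacy_call(legacy: dict, new_endpoint) -> dict:
    # Old clients keep their payloads; the modern model only sees the new contract.
    return new_to_legacy_response(new_endpoint(legacy_to_new_request(legacy)))
```

Because the adapter re‑derives `rule_block_flag` from a threshold, that threshold needs revisiting whenever a new model’s calibration differs from the old one.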

How Buzzi.ai approaches evolution‑ready ML API development

At Buzzi.ai, we treat APIs as long‑term products, not thin wrappers around today’s model. Our enterprise machine learning API development consulting and implementation work starts with understanding your business decisions, then designing contracts that embody those decisions in stable, evolvable interfaces.

We combine architecture design, implementation, and ongoing MLOps support into a coherent offering of evolution‑ready machine learning API development services. In practice, that often means introducing a model registry, building a routing layer that supports experiments and canaries, hardening schemas for backward compatibility, and aligning CI/CD pipelines with your API contracts. The outcome is simple: you can iterate on models weekly without asking your customers or internal teams to constantly rebuild integrations.

Conclusion: Make Your APIs Outlive Your Models

Modern ML systems are inherently dynamic. Models improve, data shifts, and new use cases appear. If your APIs are shackled to any one model’s quirks, you’ll either slow down innovation or accept a constant stream of breaking changes. Neither is sustainable.

The alternative is evolution‑ready machine learning API development: stable, business‑oriented interfaces; clear separation of API, model, and data versions; backward‑compatible schemas and semantics; and routing plus MLOps pipelines that assume continuous model evolution. That’s how you get faster experimentation, fewer integration rewrites, and more predictable governance.

If you want a practical next step, pick one critical ML API and audit it against the patterns we’ve covered here. Where is it coupled to model internals? Where is versioning vague? Then, if you’d like a partner to help you design or modernize your roadmap, we’d be happy to talk about how Buzzi.ai can support your journey into evolution‑ready ML APIs.

FAQ

Why do traditional machine learning APIs break when models are updated or retrained?

Traditional ML APIs often mirror the model’s feature set and output directly in their request and response schemas. When you retrain or replace the model, those features, output ranges, or behaviors change, effectively turning every model update into an API change. Without a stable, business‑oriented contract, clients are forced to adapt every time the model evolves, which is costly and error‑prone.

What is an evolution‑ready machine learning API in practical terms?

An evolution‑ready ML API is a stable interface expressed in business language—entities, decisions, and guarantees—rather than model internals. Behind that surface, a routing layer can swap, retrain, and experiment with multiple models without altering the API contract. This approach lets you iterate quickly on models while keeping client integrations stable and predictable.

How should I choose a versioning strategy for my ML APIs (URL, header, or schema based)?

For most teams, URL‑based versioning (for example, /v1/predict, /v2/predict) is the most straightforward and debuggable choice. Header‑based or content negotiation schemes can work in highly standardized internal environments, but they introduce complexity in logging and observability. Whatever you choose, be consistent and reserve version bumps for meaningful contract changes, not for every model retrain.

What are best practices for backward compatible machine learning API design?

Design your schemas for additive change: add optional fields instead of modifying or removing existing ones, and document clear defaults and deprecation timelines. Treat behavioral shifts—like changing score semantics or calibration—as potentially breaking, and consider introducing new fields rather than silently redefining old ones. Combine these schema practices with robust communication (headers, changelogs, email) so clients have time to migrate without surprises.

How can I update or replace an ML model without forcing all API clients to change?

The key is to decouple your API contract from the model implementation. Use a routing layer that maps a stable endpoint to a configurable model version, and track that mapping in a model registry. As long as new models respect the same request/response schema and behavioral guarantees, you can deploy them behind the same API version, often using A/B tests or shadow deployments to validate performance before full rollout.

When is it acceptable to introduce breaking changes in a machine learning API, and how should I communicate them?

Breaking changes are acceptable when you need to change core semantics, remove problematic fields, or significantly redesign the contract—for example, redefining label meanings or shifting from arbitrary scores to calibrated probabilities. In those cases, create a new major API version, run it in parallel with the old one, and provide a clear migration guide and sunset timeline. Communicate changes through multiple channels and give clients enough overlap to transition safely.

How can semantic versioning be applied specifically to ML models and prediction APIs?

Use semantic versioning for your API contract (MAJOR.MINOR.PATCH) to signal the impact of changes on clients. PATCH covers internal fixes, MINOR covers backward‑compatible additions like optional metadata fields, and MAJOR covers breaking schema or behavioral changes. Separately, keep your model versions and training runs in a model registry, and expose those identifiers in responses or headers for debugging and governance.

What design patterns help decouple API contracts from the underlying ML model implementation?

Key patterns include business‑oriented schemas, a dedicated prediction gateway or routing layer, and model registries as the source of truth. The API talks to the gateway, which selects the appropriate model version based on configuration, experiments, or customer segment. This indirection lets you change models freely while keeping the external contract stable.

How can I support A/B testing or shadow deployment of new models through my ML API layer?

Build routing logic that can direct traffic by percentage, segment, or feature flag, and make sure each request is tagged with the model and experiment identifiers that served it. For A/B tests and canary releases, route a subset of traffic to the candidate model and compare outcomes. For shadow deployment, duplicate requests to the new model but only return the champion’s response, logging the candidate’s output for offline analysis; a good guide to patterns like this can be found in best‑practice resources from feature‑flag providers such as LaunchDarkly.

How does Buzzi.ai approach evolution‑ready machine learning API development for enterprise clients?

We start by analyzing your current ML APIs, models, and data flows to identify coupling and technical debt. From there, we design stable, business‑centric contracts, introduce routing and registry patterns, and integrate these with your MLOps pipelines. Our goal is to give you a platform where models can change weekly while your API layer remains stable—so your teams can focus on delivering value, not chasing breaking changes; you can learn more about our approach on our evolution‑ready ML API services page.