Enterprise AI Consulting That Survives Real Governance

Learn frameworks for stakeholders, decision rights, and implementation so AI strategies actually ship.

Most enterprise AI consulting failures aren’t technical—they’re political. The models perform in the lab, the business case clears your hurdle rate, and the POC demo gets applause. Then the initiative quietly dies in a steering committee, or disappears somewhere between Legal, Risk, Procurement, and Architecture Review.

That’s the gap this article is about. Enterprise AI consulting in large organizations is not primarily a problem of choosing the right algorithm; it’s a problem of enterprise AI strategy, AI governance, and stakeholder management. In other words: decision rights, risk posture, and organizational power matter more than model accuracy.

We’ll walk through concrete frameworks you can actually use: how to map your governance landscape, clarify decision rights, build a stakeholder “power grid”, and structure engagements that end in production systems instead of abandoned decks. Our goal is simple: help you design AI programs that can survive real governance and ship at scale.

At Buzzi.ai, we focus on helping enterprises do exactly that—designing and deploying AI agents, chatbots, and workflows that clear committees and sustain value, not just impress in demos. Let’s start with what makes enterprise AI consulting fundamentally different from generic AI advice.

What Makes Enterprise AI Consulting Different

On paper, most enterprise AI consulting services look the same: assess data, pick use cases, build a model, show a dashboard. In practice, the difference between an initiative that ships and one that stalls is whether your consultant understands committees, policies, and how technology decisions actually get made inside your company.

In a startup, a strong technical argument can be enough. In a bank, insurer, or telco, that same argument must pass through layers of AI governance, risk management, and budget review. Enterprise AI consulting, done properly, expands the scope from models to operating model, decision rights, and politics.

From Models to Decision Rights: Redefining the Scope

Traditional AI consulting assumes the main constraints are technical: data quality, model choice, infrastructure. Enterprise AI consulting has a broader mandate: it must design an enterprise AI strategy that fits your governance structures, funding model, and regulatory obligations. If you don’t address those, even the best recommendations will never be executed.

Consider the contrast. A 200-person SaaS company wants to use AI to prioritize sales leads. The CEO, Head of Sales, and CTO agree in a single meeting. The data team ships a model in weeks, the team experiments in production, and the strategy evolves live. There’s some friction, but almost no formal governance.

Now compare that to a regulated bank trying to use AI for credit risk scoring. Before a single line of code goes live, you’ll encounter Model Risk, Compliance, Legal, Data Governance, InfoSec, and often the central bank’s guidelines. What looks like a simple AI transformation problem is actually a design challenge in decision rights, documentation, and digital transformation governance.

Effective enterprise AI consulting services therefore extend into:

- Designing decision-making structures around AI systems

- Clarifying who owns which approvals (and on what basis)

- Embedding AI into the existing operating model rather than building a parallel universe

Why Technically Strong Recommendations Die in Committees

If you talk to AI teams in large organizations, you hear a familiar story: strong business case, promising prototype, then endless committee cycles. The problem isn’t always a hostile veto; it’s often a slow bleed through risk reviews, unclear sponsorship, and shifting budget priorities.

Typical failure modes include:

- Steering committee deadlock: no one wants to say “no”, but no one is willing to fully own the “yes”.

- Risk vetoes: Model Risk or Compliance blocks deployment because requirements were never addressed upfront.

- Procurement and third-party risk delays: vendor choices trigger processes that add 6–12 months of friction.

- Sponsorship drift: the executive sponsor moves roles or loses political capital midway through the program.

Slides optimize for intellectual elegance. Committees optimize for risk and politics. A deck that looks brilliant in isolation can be dead on arrival if it implicitly assumes a decision-making process your organization doesn’t have.

At one global insurer, for example, a predictive analytics initiative promised to cut claims cycle time by 25%. The model was solid and the proof of concept ran well. But nobody had mapped how Model Risk would assess the system, what documentation they would require, or who had final authority to sign off. The team arrived at Model Risk with a 150-slide deck and no prior alignment. Six months later, the model was still “under review”, the team had been reassigned, and the initiative quietly ended.

Good enterprise AI consulting works backward from these realities. It treats steering committees, risk reviews, and architecture boards as first-class design constraints, not annoying afterthoughts. The question is no longer “what’s the best model?” but “what model, documentation, and operating model will this organization actually approve and run?”

A Governance-First Blueprint for Enterprise AI Strategy

If you want an enterprise AI strategy for a large organization that actually ships, start with AI governance, not use cases. That sounds backwards to many teams, but in practice you save time: you design AI that fits through the pipes your organization already has, instead of repeatedly slamming into walls.

Think of governance as the terrain your AI program must cross. You can’t change the mountains overnight, but you can choose your route wisely—and sometimes build a tunnel.

Map the Governance Landscape Before the Use Case

Before you lock in use cases, you need a map. Which governance bodies exist today—Risk, Compliance, Legal, InfoSec, Data Governance, Architecture Review Boards, Procurement? Which of them can say yes, which can say no, and which can slow you down without ever formally rejecting anything?

We call this a Governance Topography map. It answers three core questions:

- Who can approve AI-related decisions (and on what criteria)?

- Who can veto or materially delay them?

- Which policy and compliance frameworks—data privacy, regulatory requirements, cyber, model risk—must AI initiatives align with?

For a large bank, that map might include: a Model Risk Committee (focused on model governance and validation), a Data Governance Council (data quality, lineage, access), an Architecture Board (integration with core systems), and a Risk & Compliance Committee (overall risk posture and regulatory alignment). Each has its own calendar, documentation expectations, and decision rights.

With a Governance Topography in hand, your AI governance plan stops being abstract. You can plot which committees each AI use case will touch, how often they meet, and where you need early alignment. It also clarifies where to embed key decision rights so you’re not improvising at the eleventh hour.
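To make the map concrete, here is a minimal sketch of a Governance Topography kept as plain data. The committee names follow the bank example above; the meeting cadences and the use-case trigger tags (such as `personal_data` or `customer_decision`) are hypothetical placeholders you would replace with what discovery actually finds.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class GovernanceBody:
    name: str
    can_veto: bool          # False means it can approve or delay, but not formally reject
    meets_every_weeks: int  # hypothetical meeting cadence
    triggers: frozenset     # hypothetical use-case tags that pull this body into review


@dataclass
class UseCase:
    name: str
    attributes: set = field(default_factory=set)


# Bank example from the text; cadences and triggers are illustrative assumptions.
BANK_TOPOGRAPHY = [
    GovernanceBody("Model Risk Committee", True, 4,
                   frozenset({"customer_decision", "regulated_product"})),
    GovernanceBody("Data Governance Council", False, 6,
                   frozenset({"personal_data"})),
    GovernanceBody("Architecture Board", True, 2,
                   frozenset({"core_system_integration"})),
    GovernanceBody("Risk & Compliance Committee", True, 8,
                   frozenset({"regulated_product", "customer_facing"})),
]


def bodies_touched(use_case, topography):
    """Governance bodies a use case must pass through, slowest cadence first."""
    touched = [b for b in topography if b.triggers & use_case.attributes]
    return sorted(touched, key=lambda b: b.meets_every_weeks, reverse=True)


credit = UseCase("credit risk scoring",
                 {"customer_decision", "personal_data", "regulated_product"})
for body in bodies_touched(credit, BANK_TOPOGRAPHY):
    power = "can veto" if body.can_veto else "can approve or delay"
    print(f"{body.name}: meets every {body.meets_every_weeks} weeks, {power}")
```

Sorting by cadence puts the slowest committee first, which is usually where early alignment saves the most calendar time.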

Design Decision Rights and Guardrails Upfront

Decision rights are the skeleton of enterprise AI. Without clarity on who decides what, your AI program becomes a ghost—impressive in theory, insubstantial in practice.

A simple way to approach this is with RACI-style thinking for key AI decisions across Business, IT, Risk, and Compliance. For a credit decisioning system, you might define:

- Business: Accountable for business outcomes and treatment strategies; Responsible for defining acceptable trade-offs between risk and growth.

- Risk/Model Risk: Accountable for model governance and validation; Responsible for approving model classes, monitoring plans, and exception thresholds.

- IT/Data: Responsible for implementation, data pipelines, MLOps, and technical reliability.

- Compliance/Legal: Consulted on policy interpretation, regulatory requirements, and customer impact.
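RACI matrices are easy to get subtly wrong. A common convention is that every decision has exactly one Accountable party and at least one Responsible party. The sketch below encodes the credit decisioning bullets as data and checks that rule. The decision labels are paraphrased from the bullets; the "C" entries for Risk and IT/Data are assumptions added for illustration.

```python
# Decision -> {role: set of RACI letters}, paraphrasing the credit decisioning bullets.
RACI = {
    "business outcomes and risk/growth trade-offs": {
        "Business": {"A", "R"}, "Risk": {"C"}, "Compliance/Legal": {"C"},
    },
    "model classes, monitoring plans, exception thresholds": {
        "Risk": {"A", "R"}, "IT/Data": {"C"}, "Compliance/Legal": {"C"},
    },
    "implementation, data pipelines, MLOps": {
        "IT/Data": {"R"}, "Risk": {"C"},
    },
}


def raci_problems(matrix):
    """Return human-readable problems: each decision needs exactly one A and at least one R."""
    problems = []
    for decision, roles in matrix.items():
        accountable = [role for role, letters in roles.items() if "A" in letters]
        responsible = [role for role, letters in roles.items() if "R" in letters]
        if len(accountable) != 1:
            problems.append(
                f"{decision}: expected exactly one Accountable, found {len(accountable)}")
        if not responsible:
            problems.append(f"{decision}: no Responsible party")
    return problems


for problem in raci_problems(RACI):
    print(problem)
```

Encoded literally, the bullets name a Responsible party for implementation but no Accountable one, and the check flags exactly that gap. Closing it is a decision-rights conversation, not a technical fix.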

Clear guardrails make everything easier: data use boundaries, explainability requirements, human-in-the-loop checkpoints, and escalation paths for exceptions. Instead of being a brake, Risk and Compliance become design partners. You trade some theoretical flexibility for predictability and faster approvals.

Align AI Strategy With Enterprise Architecture and Operating Model

Too many AI roadmaps live in a parallel universe, disconnected from enterprise architecture and the operating model. The result is predictable: pilots that can’t be integrated, duplicated data pipelines, and frustrated IT teams asked to “just make it work”.

A durable AI roadmap sits inside the existing architecture: data platforms, MLOps tooling, API gateways, IAM, logging and monitoring, incident management. Your operating model defines who runs what: which teams own models, who handles incidents, how changes are approved.

We’ve seen both sides. In one company, a consulting-led AI strategy ignored a concurrent data platform program. AI use cases were designed around ad hoc extracts and bespoke infrastructure. When the data platform went live, everything had to be rebuilt. In another, the AI strategy was deliberately tied to a cloud data platform rollout; early use cases stress-tested the platform and created pull from business units. AI transformation and digital transformation reinforced each other instead of competing for funding.

The lesson: your enterprise architecture and operating model are not background noise; they’re the rails your AI program must run on. Good enterprise AI consulting designs for that from day one.

Stakeholder Mapping: The Enterprise AI Power Grid

Governance structures tell you how decisions are supposed to be made. Stakeholder mapping tells you how they’re actually made. In enterprise AI, this is the power grid you must understand if you want reliable delivery instead of random outages.

Effective stakeholder management and enterprise stakeholder alignment are not soft skills bolted onto the end; they’re central design inputs, just like data availability or model choice.

Identify Sponsors, Owners, Blockers, and Operators

A simple way to structure stakeholders is into four roles:

- Executive Sponsors: Senior leaders who can allocate budget, provide air cover, and align AI initiatives with strategy.

- Business Owners: Leaders who own the process or P&L affected by AI (claims, customer service, underwriting).

- Governance Blockers: Risk, Legal, Compliance, InfoSec—any group with veto or delay power.

- Operators: IT, data engineers, ML engineers, frontline managers who must build and run the thing.

Each group sees risk, value, and success differently. Executive Sponsors think about strategic positioning and board narratives. Business Owners care about KPIs and operational pain. Governance Blockers optimize for downside protection and reputation. Operators worry about feasibility, maintenance, and on-call burden.

Too many AI programs court sponsors and owners while ignoring operators until late. That’s how you get beautiful strategy documents that can’t be built within current systems or timelines. Strong stakeholder management brings operators into discovery, not just implementation.

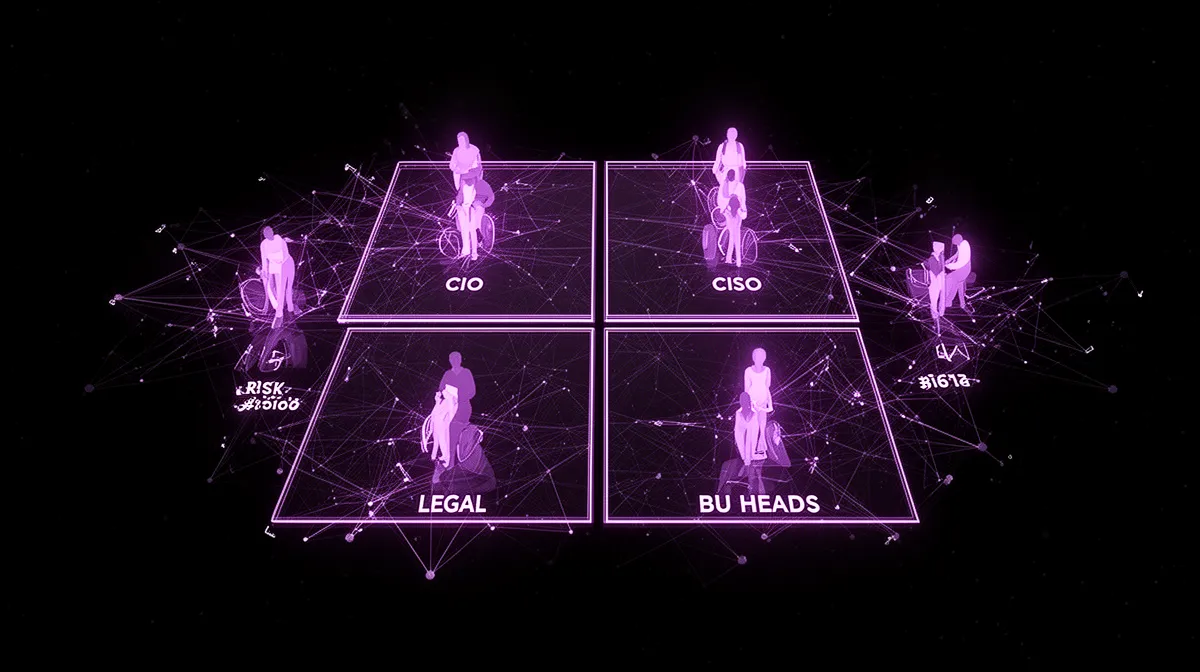

Practical Framework: The AI Power Grid Map

We use a framework we call the AI Power Grid: a 2×2 grid that maps stakeholders by influence (low to high) and sponsorship (opposed to supportive). Plotting individuals and groups onto this grid tells you where to focus engagement.

Think of a large telco launching AI for customer service automation. On the grid you might place:

- CIO: High influence, supportive—wants modernization, sees AI as leverage.

- CISO: High influence, cautious—concerned about data exposure and vendor risk.

- Head of Customer Service: High influence, mixed—interested in efficiency but worried about customer experience and unions.

- Legal: Medium influence, risk-focused—needs clarity on disclosures and liability.

- Frontline Supervisors: Lower formal influence, but critical to adoption—control day-to-day behavior.

An AI consulting firm that understands this grid will “pre-wire” decisions: one-on-one sessions with the CISO to address security controls, workshops with supervisors to co-design workflows, and sponsor briefings that emphasize strategic upside while acknowledging trade-offs. The formal committee then confirms work already done instead of serving as the starting line.
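The grid is simple enough to keep as data alongside your stakeholder map. This is a minimal sketch: the numeric scales, the cut-off values, and every score below are hypothetical guesses for the telco example, and the per-quadrant tactics paraphrase the pre-wiring moves described in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stakeholder:
    name: str
    influence: float    # 0.0 (low) to 1.0 (high); hypothetical scale
    sponsorship: float  # -1.0 (opposed) to 1.0 (supportive); hypothetical scale


# Engagement tactic per quadrant, paraphrasing the pre-wiring moves in the text.
PLAYBOOK = {
    ("high", "supportive"): "sponsor briefing: strategic upside plus explicit trade-offs",
    ("high", "not yet supportive"): "pre-wire in one-on-one sessions before any committee",
    ("low", "supportive"): "co-design workshop; recruit as adoption champions",
    ("low", "not yet supportive"): "keep informed and involve in workflow design",
}


def quadrant(s, influence_cut=0.5, support_cut=0.0):
    """Place a stakeholder in one of the four cells of the 2x2 grid."""
    level = "high" if s.influence >= influence_cut else "low"
    stance = "supportive" if s.sponsorship > support_cut else "not yet supportive"
    return level, stance


# Telco example from the text; the numeric scores are illustrative guesses.
TELCO = [
    Stakeholder("CIO", 0.9, 0.8),
    Stakeholder("CISO", 0.9, -0.3),
    Stakeholder("Head of Customer Service", 0.8, 0.0),
    Stakeholder("Legal", 0.5, -0.2),
    Stakeholder("Frontline Supervisors", 0.3, 0.2),
]

for s in TELCO:
    cell = quadrant(s)
    print(f"{s.name}: {cell[0]} influence, {cell[1]} -> {PLAYBOOK[cell]}")
```

Treating “mixed” (a score of 0.0) as not yet supportive is a deliberate choice: it routes the Head of Customer Service to pre-wiring rather than assuming support that may not be there at the committee.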

Securing and Sustaining Executive Sponsorship

Executive sponsorship is not a logo on a steering committee slide; it’s an ongoing political asset. To get—and keep—it, you need a narrative that matches the sponsor’s world.

For a COO, the story might be about throughput, reliability, and standardized processes. For a CMO, it’s personalization, customer satisfaction, and brand. In both cases, strong AI programs show how the AI roadmap ladders into the sponsor’s existing scorecards and board conversations instead of running as a side project.

Tactically, this means pre-read briefings before key committees, sponsor-specific KPIs, and steering updates focused on upcoming decisions and risks, not just status. Sponsors don’t need a tour of features; they need to know what you need from them to keep momentum. That’s how organizational buy-in survives budget cycles.

Structuring an Enterprise AI Consulting Engagement

Once you understand governance and stakeholders, you can structure enterprise AI consulting services that deliver more than strategy slides. The engagement itself should model the future operating model: clear phases, explicit decision gates, and a path from idea to production.

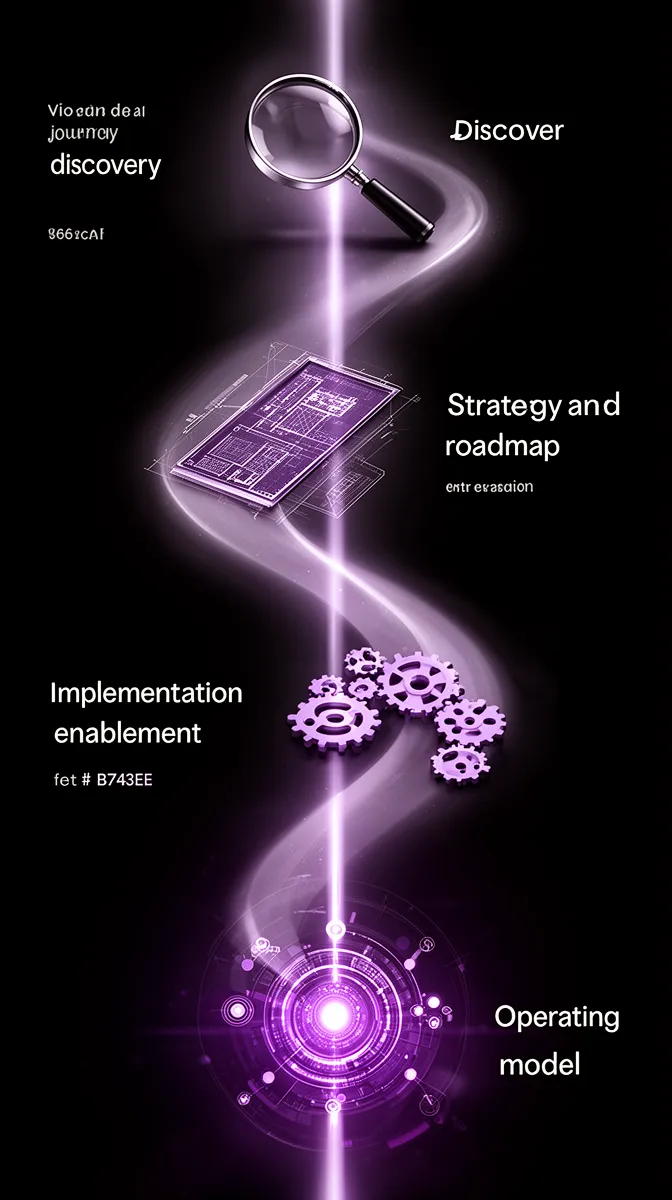

For large organizations, we’ve found a four-phase structure works best: Discovery, Strategy & Roadmap, Implementation Enablement, and Operating Model/Center of Excellence.

Phase 1: Discovery That Includes Governance and Politics

Most AI “discovery” work focuses on data and use cases. In an enterprise, you also need to discover governance, history, and politics. That means interviews not just with business and IT, but with Risk, Compliance, InfoSec, and the chairs of key committees.

Activities in this phase include:

- Governance interviews and committee calendar mapping

- Decision history reviews: which AI or digital initiatives succeeded or failed, and why

- Stakeholder mapping and Governance Topography refinement by business unit

In one engagement, a three-week discovery surfaced a “shadow veto group”: a cross-functional architecture/risk working group that wasn’t on any org chart but informally reviewed all major tech changes. Past AI projects had stumbled into them late and been delayed. Once identified, they became a core design partner instead of a surprise obstacle, changing the odds of success dramatically.

By the end of Discovery, you should have concrete artifacts: a stakeholder map, a governance topography, and an AI readiness assessment that spans both technology and decision processes. These are the foundation for any governance framework you propose later.

Phase 2: Strategy and Roadmap Built Around Approval Paths

A credible AI strategy and AI roadmap in an enterprise can’t be just a list of high-ROI use cases. It must sequence those use cases by both value and “governance difficulty”. Some initiatives will demand heavy Model Risk scrutiny; others can move faster under existing policies.

We typically categorize use cases by:

- Business impact (revenue, cost, risk reduction)

- Data and technical feasibility

- Governance complexity: how many committees, what kind of approvals, regulatory exposure

The roadmap then packages initiatives into “approvable chunks” aligned with budgeting and risk review cycles: internal productivity tools in year one, external customer-facing automation once governance patterns are proven. Artifacts here include a target operating model for AI, RACIs for key decisions, and a model governance framework.

For example, a regulated insurer might start with internal claims triage tools and document processing before tackling fully automated customer-facing underwriting. Value is still generated early, but political and regulatory risk is managed more deliberately. This is also where you design the long-term implementation roadmap so individual projects add up to a coherent program.
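One way to make “governance difficulty” operational is a simple complexity score that bands use cases into waves, with value deciding the order inside each wave. This is a sketch under stated assumptions: the weights, the wave caps, the 1 to 5 scales, and all numbers are hypothetical, and the customer service chatbot entry is an invented placeholder; the other use cases come from the insurer example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    impact: int               # 1-5, business impact (hypothetical scale)
    feasibility: int          # 1-5, data and technical feasibility
    committees: int           # governance bodies that must approve
    customer_facing: bool
    regulated_decision: bool


def governance_complexity(c):
    # Hypothetical weights: one point per committee, two each for external
    # exposure and for regulated decisions.
    return c.committees + 2 * int(c.customer_facing) + 2 * int(c.regulated_decision)


def plan_waves(candidates, caps=(3, 6, float("inf"))):
    """Put each candidate in the first wave whose complexity cap it fits,
    then order each wave by impact x feasibility, highest first."""
    waves = [[] for _ in caps]
    for c in candidates:
        score = governance_complexity(c)
        for i, cap in enumerate(caps):
            if score <= cap:
                waves[i].append(c)
                break
    return [sorted(w, key=lambda c: c.impact * c.feasibility, reverse=True) for w in waves]


CANDIDATES = [
    Candidate("automated customer-facing underwriting", 5, 3, 4, True, True),
    Candidate("internal claims triage", 4, 4, 2, False, False),
    Candidate("customer service chatbot", 3, 4, 2, True, False),  # hypothetical
    Candidate("document processing", 3, 5, 1, False, False),
]

for n, wave in enumerate(plan_waves(CANDIDATES), start=1):
    print(f"Wave {n}: {[c.name for c in wave]}")
```

Underwriting has the highest impact here but lands in the last wave because of its governance load, while the internal tools go first. The point of the sketch is the two-axis ordering, not the specific weights.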

Phase 3: Implementation Enablement, Not Just Slides

Where traditional consulting stops, effective enterprise AI consulting should lean in. Implementation enablement means taking the roadmap and turning it into backlog items, detailed requirements, and concrete handovers to internal teams or delivery partners.

That includes:

- Working with IT/Data to define platform and integration requirements

- Co-creating user stories and acceptance criteria with business and operators

- Designing playbooks for moving from proof of concept to production

In one case, a pilot chatbot for customer service was stuck as a perpetual “experiment”. The missing piece was an enterprise-grade design: integration with CRM, escalation flows to human agents, robust monitoring, and training for supervisors. With a clear implementation roadmap and change plan, the chatbot evolved into a hybrid support system used across regions—not a lab curiosity.

This is also where AI adoption and change management become very concrete: who needs training, what workflows change, and how success will be measured day-to-day.

Phase 4: Operating Model and Center of Excellence Design

Finally, you need an enduring structure. An AI Center of Excellence (CoE) is not just a team of data scientists; it’s the coordination layer for standards, tooling, AI governance, and enablement across the enterprise.

There are two broad patterns:

- Centralized CoE: One core team owns most AI delivery and governance—common in banks and highly regulated sectors.

- Federated CoE: A small central team sets standards and platforms; business units have embedded AI teams—common in global manufacturers and diversified enterprises.

In a global manufacturer, for example, a federated CoE might provide platforms, reusable components, and training while plant-level teams build local optimizations. In a bank, a centralized CoE may be necessary to meet strict model risk and regulatory expectations. In both models, you must define decision rights, funding models, and how the CoE interfaces with Risk, Compliance, and business units.

If you want to see how we structure this in practice, our enterprise AI discovery and strategy consulting work packages these four phases into a cohesive offering designed for large enterprises.

De-Risking Enterprise AI: Compliance, Change, and Metrics

So far, we’ve treated governance and stakeholders as constraints. They’re also your best risk mitigation tools. Proper risk management, change management, and measurement keep AI from becoming either a compliance nightmare or an underwhelming science project.

In mature enterprises, AI programs are judged not only by ROI but by their ability to maintain or improve the organization’s risk profile and reputation.

Regulatory, Risk, and Compliance Are Design Inputs

The worst way to involve Risk and Compliance is at the end. It forces them into a gatekeeper role and practically invites them to say no. Instead, treat policy and compliance requirements as design inputs from day one.

That includes:

- Data privacy regimes (GDPR, CCPA, sector-specific rules)

- Model risk and explainability expectations (especially in finance and healthcare)

- Third-party risk management for vendors and cloud services

A central bank model risk guideline, for instance, may require clear model documentation, validation processes, and ongoing monitoring. Translating a generic “must be explainable” policy into concrete choices might mean favoring gradient-boosting models with explainability techniques over opaque deep learning in certain contexts, plus structured documentation and challenge processes. Supervisory guidance on model risk, such as the Federal Reserve’s SR 11-7 or ECB publications, provides a useful backbone for such model governance approaches.

Similarly, the emerging EU AI Act formalizes categories of AI risk and associated obligations. Aligning your enterprise AI initiatives with these regulatory requirements early makes approvals smoother and avoids last-minute redesigns.

Change Management for AI Adoption at Scale

AI doesn’t just change workflows; it changes identity. People worry: Will this system replace me? Will it monitor me? Will it make me less relevant? Ignoring that is a recipe for quiet resistance and low adoption.

Effective change management for AI adoption includes:

- Change impact analysis: Which roles and processes change, and how?

- Co-design with frontline users: Involve them in workflow design and interface decisions.

- Training and enablement: Not just how to use the tool, but how it changes expectations.

- Feedback loops: Channels to flag issues, suggest improvements, and feel heard.

We’ve seen support teams initially resist AI assistants that propose responses or triage tickets. When the initiative was framed as surveillance or headcount reduction, adoption was minimal. When the team was involved in co-design, and metrics were framed as support (fewer repetitive queries, more time for complex cases), adoption and satisfaction rose sharply.

In some regions, you must also engage unions or works councils early. That isn’t a box-ticking exercise; it’s part of building sustainable organizational buy-in and avoiding delays later in the implementation roadmap.

Defining Success Metrics and Avoiding Vanity KPIs

What does success look like? If your answer is “number of models built” or “POCs completed”, you’re in trouble. Those are activity metrics, not outcomes.

Instead, think in layers:

- Program-level: Contribution to strategic goals (e.g., claims cycle time reduction, NPS improvement, cost-to-serve).

- Use-case-level: Specific impact (e.g., percentage of claims auto-triaged, manual review reductions, uplift in conversion).

- Governance/quality: Bias metrics, stability, override rates, incident counts, regulatory findings.

For an AI claims triage system, you might track average handling time, backlog size, triage accuracy, override frequency, and compliance indicators like audit findings. These metrics keep both business and Risk at the table, because they show that you’re changing decisions and cycle time while maintaining a stable risk profile.
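The claims triage metrics above can be computed directly from routing records. This sketch assumes a hypothetical record schema: the queue the model proposed, the queue a handler actually used, the queue confirmed later by QA review, and handling time. `override_correct_rate` is an illustrative extra, not a metric named in the text; it shows how often overrides turned out to be right, which helps Risk tell healthy human oversight apart from habitual second-guessing.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class TriageRecord:
    ai_queue: str            # queue the model proposed
    human_queue: str         # queue the claim was actually routed to
    correct_queue: str       # queue confirmed later, e.g. by QA sampling (hypothetical)
    handling_minutes: float


def triage_metrics(records):
    """Use-case and governance metrics for an AI claims triage system."""
    if not records:
        raise ValueError("no records")
    n = len(records)
    overrides = [r for r in records if r.human_queue != r.ai_queue]
    return {
        "triage_accuracy": sum(r.ai_queue == r.correct_queue for r in records) / n,
        "override_rate": len(overrides) / n,
        # Share of overrides where the human was right; None when nothing was overridden.
        "override_correct_rate": (
            sum(r.human_queue == r.correct_queue for r in overrides) / len(overrides)
            if overrides else None
        ),
        "avg_handling_minutes": mean(r.handling_minutes for r in records),
    }


# Made-up sample records for illustration only.
RECORDS = [
    TriageRecord("fast_track", "fast_track", "fast_track", 12.0),
    TriageRecord("fast_track", "investigate", "investigate", 40.0),  # good override
    TriageRecord("standard", "standard", "standard", 20.0),
    TriageRecord("standard", "fast_track", "standard", 16.0),        # bad override
]

print(triage_metrics(RECORDS))
```

Keeping accuracy and override behavior in the same report is what lets business and Risk read one dashboard instead of arguing from separate ones.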

Critically, you should wire these metrics into sponsor scorecards and board reporting. When AI impact shows up in the COO’s dashboard or the Risk Committee pack, AI programs stop being “innovation” and become part of the core business.

Where AI starts to rewire workflows, our work in enterprise AI implementation and workflow automation focuses heavily on these metrics, so that automation is visible, governable, and improvable over time.

Choosing an Enterprise AI Consulting Partner

All of this raises an obvious question: how do you pick a partner who understands governance and politics, not just models and glossy demos? The stakes are high; the wrong partner loads you up with shelfware. The right one helps you change how decisions are made, safely.

Questions to Expose Governance and Political Maturity

When choosing an enterprise AI consulting partner, ask questions that force them to show scars, not just success stories:

- “Tell us about an AI initiative that stalled in governance. What went wrong and what did you change next time?”

- “How do you engage Risk, Legal, and Compliance from day one?”

- “What’s your approach to mapping decision rights and approval paths?”

- “Show us sample artifacts: governance maps, RACIs, model governance frameworks.”

- “How do you support implementation beyond strategy—what does enablement look like?”

- “What’s your experience with our regulators or similar regulatory environments?”

Strong answers will be specific, with examples from regulated contexts and clear methods for stakeholder and governance work. Weak answers will circle back to generic AI capabilities, technical tools, and “change management” buzzwords without real process depth. The best enterprise AI consulting firm for governance will treat these topics as core, not peripheral.

How Buzzi.ai’s Enterprise-Native Approach Differs

At Buzzi.ai, we’ve built our approach around the realities of large enterprises. We start with governance-first design, stakeholder mapping, and operating model definition—not just model selection. Our experience building production-grade AI agents, chatbots, and voice systems in governed environments shapes every consulting engagement.

We work as both an AI solutions provider and a strategy partner: clarifying decision rights, aligning with your architecture, and designing AI that can be deployed and maintained, not just demoed. That includes coaching sponsors through committees, co-designing with operators, and embedding AI strategy consulting into the way your organization already works.

In one anonymized example, a regional financial institution had run multiple AI POCs with limited production impact. By mapping their Governance Topography, restructuring how their AI consulting engagements were run, and building AI agents aligned with Risk and Compliance requirements, they moved from scattered pilots to a coherent portfolio of AI systems live in production, each with clear ownership, monitoring, and board-level reporting.

If you’re serious about enterprise AI implementation that can survive real governance, the next step is to assess your own terrain: which committees, which stakeholders, which policies. Then pick partners who can navigate that terrain with you.

Conclusion: Make Governance Your AI Advantage

Enterprise AI consulting, done right, starts with governance, decision rights, and stakeholder realities—not just technology. In large organizations, those are the real levers of success. The algorithms matter, but only inside an architecture of approvals and ownership that lets them operate safely.

Mapping your governance landscape and stakeholder power grid turns opaque committee behavior into something predictable. Structuring engagements around discovery, roadmap, implementation enablement, and operating model design prevents AI from becoming shelfware. When risk management, compliance, and change management become design inputs, you protect both innovation and reputation.

If you want your AI strategies to actually ship and scale, audit your current initiatives against the frameworks in this article. Where are decision rights unclear? Which stakeholders are missing? Then reach out to Buzzi.ai to design an enterprise AI consulting engagement that fits your specific committees, policies, and culture.

FAQ: Enterprise AI Consulting, Governance, and Scale

What is enterprise AI consulting and how is it different from general AI consulting?

Enterprise AI consulting focuses on designing AI programs that work inside the governance, regulatory, and political realities of large organizations. It goes beyond model selection to include decision rights, operating models, and risk frameworks. General AI consulting often stops at POCs and technical recommendations; enterprise AI consulting is about getting safe, compliant systems into production and keeping them there.

Why do technically strong AI recommendations often die in enterprise committees?

They die because they ignore how decisions are actually made. Committees optimize for risk, optics, and accountability, not just ROI or model performance. Without early alignment on governance requirements, decision rights, and sponsorship, even the best AI ideas can be slowed or vetoed by Risk, Legal, Compliance, or Architecture Review Boards.

Which governance frameworks matter most for enterprise AI initiatives?

The most critical frameworks typically include data privacy, model risk management, information security, and sector-specific regulations (like banking or healthcare rules). You also need internal AI governance policies covering model lifecycle, monitoring, and documentation. Mapping these early allows you to design AI solutions that fit existing controls instead of fighting them at approval time.

How should an enterprise AI consulting engagement be structured from discovery to implementation?

A robust engagement usually has four phases: Discovery (including governance and stakeholder mapping), Strategy & Roadmap (sequenced by value and governance difficulty), Implementation Enablement (backlog, requirements, and POC-to-production playbooks), and Operating Model/CoE design. This structure ensures you address politics and process, not just technology, and that there’s a clear path from slide deck to deployed system.

Who are the critical stakeholders in enterprise AI projects and when should they be involved?

Critical stakeholders include Executive Sponsors, Business Owners, Governance functions (Risk, Compliance, Legal, InfoSec), and Operators (IT, data teams, frontline managers). Sponsors and governance should be involved from the very start of discovery to set appetite and constraints. Operators must be engaged early enough to shape feasible designs, not just asked to “implement” a finished strategy.

How can enterprises prevent AI consulting projects from becoming shelfware?

First, insist on an implementation roadmap and operating model design as part of the engagement, not just strategy slides. Second, tie use cases to clear metrics, owners, and governance pathways, so there’s a defined route to production and monitoring. Finally, choose partners who stay involved through enablement and change management, not only during initial workshops.

What change management practices are essential for large-scale AI adoption?

Key practices include change impact analysis, early co-design with frontline users, targeted training and enablement, and visible leadership support. You should also create feedback channels so users can report issues and suggest improvements. In unionized or highly regulated environments, formal engagement with representative bodies is critical to securing lasting buy-in.

How do you design an AI operating model and Center of Excellence for a large organization?

Start by deciding whether a centralized or federated CoE model fits your culture, risk appetite, and structure. Then define clear decision rights: who owns standards, who builds models, who approves changes, who runs platforms. Finally, establish interfaces with Risk, Compliance, and business units so governance is embedded into everyday AI work rather than added as a late-stage hurdle.

What risk, compliance, and regulatory issues must enterprise AI consulting address?

Enterprise AI consulting must address data privacy, model risk, explainability, third-party risk management, cyber security, and industry-specific regulations. It should translate policies into concrete design choices, documentation, and monitoring requirements. Proactive alignment with emerging regulations like the EU AI Act helps avoid rework and reputational risk down the line.

What questions should we ask when choosing an enterprise AI consulting partner?

Ask about their experience with regulators, how they engage Risk and Legal from day one, and examples of stalled initiatives they’ve helped unblock. Request concrete artifacts like governance maps and RACIs, and probe their role in getting systems into production rather than just building POCs. You’re looking for depth in governance and stakeholder work, not only technical prowess.

How does Buzzi.ai’s approach to enterprise AI consulting differ from traditional technical consultancies?

Buzzi.ai combines deep technical capability with a governance-first mindset tailored to large enterprises. We focus on decision rights, stakeholder power grids, and operating models alongside models and infrastructure. Our work—from enterprise AI discovery and strategy consulting to implementation of AI agents and workflow automation—is built to survive real committees and deliver measurable outcomes in production.