Design Enterprise AI Automation Around Governance, Not Code

Design enterprise AI automation around governance, risk, and change management first. Learn how to scale automation safely with an organization‑aware strategy.

Most enterprise AI automation doesn’t die in the lab; it dies in a risk committee, a change-advisory board, or a skeptical business unit meeting. The technical proof-of-concept works, yet the organization quietly refuses to adopt it. In large enterprises—especially regulated ones—governance, risk, and change management, not model accuracy, decide whether automation scales.

That’s what makes enterprise AI automation different from a basic script or SaaS integration. You’re not just wiring an API into a form; you’re introducing a new class of probabilistic “digital employees” into processes that regulators, auditors, and executives already worry about. The question is less “Can this model hit 94%?” and more “Who is accountable when it’s wrong, and how do we prove we’re in control?”

When enterprises ignore this, they get a familiar pattern: successful pilots that never leave the sandbox, an automation program that stalls, and “shadow AI” projects that grow outside official processes. In this article, we’ll lay out a concrete strategy for designing enterprise AI automation that is governance-first and organization-aware. We’ll connect it to frameworks you already know (COBIT, ITIL, ISO 27001, NIST AI RMF, Prosci ADKAR) and show where AI fits. At Buzzi.ai, we build automation this way by default: technology plus a governance spine that lets you scale with confidence.

What Enterprise AI Automation Really Is (and Why It Fails)

Beyond Scripts and Workflows: A Working Definition

Most organizations already have some automation: macros, RPA bots, workflow tools. But enterprise AI automation is a different animal. It’s the coordinated use of AI agents, RPA, and intelligent workflows embedded into core business processes across departments—running continuously, not as isolated experiments.

Think of it as an operating layer that combines intelligent automation, RPA and AI integration, and process orchestration. These AI systems don’t just follow a script; they interpret unstructured data, make probabilistic judgments, and interact across CRMs, ERPs, core banking, and ticketing systems. Humans are still in the loop, but the “default” often becomes automated, with people intervening selectively.

Contrast a simple RPA macro that copies data from one screen to another with an AI-powered claims triage system in an insurance enterprise. The macro is deterministic; if the UI doesn’t change, it just works. The claims triage system classifies claim types, extracts information from PDFs, estimates severity, and routes to the right team. It must handle edge cases, explain decisions, log every step, and defer to humans when confidence is low. That is enterprise automation, not scripting.

Why Technical Success Doesn’t Equal Enterprise Success

In many organizations, the journey looks like this: a team builds a technically solid AI assistant, nails a demo, and shows impressive ROI estimates. Then, the project hits risk, legal, or compliance—and stalls. Or it reaches the Change Advisory Board (CAB), which asks, “What happens when this fails at 2:00 a.m.?” and nobody has a good answer.

This isn’t irrational. AI systems introduce probabilistic outcomes, explainability concerns, sensitive data flows, and new forms of operational risk. Existing regulatory compliance processes were tuned for deterministic software with clear specifications, not models that learn from data. Without a clear AI automation strategy, risk teams see a black box, and their default move is to say “no” or “not yet.”

Ownership is often fragmented too. Business sponsors care about cycle time and revenue. IT cares about integration and uptime. Risk and compliance care about auditability and exposure. Operations cares about workload and SLAs. When no one can clearly say, “I own this AI workflow, its outcomes, and its failure modes,” the safest path is to shelve the rollout.

The Real Root Causes: Organizational, Not Technical

When you unpack failed AI automation programs, the pattern is striking: the tech was usually good enough. It’s the organization that wasn’t ready. The root causes are overwhelmingly non-technical.

You typically see a cluster of issues: weak change management, unclear AI governance, and missing accountability. Executive sponsorship is shallow: people endorse “AI” in principle but won’t take responsibility when an automated decision backfires. Model risk, data governance, and security teams are involved too late, often after key design choices are locked in.

Here are common failure patterns we see:

- “Pilot graveyard”: Dozens of POCs that never reach production because risk and compliance were never in the room.

- “Ownership vacuum”: No single business owner for the end-to-end process, so no one will sign off on go-live.

- “Governance bolt-on”: Models built first, governance policies written later, resulting in rework or rejection.

- “Tool sprawl”: Multiple teams buying their own AI tools, leading to incompatible standards and duplicated effort.

- “Change blindness”: Front-line staff not consulted; they quietly bypass the new AI tools and keep legacy workarounds.

- “Metrics mismatch”: IT reports high uptime while business leaders see no improvement in KPIs and lose trust.

The question “Why do most enterprise AI automation projects fail despite strong technical capabilities?” has a simple answer: because organizational change and stakeholder management were an afterthought. Governance, risk, and change have to be designed in from day one, not bolted on once the demo is done.

Designing an Enterprise AI Automation Strategy Around Governance

Start with Risk, Compliance, and Controls – Not Use Cases

In regulated industries, most AI automation strategies start in the wrong room. They begin with business sponsors brainstorming use cases, not with risk and compliance defining what’s allowed. For a sustainable enterprise AI automation program, the first workshop should include risk, compliance, legal, security, and data protection.

At that level, you’re not debating “chatbot vs. RPA.” You’re mapping the organization’s risk appetite to automation domains: customer communications, payments, KYC, clinical workflows, HR actions, and more. You decide where AI is allowed to fully automate, where it can recommend, and where it can only assist. Those guardrails become the outer boundary of your AI automation strategy.

Consider a healthcare provider. They might define a three-tier control model for AI automation:

- Tier 1 – Fully automated back-office tasks: Claims data extraction, coding suggestions, appointment reminders—low direct patient risk, high-volume, governed via standard IT controls.

- Tier 2 – Supervised clinical triage: AI suggests triage priorities or flags high-risk cases, but clinicians must confirm; all AI recommendations are logged and reviewable.

- Tier 3 – Advisory-only for physicians: AI surfaces literature, guidelines, and similar cases, but never directly recommends treatment; clinical governance boards set boundaries.

This sort of risk-tiering is the starting point for serious risk and compliance alignment. It gives everyone a shared language before you ever discuss a specific model.
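
One way to make this tiering enforceable rather than aspirational is to express it as machine-readable policy that every automated workflow checks before acting. The Python sketch below is a minimal illustration under stated assumptions: the `Tier` and `Mode` names, the ceiling assigned to each tier, and the `is_permitted` helper are hypothetical and simply mirror the healthcare example. A real policy would live in your governance tooling and be approved by the relevant boards.

```python
from enum import Enum


class Tier(Enum):
    BACK_OFFICE = 1        # Tier 1: fully automated back-office tasks
    SUPERVISED_TRIAGE = 2  # Tier 2: AI suggests, clinician must confirm
    ADVISORY_ONLY = 3      # Tier 3: AI surfaces information, never recommends treatment


class Mode(Enum):
    ASSIST = "assist"        # surface information only
    RECOMMEND = "recommend"  # propose a decision for human confirmation
    AUTOMATE = "automate"    # act without per-case human confirmation


# Modes ordered from least to most autonomous.
AUTONOMY_ORDER = [Mode.ASSIST, Mode.RECOMMEND, Mode.AUTOMATE]

# Hypothetical policy: the most autonomous mode each tier allows.
TIER_CEILING = {
    Tier.BACK_OFFICE: Mode.AUTOMATE,
    Tier.SUPERVISED_TRIAGE: Mode.RECOMMEND,
    Tier.ADVISORY_ONLY: Mode.ASSIST,
}


def is_permitted(tier: Tier, requested: Mode) -> bool:
    """True if the requested mode does not exceed the tier's ceiling."""
    return AUTONOMY_ORDER.index(requested) <= AUTONOMY_ORDER.index(TIER_CEILING[tier])


def needs_human_confirmation(mode: Mode) -> bool:
    """In this sketch, only RECOMMEND outputs require a named human to confirm."""
    return mode is Mode.RECOMMEND
```

In this sketch a Tier 2 triage model may recommend but never act alone, so `is_permitted(Tier.SUPERVISED_TRIAGE, Mode.AUTOMATE)` returns `False`.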

Map AI Automation to Existing Governance Frameworks

The good news is you don’t need to invent a new governance framework for AI from scratch. Large organizations already use COBIT for governance of enterprise IT, ITIL for service and change management, ISO 27001 for security, and the NIST AI Risk Management Framework for AI-specific risk. The task is to overlay the AI automation lifecycle onto these structures.

At a high level, COBIT’s decision-rights and controls define who can approve which AI automations and under what conditions. ITIL governs how changes move through environments and how incidents are handled. ISO 27001 underpins data governance, access control, and logging. NIST AI RMF provides the vocabulary and practices for identifying, measuring, and mitigating AI-specific risks like bias, robustness, and explainability.

Imagine an AI-driven KYC automation. Under COBIT (published by ISACA), your board or its delegate defines which roles can approve automation of identity checks and under what risk thresholds. Under ITIL, every new model version is a standard or normal change with documented rollback. ISO 27001 ensures training data, model artifacts, and logs are protected and auditable. NIST AI RMF adds explicit checkpoints for data quality, bias analysis, monitoring, and continuous risk assessment throughout the lifecycle.

When we design a governance-first enterprise AI strategy, we make this mapping explicit. Each stage—design, build, deploy, monitor, retire—has named owners and checklists linked to COBIT, ITIL, ISO, and NIST AI RMF. That makes the process legible to auditors and risk teams, which is half the battle.
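
A lightweight way to make that mapping legible is to model each lifecycle stage as a gate with a named owner and checklist items traced to the framework they satisfy. The sketch below is hypothetical: the owners, the checklist wording, and the `StageGate` structure are illustrative assumptions, not text taken from COBIT, ITIL, ISO 27001, or NIST AI RMF.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class StageGate:
    stage: str
    owner: str                 # named, accountable role for this stage
    checklist: dict            # checklist item -> framework it traces to
    completed: set = field(default_factory=set)

    def missing(self) -> list:
        return [item for item in self.checklist if item not in self.completed]

    def passed(self) -> bool:
        return not self.missing()


# Illustrative gates; owners and items are assumptions, not framework text.
LIFECYCLE = [
    StageGate("design", "Business process owner",
              {"risk tier assigned": "COBIT", "AI-specific risks identified": "NIST AI RMF"}),
    StageGate("build", "Automation CoE lead",
              {"training data access controlled": "ISO 27001", "bias and robustness tested": "NIST AI RMF"}),
    StageGate("deploy", "Change manager",
              {"change approved by CAB": "ITIL", "rollback plan documented": "ITIL"}),
    StageGate("monitor", "Model owner",
              {"drift monitoring active": "NIST AI RMF", "decision logs protected": "ISO 27001"}),
    StageGate("retire", "Business process owner",
              {"decommissioning approved": "COBIT", "artifacts archived": "ISO 27001"}),
]


def first_blocked_stage(gates):
    """Return the first stage whose checklist is incomplete, or None."""
    for gate in gates:
        if not gate.passed():
            return gate.stage
    return None


def items_by_framework(gates):
    """Group checklist items by framework, giving auditors one view per framework."""
    grouped = defaultdict(list)
    for gate in gates:
        for item, framework in gate.checklist.items():
            grouped[framework].append(f"{gate.stage}: {item}")
    return dict(grouped)
```

The `items_by_framework` view is the part auditors tend to care about: it answers “where do you satisfy ITIL?” without anyone reading the whole lifecycle.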

Define a Governed Automation Roadmap, Not a Feature Wishlist

Most automation roadmaps start as feature wishlists: “Let’s automate onboarding, then claims, then collections…” A governance-first roadmap sequences use cases by risk, dependency, and maturity of controls. You start where your enterprise automation capabilities and governance are already strongest.

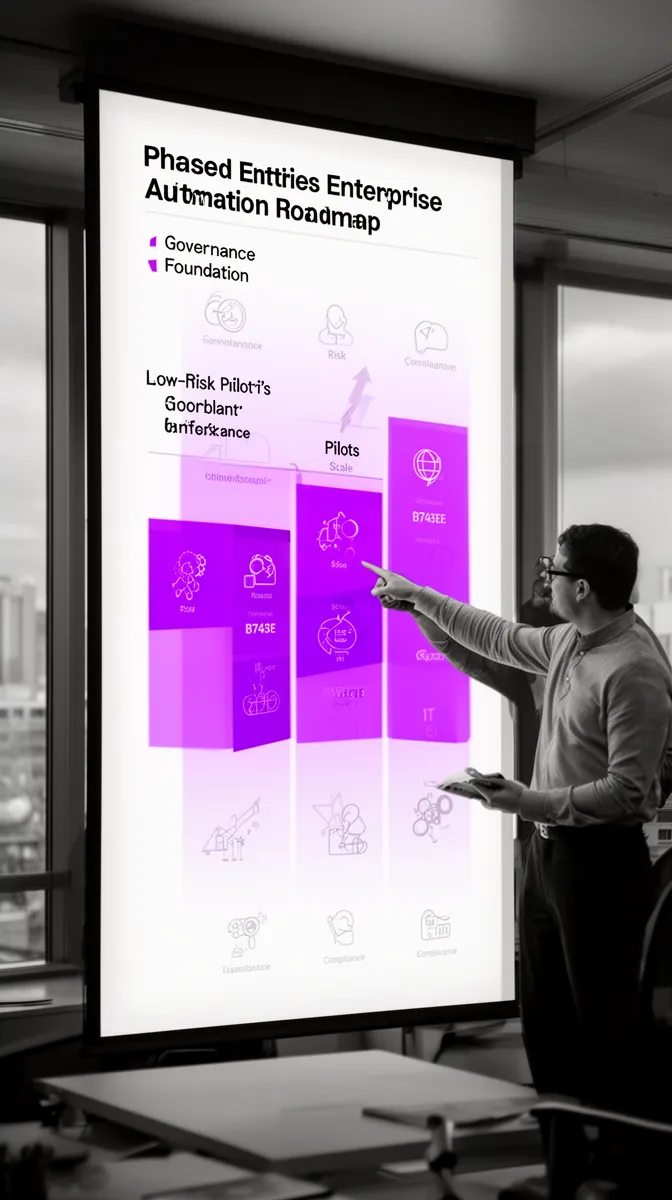

A practical automation roadmap over 12–18 months might look like this:

- Phase 1 – Foundational controls (months 0–3): Establish policies, AI use guidelines, model documentation standards, and monitoring; align with COBIT, ITIL, ISO, NIST AI RMF.

- Phase 2 – Low-risk, high-volume pilots (months 3–6): Internal processes like invoice capture, document classification, or internal helpdesk triage; tighten controls and refine approval workflows.

- Phase 3 – Medium-risk expansion (months 6–12): Customer-facing but reversible processes, like AI-assisted support routing, marketing personalization with strict opt-out, or credit pre-screening.

- Phase 4 – Regulated process automation (months 12–18+): Workflows that materially affect customer outcomes, financial exposure, or patient safety—with battle-tested governance, human-in-the-loop, and model risk management.

In this model, governance milestones—policy approval, model risk sign-off, change-management plans—are first-class items. They sit on the same roadmap as use cases. An enterprise AI automation platform with governance isn’t defined by a single tool; it’s the combination of technology, processes, and controls that mature together.
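
Treating governance milestones as first-class roadmap items means use cases can declare them as dependencies, so nothing starts before its controls exist. This is a minimal sketch with made-up item names drawn loosely from the phases listed earlier; `RoadmapItem`, `ready_items`, and `blocked_by_governance` are hypothetical helpers, not features of any particular planning tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoadmapItem:
    name: str
    kind: str                 # "governance" or "use_case"
    phase: int
    depends_on: tuple = ()    # names of items that must be done first


# Hypothetical items loosely following the four phases above.
ROADMAP = [
    RoadmapItem("AI use policy approved", "governance", 1),
    RoadmapItem("Model documentation standard", "governance", 1),
    RoadmapItem("Monitoring baseline", "governance", 1),
    RoadmapItem("Invoice capture pilot", "use_case", 2,
                ("AI use policy approved", "Monitoring baseline")),
    RoadmapItem("Model risk sign-off process", "governance", 2,
                ("Model documentation standard",)),
    RoadmapItem("AI-assisted support routing", "use_case", 3,
                ("Invoice capture pilot", "Model risk sign-off process")),
]


def ready_items(roadmap, done):
    """Items not yet done whose dependencies, governance or otherwise, are all done."""
    return [
        item.name
        for item in roadmap
        if item.name not in done and all(dep in done for dep in item.depends_on)
    ]


def blocked_by_governance(roadmap, done):
    """Use cases waiting on at least one unfinished governance milestone."""
    kinds = {item.name: item.kind for item in roadmap}
    return [
        item.name
        for item in roadmap
        if item.kind == "use_case"
        and any(kinds.get(dep) == "governance" and dep not in done for dep in item.depends_on)
    ]
```

With nothing done, only the Phase 1 governance items are ready; both use cases show up as blocked by governance, which is exactly the conversation a governance-first roadmap is meant to surface.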

For many enterprises, working with partners that provide enterprise AI automation and workflow automation services accelerates this journey. You get both implementation capacity and governance patterns that have already been proven elsewhere.

Building the Right Organizational Structures for AI Automation

Automation Center of Excellence as Orchestrator

Even the best AI automation strategy fails if no one is accountable for execution. That’s where an Automation or Intelligent Automation Center of Excellence (CoE) comes in. The CoE isn’t just a team of bot builders; it’s the orchestration layer for standards, platforms, and operating models.

The CoE typically owns reference architectures, reusable components, templates, vendor selection, training, and performance dashboards. Crucially, it enforces consistent patterns for intelligent automation and enterprise integration—how AI agents and RPA bots interact with core systems. To be effective, it must include IT, business units, risk, and operations. A CoE made only of technologists is just a development team with a fancy name.

One global manufacturer we worked with set up its CoE reporting jointly to the CIO and COO. That structure sent a clear signal: AI automation wasn’t a side project; it was part of the production system and the operating model. This alignment made it far easier to scale automations across plants and regions without constant renegotiation.

AI Governance Board and Model Risk Committees

As AI automations grow in scope and impact, a formal AI governance board becomes non-negotiable. This body is distinct from the CoE; it doesn’t build anything. Instead, it sets policies, evaluates high-impact use cases, and oversees model governance and operational risk.

Typical members include risk, compliance, data privacy, legal, IT security, and senior business sponsors. A model risk committee may sit under this board, focusing on methodologies, validation, and monitoring of models used in automation. Together, they decide which use cases are allowed, under what constraints, and with what monitoring.

Consider a financial services firm automating parts of its credit decisioning workflow. The AI governance board might approve an AI model to pre-score applications and recommend ranges, but require human approval for final decisions, robust documentation, bias testing, and tight monitoring. This creates a controlled path for AI governance that satisfies internal policies and external regulators.

Connecting CoE, Governance Board, and Business Lines

The CoE and governance board are necessary but not sufficient. They must be tightly connected to business lines that own outcomes. The CoE builds and enables, the governance board controls and approves, and business units are accountable for performance and customer impact.

A typical flow looks like this: a business unit identifies an opportunity for enterprise AI automation. The CoE helps refine it, estimates value, and designs an architecture. The AI governance board evaluates the risk and control setup, then approves or modifies it. Once deployed, the business unit owns the KPI impact; the CoE supports and iterates; the governance board monitors risk indicators and incidents.
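
The hand-offs described above amount to a small state machine in which each transition belongs to exactly one group. The sketch below assumes hypothetical state and group names and adds a `suspended` state to represent the board acting on the risk indicators it monitors. It illustrates how decision rights can be separated; it is not a prescribed workflow.

```python
# Hypothetical transitions: (from_state, to_state) -> group allowed to make the move.
TRANSITIONS = {
    ("proposed", "refined"): "coe",                 # CoE refines, estimates value, designs
    ("refined", "approved"): "governance_board",    # board approves...
    ("refined", "returned"): "governance_board",    # ...or sends back for changes
    ("returned", "refined"): "coe",
    ("approved", "deployed"): "coe",
    ("deployed", "suspended"): "governance_board",  # assumption: board can halt on risk signals
    ("suspended", "deployed"): "governance_board",
}


class Proposal:
    def __init__(self, name: str, kpi_owner: str):
        self.name = name
        self.kpi_owner = kpi_owner  # business unit accountable for outcomes
        self.state = "proposed"
        self.history = []           # audit trail of (from, to, actor)

    def advance(self, new_state: str, actor: str) -> None:
        required = TRANSITIONS.get((self.state, new_state))
        if required is None:
            raise ValueError(f"{self.state} -> {new_state} is not an allowed transition")
        if actor != required:
            raise PermissionError(f"{actor} cannot move {self.state} -> {new_state}; needs {required}")
        self.history.append((self.state, new_state, actor))
        self.state = new_state
```

Note that the business unit never appears as an actor in this sketch: it owns the KPI (`kpi_owner`), while building and approving stay with the CoE and the board respectively.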

When these pieces interlock, you have true organizational alignment. For enterprises that don’t yet have mature teams, partners like Buzzi.ai can act as a “virtual CoE” or specialist arm, providing custom AI agents embedded into governed enterprise workflows alongside governance and compliance support.

Embedding AI Automation into Change Management and ITSM

Using Prosci ADKAR to Drive Adoption, Not Resistance

If AI agents are a new class of employee, then rolling them out is a workforce change problem as much as a tech rollout. The Prosci ADKAR model—Awareness, Desire, Knowledge, Ability, Reinforcement—is a useful lens for how to implement enterprise AI automation with change management that sticks.

Applied to AI automation, ADKAR looks like this: build Awareness of what AI will and won’t do in people’s jobs; create Desire by linking automation to individual KPIs and reducing painful work; provide Knowledge through training on new tools and processes; ensure Ability via hands-on support and clear SOPs; and design Reinforcement through recognition, performance metrics, and continuous feedback.

Imagine a customer service organization rolling out AI-assisted ticket triage. Instead of simply shipping a new interface, change leaders run sessions explaining how AI will prioritize tickets, what agents can override, and how this supports faster resolution. Supervisors get dashboards showing how AI improves their team’s metrics. Adoption is measured and celebrated. This is organizational change by design, not by accident.

For more depth on ADKAR in digital programs, Prosci’s official overview of the ADKAR Model is a useful reference.

Integrating with ITIL and IT Service Management

AI automation also has to live inside your IT service management (ITSM) processes, not alongside them. That’s where ITIL comes back into play. Every AI deployment is a change that must be cataloged, approved, and, if necessary, rolled back.

In practice, this means AI-related changes get explicit categories in your ITSM tools (ServiceNow, Jira Service Management, etc.). You define CAB criteria for AI deployments: required testing, rollback triggers, monitoring plans, and named on-call owners. Incidents that involve AI behavior get tagged and routed according to specialized runbooks, linking machine learning operations (MLOps) with traditional IT operations.

Suppose you’re deploying a new claims triage model. Under ITIL, this is a normal change. It goes to CAB with documentation on model validation, performance, bias testing, and rollback. Your ITSM tool includes dedicated AI incident types (“model degradation,” “data pipeline issue,” “unexpected decision patterns”) and runbooks. Best-practice resources from Axelos on ITIL 4 change management and ITSM can be adapted to include these AI-specific elements.
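
The CAB criteria and AI incident types above can be checked mechanically before a change is scheduled. The sketch below assumes hypothetical evidence keys and queue names; in practice these checks would typically be configured as required fields and routing rules in your ITSM tool rather than written in Python.

```python
from dataclasses import dataclass, field

# AI-specific incident categories named above; assumed to exist as ITSM categories.
AI_INCIDENT_TYPES = {"model degradation", "data pipeline issue", "unexpected decision patterns"}

# Hypothetical evidence a CAB requires before an AI change is scheduled.
REQUIRED_CAB_EVIDENCE = (
    "model_validation",
    "performance_report",
    "bias_testing",
    "rollback_plan",
    "monitoring_plan",
    "on_call_owner",
)


@dataclass
class AIChangeRequest:
    title: str
    evidence: dict = field(default_factory=dict)  # evidence key -> document link or owner name

    def missing_evidence(self) -> list:
        return [key for key in REQUIRED_CAB_EVIDENCE if not self.evidence.get(key)]

    def ready_for_cab(self) -> bool:
        return not self.missing_evidence()


def route_incident(incident_type: str) -> str:
    """Send AI-behavior incidents to a dedicated runbook queue (queue names are hypothetical)."""
    return "ai-runbook-queue" if incident_type in AI_INCIDENT_TYPES else "standard-ops-queue"
```

A change request that lists only validation and rollback would be held back with the remaining four items reported as missing, which gives the submitting team a concrete to-do list instead of a CAB rejection.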

Human-in-the-Loop Oversight and Escalation

For serious AI process automation, “human-in-the-loop” must be more than a checkbox. It’s the explicit design of approvals, overrides, and escalation paths: you’re encoding how and when humans can, and must, intervene.

There are several patterns. In low-risk tasks, humans only review samples or exceptions. In higher-risk domains, the AI proposes decisions but humans must approve them. Escalation paths are defined: when confidence drops, when performance degrades, or when models drift, the system routes to named reviewers within clear SLAs.

Take a loan-approval AI that flags borderline applications for human review. If application volumes spike or model confidence drops below a threshold, cases auto-escalate to senior underwriters. The ITSM system logs these events; model monitoring sends alerts; and any spike in escalations triggers further investigation by risk and the CoE. This turns deployment governance into a living process rather than a static document.
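
The escalation logic in the loan example can be written as a small routing rule plus a rate check. All thresholds below (`review_threshold`, `senior_threshold`, `spike_ratio`, `max_rate`) are illustrative assumptions that a model risk committee would set and revisit, and the function and route names are hypothetical.

```python
def route_application(confidence: float, volume_ratio: float, *,
                      review_threshold: float = 0.85,
                      senior_threshold: float = 0.60,
                      spike_ratio: float = 1.5) -> str:
    """Route one loan application.

    confidence:   model confidence in its recommendation, 0.0 to 1.0
    volume_ratio: current application volume divided by the normal baseline
    """
    # Low confidence or a volume spike: escalate to senior underwriters.
    if confidence < senior_threshold or volume_ratio >= spike_ratio:
        return "senior_underwriter"
    # Borderline confidence: flag for standard human review.
    if confidence < review_threshold:
        return "human_review"
    # Otherwise the AI recommends and an underwriter still approves the final decision.
    return "underwriter_approval"


def escalation_alert(routes: list, max_rate: float = 0.2) -> bool:
    """True when the share of senior escalations exceeds max_rate, prompting risk/CoE review."""
    if not routes:
        return False
    escalated = sum(1 for r in routes if r == "senior_underwriter")
    return escalated / len(routes) > max_rate
```

Even the “high-confidence” path ends in human approval here, consistent with keeping final credit decisions with people; the thresholds only decide how much scrutiny a case receives.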

Stakeholder Mapping, Politics, and Change at Scale

Mapping Power, Interest, and Risk Ownership

At scale, AI automation is political. It redistributes work, budgets, and risk. Ignoring that is an invitation to slow-rolling and quiet resistance.

Effective stakeholder management starts with a simple matrix: power vs. interest, with a third dimension for risk ownership. Who can approve or block automations? Who cares about the outcomes day-to-day? Who will be held responsible if the AI misbehaves? This view cuts across business sponsors, IT, security, risk, compliance, HR, and where relevant, unions or works councils.

Consider a bank automating KYC checks. Stakeholder mapping might reveal that compliance leaders have high power and high interest—they are potential veto players. Operations leaders have high interest but moderate power. IT has high power over feasibility but less direct interest in KYC specifics. Organizational alignment means recognizing these dynamics early, not when you’re already fighting for production approval.
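
A power/interest grid with a risk-ownership flag can be kept as simple structured data, so it is revisited as the program evolves rather than drawn once on a whiteboard. The 1 to 5 scores and the threshold are assumptions for illustration, and the quadrant labels follow the common power/interest grid convention (manage closely, keep satisfied, keep informed, monitor).

```python
from dataclasses import dataclass


@dataclass
class Stakeholder:
    name: str
    power: int         # 1 (low) to 5 (high): ability to approve or block
    interest: int      # 1 (low) to 5 (high): day-to-day stake in outcomes
    risk_owner: bool   # held responsible if the AI misbehaves


def engagement(s: Stakeholder, threshold: int = 4) -> str:
    high_power, high_interest = s.power >= threshold, s.interest >= threshold
    if high_power and high_interest:
        strategy = "manage closely"
    elif high_power:
        strategy = "keep satisfied"
    elif high_interest:
        strategy = "keep informed"
    else:
        strategy = "monitor"
    # Risk owners join design reviews regardless of quadrant.
    return strategy + " + design reviews" if s.risk_owner else strategy
```

Scoring the KYC example with assumed values, compliance (5, 5, risk owner) lands in “manage closely + design reviews”, operations (3, 5) in “keep informed”, and IT (5, 2) in “keep satisfied”.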

Common Political Blockers—and How to Mitigate Them

The political blockers in enterprise AI automation are remarkably consistent. Fear of job loss leads to frontline resistance. Turf wars between IT and business units slow decisions. KPIs misaligned with automation outcomes prompt passive-aggressive behavior: people quietly work around the new system. “Shadow AI” projects pop up in teams that don’t trust central governance.

Mitigation is possible but requires intent. Joint sponsorship between IT and business, with shared KPIs, reduces turf friction. Governance charters clarify who owns what. Transparent impact assessments show where automation will change roles, and HR co-designs new responsibilities and progression paths. A digital transformation office often plays the integrator role, aligning projects, people, and incentives.

We’ve seen both sides. In one case, a firm rolled out AI-driven scheduling without consulting HR or unions. The project stalled after backlash. In another, leaders involved HR early, mapped role changes, and created new “AI supervisor” positions. The second rollout moved slower at first—but then scaled smoothly. Sometimes the best change management move is to pause, address governance or stakeholder misalignment, and only then resume rollout.

Patterns for Regulated Industries and High-Risk Domains

Safe Adoption in Finance and Insurance

Finance and insurance live at the intersection of innovation and scrutiny. Constraints like model risk management, fair lending rules, anti-money laundering obligations, and audit trails define the boundaries for AI automation in these industries. That doesn’t mean “no automation”; it means “governed automation.”

A practical pattern is to start in back-office domains: document processing, reconciliation, exception handling in operations. AI helps extract data, classify cases, and propose actions, but humans remain responsible for final decisions. As your model governance and monitoring mature, you selectively increase automation in customer-facing decisions under tighter controls.

For example, an AI-assisted anti-fraud workflow might surface suspicious transactions, cluster patterns, and suggest risk scores. Investigators still decide whether to freeze accounts or file suspicious activity reports. Logs capture every step, enabling risk and compliance teams to review both system and human behavior. Bodies like the BIS and the EBA have begun issuing guidance on AI in financial services; aligning with that guidance and internal model risk policies is essential.

Healthcare and Life Sciences Considerations

Healthcare and life sciences operate under constraints like HIPAA, GDPR, clinical validation, and sometimes medical device regulation. Here, healthcare AI development must be tightly woven with data governance and clinical oversight. Patients and clinicians need to trust that automation won’t compromise safety or privacy.

The pattern mirrors finance: begin with workflows one or two steps removed from direct clinical decisions. Use AI for documentation, prior authorization, referral routing, and scheduling. Make sure all data is handled in line with regulatory compliance requirements, with de-identification where appropriate and strict access controls.

A hospital might deploy AI automation for referral triage. The model prioritizes cases based on urgency and specialty, but clinicians retain authority to override and re-prioritize. A clinical governance board reviews performance, equity impacts, and complaint patterns. Guidance from bodies like the FDA, EMA, or WHO on AI in healthcare provides important guardrails to integrate into your AI governance processes.

Public Sector and Critical Infrastructure

Public sector and critical infrastructure add their own constraints: transparency, public accountability, procurement rules, and national security or safety standards. Here, enterprise AI automation must withstand not just audits, but also public scrutiny.

A common pattern is to pilot in internal process optimization and citizen service triage, where errors are reversible and appeal mechanisms are clear. For instance, a city may use AI to prioritize service requests or route citizen inquiries, while always allowing humans to review and override decisions on request.

Aligning with frameworks like NIST AI RMF, ISO standards, and national or regional AI ethics guidelines is critical. Regulators and professional bodies increasingly publish sector-specific AI guidance for public services and critical infrastructure. Using those as constraints for your automation design keeps you ahead of scrutiny rather than reacting to it.

Measuring Enterprise AI Automation Success Beyond Accuracy

Operational and Business Outcomes

Focusing on model accuracy alone is a trap. For enterprise AI automation, the real question is: did the process improve? Did customers notice? Did risk stay within appetite?

Core metrics should include cycle time reduction, error rate reduction, capacity freed, SLA adherence, and cost-to-serve. Each automation initiative should tie to a clear business KPI within your broader enterprise AI strategy, owned by a named leader. Counting “number of models deployed” is a vanity metric unless it tracks to these outcomes.

An insurance firm automating parts of claims processing, for example, might track average handling time, first-contact resolution rate, and NPS alongside financial outcomes. That is how you measure real automation ROI, not just technical performance.
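
The core outcome metrics can be computed from before-and-after snapshots of the same process. The sketch below is illustrative: `ProcessSnapshot` and `outcome_report` are hypothetical names, and any figures you feed it should come from your own process data, not from this example.

```python
from dataclasses import dataclass


@dataclass
class ProcessSnapshot:
    avg_cycle_time_hours: float
    error_rate: float     # errors / cases
    sla_met: int          # cases resolved within SLA
    cases: int
    total_cost: float     # fully loaded cost for the period

    @property
    def sla_adherence(self) -> float:
        return self.sla_met / self.cases

    @property
    def cost_to_serve(self) -> float:
        return self.total_cost / self.cases


def outcome_report(before: ProcessSnapshot, after: ProcessSnapshot) -> dict:
    def pct_change(b: float, a: float) -> float:
        return round(100 * (a - b) / b, 1)

    return {
        "cycle_time_change_pct": pct_change(before.avg_cycle_time_hours, after.avg_cycle_time_hours),
        "error_rate_change_pct": pct_change(before.error_rate, after.error_rate),
        # SLA adherence is already a rate, so report the change in percentage points.
        "sla_adherence_change_pts": round(100 * (after.sla_adherence - before.sla_adherence), 1),
        "cost_to_serve_change_pct": pct_change(before.cost_to_serve, after.cost_to_serve),
    }
```

Negative percentages mean the metric went down, which is the desired direction for cycle time, error rate, and cost-to-serve; each line of the report should map to a named KPI owner.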

Adoption, Trust, and Governance Health Metrics

Success also depends on adoption and governance health. If front-line staff bypass the AI tools, your enterprise automation is a mirage. If governance processes are so slow that new automations take a year to approve, your competitive edge erodes.

Track metrics like percentage of staff actively using AI tools, rate of opt-outs or overrides, and reduction in backdoor workarounds. On the governance side, measure number and severity of incidents, time-to-approve new automations, compliance audit findings, and time-to-detect model drift. These become your AI governance and deployment governance health indicators.
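
Several of these adoption and governance metrics fall out of data most programs already log. This sketch assumes a hypothetical `Decision` record per AI-assisted case and a list of submission and approval dates for new automations; the field and function names are illustrative.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass
class Decision:
    user: str          # staff member who handled the AI-assisted case
    overridden: bool   # True if the user rejected or changed the AI output


def adoption_metrics(decisions: list, eligible_staff: set) -> dict:
    active = {d.user for d in decisions} & eligible_staff
    return {
        "active_usage_pct": 100 * len(active) / len(eligible_staff) if eligible_staff else 0.0,
        "override_rate": sum(d.overridden for d in decisions) / len(decisions) if decisions else 0.0,
    }


def median_approval_days(approvals: list[tuple[date, date]]) -> float:
    """approvals: (submitted, approved) date pairs for new automations."""
    return median([(approved - submitted).days for submitted, approved in approvals])
```

The median is used for approval time so that one unusually contentious use case does not hide an otherwise fast approval process.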

Imagine a leadership dashboard showing automation coverage, business KPIs, user satisfaction, incident trends, and approval cycle times in one place. Organizations that can say “we approve changes safely and fast” convert governance from a tax into a competitive advantage.

When to Pause: Using Metrics to Decide to Slow Down

There are moments when the right strategic move is to slow or pause rollout. Metrics help you see them. Spikes in incidents, rising escalations, high override rates, or negative staff sentiment are all signals that governance or change management hasn’t kept up with automation.

Consider a telecom expanding AI automation for billing disputes. If escalations and complaints rise sharply, the responsible move is to pause further rollout, investigate root causes, and adjust governance, training, or thresholds before continuing. Ignoring these signals risks customer trust and regulatory scrutiny.
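
A pause rule can be made explicit by comparing current signals against a baseline with agreed tolerances. The metric names and tolerances here are assumptions for illustration. The point is that the trigger is written down and agreed in advance rather than debated after complaints spike.

```python
def pause_signals(current: dict, baseline: dict, tolerances: dict) -> list:
    """Metrics whose relative increase over baseline exceeds the agreed tolerance.

    tolerances maps metric name -> maximum allowed relative increase (0.25 = +25%).
    """
    breached = []
    for metric, max_increase in tolerances.items():
        base, now = baseline[metric], current[metric]
        if base == 0:
            rose_too_much = now > 0  # any occurrence counts when the baseline is zero
        else:
            rose_too_much = (now - base) / base > max_increase
        if rose_too_much:
            breached.append(metric)
    return breached


def should_pause(current: dict, baseline: dict, tolerances: dict) -> tuple:
    """Return (pause?, reasons) so the decision and its rationale are logged together."""
    reasons = pause_signals(current, baseline, tolerances)
    return bool(reasons), reasons
```

In the billing-disputes scenario, escalations per thousand disputes rising from 12 to 20 against a 25% tolerance would trigger a pause, while a small uptick in complaints alone would not.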

For us, this is where governance and compliance consulting for enterprise AI automation matters. Partners can help interpret these signals, run structured AI discovery and governance readiness assessments, and adjust the roadmap so that automation scales sustainably instead of explosively.

Conclusion: Build Automation on a Governance Spine

If there’s one lesson from the last decade of AI in large organizations, it’s this: most failures are organizational, not technical. Enterprise AI automation fails when governance, risk, and change are afterthoughts. It succeeds when those elements are the starting point.

Design your automation roadmap around risk and compliance appetite, governance frameworks like COBIT, ITIL, ISO, and NIST AI RMF, and structured change approaches like Prosci ADKAR. Establish an Automation CoE and AI governance board that work hand-in-hand with business lines, and embed human-in-the-loop oversight into both workflows and ITSM. Treat governance not as friction, but as the spine that lets you move faster without breaking.

If you’re already piloting AI or planning to, this is the moment to reassess through a governance-first lens. Buzzi.ai provides governance and compliance consulting for enterprise AI automation, combining technical depth with organizational awareness. If you want to scale automation safely and credibly, talk to Buzzi.ai or start with our governance-focused workflow and process automation services.

FAQ

What is enterprise AI automation and how is it different from basic workflow or RPA automation?

Enterprise AI automation is the coordinated use of AI agents, models, RPA, and intelligent workflows embedded into core business processes across multiple departments. Unlike basic workflow or RPA, it handles unstructured data, makes probabilistic judgments, and interacts across many systems, often with human-in-the-loop oversight. It also requires formal governance, risk controls, and change management to operate safely at scale.

Why do many technically successful AI automation pilots fail at enterprise rollout?

They fail because the organization wasn’t prepared, even if the model was. Risk, compliance, and legal teams are often engaged too late, and there is no clear accountability for outcomes or failure modes. Without governance structures, change management, and stakeholder alignment, the safest organizational response is to slow-roll or block rollout—even if the technology works.

How can we align enterprise AI automation with COBIT, ITIL, ISO 27001, and NIST AI RMF?

You align by overlaying the AI lifecycle on top of these frameworks rather than treating AI as a parallel track. COBIT defines decision rights and controls for approving AI use cases; ITIL governs how model changes and incidents are handled; ISO 27001 secures data and model artifacts; and NIST AI RMF adds AI-specific risk management practices. When each lifecycle stage has mapped owners and checkpoints, governance becomes transparent and auditable.

What does a governance-first enterprise AI automation roadmap look like?

A governance-first roadmap starts with establishing policies, standards, and monitoring aligned to your existing governance frameworks. It then sequences use cases by risk and dependency: low-risk, high-volume internal processes first; medium-risk customer-facing workflows next; and only then high-risk regulated processes. Governance milestones—policy approvals, model risk sign-off, change plans—are treated as core deliverables alongside the technical builds.

How do we integrate Prosci ADKAR into AI automation change management?

You apply ADKAR to the people side of AI automation rollouts. Build Awareness of what AI will change in roles and processes; create Desire by linking automation to reduced pain points and better performance; provide Knowledge through targeted training; support Ability with hands-on support and clear SOPs; and reinforce new behaviors with recognition and metrics. Prosci’s own resources on ADKAR and digital transformation can help you design this approach, and partners like Buzzi.ai can embed it into your rollout plans.

What roles should an Automation Center of Excellence play in large organizations?

An Automation CoE should orchestrate standards, platforms, reusable assets, and delivery models for AI and RPA. It bridges IT, business units, and risk, ensuring consistent architectures, quality, and compliance across automations. In many enterprises, it also supports training, helps prioritize use cases, and reports on automation performance and risk posture to leadership.

How can regulated industries like finance and healthcare safely adopt enterprise AI automation?

They adopt safely by starting with lower-risk domains, embedding strong model governance, and aligning tightly with sector regulations and professional guidance. Patterns include using AI for back-office or documentation tasks first, keeping humans in the loop for higher-risk decisions, and maintaining detailed audit trails. A governance-first approach, supported by expert partners, lets them scale automation while staying inside regulatory guardrails—see Buzzi.ai’s AI discovery and workflow automation services as one example of that support.

How should we design human-in-the-loop oversight and escalation for AI automation workflows?

You start by defining where humans must approve, can override, or only monitor AI decisions, based on risk level. Then, you encode these rules into workflows and ITSM systems with clear escalation paths, SLAs, and monitoring triggers for anomalies or drift. The goal is to ensure that when AI is uncertain or out-of-policy, the right human sees the right case at the right time.

What metrics beyond model accuracy should we use to measure AI automation success?

Look at operational and business metrics like cycle time, error rates, capacity freed, SLA adherence, customer satisfaction, and cost-to-serve. Track adoption and trust—usage rates, override rates, and workarounds disappearing—and governance health, including incidents, approval times, and audit outcomes. These metrics collectively show whether automation is delivering real value within acceptable risk, not just performing well in isolation.

When is it better to slow or pause AI automation rollout to fix governance or stakeholder issues first?

You should pause when leading indicators show strain: rising incidents or escalations, high override rates, negative staff feedback, or new regulatory concerns. Continuing to scale in those conditions amplifies risk and can damage trust with employees, customers, and regulators. Fixing governance gaps, retraining users, or renegotiating stakeholder alignment before expanding use cases usually pays off in long-term stability and credibility.