Image Recognition Services That Go Far Beyond Basic APIs

Most image recognition services just resell cheap APIs. Learn how to spot providers that deliver domain-specific models, edge performance, and real workflow impact.

If you’re paying a premium for image recognition services that look a lot like the stock demo from a major cloud provider, you’re probably buying a markup—not a solution. Foundation model APIs have turned generic computer vision into a cheap utility. The interesting question now isn’t “Can we recognize this object?” but “Can we do it fast enough, reliably enough, and specifically enough to change a business decision?”

That’s where most buyers get stuck. They’re quoted serious money for what turns out to be basic labels and bounding boxes, bolted to a dashboard, with the real work of integrating into their workflows still left to their own teams. Latency to the cloud is too high for real-time image processing, domain accuracy is shaky, and the promised ROI never quite materializes.

In this article, we’ll walk through a practical way to evaluate modern computer vision services. You’ll see what’s now commoditized, where domain-specific models and edge deployment still create real advantage, and how to judge if a provider is doing more than wrapping foundation models for vision with a nicer UI. We’ll also outline when it’s smarter to build directly on those foundation APIs yourself—and where a partner like Buzzi.ai, focused on specialized, production-grade image recognition, can actually change your cost and decision curves.

Why Basic Image Recognition Services Are Now a Commodity

How Foundation Models Flattened the Vision Landscape

Over the last few years, foundation models for vision have quietly flattened the capabilities of most general-purpose image recognition services. Models like CLIP and Segment Anything, along with the big cloud vision APIs, deliver surprisingly strong out-of-the-box image analysis. You get usable object detection, image classification, simple OCR and document vision, and even some video analytics without touching a GPU yourself.

The result is that the baseline for object detection and image classification is now standardized. Whether you call Google, AWS, Azure, or one of several newer foundation model vendors, you’ll see similar demos: classify an image, detect a handful of common objects, extract text from a sign. As recent market analyses from firms like McKinsey note, this commoditization is spreading across AI workloads, and vision is no exception.

That standardization also drives price compression. When multiple hyperscalers compete to sell roughly equivalent image analysis APIs, per-call pricing drops and margins thin. From a buyer’s perspective, generic image recognition, whether bought as a packaged service or directly as a foundation model API, now looks a lot like electricity: reliable, cheap, and undifferentiated.

What Commodity Image Recognition Actually Gets You

So what do you really get from commodity vision AI platforms? In practical terms, you get labels (“car”, “person”, “bottle”), bounding boxes, generic categories (“food”, “electronics”), basic content moderation, and simple OCR. Some platforms extend this into templated video analytics like people counting or motion zones.

For many use cases, that’s perfectly fine. Internal analytics dashboards, early product prototypes, or non-critical monitoring can run happily on off-the-shelf image analysis and video analytics with minimal engineering. When you just need to know roughly how many customers passed through a doorway today, you don’t need a custom model.

The cracks appear when you ask these systems to handle edge cases and domain-specific subtleties. Think of retail shelf analytics: a generic model might detect “bottle” and “box,” but it won’t reliably distinguish between very similar SKUs whose packaging differs by a color band or a regulatory label. In manufacturing, a generic defect detector may see “scratch” but miss micro-anomalies like tiny coating bubbles or hairline cracks that actually drive warranty risk and rework.

Why Paying a Premium for Commodity Capabilities Makes No Sense

Despite this commoditization, many “image recognition services” on the market are little more than wrappers around those same cloud APIs. They add a basic dashboard and workflow UI, call the provider’s object detection endpoint, and then add a healthy markup on top. The core model IP and infrastructure still live with the cloud vendor.

Buyers often discover this too late. We’ve heard more than one story of a team paying enterprise prices for what they believed were custom models, only to see vision.googleapis.com or similar domains in the logs. What they bought wasn’t differentiated modeling or engineering; it was API integration plus some reporting widgets.
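If you have access to outbound request logs from a vendor's on-prem component or proxy, a quick scan can support this kind of check. The sketch below is a minimal illustration, not an audit tool: the hostname patterns cover a few well-known cloud vision endpoints, the vendor names are just labels, and the sample log lines are hypothetical. A match only tells you that a cloud API is being called, not how much value the provider adds around it.

```python
import re

# Hostname patterns for a few well-known cloud vision endpoints.
# This list is an illustrative assumption; extend it for the vendors you care about.
CLOUD_VISION_PATTERNS = {
    "Google Cloud Vision": re.compile(r"\bvision\.googleapis\.com\b"),
    "Amazon Rekognition": re.compile(r"\brekognition\.[a-z0-9-]+\.amazonaws\.com\b"),
    "Azure AI services": re.compile(r"\b[a-z0-9-]+\.cognitiveservices\.azure\.com\b"),
}


def find_cloud_vision_calls(log_lines):
    """Return {vendor: count} for log lines that mention a known endpoint."""
    counts = {}
    for line in log_lines:
        lowered = line.lower()
        for vendor, pattern in CLOUD_VISION_PATTERNS.items():
            if pattern.search(lowered):
                counts[vendor] = counts.get(vendor, 0) + 1
    return counts


# Hypothetical log lines, for illustration only.
sample_logs = [
    "2024-01-01T10:00:00Z POST https://vision.googleapis.com/v1/images:annotate 200",
    "2024-01-01T10:00:01Z POST https://inference.internal.example/v2/detect 200",
    "2024-01-01T10:00:02Z POST https://rekognition.us-east-1.amazonaws.com/ 200",
]
print(find_cloud_vision_calls(sample_logs))
```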

The core thesis is simple: you should only pay more when a provider materially changes your decision quality, latency profile, or cost curve. If you’re just getting slightly prettier labels, you’re overpaying. Real differentiation appears when actionable insights emerge—when the system is tuned to your domain, deployed where it needs to run, and wired into the workflows that actually move money or manage risk.

Where Image Recognition Services Still Create Real Advantage

Given that the basics are commoditized, where do enterprise image recognition services still earn their keep? The short answer: wherever generic models and cloud-only deployments can’t meet the real constraints of your business. That usually means domain-specific precision, real-time or edge decisions, and tight workflow automation.

When Domain-Specific Accuracy Is Non-Negotiable

Some industries simply can’t rely on generic vision models. In manufacturing, healthcare, energy, and logistics, small visual differences can have outsized consequences. Here, domain-specific training data and calibration matter far more than a benchmark score on ImageNet.

Consider domain-specific image recognition services for manufacturing quality inspection. A generic model might be able to classify “scratch” vs “no scratch,” but real production environments care about hairline cracks, coating thickness inconsistencies, foreign particles, and subtle alignment issues. Visual inspection automation needs to distinguish acceptable variation from true defects and anomalies, based on plant-specific and even line-specific tolerance thresholds.

In these contexts, domain-specific models reduce costly false positives (unnecessary rework, downtime) and false negatives (escaped defects, recalls, safety incidents). That’s the kind of delta where paying for a specialized provider makes sense: they’re not selling you generic computer vision services, they’re selling you fewer incidents, less scrap, and better compliance.

Use Cases That Demand Real-Time or Edge Decisions

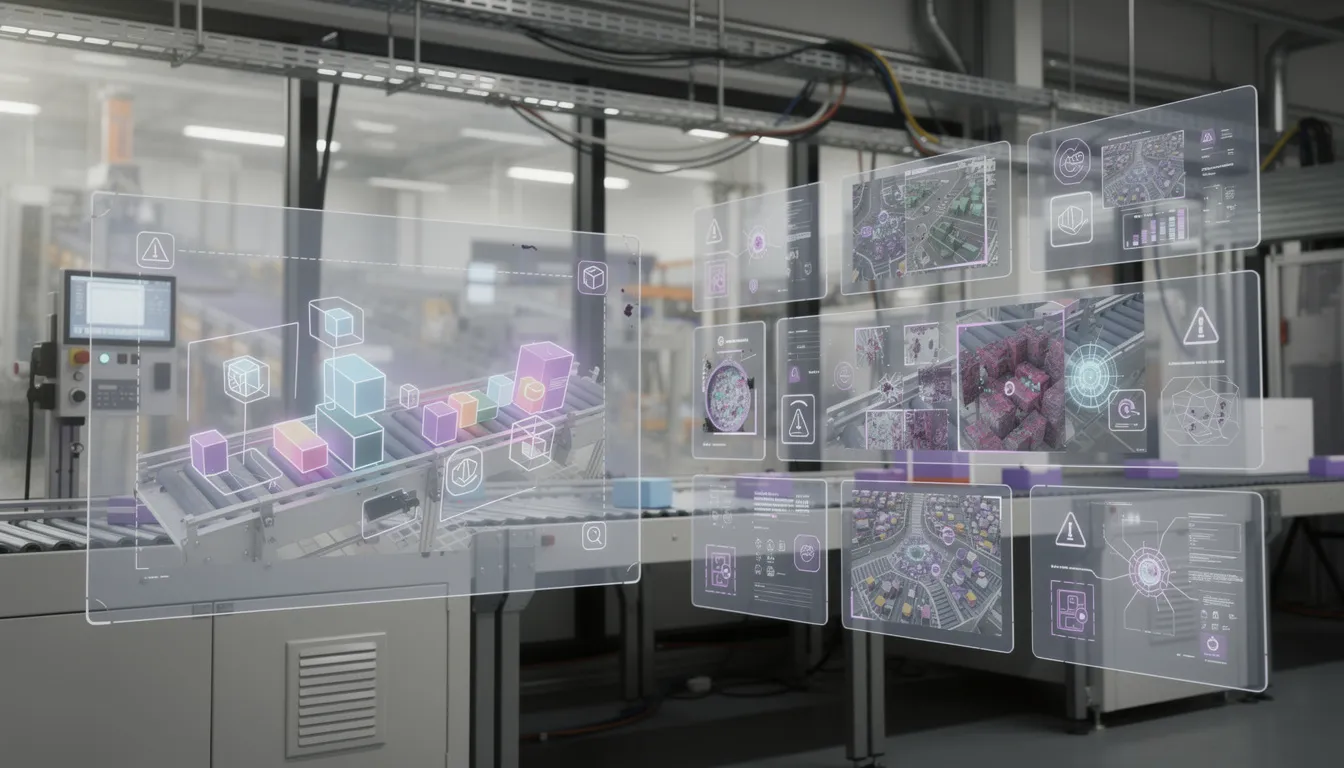

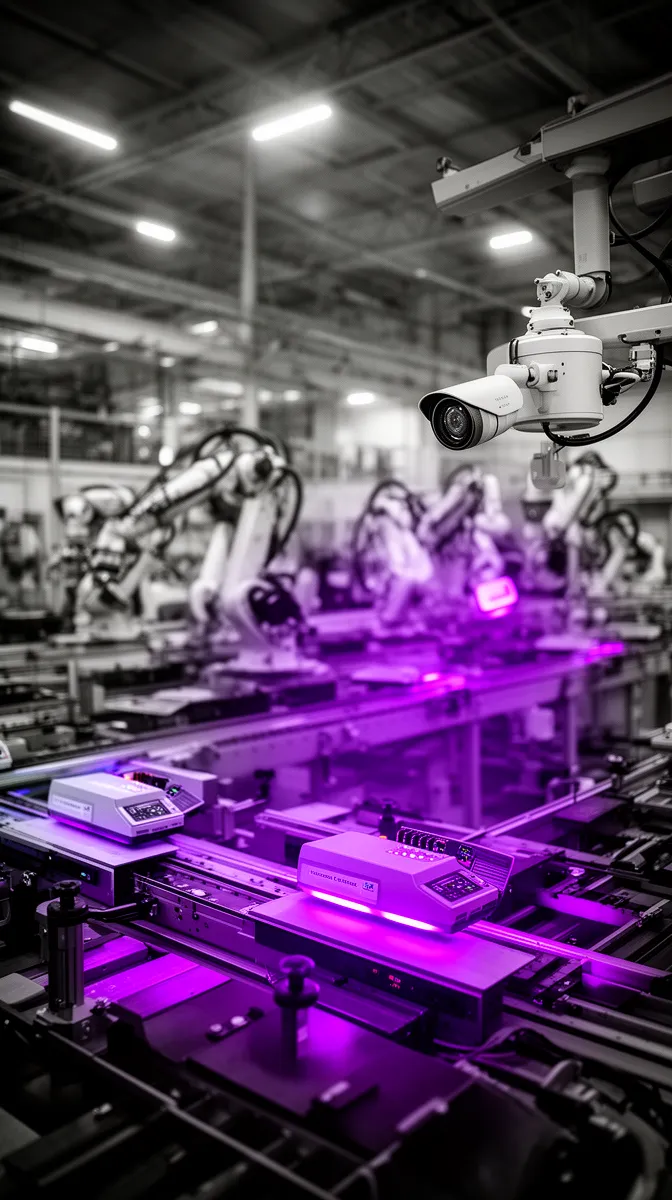

Latency-sensitive applications are another area where differentiated image recognition services matter. Robotics, industrial automation, safety monitoring, in-store analytics, and many IoT deployments cannot afford the round trip to the cloud for every frame. They need real-time edge image recognition that runs on or near the IoT device and makes decisions in milliseconds, not hundreds of milliseconds.

Imagine a safety system on a factory floor that must stop a machine if a person enters a restricted zone. If the video feed has to traverse a congested network to a central cloud, be processed there, and then send back a signal, you’ve likely blown the safety envelope. This is where edge deployment, on-device inference, and edge AI gateways become not just nice-to-have but mandatory.

Public case studies of edge deployments in manufacturing and logistics, like NVIDIA’s industrial AI references, consistently highlight the same story: push the intelligence close to the camera to meet latency, bandwidth, and reliability constraints. Generic, cloud-only vision AI platforms simply can’t solve this class of problem on their own.

When Recognition Must Drive Automated Actions

The third major category is where recognition isn’t just for reporting but directly drives automation. In these scenarios, value comes from closed-loop workflows, not more dashboards. Recognizing something is only step one; the payoff is in what happens next.

Think about a retail shelf analytics system that automatically generates restock tasks in your ERP or warehouse system. The camera detects gaps, confirms specific SKUs, and triggers a “replenish” task with location and quantity. Or think of a visual inspection system that creates a ticket and routes it to the right team in your support ticketing tool when a defect crosses a threshold.

Here, the differentiation isn’t only in the model but also in the API integration, webhook design, event streams, and human-in-the-loop controls. Real workflow automation means the output of visual inspection automation is expressed in business terms (SKUs, work orders, asset IDs), not just pixel coordinates. An insight is actionable when the system knows which downstream system to call and what to change, without a human copying and pasting from a dashboard.

Key Capabilities That Separate Advanced Image Recognition Services

Once you know where differentiation actually lives, you can evaluate providers on the capabilities that matter. Advanced image recognition services go beyond demos to deliver custom image recognition models, edge-aware deployments, deep integration, and robust MLOps for vision. Think in terms of the full pipeline, not just the model.

Domain-Calibrated Models and Data Pipelines

Custom image recognition models are not just “fine-tuned ResNet.” They’re the end result of deliberate label design, domain-specific training data collection, annotation quality controls, and iterative calibration using your real-world images. For serious use cases, this often starts with a data audit: what images you have, how they were captured, and what decisions they support.

Strong providers help you collect and curate datasets that capture environmental realism—lighting changes, motion blur, occlusion, seasonal changes, and new product variants. They build labeling strategies that explicitly cover edge cases and create feedback loops from false positives and false negatives in production back into the training set. This is where image analysis meets process engineering, not pure modeling.

Behind the scenes, they implement secure data pipelines with clear governance and privacy protections, especially in sensitive domains like healthcare or proprietary manufacturing. That includes encryption, access controls, and compliance-ready logging of data lineage. When dataset drift appears—say, from a supplier change or new camera hardware—the system detects it and triggers a retraining lifecycle.

Real-Time and Edge Deployment Architectures

Deployment architecture is where advanced computer vision services start to diverge from simple APIs. There are three common patterns: fully cloud, fully edge, and hybrid. In the hybrid model, lightweight on-device inference or pre-filtering happens at the edge, and heavier analysis or OCR and document vision runs in the cloud.

On the hardware side, this involves GPUs in the data center, dedicated edge AI devices on the line, smart IP cameras, and sometimes mobile devices in the field. To make this viable, providers rely on model compression techniques such as quantization and pruning, often combined with GPU acceleration where it’s available. The goal is to deliver real-time image processing where it’s needed, without breaking power or cost budgets.
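To make quantization less abstract, here is a minimal sketch of symmetric, per-tensor int8 quantization in plain Python. It is a teaching example under simplifying assumptions (one scale for the whole list of weights, no calibration data), not how any particular toolkit or provider implements it; the function names are our own.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using a single scale."""
    max_abs = max(abs(w) for w in weights)
    # Avoid dividing by zero when every weight is 0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


# Illustrative weights only.
weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, worst_error)
```

Each weight now fits in one byte instead of four, and the rounding error per weight is bounded by about half the scale. Whether that accuracy loss is acceptable is exactly what a pilot on your own images should test.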

Hybrid architectures also help balance cloud vs edge deployment economics. You can run quick checks locally, only sending ambiguous cases or periodic summaries to the cloud. That reduces bandwidth costs and improves resilience if connectivity is intermittent, while still leveraging cloud scale where it’s actually valuable.
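The routing logic behind that hybrid pattern can be very small. The sketch below assumes the on-device model emits a single defect score between 0 and 1 per frame; the thresholds, the function name, and the three outcome labels are hypothetical and would need tuning against your own data.

```python
def route_frame(defect_score, clear_below=0.10, act_at=0.90):
    """Decide what to do with a frame after on-device inference.

    defect_score: edge model's estimate (0.0 to 1.0) that the frame shows a defect.
    Thresholds are placeholders, not recommendations.
    """
    if defect_score >= act_at:
        return "act_locally"      # confident hit: trigger the local action
    if defect_score < clear_below:
        return "count_only"       # confident miss: keep only a summary counter
    return "send_to_cloud"        # ambiguous: let the heavier cloud model decide


def cloud_fraction(scores, clear_below=0.10, act_at=0.90):
    """Share of frames that would be sent upstream for a batch of scores."""
    if not scores:
        return 0.0
    sent = sum(1 for s in scores if route_frame(s, clear_below, act_at) == "send_to_cloud")
    return sent / len(scores)


# Hypothetical scores from one camera.
scores = [0.02, 0.95, 0.40, 0.05, 0.03, 0.70, 0.01, 0.99]
print([route_frame(s) for s in scores])
print(cloud_fraction(scores))
```

Measuring the cloud fraction on recorded footage before deployment gives you a first estimate of how much bandwidth and API spend the edge filter would actually save.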

Robust Integration and Action Workflows

Even the best model is useless if it can’t talk to your systems. Advanced providers treat API integration as a first-class capability, not an afterthought. They offer mature REST or gRPC interfaces, webhooks, and support for event buses like Kafka or Kinesis, plus connectors into low-code automation tools if your team prefers them.

Crucially, they map model outputs to business entities. A defect detection event becomes a structured incident with asset ID, defect type, severity, and recommended action. Intelligent document processing or visual inspection outputs flow into CMMS, ERP, MES, or CRM systems with the right metadata. This is how smart support ticket routing and workflow automation emerge from pixels.
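As a rough illustration of that mapping, the sketch below turns a raw detection into a structured incident that could be posted to a webhook. Everything specific here is hypothetical: the camera-to-asset table, the defect rules, the field names, and the severity levels would all come from your own systems.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional, Tuple


@dataclass
class Detection:
    camera_id: str
    label: str
    confidence: float
    bbox: Tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass
class Incident:
    asset_id: str
    defect_type: str
    severity: str
    recommended_action: str


# Hypothetical lookup tables; in practice these live in your asset registry.
CAMERA_TO_ASSET = {"cam-07": "PRESS-LINE-2"}
DEFECT_RULES = {
    "hairline_crack": ("high", "stop line and inspect"),
    "coating_bubble": ("medium", "flag for rework"),
}


def to_incident(detection: Detection) -> Optional[Incident]:
    """Translate pixels-level output into business terms, or None if unmapped."""
    asset_id = CAMERA_TO_ASSET.get(detection.camera_id)
    rule = DEFECT_RULES.get(detection.label)
    if asset_id is None or rule is None:
        return None  # unknown camera or label: leave for human triage
    severity, action = rule
    return Incident(asset_id, detection.label, severity, action)


detection = Detection("cam-07", "hairline_crack", 0.97, (120, 48, 30, 6))
incident = to_incident(detection)
print(json.dumps(asdict(incident)))  # payload a webhook or event bus could carry
```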

Human-in-the-loop patterns round this out: review queues for uncertain predictions, escalation workflows for severe anomalies, and simple tools for operators to correct or confirm model outputs. Those corrections feed back into the training loop, ensuring that your system gets better, not just older.
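A minimal sketch of that review-and-feedback loop might look like the following. The threshold, the queue names, and the severity labels are assumptions for illustration; real systems usually add audit trails and reviewer identity on top.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    image_id: str
    label: str
    confidence: float
    severity: str  # "low", "medium", or "high" (hypothetical levels)


def triage(prediction, review_below=0.80):
    """Pick a handling path for one model output."""
    if prediction.severity == "high":
        return "escalate"        # severe anomalies always reach a person
    if prediction.confidence < review_below:
        return "review_queue"    # uncertain predictions wait for an operator
    return "auto_accept"


def training_examples(reviewed):
    """Keep only the cases where the operator disagreed with the model.

    reviewed: list of (Prediction, operator_label) pairs.
    Returns (image_id, corrected_label) pairs to add to the training set.
    """
    return [(p.image_id, label) for p, label in reviewed if label != p.label]


reviewed = [
    (Prediction("img-1", "scratch", 0.62, "low"), "coating_bubble"),
    (Prediction("img-2", "scratch", 0.71, "low"), "scratch"),
]
print(training_examples(reviewed))
```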

Production-Grade Vision MLOps

Finally, advanced image recognition services are built on solid MLOps for vision. Monitoring goes beyond uptime to include accuracy over time, dataset drift, per-class performance, and latency/throughput metrics per deployment. You should be able to see, for example, that a specific camera or line has degraded accuracy after a lighting change.
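One simple way to get that per-camera view is a rolling accuracy window. The sketch below assumes you receive ground-truth labels for at least some predictions (for example from operator reviews or downstream QA); the class name, window size, and alert threshold are hypothetical.

```python
from collections import defaultdict, deque


class AccuracyMonitor:
    """Track rolling accuracy per camera and flag degraded ones."""

    def __init__(self, window=200, min_samples=50, alert_below=0.90):
        self.min_samples = min_samples
        self.alert_below = alert_below
        # One fixed-size window of True/False outcomes per camera.
        self._outcomes = defaultdict(lambda: deque(maxlen=window))

    def record(self, camera_id, predicted, actual):
        self._outcomes[camera_id].append(predicted == actual)

    def accuracy(self, camera_id):
        outcomes = self._outcomes.get(camera_id)
        if not outcomes:
            return None  # no verified predictions yet
        return sum(outcomes) / len(outcomes)

    def degraded_cameras(self):
        """Cameras with enough samples whose rolling accuracy is below the alert level."""
        return sorted(
            camera
            for camera, outcomes in self._outcomes.items()
            if len(outcomes) >= self.min_samples
            and sum(outcomes) / len(outcomes) < self.alert_below
        )


monitor = AccuracyMonitor(window=100, min_samples=50, alert_below=0.90)
for i in range(50):
    monitor.record("cam-a", "ok", "ok")
    # cam-b starts mislabeling after a (simulated) lighting change
    monitor.record("cam-b", "ok", "ok" if i < 40 else "scratch")
print(monitor.degraded_cameras())
```

The same structure extends to per-class or per-line tracking by changing the key used in `record`.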

They also support CI/CD for models: training pipelines that produce versioned artifacts, safe rollouts to subsets of devices, A/B tests of new versions, and fast rollbacks. This is particularly critical for latency-sensitive applications where performance hiccups have real-world consequences.
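Staged rollouts across a device fleet are often built on deterministic bucketing. The sketch below is one common approach, not a description of any specific provider's tooling: each device ID is hashed into a bucket from 0 to 99, and a single percentage controls how many devices receive the candidate model. The function and version names are hypothetical.

```python
import hashlib


def rollout_bucket(device_id: str) -> int:
    """Map a device ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(device_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def model_version_for(device_id: str, stable: str, candidate: str, candidate_percent: int) -> str:
    """Return the model version a device should run at the current rollout stage."""
    if rollout_bucket(device_id) < candidate_percent:
        return candidate
    return stable


# Hypothetical fleet and versions.
fleet = [f"cam-{n:02d}" for n in range(1, 11)]
assignments = {d: model_version_for(d, "v1.4", "v1.5", 20) for d in fleet}
print(assignments)
```

Because the bucket depends only on the device ID, a device that received the candidate at 20% still has it at 50%, and a rollback is just setting `candidate_percent` back to 0.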

Best-practice guides on computer vision MLOps, such as those summarized by Google’s MLOps framework, emphasize these same patterns: observability, retraining, and governance. If a supplier change in your factory introduces new visual characteristics, your inference pipeline should detect the drop in model performance and route that information into a retraining cycle, not wait for a human to notice a spike in escapes.

How to Evaluate Image Recognition Service Providers

With this mental model, the question becomes: how do you evaluate image recognition service providers in a structured way? The goal is to separate thin API wrappers from true partners, and to match the provider tier to your actual needs. Overbuying is as risky as underbuying.

Capability Tiers: API Wrapper vs. True Partner

It helps to think in three tiers. Tier 1 providers are essentially API wrappers: they offer a UI over cloud vision APIs with basic reporting and minimal configuration. Tier 2 are configurable vision AI platforms: they provide some tuning, possibly weak support for edge deployment, and generic integrations.

Tier 3 providers deliver fully custom solutions: domain-specific models, edge/hybrid architectures, and integrated workflows that reflect your business logic. For many organizations, Tier 1 is enough for experiments, while Tier 3 is appropriate for mission-critical, production-grade enterprise image recognition in industries like manufacturing or logistics. The key is to avoid paying Tier 3 prices for Tier 1 capabilities, because the things that differentiate a service from basic APIs are exactly what you would not be getting.

Technical Due Diligence: Questions to Ask

This is where a bit of RFP discipline pays off. To evaluate image recognition service providers properly, ask questions that surface real IP and engineering, not marketing. For example:

- Which components of your stack are built in-house vs third-party (models, training pipelines, inference, UI)?

- Can you describe your approach to custom training and domain-specific training data collection?

- What are your supported patterns for edge deployment and on-device inference?

- What latency and throughput guarantees can you include in an image recognition SLA, for both cloud and edge?

- How do you monitor model performance in production and detect dataset drift?

- What is your strategy for model updates and rollbacks across distributed devices?

- Which security certifications and compliance regimes do you adhere to (e.g., ISO 27001, SOC 2)?

- How do you handle data privacy, storage, and deletion for customer images?

- Can you provide performance metrics (accuracy, precision/recall) under real operating conditions, not just benchmarks?

- What is your roadmap for supporting emerging foundation models for vision while keeping customer costs controlled?

These questions force providers to expose whether they’re just orchestrating cloud vision APIs or offering genuinely differentiated image recognition services with clear performance metrics.

Assessing Domain Fit and Training Data Quality

Next, validate domain fit. Ask for evidence: sample datasets (appropriately anonymized), annotation guidelines, per-class confusion matrices, and performance on edge cases relevant to your environment. A provider serious about defect detection and anomaly detection should be able to show how they treat “near-miss” conditions.
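When a provider hands you a confusion matrix, per-class precision and recall are easy to derive yourself. The sketch below assumes rows are true classes and columns are predicted classes; the class names and counts are invented purely to show the arithmetic.

```python
def per_class_metrics(labels, matrix):
    """Compute precision and recall per class.

    matrix[i][j] = number of items whose true class is labels[i]
    and whose predicted class is labels[j].
    """
    metrics = {}
    for k, name in enumerate(labels):
        true_positive = matrix[k][k]
        false_negative = sum(matrix[k]) - true_positive
        false_positive = sum(row[k] for row in matrix) - true_positive
        predicted_total = true_positive + false_positive
        actual_total = true_positive + false_negative
        metrics[name] = {
            "precision": true_positive / predicted_total if predicted_total else None,
            "recall": true_positive / actual_total if actual_total else None,
        }
    return metrics


# Invented counts, for illustration only.
labels = ["ok", "scratch", "hairline_crack"]
matrix = [
    [90, 5, 5],   # true "ok"
    [4, 45, 1],   # true "scratch"
    [10, 0, 10],  # true "hairline_crack"
]
for name, values in per_class_metrics(labels, matrix).items():
    print(name, values)
```

In this made-up example the model catches only half of the hairline cracks even though most predictions overall are correct, which is the kind of gap a single headline accuracy number hides.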

Site visits or remote walkthroughs of how they capture data are invaluable. You want to see whether they account for lighting, camera placement, environmental conditions, and operator behavior—not just curated lab images. Often, a pilot under real plant conditions will reveal the need for recalibration, which is a good sign if the provider embraces it instead of resisting.

For visual inspection automation, insist on a pilot that uses your cameras, your products, and your defect taxonomies. The goal isn’t to prove that computer vision works in the abstract; it’s to prove that this provider can adapt it to your specifics.

Integration, Security, and Compliance Review

Finally, treat integration, security, and compliance as core parts of the evaluation, not appendices. Deep API integration means existing connectors or proven patterns for ERP, MES, CRM, and ticketing systems—not just “we have a REST API.” Check webhook reliability, event schemas, and how easy it is to extend integrations as your stack evolves.

On security and privacy, look for encryption at rest and in transit, clear data retention/deletion policies, and support for on-prem or private cloud when needed. Guidance from organizations like NIST is a good benchmark when thinking about data privacy for AI and vision systems. This matters even more in hybrid and edge deployments, where devices need secure boot, hardened OS images, and signed update mechanisms.

In regulated industries such as healthcare imaging or energy infrastructure, cloud vs edge deployment has regulatory implications. Ensure the provider can meet data residency and locality requirements, not just technically but contractually.

Cost, TCO, and When to Build on Foundation APIs Yourself

Price is where the “API markup vs solution” distinction becomes painfully obvious. To compare the pricing of image recognition services against foundation model APIs sensibly, you need to think in terms of total cost of ownership, not just per-API-call numbers. In many cases, generic APIs plus in-house engineering are cheaper; in others, a managed service is the bargain.

Understanding the Real Cost Components

There are several major cost buckets beyond the API line item. Data collection and labeling, engineering effort to build and maintain pipelines, edge hardware, deployment automation, monitoring, and support all add up. A cloud-only architecture might look cheap at low volume but become expensive as video flows scale.

An illustrative example: sending all your video frames to a cloud API for real-time image processing vs a hybrid where an edge gateway pre-filters frames. Over three years, the first option can rack up significant bandwidth and compute charges, while the hybrid design reduces recurring costs at the price of some upfront engineering. That’s the essence of smart cloud vs edge deployment trade-offs.
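A back-of-the-envelope model makes the comparison concrete. Every number below is a placeholder chosen only to show the arithmetic, not a real price or a benchmark: plug in your own frame volumes, your vendor's per-call pricing (including bandwidth), and your own estimate of upfront edge engineering and hardware.

```python
def three_year_cost(frames_per_day, fraction_sent_to_cloud, cost_per_cloud_frame,
                    upfront_cost, days=3 * 365):
    """Upfront spend plus recurring cloud spend over the period.

    cost_per_cloud_frame should bundle API and bandwidth cost per frame.
    """
    cloud_frames = frames_per_day * fraction_sent_to_cloud * days
    return upfront_cost + cloud_frames * cost_per_cloud_frame


# Placeholder inputs, NOT real prices.
FRAMES_PER_DAY = 1_000_000
COST_PER_CLOUD_FRAME = 0.001

cloud_only = three_year_cost(FRAMES_PER_DAY, 1.0, COST_PER_CLOUD_FRAME, upfront_cost=0)
hybrid = three_year_cost(FRAMES_PER_DAY, 0.05, COST_PER_CLOUD_FRAME, upfront_cost=150_000)

print(f"cloud-only: {cloud_only:,.0f}")
print(f"hybrid:     {hybrid:,.0f}")
```

The sketch ignores edge maintenance, hardware refresh, and support, so treat it as a starting point for a fuller TCO model rather than a conclusion.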

When a Managed Service Is Worth the Premium

A managed service makes sense when the use case is mission-critical, the cost of errors is high, and your internal team doesn’t have the capacity or expertise to build production-grade computer vision services. In these scenarios, you’re not just buying models; you’re buying an end-to-end system with SLAs.

Manufacturers and logistics firms often fall into this category. Rather than hiring a full in-house vision engineering and MLOps team, they choose an enterprise image recognition provider that brings domain expertise in their industry, deployment tooling, and support. The provider effectively amortizes the cost of building custom model training and integration capabilities across multiple clients, while tailoring each deployment to specific workflows.

When you run the numbers on salaries, time-to-market, and risk, paying a premium for an integrated service can actually be the cheaper option—if it clearly moves the needle on decisions, latency, or operating costs.

Signs You Should Just Use Foundation Model APIs Directly

On the other hand, sometimes the smartest move is to just use foundation model APIs directly. If your use case is non-critical analytics, early-stage experimentation, or low-volume image processing, the combination of cloud vision APIs and basic in-house integration may be exactly right. There’s no shame in staying at the commodity layer when that’s all you need.

Signs you’re in this bucket: no need for edge deployment, latency to the cloud is acceptable, generic labels are sufficient, and your team is comfortable with straightforward API integration. In this case, leveraging foundation models for vision via major vision AI platforms lets you move quickly and cheaply while you test ideas.

For example, a startup validating a new retail analytics concept might use cloud computer vision services for six months to iterate on UX and business model. Only if the idea proves out and demands real-time, store-level automation or domain-specific accuracy should they consider stepping up to a managed, custom service.

How Buzzi.ai Approaches Specialized Image Recognition

Given all of this, how does Buzzi.ai fit into the landscape of image recognition services? Our bias is clear: we focus on specialized, production-grade solutions where domain specificity, edge deployment, and integration into broader automation flows matter more than raw model novelty. We’re less interested in building yet another demo and more interested in changing how your operations actually run.

From Domain Discovery to Deployment, Not Just Models

We start with domain discovery, not code. That means understanding your workflows, defect taxonomies, and decision points before we touch model training. In a manufacturing inspection project, for example, we’ll map the end-to-end process: where images come from, who makes decisions today, what counts as an actionable insight, and how those insights should appear in your systems.

This phase includes data audits and the design of domain-specific training data strategies that reflect your reality. Once we know what matters, we build custom image recognition and computer vision services shaped around that, not around a generic benchmark. We then connect those outputs to actual processes using our broader strengths in workflow automation and AI agents.

Because we also build production-grade predictive and analytics pipelines, we’re able to treat image recognition as one piece of a larger decision system, not an isolated tool.

Edge-Ready Architectures and Operational MLOps

On the deployment side, we design hybrid and edge-first architectures tailored to your connectivity, devices, and regulatory realities. That includes edge deployment on gateways and cameras, on-device inference on mobile or embedded hardware, and cloud components where they make economic and technical sense. Our goal is always to support real-time image processing where it’s actually needed.

We apply MLOps for vision best practices to keep these systems healthy: monitoring, update orchestration, and feedback loops from operators back into the models. This is similar to how we run our AI-enabled applications more broadly, including AI-enabled web application development and automation projects.

Integration With the Rest of Your AI and Automation Stack

Finally, we treat integration as the point, not an add-on. Our API integration work connects image recognition outputs to ERP, CRM, MES, ticketing, and custom tools, and we often use those outputs to drive AI agents, chatbots, or other automation layers. A defect detection event might trigger an AI agent that validates related documentation, or a visual check might kick off automated invoice checks or smart support ticket routing.

Because Buzzi.ai also specializes in AI agent development and broader AI automation services, we can help you design flows where images, documents, and conversations all feed into one coherent operational brain. If you want to explore whether a specialized, edge-aware, workflow-integrated solution would materially outperform commodity APIs in your domain, our workflow and process automation services are a good place to start.

Conclusion: Audit Your Vision Strategy Before You Spend

The landscape has shifted. Basic image recognition services—labels, bounding boxes, simple detection—are now a commodity, powered by widely available foundation models for vision. Paying a premium for those capabilities alone rarely makes sense.

Real differentiation shows up in domain-specific models, edge and real-time architectures, and deep workflow integration that converts pixels into decisions. Use structured evaluation criteria, tough RFP questions, and pilot projects on your own data to separate API resellers from true partners. Think in terms of total cost of ownership, not just per-API-call pricing, when you weigh buy vs build.

If you’re looking at new initiatives—or wondering whether your current system is more markup than solution—use this framework as an audit. Then reach out to Buzzi.ai via our contact page to explore how a specialized, edge-aware, workflow-integrated approach could change the economics and performance of image recognition in your business.

FAQ

Why have basic image recognition services become a commodity?

Because the hardest parts of generic image understanding have been solved and productized by large providers. Foundation models for vision and cloud APIs now deliver strong object detection, image classification, and OCR at low cost with similar performance across vendors. That levels the playing field and turns basic capabilities into a utility rather than a differentiator.

What should I expect from enterprise image recognition services beyond generic APIs?

You should expect domain-specific accuracy, not just generic labels. That means custom models trained on your data, calibrated to your defect taxonomies and operating conditions. You should also see robust edge or hybrid deployment options, workflow integration into your systems, and production-grade MLOps for monitoring and continuous improvement.

How can I tell if an image recognition provider is just wrapping cloud vision APIs?

Ask directly which components are proprietary vs third-party, and request architecture diagrams that show the underlying services. Look for references to major cloud vision endpoints and confirm whether “custom models” are truly trained on your data or just using pre-built APIs. A true partner will be transparent about using foundation models while adding clear value in data, deployment, and integration.

Which industries benefit most from domain-specific image recognition services?

Industries where small visual differences carry high cost or risk gain the most. Manufacturing, energy, logistics, and healthcare all depend on precise defect detection, anomaly detection, and compliance checks that generic APIs can’t reliably handle. In these domains, domain-specific training data and tailored deployments can reduce scrap, downtime, and safety incidents.

What technical capabilities separate advanced image recognition services from basic labeling APIs?

Advanced services offer custom training pipelines, edge deployment and on-device inference, sophisticated MLOps for vision, and deep API integration with your business systems. They also provide monitoring for dataset drift, latency, and accuracy in real-world conditions, plus human-in-the-loop tools for continuous improvement. In contrast, basic APIs typically stop at returning labels and bounding boxes.

How do edge and on-device image recognition reduce latency and cloud costs?

By processing images closer to where they’re captured, edge and on-device inference avoid the round-trip to the cloud for every frame. That cuts latency for safety-critical or real-time image processing and reduces bandwidth costs by sending only summaries or exceptions upstream. Over time, a well-designed cloud vs edge deployment strategy can significantly lower total cost of ownership.

What evaluation criteria should I use when selecting an image recognition service provider?

Evaluate their domain expertise, custom training capabilities, and support for edge deployment in your environment. Review integration depth with ERP, MES, CRM, and other systems, along with security, privacy, and compliance posture. Finally, insist on transparent performance metrics, SLAs, and a clear roadmap for incorporating new foundation models and hardware.

How do I assess the quality of a provider’s domain-specific training data and calibration?

Request anonymized sample datasets, annotation guidelines, and per-class confusion matrices, especially for edge cases. Ask how they gathered data (under what conditions, with which cameras) and how they handle feedback from real-world errors. A strong provider will welcome pilots in your actual environment and use those results to recalibrate models and labels.

What integration and workflow capabilities should serious image recognition services offer?

Look for mature APIs, webhooks, and support for event buses, along with pre-built or proven connectors into your core business systems. The service should be able to express outputs in business terms—SKUs, assets, work orders—not just pixels. Many organizations also benefit from pairing image recognition with AI agents and automation layers, something Buzzi.ai supports through its broader workflow and process automation services.

When does it make more sense to build on foundation model APIs yourself instead of buying a managed service?

If your use case is low-volume, non-critical, or early-stage, direct use of foundation model APIs is often the best option. When latency requirements are modest, generic labels suffice, and your team can handle basic integration, building in-house keeps costs low and flexibility high. As your needs grow toward domain specificity, edge deployment, and automation, that’s the point to reconsider a managed service.