Build AI Applications That Actually Solve the Right Problems

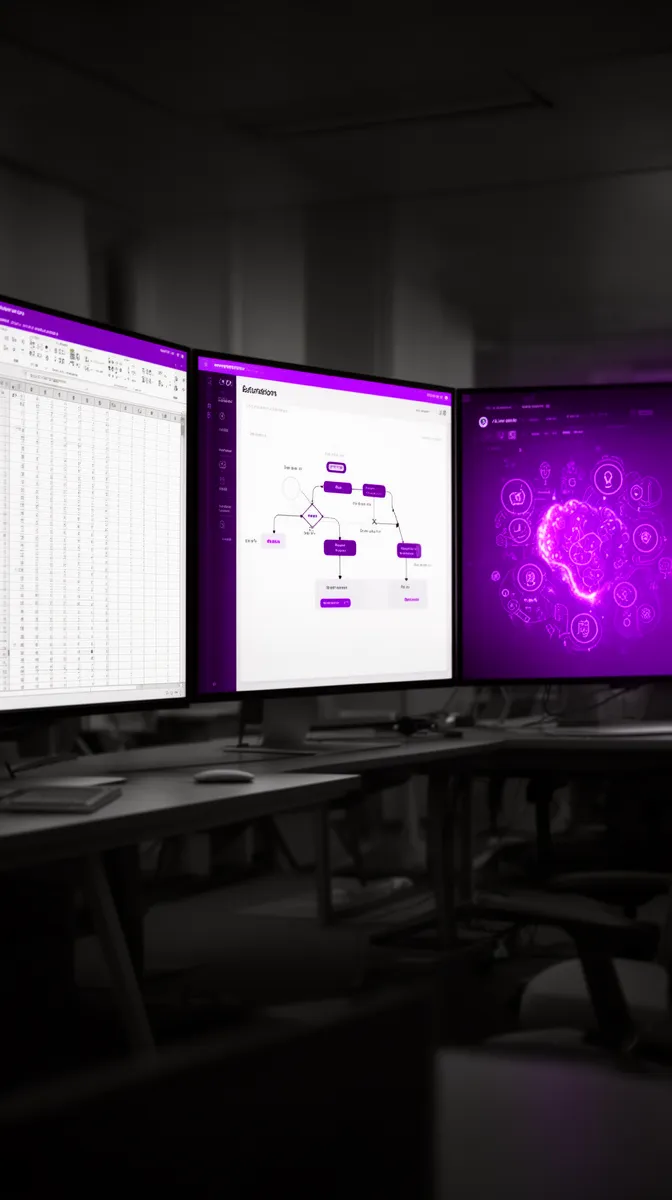

Use this decision framework to build an AI application only when it truly beats spreadsheets and simple automation—so your AI budget turns into real ROI.

Most companies don’t have an AI problem—they have a prioritization problem. Under pressure to “do something with AI,” teams rush to build an AI application where a spreadsheet, a simple workflow, or a rules engine would deliver 80% of the value at 10% of the cost. The result is familiar: impressive demos, underwhelming impact, and another slide in the “failed AI project” deck.

The harder part of AI application development isn’t wiring models or picking vendors. It’s deciding when AI is actually the right lever—and when it’s overkill. That’s what problem-solution fit really means in the AI era: proving that AI is the best tool for this problem, right now, compared to traditional software, spreadsheet automation, or process fixes.

This article lays out a practical decision framework for AI product strategy. You’ll get criteria, scorecards, and examples you can use to test your ideas before you commit budget or reputation. Our goal is simple: help you build AI applications only where they can win, and avoid everything else.

At Buzzi.ai, we specialize in fit-assessed AI application development, not just model-building. We’ll share the same thinking we use with clients so you can make better calls internally—whether or not you ever work with us.

Before You Build an AI Application, Prove You Need One

The default question in many boardrooms is, “How do we build an AI application for our business this year?” The more useful question is, “Where would AI clearly outperform spreadsheets, rules, or RPA for us this year?” Before you touch models, you need a way to decide whether to build an AI application at all.

Why "We Need an AI App" Is the Wrong First Question

The most common AI story starts with a mandate: “We need an AI app.” There’s a budget line, an innovation OKR, maybe even a steering committee—but no clearly defined problem. The initiative is technology-first instead of problem-first.

From there, everything downstream is skewed. Teams obsess over stack choices, vendors, and model families. They Google “how to build an AI application for my business” when the real question is whether the business problem is sharp enough to warrant AI at all. Model choice, architecture, and tools only matter after you have problem-solution fit.

We’ve seen companies launch flashy AI chatbots for customer support while their underlying workflow is broken. Tickets are mislabeled, SLAs unclear, and knowledge bases out of date. The “AI” becomes an expensive front end for a bad process—so customers hate it, agents route around it, and the project is quietly shelved.

An effective AI product strategy starts from the opposite direction: pick the problem, pressure-test it, then ask whether AI is the best way to solve it. That reframing alone will improve your project scoping and stakeholder alignment more than any model upgrade.

The AI vs Spreadsheet vs Automation Decision

The first gate isn’t “Which model?” It’s “Is this better solved by a spreadsheet, a rules engine/RPA, or AI?” That’s the essence of making smart automation vs AI calls.

Spreadsheets are underrated. They win when:

- Data volume is low to moderate.

- Logic is clear and deterministic.

- Decisions are relatively infrequent.

- A business user can own and adjust the logic directly.

Think of pricing scenarios, small-team planning, or simple spreadsheet automation with scripts. For these, the overhead of AI or complex workflow tools is unnecessary.

Rules engines and RPA beat AI when you have repeatable workflows with clear if-then logic. RPA can click buttons and move data; rules engines encode consistent decisions. If inputs and outputs are structured, exceptions are rare, and outcomes are binary, choose traditional software over AI every time. It’s easier to test, govern, and debug.

AI is justified when at least one of these is true:

- Inputs are messy or unstructured (emails, PDFs, chats, voice).

- There’s high variability and pattern recognition is needed.

- Decisions are probabilistic, not binary (ranking leads, predicting churn).

- Volume or complexity is beyond what explicit rules can cover.

Imagine three use cases:

- Pricing adjustments for a dozen SKUs quarterly → spreadsheet with some macros wins.

- Invoice data extraction from thousands of vendor layouts → AI model for document understanding plus workflow.

- Customer email triage at scale → AI for classification and routing, with a clear workflow around it.

That’s your first filter: if a spreadsheet or deterministic automation can handle it, don’t rush to build an AI application. Save AI for where it’s unfairly good.
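To make the first filter concrete, here is a minimal Python sketch that encodes the criteria above as yes/no flags and applies them to the three example use cases. The `UseCase` fields and the `recommend_tool` function are hypothetical illustrations, not a standard API; a real assessment weighs these factors rather than treating them as booleans.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """Hypothetical yes/no profile of a candidate use case."""
    name: str
    unstructured_inputs: bool = False     # emails, PDFs, chats, voice
    high_variability: bool = False        # needs pattern recognition
    probabilistic_decision: bool = False  # ranking/prediction, not yes/no
    beyond_explicit_rules: bool = False   # too varied for hand-written rules
    high_volume: bool = False             # more data than a spreadsheet handles well
    frequent_decisions: bool = False      # decisions made many times a day
    business_user_owned: bool = True      # a business user can own the logic


def recommend_tool(uc: UseCase) -> str:
    """Return 'AI', 'rules/RPA', or 'spreadsheet' for a use case."""
    # AI is justified when at least one AI criterion holds.
    if (uc.unstructured_inputs or uc.high_variability
            or uc.probabilistic_decision or uc.beyond_explicit_rules):
        return "AI"
    # Deterministic logic: a spreadsheet wins at low volume and frequency
    # when a business user can own it; otherwise use rules/RPA.
    if uc.business_user_owned and not (uc.high_volume or uc.frequent_decisions):
        return "spreadsheet"
    return "rules/RPA"


examples = [
    UseCase("Quarterly pricing for a dozen SKUs"),
    UseCase("Invoice extraction across many vendor layouts",
            unstructured_inputs=True, high_variability=True, high_volume=True),
    UseCase("Customer email triage at scale",
            unstructured_inputs=True, high_volume=True, frequent_decisions=True),
]
for uc in examples:
    print(f"{uc.name}: {recommend_tool(uc)}")
```

The AI check runs first on purpose: if any AI criterion holds, the spreadsheet and rules branches are never reached, which mirrors the “at least one of these is true” wording above.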

Three Non-Negotiable Criteria for AI Problem-Solution Fit

Once you believe AI might be the right tool, you still need to confirm problem-solution fit. In our work, three criteria are non-negotiable:

- Valuable problem: Solving it moves a real business needle—revenue, cost, risk, or customer experience.

- AI-appropriate problem shape: The “shape” of the work matches AI’s strengths (uncertainty, patterns, language), not rigid rules.

- Data readiness: You have, or can reasonably create, the data the AI would need.

In practice, we use a simple 1–5 scorecard for each dimension plus an overall risk view. It’s a lightweight way to decide whether to build an AI application before you commit budget. If any of the three criteria scores very low, the default answer is: do not build an AI application yet.

This is the discipline that separates sustainable AI use case evaluation from hype-driven experimentation.

Define the Problem Ruthlessly Before You Touch Models

Once you’ve passed the “AI vs spreadsheets vs automation” gate, the next risk is a vague problem definition. To build an AI application that solves a real problem, you have to get almost uncomfortably specific before you ever mention models.

From Vague Ambition to Concrete Use Case

Most AI proposals start with ambitions like “We want AI for customer service” or “Let’s use AI for sales.” These are slogans, not use cases. To get to problem-solution fit, you need to turn them into precise, measurable problem statements.

We start with basic problem-analysis questions:

- Where exactly is the bottleneck today?

- Who is affected and how often?

- What is the current workaround and why is it painful?

- How do we know, quantitatively, that this is a problem worth solving?

Then we articulate the “job to be done” for the AI, still without mentioning AI. For example, instead of “AI for customer service,” we might define: “Reduce average email response time from 12 hours to 2 hours for Tier-1 questions without increasing headcount.” That’s a concrete target you can use for project scoping and, later, solution design.

Questions to Ask Stakeholders So the Problem Is Real

Real problems live with front-line teams, not on executive slides. To avoid building for an imagined world, you need stakeholder interviews that cut through wishful thinking and politics.

Here’s a practical checklist for stakeholder alignment in an AI workshop with operations, IT, business owners, and end users:

- What breaks today in this process? How often?

- How do you fix it now? What’s the workaround?

- What does “good” look like to you—concretely?

- Which metrics do you actually watch week to week?

- What do you fear most about changing this process?

- If we could only improve one step, which would it be?

- What decisions here are judgment calls vs strict rules?

- Who ultimately owns this process and its outcomes?

These questions ground your AI product strategy in reality. They also surface where simple workflow optimization or better tools, not AI, might deliver most of the benefit. That’s a win: it means you won’t waste your AI budget.

Spotting Low-Value or Vanity AI Projects Early

Some AI ideas should be killed early. The hard part is admitting it.

Warning signs include:

- No clear business owner or P&L responsible.

- Fuzzy or purely qualitative success metrics.

- “Innovation theater” motivations—PR, awards, internal buzz.

- No budget or appetite to actually change workflows or train people.

We’ve seen high-profile pilots that demo beautifully but never make it into daily work. In one case, a company launched a sophisticated AI recommendation system for their app, complete with a press release. But product teams were not prepared to change their roadmap based on the AI’s output, marketing didn’t trust the suggestions, and no one owned ongoing tuning. Six months later, it was effectively turned off.

This isn’t pessimism; it’s leadership discipline. Treat early detection of a low-value or vanity AI project as a success in your risk assessment for AI projects. You’ve freed up resources to pursue something with genuine problem-solution fit.

Is This Problem Really an AI Problem? A Practical Framework

Assume you now have a crisp, valuable problem. The next step is to decide whether it’s truly an AI problem or better served by classic software and process changes. Here’s a practical framework for making that call, built on three dimensions: problem shape, data, and business value.

Dimension 1: Problem Shape and Uncertainty

AI shines when rules are fuzzy, patterns are latent, or language understanding is central. If you need to classify, rank, summarize, or predict under uncertainty, that’s where AI is strong, especially generative AI and LLM-based applications.

Characteristics of AI-shaped problems include:

- Noisy, unstructured inputs (emails, transcripts, free text, images).

- High variability across cases; no simple rule set covers them all.

- Outcomes that are probabilistic or graded, not “yes/no.”

- Need for semantic understanding or pattern detection.

Contrast two tasks:

- Invoice total calculation: Line items, tax, discounts—all encoded in a clear layout → rules or traditional software is ideal.

- Free-text email classification: Customers describe similar issues in 500 different ways → AI for language understanding is the right choice.

If your problem looks more like the second than the first, AI problem fit is high. If it’s closer to deterministic logic, favor traditional software over AI when you scope the project.

Dimension 2: Data Availability and Quality

Even the best problem shape won’t save you if the data is bad. Without the right data, AI model development is guesswork dressed up as math.

Run a quick data quality assessment:

- Do we have historical data for this problem? Where does it live?

- Who owns the data and can we access it legally and ethically?

- How clean is it—are there missing fields, inconsistent labels, duplicates?

- Is it representative of how the process runs today?
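The cleanliness question in this checklist can be partly automated. The sketch below assumes records arrive as a list of Python dicts (for example, exported support tickets) and reports missing-field rates, exact duplicates, and labels that differ only by case or whitespace. The function name, fields, and sample records are hypothetical; ownership, legality, and representativeness still need human answers.

```python
from collections import Counter


def data_quality_report(records: list[dict], required: list[str],
                        label_field: str) -> dict:
    """Summarize basic quality issues in a list of record dicts."""
    n = len(records)
    # Share of records where each required field is absent, None, or empty.
    missing_rate = {
        field: sum(1 for r in records if r.get(field) in (None, "")) / n
        for field in required
    }
    # Exact duplicates: identical field/value pairs (values must be hashable).
    counts = Counter(tuple(sorted(r.items())) for r in records)
    duplicate_rows = sum(c - 1 for c in counts.values() if c > 1)
    # Labels that differ only by case or surrounding whitespace.
    variants: dict[str, set[str]] = {}
    for r in records:
        label = r.get(label_field)
        if isinstance(label, str) and label.strip():
            variants.setdefault(label.strip().lower(), set()).add(label)
    inconsistent = {k: sorted(v) for k, v in variants.items() if len(v) > 1}
    return {"rows": n, "missing_rate": missing_rate,
            "duplicate_rows": duplicate_rows,
            "inconsistent_labels": inconsistent}


tickets = [  # made-up sample records
    {"id": 1, "text": "Refund please", "category": "Billing"},
    {"id": 2, "text": "", "category": "billing "},
    {"id": 3, "text": "Login fails", "category": "Access"},
    {"id": 3, "text": "Login fails", "category": "Access"},
    {"id": 4, "text": "Where is my invoice?", "category": None},
]
print(data_quality_report(tickets, ["text", "category"], "category"))
```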

Many enterprise AI failures trace back here. One study on ML project success rates found that poor data quality and unclear problem framing were leading causes of failure—more than model choice itself (example research on data and ML success). Likewise, industry reports from firms like McKinsey note that a huge share of AI initiatives stall or under-deliver because organizations underestimate the work of data readiness and governance.

If data readiness is low, your AI lifecycle management plan should start with instrumentation (capturing the right signals), data cleaning, or even a simpler non-AI solution while you improve data. Sometimes the right move is “wait, fix data, then build AI.”

Dimension 3: Business Value and Cost to Change

The third dimension is business value relative to cost and risk. This is where business value mapping and return on investment for AI become real, not theoretical.

Map the impact across four categories:

- Revenue: More conversions, upsell, retention.

- Cost: Reduced manual effort, fewer errors.

- Risk: Lower compliance or operational risk.

- Experience: Better NPS, faster response times.

Then factor in the cost to change: build cost, integration, change management, and ongoing operations. Industry reports on enterprise AI consistently highlight that change management and data work often outweigh model costs (example McKinsey AI survey).

A simple 2x2 of value versus feasibility helps. The best AI candidates sit in the top-right: high value, high feasibility (good problem shape and data). A medium-value, low-complexity non-AI change may yield better, faster ROI than a more ambitious AI project. Your AI implementation roadmap should reflect this, not chase AI for its own sake.
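Payback period is one simple way to compare a non-AI change with an AI project on the cost-to-change terms above. The sketch below assumes constant monthly benefits and run costs and ignores discounting; the `Option` class is hypothetical and every figure is invented purely for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Option:
    """One way to solve the problem, with hypothetical cost/benefit inputs."""
    name: str
    build_cost: float        # one-off build
    integration_cost: float  # one-off integration with existing systems
    change_cost: float       # one-off change management and training
    monthly_benefit: float   # revenue, cost, risk, and experience gains per month
    monthly_run_cost: float  # ongoing operations per month

    def upfront(self) -> float:
        return self.build_cost + self.integration_cost + self.change_cost

    def payback_months(self) -> float:
        net = self.monthly_benefit - self.monthly_run_cost
        return math.inf if net <= 0 else self.upfront() / net

    def net_value(self, months: int) -> float:
        """Cumulative net value after `months`, ignoring discounting."""
        return (self.monthly_benefit - self.monthly_run_cost) * months - self.upfront()


# Figures below are invented for illustration only.
spreadsheet = Option("spreadsheet", 5_000, 0, 2_000, 3_000, 200)
ai_app = Option("AI application", 60_000, 25_000, 15_000, 12_000, 2_000)
for opt in (spreadsheet, ai_app):
    print(f"{opt.name}: payback {opt.payback_months():.1f} months, "
          f"24-month net value {opt.net_value(24):,.0f}")
```

With these made-up figures the spreadsheet pays back in 2.5 months and the AI option in 10, yet over 24 months the AI option delivers more net value. That tension is why it helps to look at both speed of payback and total value before choosing.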

Putting It Together: A Simple AI Fit Scorecard

Now combine these three dimensions into a single, simple tool: an AI fit scorecard. For each candidate use case, score 1–5 on:

- Problem shape (AI vs rules).

- Data availability and quality.

- Business value (impact vs effort).

- Risk profile and governance needs.

For example, imagine two candidates:

- Use case A: Automate routing of support tickets → high problem shape score (language), medium data score, high value.

- Use case B: Predict quarterly budget variances for one team → low problem shape score (small data, deterministic), low value.

Use case A might score 4-3-5-3; use case B might be 2-2-2-4. You’d prioritize A for AI and handle B with better processes and spreadsheets.

This kind of AI use case evaluation clarifies your use case prioritization and project scoping. It’s the core of the AI discovery work we do, and a simple decision framework you can adopt internally.
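Here is a minimal sketch of the scorecard as code, using the example scores for use cases A and B. Following the FAQ’s suggestion, it assumes a threshold of 3 on each of the three gating dimensions (problem shape, data, value). The risk score is kept as a separate input for review rather than a gate, since the text does not say whether a higher risk score is better or worse. Class and function names are hypothetical.

```python
from dataclasses import dataclass

GATES = ("problem_shape", "data", "value")  # hard gates from the text


@dataclass
class FitScore:
    """Hypothetical 1-5 AI fit scorecard for one use case."""
    name: str
    problem_shape: int  # AI-shaped (language, patterns) vs rules
    data: int           # data availability and quality
    value: int          # business value relative to effort
    risk: int           # risk profile and governance needs, reviewed separately

    def __post_init__(self) -> None:
        for dim in (*GATES, "risk"):
            score = getattr(self, dim)
            if not 1 <= score <= 5:
                raise ValueError(f"{dim} must be between 1 and 5, got {score}")

    def passes(self, threshold: int = 3) -> bool:
        # Every gating dimension must reach the threshold; one low score blocks.
        return all(getattr(self, g) >= threshold for g in GATES)

    def gate_total(self) -> int:
        return sum(getattr(self, g) for g in GATES)


def prioritize(candidates: list[FitScore], threshold: int = 3) -> list[FitScore]:
    """Keep candidates that pass every gate, highest gate total first."""
    passing = [c for c in candidates if c.passes(threshold)]
    return sorted(passing, key=FitScore.gate_total, reverse=True)


a = FitScore("Support ticket routing", problem_shape=4, data=3, value=5, risk=3)
b = FitScore("Quarterly budget variance", problem_shape=2, data=2, value=2, risk=4)
print([c.name for c in prioritize([a, b])])  # B goes to spreadsheets/process work
```

Using `all(...)` rather than an average is the design choice that matters: a strong value score cannot compensate for missing data, which matches the “default answer is do not build” rule.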

When Spreadsheets, Rules, or RPA Beat AI

One of the most valuable outcomes of this analysis is often a negative answer: “We don’t need AI here.” In many processes, the honest response to “When should I use AI instead of spreadsheets?” is “Not yet.” Understanding where simple tools win is key to a realistic AI implementation roadmap.

The Hidden Power of Well-Designed Spreadsheets

Spreadsheets, especially with light scripting, can be extraordinarily powerful. They are transparent, cheap, and editable by the people closest to the work.

They shine where processes are stable, volumes are manageable, and logic is explicit. In those cases, spreadsheet automation is often the best answer. You can add validation, macros, and even small data pulls without ever talking about AI.

We’ve seen sales operations teams improve forecasting accuracy by 20–30% simply by tightening assumptions, standardizing templates, and enforcing discipline in a spreadsheet—not by deploying predictive models. That’s a victory. It’s also a reminder that traditional software vs AI isn’t a war; it’s a portfolio choice.

Rules Engines and RPA for Deterministic Workflows

When work is repetitive, high-volume, and based on clear rules, a combination of workflow tools, rules engines, and RPA is usually the right investment. This is classic workflow automation.

Think about invoice routing, user provisioning, or data entry from structured forms. If you can describe the logic with “if X, then Y,” you probably don’t need AI yet. Many back-office processes benefit far more from robotic process automation and good workflow optimization than from complex models.

Industry benchmarks on automation, RPA, and AI adoption show that most enterprises start with rules and RPA, then layer in AI later for unstructured inputs (example Deloitte RPA survey). You can mirror this: automate the deterministic steps first, and only then consider plugging in AI for the messy edge cases as part of broader AI process automation.
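To make the “if X, then Y” idea concrete, here is a minimal first-match rules table for invoice routing. The fields, queue names, and the 10,000 approval limit are hypothetical; the point is that every decision traces back to one explicit, testable rule, with no model involved.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Invoice:
    vendor_approved: bool
    has_po_number: bool
    amount: float


# First matching rule wins; names and the 10,000 limit are hypothetical.
RULES: list[tuple[str, Callable[[Invoice], bool], str]] = [
    ("unknown vendor", lambda inv: not inv.vendor_approved, "vendor-onboarding"),
    ("missing PO", lambda inv: not inv.has_po_number, "ap-exceptions"),
    ("over limit", lambda inv: inv.amount > 10_000, "manager-approval"),
]
DEFAULT_QUEUE = "auto-pay"


def route_invoice(inv: Invoice) -> tuple[str, str]:
    """Return (queue, reason) so every decision is traceable to a rule."""
    for reason, condition, queue in RULES:
        if condition(inv):
            return queue, reason
    return DEFAULT_QUEUE, "all checks passed"


print(route_invoice(Invoice(vendor_approved=True, has_po_number=True, amount=25_000)))
```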

Hybrid Patterns: AI as a Narrow Component, Not the Whole App

Often, “build an AI application” really means “embed AI into an existing system.” AI is a specialist, not the whole team, and the smartest AI solution design uses it that way.

A common pattern: an AI model classifies or extracts information, then a conventional CRM, ticketing system, or ERP handles the rest. For example, a support platform where AI only triages incoming tickets—assigning category, urgency, and suggested answer snippets—while agents and standard workflows handle resolution and escalation.

This approach minimizes scope and risk. Instead of a new standalone AI product, you get targeted AI integrations that upgrade existing tools. It’s still AI application development, but with a thinner, more focused AI layer, which often leads to faster adoption and a clearer implementation roadmap.
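The hybrid pattern can be sketched as a narrow classification step feeding a deterministic routing table. In the sketch below, `classify_ticket` is a hypothetical keyword stub standing in for a real model or LLM call, and the queue names and the 0.7 confidence floor are assumptions. Low-confidence predictions fall back to a human, which keeps the AI layer thin.

```python
from dataclasses import dataclass


@dataclass
class Triage:
    category: str
    urgency: str
    confidence: float


def classify_ticket(text: str) -> Triage:
    """Hypothetical stand-in for the AI component (a trained model or LLM call).
    A keyword stub is used here only so the sketch runs end to end."""
    t = text.lower()
    if "refund" in t or "charged" in t:
        return Triage("billing", "normal", 0.90)
    if "outage" in t or " down" in t:
        return Triage("incident", "high", 0.80)
    return Triage("general", "normal", 0.40)


# Conventional workflow: a deterministic mapping the ticketing system owns.
QUEUES = {"billing": "billing-team", "incident": "on-call", "general": "tier-1"}
CONFIDENCE_FLOOR = 0.7  # assumed; below it a human triages the ticket


def route(text: str) -> str:
    triage = classify_ticket(text)
    if triage.confidence < CONFIDENCE_FLOOR:
        return "manual-triage"
    queue = QUEUES[triage.category]
    return f"{queue} (urgent)" if triage.urgency == "high" else queue


for msg in ["I was charged twice, please refund",
            "The site is down again",
            "Can I change my username?"]:
    print(msg, "->", route(msg))
```

Swapping the stub for a real classifier changes only `classify_ticket`; the queues, escalation, and fallback stay in ordinary, testable code.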

If you find that many of your candidates fall into rules or RPA territory, that’s good news: you can get big wins with simpler tools. For those, partners offering robust workflow and process automation services may be a better first move than full AI builds.

From Idea to Proof of Concept: Low-Risk AI Application Experiments

Let’s assume you’ve passed the filters and truly have a strong AI-shaped, data-ready, high-value use case. The question now is not just how to build an AI application for your business, but how to do it with low risk. The answer: structured, time-boxed experiments, not big-bang launches.

Designing a Proof of Concept That Actually Tests Fit

A proof of concept is not a demo factory. Its job is to test whether the proposed AI solution can actually solve the problem in the real context, before you commit to building the full application.

A good AI proof of concept is narrow and explicit:

- Pick one concrete slice of the workflow.

- Limit scope to a small user group and/or subset of data.

- Define clear success metrics linked to business outcomes.

- Time-box it (e.g., 4–8 weeks) with a fixed budget.

For example: “In 6 weeks, can we auto-classify 20% of inbound support emails into the right category with at least 85% accuracy, reducing manual triage time by 30%?” That’s a tight brief for a PoC, and later for an MVP.

External best-practice guides on AI PoCs consistently stress this focus on narrow scope and real metrics (example PoC design guide). Treat the PoC as an experiment within AI lifecycle management, not a marketing event.
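Here is one way to score the example brief. The sketch assumes “auto-classified” means predictions at or above a confidence threshold (0.8 here, an assumption), measures coverage and accuracy on those predictions only, and computes triage-time reduction from average minutes per email. The `Prediction` class and the results are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    predicted: str
    actual: str       # category a human confirmed
    confidence: float


def evaluate_poc(preds: list[Prediction], baseline_triage_min: float,
                 poc_triage_min: float, confidence_threshold: float = 0.8,
                 min_coverage: float = 0.20, min_accuracy: float = 0.85,
                 min_time_reduction: float = 0.30) -> dict:
    """Check PoC results against the brief's targets (defaults mirror the example)."""
    auto = [p for p in preds if p.confidence >= confidence_threshold]
    coverage = len(auto) / len(preds)
    accuracy = (sum(p.predicted == p.actual for p in auto) / len(auto)) if auto else 0.0
    time_reduction = 1 - poc_triage_min / baseline_triage_min
    passed = (coverage >= min_coverage and accuracy >= min_accuracy
              and time_reduction >= min_time_reduction)
    return {"coverage": coverage, "accuracy": accuracy,
            "time_reduction": time_reduction, "passed": passed}


# Made-up results: 3 of 10 emails auto-classified, all correctly.
preds = ([Prediction("billing", "billing", 0.95),
          Prediction("access", "access", 0.91),
          Prediction("shipping", "shipping", 0.88)]
         + [Prediction("general", "billing", 0.55)] * 7)
print(evaluate_poc(preds, baseline_triage_min=10, poc_triage_min=6))
```

Raising the confidence threshold typically trades coverage for accuracy, so it is worth agreeing on the threshold before the PoC starts rather than tuning it afterwards to hit the targets.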

Choosing Metrics That Prove the Problem Is Solved

Your metrics should tell you whether the problem is solved better than before, not just whether the model “works.” Think in four categories when designing metrics for AI applications:

- Effectiveness: Accuracy, precision/recall, error rate.

- Efficiency: Time saved per task, throughput per agent.

- Adoption: How often users rely on AI output vs bypass it.

- Business outcomes: Cost savings, revenue uplift, or risk reduction.

For a customer service assistant, a simple metric table might compare baseline vs PoC:

- Average first-response time: 12 hours → 3 hours.

- Tickets handled per agent per day: 30 → 40.

- AI-suggested responses accepted: 70% of suggestions.

- CSAT: 4.1 → 4.3.

This is how you tie back to business value mapping and calculate return on investment for AI. It’s also how you build the evidence to move from PoC into MVP and, later, production deployment.
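Computing the relative change for each metric keeps the baseline-vs-PoC comparison honest. This sketch uses the example figures above; for response time a negative change is an improvement, so read the sign per metric. The acceptance rate is already a rate with no baseline in the example, so it is left out. The names are hypothetical.

```python
def relative_change(baseline: float, current: float) -> float:
    """Fractional change from baseline; the sign's meaning depends on the metric."""
    return (current - baseline) / baseline


# Baseline vs PoC figures from the example list above.
METRICS = {
    "avg_first_response_hours": (12, 3),    # lower is better
    "tickets_per_agent_per_day": (30, 40),  # higher is better
    "csat": (4.1, 4.3),                     # higher is better
}

changes = {name: relative_change(base, poc) for name, (base, poc) in METRICS.items()}
for name, change in changes.items():
    base, poc = METRICS[name]
    print(f"{name}: {base} -> {poc} ({change:+.1%})")
```

This prints changes of -75.0%, +33.3%, and +4.9% respectively, which makes it easy to see that the response-time gain is far larger than the CSAT gain.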

De-Risking with Shadow Mode and Human-in-the-Loop

For sensitive workflows, you shouldn’t turn AI on and hope for the best. Shadow mode and human-in-the-loop setups are your safety net.

In shadow mode, the AI runs in parallel: it makes predictions or suggestions, but humans still make final decisions. You log both and compare. For example, an AI-based routing engine for support tickets can propose categories and priorities while agents continue their normal work. Over a few weeks, you learn whether the AI is accurate enough—and where it fails.
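Shadow-mode logs lend themselves to a simple analysis: how often the AI agreed with the human, and which disagreements recur. The sketch below assumes each log entry is a hypothetical (ai_suggestion, human_decision) pair and uses made-up entries.

```python
from collections import Counter


def shadow_report(log: list[tuple[str, str]]) -> tuple[float, list]:
    """log: (ai_suggestion, human_decision) pairs recorded in shadow mode.

    Returns the agreement rate and the most frequent disagreements,
    which point to where the AI fails."""
    agreements = sum(ai == human for ai, human in log)
    disagreements = Counter((ai, human) for ai, human in log if ai != human)
    return agreements / len(log), disagreements.most_common()


# Made-up log entries: the AI suggested a category, the agent decided.
log = [
    ("billing", "billing"),
    ("access", "access"),
    ("billing", "shipping"),
    ("billing", "shipping"),
    ("access", "access"),
]
rate, failures = shadow_report(log)
print(f"agreement: {rate:.0%}")           # agreement: 60%
print("top disagreements:", failures)
```

Here the recurring billing-vs-shipping confusion is the kind of failure pattern shadow mode is meant to surface before the AI is allowed to act on its own.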

Human-in-the-loop means the AI assists but never fully replaces humans. This is essential in finance, healthcare, HR, and other high-stakes domains. It also creates a feedback loop for AI lifecycle management, as corrections from experts become training data.

At Buzzi.ai, we treat these mechanisms as core parts of risk assessment for AI projects and of any responsible AI implementation roadmap, not optional extras.

When to Graduate from PoC to MVP and Production

Many organizations get stuck in permanent PoC mode. To avoid this, define clear gates between PoC, MVP, and production deployment.

In our experience, you’re ready to move from PoC to MVP development when:

- Key metrics show stable improvement vs baseline.

- Failure modes are understood and tolerable with mitigations.

- There’s a clear owner and budget for ongoing operations.

- Stakeholders agree on process changes and training needs.

From MVP to full rollout, you’ll need more mature AI lifecycle management: monitoring, alerting, retraining, and rollback plans. Your AI implementation roadmap should lay out this journey upfront so you’re building toward production, not just to the next demo.

Along the way, specialized partners—including those offering AI chatbot and virtual assistant development services—can help you avoid common design and deployment pitfalls.

Fit-Assessed AI Application Development: Buzzi.ai’s Approach

Everything so far has been vendor-neutral on purpose. But if you decide you want a partner to help you build an AI application with true problem-solution fit, it’s worth knowing how we approach it at Buzzi.ai. Our fit-assessed AI application development services follow four stages.

Step 1: AI Discovery and Use Case Prioritization

We start with structured AI discovery, not coding. That means workshops, process mapping, and a shared understanding of where the real pain is.

We use the same dimensions you’ve seen here (value, problem shape, data readiness, and risk) to run rigorous AI use case evaluation. The output is a prioritized backlog of AI and non-AI opportunities, with some ideas explicitly not pursued.

Sometimes the original brief (“We need a chatbot”) evolves into something more impactful, like an internal triage engine or document processing workflow. That’s the power of proper use case prioritization and AI readiness assessment. If you want help at this stage, explore our AI discovery and use case prioritization offering.

Step 2: Solution Design Across AI, Automation, and UX

Once we agree on the right problem, we design the solution across AI, automation, and UX. This is where requirements analysis and technical feasibility assessment really matter.

We clarify what should be AI-driven (e.g., classification, summarization, recommendations) and what should remain classic software or process. We assess data quality, tools, existing systems, and constraints. For many teams, this also includes planning LLM-based applications where generative AI makes sense.

The output is an architecture and implementation plan: models, integration points, user flows, and change management steps. You can think of it as investor-grade documentation of your AI solution design and implementation roadmap.

Step 3: Proof of Concept, MVP, and Production Deployment

With design in hand, we move into staged build: PoC → MVP → production deployment. Each stage has clear entry and exit criteria.

PoCs test feasibility and fit on a narrow slice. MVPs broaden scope to a defined user group with real impact on KPIs. Production is where we harden systems, implement monitoring, and integrate with your broader stack.

We apply engineering practices around environments, observability, rollback, and AI lifecycle management. This applies equally to applications built on classical ML and to modern LLM-based applications. The emphasis is always on actually shipping usable functionality, not building science projects.

Step 4: Measuring, Governing, and Evolving the AI App

The work doesn’t end at launch. We help define and track the metrics that show whether the app continues to solve the original problem—and whether drift or new constraints have emerged.

Governance is a first-class concern: access control, audit logging, and bias or error monitoring where relevant. This is where AI governance consulting intersects with product thinking.

We also set up feedback loops: user feedback, error triage, and model updates. In practice, this looks like quarterly reviews where we analyze performance, adjust models or prompts, and refine workflows. It’s ongoing AI transformation support, not a one-off build.

Conclusion: Build AI Applications Only Where They Can Win

If there’s one idea to take away, it’s this: the real challenge isn’t “how” to build an AI application. It’s deciding where AI is genuinely the right tool compared to spreadsheets, rules, RPA, or plain process fixes.

Use the three pillars we covered (valuable problem, AI-appropriate problem shape, and data readiness) as hard gates. Combine them with low-risk PoCs, shadow mode, and staged MVP development to de-risk your AI investment. That’s how you turn AI budgets into measurable ROI instead of shelfware.

A practical next step: pick your top 2–3 AI ideas and run them through the fit scorecard from this article. If you want a structured partner to help you refine, prioritize, and execute, we’d be happy to talk. Start with an AI Discovery session, and we’ll help design the right mix of AI, automation, and software for your context.

FAQ

How do I decide whether I actually need to build an AI application or stick with spreadsheets?

Start by comparing the problem against three options: spreadsheets, rules/RPA, and AI. If the logic is clear, data volume is manageable, and decisions are infrequent, a well-designed spreadsheet or simple automation is usually best. AI is only justified when there’s high variability, unstructured inputs, or probabilistic decisions that rules and spreadsheets can’t handle efficiently.

What is a simple framework to assess if I should build an AI application at all?

Use a three-part scorecard: problem shape, data readiness, and business value. Score each dimension from 1–5 to create a quick “AI fit” profile. Only proceed to AI design if all three are above a threshold (for example, 3 or 4 out of 5), and document why AI beats non-AI alternatives for this specific use case.

What criteria make a problem a good fit for AI instead of rules or RPA?

A strong AI candidate typically has messy or unstructured inputs (text, voice, images), high variability across cases, and outcomes that aren’t purely yes/no. It often involves ranking, prediction, or classification under uncertainty. If you can’t write stable if-then rules to cover most scenarios, AI likely has an advantage.

How do I estimate the ROI of an AI application compared to non-AI alternatives?

Start from the business metrics: revenue, cost, risk, and customer experience. Estimate baseline performance and the expected uplift from each option—spreadsheets, rules/RPA, and AI—then factor in build, integration, and change management costs. This business value mapping lets you compare payback periods and prioritize the use cases where AI delivers a clearly superior return on investment.

What warning signs suggest my AI project is a vanity or low-value initiative?

Red flags include no clear business owner, fuzzy or purely vanity metrics, and motivations centered on PR or “innovation image” rather than outcomes. If there’s no budget or appetite to change processes, train users, or maintain the system, the project is likely to stall. In these cases, it’s better to stop early than to carry a zombie AI pilot that never reaches production.

How should I structure problem analysis before committing to AI application development?

Begin with technology-neutral problem statements and stakeholder interviews focused on what breaks today, how it’s fixed, and what “good” looks like. Map the workflow in detail, identify the most painful steps, and quantify the impact on time, cost, or quality. Only after this should you explore whether AI, rules, or simple tooling is the best lever.

How can I run a low-risk proof of concept to validate an AI application idea?

Define a narrow slice of the process, a small user group, and 2–3 clear success metrics tied to business outcomes. Time-box the PoC and, where possible, run the AI in shadow mode alongside humans to compare performance safely. Use the results to decide whether to invest in an MVP, pivot the solution, or pause the idea.

What steps are involved in fit-assessed AI application development from discovery to deployment?

A robust journey typically includes AI discovery and use case prioritization, solution design across AI and non-AI components, PoC and MVP development, and finally production deployment with monitoring and governance. At Buzzi.ai, we formalize this into staged engagements so you get structured decision points rather than a one-way commitment. You can learn more about our approach on our AI discovery page.

How do I evaluate data availability and quality when deciding to build an AI application?

Inventory where relevant data lives, who owns it, and how it’s structured, then check for gaps such as missing fields, inconsistent labels, or bias. Ask whether the data truly reflects current operations and whether you can legally and ethically use it. If data quality is low, plan for data cleaning, better instrumentation, or a phased approach that starts with simpler, non-AI improvements.

How can Buzzi.ai help us prioritize, design, and build AI applications with proven problem-solution fit?

We work with you from the start to clarify problems, assess AI fit, and prioritize the highest-impact opportunities. Our team then designs hybrid solutions across AI, automation, and UX, and delivers them through staged PoCs, MVPs, and production deployments with ongoing lifecycle management. The goal is always the same: AI that clearly beats the alternatives and drives real business outcomes, not just impressive demos.