AI Team Augmentation That Works: Fix the Structure First

Most AI team augmentation fails not from bad talent but from bad structure. Learn how to assess readiness, redesign teams, and make AI hires actually deliver.

Most companies don’t have an AI talent problem. They have an AI team structure problem—and they keep trying to solve it by buying more talent.

On paper, AI team augmentation sounds simple: plug in a few machine learning engineers, maybe a data scientist or two, and your product magically becomes “AI-powered.” That’s how AI staffing, like traditional software staff augmentation, has worked for years: add more hands to ship more features.

But AI isn’t just more code. It’s a new capability layer that cuts across data, product, and engineering. When you drop AI specialists into software-era structures, you don’t get AI features—you get stranded proofs of concept, rising technical debt, and frustrated teams. The problem isn’t the people; it’s the system you plug them into.

In this article, we’ll unpack why most AI team augmentation services for product engineering teams underperform, how to assess whether your organization is structurally ready, and what a realistic adaptation roadmap looks like. We’ll also outline the metrics that tell you whether your AI investments are actually working. At Buzzi.ai, we’ve learned the hard way that effective augmentation starts with structural readiness and integration, not just headcount, and we’ll show you how to apply that lens to your own teams.

What AI Team Augmentation Really Is (and Isn’t)

From ‘More Hands’ to ‘New Capability Layer’

In traditional software development, staff augmentation is straightforward: you add React developers, backend engineers, or QA specialists to increase capacity. The work is deterministic, the software development lifecycle is well understood, and tasks fit neatly into tickets. You’re buying more throughput for a known machine.

AI team augmentation is different. You’re not just adding more people to the same workflow; you’re introducing new workflows and feedback loops. Machine learning engineers, data scientists, and MLOps specialists bring an entirely different way of working: experiment-heavy, probabilistic, and deeply dependent on data infrastructure.

Think about the contrast. Adding more frontend developers helps you ship an existing roadmap faster: more screens, more APIs, more features. Adding an ML engineer to build a recommendations system is not like that. It requires new data pipelines, experiment tracking, offline/online evaluation, and new interfaces with product and operations. You’re changing the shape of the work, not just the volume.

That’s why AI staffing only makes sense when you already have a clear product problem and at least a partial path to solving it with data and models. Augmentation is a force multiplier for an existing motion: improving search relevance, automating classification, optimizing pricing. If you’re still at the “maybe AI can help somewhere?” stage, you don’t need augmentation; you need discovery.

Why Talent-Only Augmentation Models Break

Most vendors still pitch AI like legacy outsourcing: “AI talent on demand.” You get a squad of data scientists, maybe someone who has shipped a model before, and you’re told they’ll unlock AI value in a few sprints. The engagement model assumes you can bolt these people onto your existing structure and process.

Here’s what usually happens instead. The new AI specialists spin up notebooks, explore data, and prototype models. They might even build something that works well in isolation. But because nobody has designed how the new AI talent integrates with your existing product engineering teams, nobody knows who owns the feature, how it gets into production, or who will maintain it.

The result: a shelf full of POCs that never ship. Product leaders are frustrated, engineering complains about technical debt, and the AI specialists quietly leave. There were no clear success metrics, no mapped dependencies, and no adapted technical leadership model to integrate AI work into the mainline roadmap.

In other words, the talent wasn’t the problem. The structure was.

Why Strong AI Talent Still Fails in Software-Era Structures

The Mismatch Between AI Workflows and Feature Factories

Most modern software teams run as feature factories. They operate on two-week sprints, fixed backlogs, and a bias toward deterministic commitments: if a ticket is sized to three points, it should be done in a sprint. This works well for UI changes, API endpoints, and bugs. It’s how agile delivery is taught and measured.

AI work doesn’t fit neatly into that model. Training a model, iterating on features, or improving performance is inherently uncertain. You may discover that your data isn’t predictive enough, your label quality is poor, or your chosen algorithm underperforms. It’s closer to product discovery and experimentation culture than to pure implementation in a software development lifecycle.

Now imagine an ML engineer working on a recommendation system while the rest of the squad is shipping urgent UI features. Every sprint planning, their work is hard to estimate, so it gets sliced or postponed. When a release crunch hits, the AI work is first to be bumped. Over time, the organization concludes that “AI is slow and risky,” reinforcing the feature factory bias.

This mismatch is a big part of why AI team augmentation fails, and why fixing team structure is a strategic question, not just a hiring one. If you don’t adapt planning, prioritization, and risk management for AI, even the best talent will look ineffective. Research from McKinsey has repeatedly found that organizational and process issues, not model quality, are among the top reasons AI initiatives stall before production (example report).

Role Confusion and Ownership Vacuums

Even when teams make “room” for AI work, organizational design problems show up fast. Who owns feature definition when a model is involved—the product manager, the data scientist, or the ML engineer? Who decides which metrics matter most: accuracy, latency, revenue impact, or user satisfaction?

Without a clear ML engineering team structure, roles blur. Backend engineers might dabble in modeling, data scientists might push code to APIs, and MLOps folks might get pulled into firefighting instead of building foundations. Classic anti-patterns emerge: “data science in the corner” building models nobody uses, a “hero ML engineer” who becomes a bottleneck, or an “AI lab” that demos cool prototypes disconnected from any real roadmap.

Consider an enterprise that sets up an AI lab with brilliant PhDs. They build sophisticated NLP models and forecasting systems in isolation. But because there is no integration pattern with cross-functional squads, no clear ownership, and no alignment with product priorities, none of it ships. The lab looks impressive in board decks, but impact is near zero.

This isn’t a skills gap. It’s a role-definition and ownership problem: roles and interfaces weren’t explicitly defined, so decisions stalled and prototypes never hardened into production systems.

Platform, Data, and Governance Debt

The final structural trap is infrastructure and governance. Many organizations bring in AI specialists long before they have a basic data platform team, coherent MLOps practices, or even clear governance and compliance guidelines for models. The result is expensive people waiting on basic plumbing.

A common vignette: data lives in scattered CSVs and ad hoc exports. An ML engineer trains a promising model locally, but there’s no pipeline to refresh data, no monitoring to track drift, and no secure path to deploy into production. When the model finally ships, it breaks silently, undermining trust and driving more technical debt.

Here, AI team augmentation doesn’t just fail to deliver; it amplifies weaknesses. You’ve multiplied the load on already fragile systems. As one industry news story about AI failures at a major bank highlighted, heavy hiring without platform readiness led to a graveyard of unmaintained models and compliance headaches (representative coverage).

Until you address platform, data, and governance debt, you’re asking AI specialists to build skyscrapers on sand.

A Readiness Checklist Before You Buy AI Team Augmentation

Team Topology and Decision Rights

Before you sign any AI augmentation consulting contract, pause and inspect your team topology. Do your product, engineering, and data roles currently make decisions together, or does each operate in its own lane? Can an ML engineer sit in a planning meeting and have real influence over scope and approach, or are they treated as a specialist to “plug in later”?

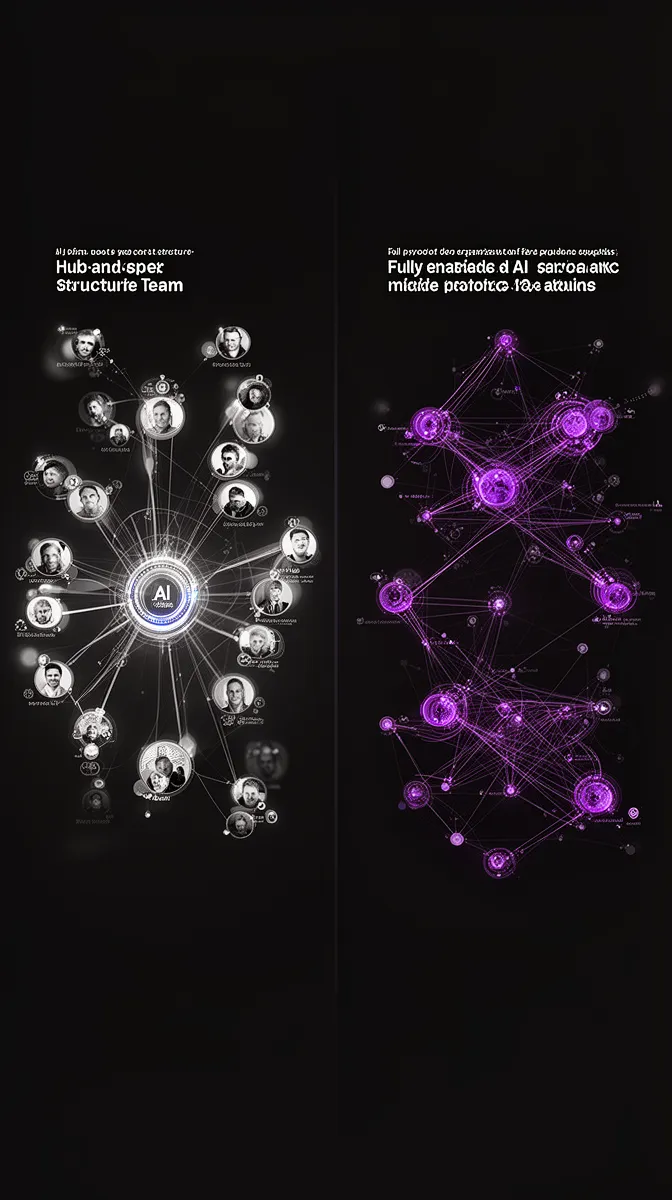

An AI-enabled topology typically follows one of two patterns: embedded specialists in cross-functional squads, or a central enablement team that partners tightly with squads. In both cases, you need explicit decision rights over data access, model behavior, and release criteria. Without that, your organizational design will produce constant friction.

Imagine three variations of a product team:

- Feature team only: PM + frontend + backend. No AI capability.

- Feature team + embedded ML engineer: AI work is integrated into backlog and rituals.

- Central AI enablement: small core team supports multiple product squads with shared components.

The last two are viable ML engineering team structure options; the first isn’t, if you expect AI features. The key is not which pattern you pick, but whether decision rights and communication paths are explicit.

Data, Platform, and MLOps Baselines

Next, assess your data and platform baseline. At minimum, you need to know where data comes from, who owns it, and how to access it safely. You also need some form of data platform team support, even if it’s part-time, to avoid one-off pipelines and broken dashboards.

Here’s a simple maturity scale for MLOps and platform readiness:

- Level 1 – Ad hoc: models trained on local machines, manual deployments, no monitoring.

- Level 2 – Basic: shared environments, scripted deployments, basic logging and metrics.

- Level 3 – Managed: CI/CD for models, automated retraining pipelines, drift and performance monitoring, integrated governance and compliance checks.
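To make the scale concrete, here is a minimal Python sketch that scores a self-assessment against the three levels above. The `PlatformSnapshot` fields and the rule that a level requires every capability listed for it are illustrative assumptions, not a formal maturity model.

```python
from dataclasses import dataclass

@dataclass
class PlatformSnapshot:
    # Hypothetical yes/no answers from a self-assessment; field names are illustrative.
    shared_environments: bool
    scripted_deployments: bool
    basic_logging: bool
    model_ci_cd: bool
    automated_retraining: bool
    drift_monitoring: bool
    governance_checks: bool

# Capabilities assumed to be required for each level of the scale.
LEVEL_2 = ["shared_environments", "scripted_deployments", "basic_logging"]
LEVEL_3 = LEVEL_2 + ["model_ci_cd", "automated_retraining", "drift_monitoring", "governance_checks"]

def has_all(snapshot: PlatformSnapshot, names: list[str]) -> bool:
    return all(getattr(snapshot, name) for name in names)

def maturity_level(snapshot: PlatformSnapshot) -> int:
    """1 = ad hoc, 2 = basic, 3 = managed."""
    if has_all(snapshot, LEVEL_3):
        return 3
    if has_all(snapshot, LEVEL_2):
        return 2
    return 1

def next_upgrades(snapshot: PlatformSnapshot) -> list[str]:
    """Capabilities still missing for the next level up, in checklist order."""
    target = {1: LEVEL_2, 2: LEVEL_3}.get(maturity_level(snapshot), [])
    return [name for name in target if not getattr(snapshot, name)]

team = PlatformSnapshot(True, True, True, False, False, True, False)
print(maturity_level(team), next_upgrades(team))
# 2 ['model_ci_cd', 'automated_retraining', 'governance_checks']
```

The useful output is less the level number than the short list of missing capabilities, which maps directly onto "what you’ll upgrade first."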

You don’t need Level 3 perfection before you start deploying models, but you do need clarity on where you are and what you’ll upgrade first. Academic and industry research on MLOps suggests that deployment and monitoring capabilities are among the strongest predictors of real-world AI impact (example study; see also Google’s work on MLOps maturity).

If your current reality is lots of CSVs and no shared environments, your first “AI project” should probably be building a minimal data and model infrastructure, not hiring a large augmentation team.

Product and Culture Signals

Finally, look at your product practices and culture. Do your teams run experiments, A/B tests, and product discovery spikes, or is everything a big-bang release with a fixed scope and date? Are leaders comfortable with probabilistic outcomes, or do they expect deterministic predictions about what will work?

An organization with a strong experimentation culture can absorb the uncertainty of AI naturally. Teams are used to failing small, iterating, and tying changes to measurable behavior. Where that culture is weak, AI projects will be judged by the same criteria as deterministic features—and will appear to “fail” more often, even when they’re generating valuable learning.

Look for culture signals: Do stakeholders ask “What will we learn?” as often as “When will it ship?” Do retrospectives include discussion of data quality and model performance, or just velocity and bugs? If not, part of your change management plan needs to be cultural, not just technical.

Designing an AI-Enabled Team Structure That Can Use Augmentation

Three Integration Patterns That Actually Work

Once you understand your baseline, you can choose an AI team augmentation model that fits. In practice, three patterns consistently work.

First, embedded specialists in product squads. This is ideal when you need AI team augmentation services for product engineering teams that are already shipping quickly and have clear AI opportunities. An ML engineer or data scientist sits directly with the team, attends standups, and co-owns outcomes.

Second, a central AI enablement team that acts as a de facto AI center of excellence. This small expert group builds shared components, platforms, and best practices that multiple squads can reuse. It’s well suited to enterprises and organizations with many product surfaces, where duplication would be costly.

Third, a hybrid hub-and-spoke model. The hub owns core infrastructure, governance, and shared models; the spokes embed AI capability into product teams. This is often the best AI team augmentation model for enterprise product teams, because it balances speed in squads with consistency across the organization.

What doesn’t work is the “AI team in the basement” pattern—an isolated group building things nobody asked for. That’s where AI labs go to die.

Clarifying Roles and Interfaces

Whatever pattern you choose, you need clarity on who does what. Machine learning engineers are responsible for end-to-end model integration, from data pipelines to serving and monitoring. Data scientists focus on problem framing, feature engineering, and exploratory analysis. MLOps specialists build and operate the infrastructure that makes all this repeatable.

On the product side, product engineering teams and product managers own user value, UX integration, and non-functional requirements. Security, legal, and governance functions define boundaries and approval processes. This is where explicit “contracts” and RACI-style maps prevent conflict and rework.

For example, in a recommendations feature team, you might define responsibilities like this: product manager owns success metrics and experiment design; ML engineer owns model performance and deployment; backend engineer owns integration and API stability; MLOps owns observability; governance ensures compliance with data-use policies. That’s organizational design work as much as technical work.
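One way to keep such a map honest is to store it as data and check it automatically. The sketch below encodes the recommendations example as a RACI table in Python. The role keys, the R/C/I assignments beyond the owners named above, and the "exactly one A per activity" rule are illustrative assumptions, not a prescribed format.

```python
# Hypothetical RACI map for the recommendations feature described above.
# R = responsible, A = accountable, C = consulted, I = informed.
RACI = {
    "success metrics": {"product_manager": "A", "ml_engineer": "C"},
    "experiment design": {"product_manager": "A", "ml_engineer": "R"},
    "model performance": {"ml_engineer": "A", "product_manager": "C"},
    "model deployment": {"ml_engineer": "A", "mlops": "R", "backend_engineer": "C"},
    "integration and API stability": {"backend_engineer": "A", "ml_engineer": "C"},
    "observability": {"mlops": "A", "ml_engineer": "C"},
    "data-use compliance": {"governance": "A", "ml_engineer": "C", "product_manager": "I"},
}

VALID_CODES = {"R", "A", "C", "I"}

def validate_raci(raci: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of problems; an empty list means the map is well formed."""
    problems = []
    for activity, roles in raci.items():
        unknown = sorted(code for code in roles.values() if code not in VALID_CODES)
        if unknown:
            problems.append(f"{activity}: unknown codes {unknown}")
        accountable = [role for role, code in roles.items() if code == "A"]
        if len(accountable) != 1:
            problems.append(f"{activity}: expected exactly one 'A', found {len(accountable)}")
    return problems

def accountable_for(raci: dict[str, dict[str, str]], activity: str) -> str:
    """Name the single accountable role for an activity."""
    return next(role for role, code in raci[activity].items() if code == "A")

print(validate_raci(RACI))                     # []
print(accountable_for(RACI, "observability"))  # mlops
```

Running a check like this in review whenever the map changes makes an ownership vacuum (zero owners) or a turf conflict (two owners) visible before it stalls a release.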

Interfaces with security and compliance should also be clear. Who signs off on a model that affects credit decisions? How are fairness and bias checks integrated into pipelines? This is where governance and compliance become operational, not just aspirational.
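To make a bias check part of the pipeline rather than a manual afterthought, a team could add a gate that compares positive-prediction rates across groups. This sketch uses demographic parity difference, which is only one of several fairness definitions; the function names and the 0.1 threshold are hypothetical and would need to be agreed with your governance and legal partners.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

def fairness_gate(predictions, groups, max_gap=0.1):
    """Return (passed, gap); max_gap is an assumed policy threshold."""
    gap = demographic_parity_gap(predictions, groups)
    return gap <= max_gap, gap

preds = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
print(fairness_gate(preds, groups))  # (False, 0.25)
```

In a CI job, a failed gate would typically be turned into a non-zero exit code so the release is blocked until someone with sign-off authority reviews it.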

Adapting Agile, Ceremonies, and Delivery Pipelines

Next, you must adapt process. Classic agile ceremonies assume that work is deterministic and easily estimable. AI work often isn’t, so you need a dual-track approach: an experimentation track and a hardening/production track.

In the experimentation track, tasks look like “test three feature sets for uplift” or “explore new model architectures”—with outcomes framed as learning, not delivery. In the production track, tasks are about integrating, testing, and deploying a chosen model using robust delivery pipelines and CI/CD.
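For an experimentation-track task such as "test three feature sets for uplift," the learning outcome can be summarized with a standard two-proportion z-test. The sketch below is one simple way to do that in plain Python. The conversion counts are made up, and the Bonferroni correction (dividing alpha by the number of variants) is a conservative choice assumed here, not the only option.

```python
import math

def uplift_test(control_conv, control_n, variant_conv, variant_n):
    """Two-proportion z-test. Returns (relative uplift, z statistic, two-sided p-value).

    Assumes the control conversion rate is non-zero.
    """
    p_c = control_conv / control_n
    p_v = variant_conv / variant_n
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    z = (p_v - p_c) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return (p_v - p_c) / p_c, z, p_value

def compare_variants(control, variants, alpha=0.05):
    """Test each variant against control, Bonferroni-correcting alpha for the number of variants."""
    threshold = alpha / len(variants)
    report = {}
    for name, (conv, n) in variants.items():
        uplift, z, p = uplift_test(control[0], control[1], conv, n)
        report[name] = {"uplift": round(uplift, 3), "p_value": p, "significant": p < threshold}
    return report

# Hypothetical results for three candidate feature sets: (conversions, users).
control = (100, 1000)
variants = {"feature_set_a": (130, 1000), "feature_set_b": (101, 1000), "feature_set_c": (160, 1000)}
for name, row in compare_variants(control, variants).items():
    print(name, row["uplift"], f"p={row['p_value']:.4f}", row["significant"])
```

Note that feature set A shows a 30% relative uplift yet does not clear the corrected threshold. That is exactly the kind of "learning, not delivery" result the experimentation track is meant to surface.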

On your sprint board, this might mean separate swimlanes for data exploration, model training, and productionization, each with its own definition of done. Your software development lifecycle expands to include model versioning, canary rollouts, and offline/online evaluation. This is also where change management is crucial: teams need coaching to treat models as living systems, not static features.

From a tooling perspective, CI systems must know how to build, test, and deploy models alongside code. Monitoring needs to track prediction quality, drift, and business impact. Without these adaptations, ai team augmentation will be hamstrung by process friction, no matter how good the people are.
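As an example of what "track drift" can mean in practice, the sketch below computes a Population Stability Index (PSI) between a reference sample of a feature or model score and a live sample. Equal-width bins, the epsilon floor, and the 0.1 / 0.25 cut-offs are common rules of thumb assumed here; quantile bins and other thresholds are equally defensible.

```python
import math

def psi(reference, live, bins=10, eps=1e-6):
    """Population Stability Index using equal-width bins over the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

def drift_status(score):
    """Map a PSI score to a label using widely quoted rule-of-thumb cut-offs."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"

reference = [i / 100 for i in range(100)]       # training-time distribution
live = [0.5 + i / 200 for i in range(100)]      # live traffic shifted upward
print(drift_status(psi(reference, reference)))  # stable
print(drift_status(psi(reference, live)))       # significant drift
```

A scheduled job that computes this per feature and alerts on "significant drift" is one inexpensive way to keep a shipped model from breaking silently.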

Building a Structural Adaptation Roadmap for AI Augmentation

Phase 1: Assessment and Quick Wins

Rather than jumping straight into hiring, treat a consulting-led assessment of team structure readiness as Phase 1. Over 4–6 weeks, you can map team topology, architecture, workflows, and current AI initiatives. This is effectively an AI readiness assessment for both the organization and the platform.

The output should be concrete: a prioritized list of structural gaps, a skills gap analysis, and quick wins. Quick wins might include clarifying ownership for a critical AI feature, cleaning a key dataset, or setting up a basic MLOps pipeline that unblocks several potential models.

At Buzzi.ai, we formalize this as an AI discovery and structural readiness assessment. The goal isn’t a glossy report; it’s a practical blueprint that de-risks any subsequent augmentation investment, whether you work with us or not.

Phase 2: Pilot AI-Enabled Team

Phase 2 is to pilot an AI-enabled team. Rather than transforming the entire organization, pick one product surface where AI can move a clear metric—say, search relevance or lead scoring. Then, form a pilot squad with embedded AI capability and explicit structural changes.

This is where AI/ML team structure and augmentation strategy converge for startups and larger organizations alike: start small, learn fast. Define success not just as “model in production,” but as “team can repeatedly take AI ideas from concept to shipped feature.” Include experimentation culture goals, such as the number of experiments run per quarter.

For example, you might pilot an AI-powered search ranking inside one app. A dedicated ML engineer works with the squad; the squad adjusts its ceremonies to include model reviews; stakeholders agree on evaluation metrics and acceptable risk. This pilot becomes the template for future AI-enabled teams.

Phase 3: Scale and Standardize

Once the pilot proves the pattern, Phase 3 is scale and standardize. Here, you codify what worked into playbooks, templates, and governance mechanisms. You might document model review checklists, standardize deployment templates, and formalize roles for AI champions in each squad.

As adoption grows, you’re likely to move toward a more formal AI center of excellence and platform team, especially in larger organizations. This is where enterprise AI implementation concerns like shared governance, cross-team dependencies, and compliance reviews become central. Your change isn’t just technical; it’s organizational.

The point is that governance and compliance emerge from repeated practice, not from a one-time policy. Scaling AI means scaling the structures that let AI team augmentation work reliably across teams, not just cloning one successful pilot.

Measuring Whether AI Team Augmentation Is Working

Leading Indicators, Not Just ROI

When leaders ask about AI investments, they tend to jump straight to ROI. That’s understandable but dangerous: real return often lags by months or years, and attribution can be fuzzy. If you only track revenue impact, you’ll kill promising initiatives too early.

Instead, define AI initiative success metrics that include leading indicators. Examples: number of AI experiments run per quarter, time from model idea to first production deployment, and percentage of models with proper monitoring. You might also track the share of features that use predictive components or automation.
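These indicators are simple enough to compute from whatever tracker a team already uses. The sketch below assumes a hypothetical `ModelRecord` shape and measures "percentage of models with proper monitoring" over models that have reached production; both choices are illustrative.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional

@dataclass
class ModelRecord:
    # Hypothetical record; adapt to the fields your tracker actually stores.
    name: str
    idea_date: date
    first_deploy: Optional[date]  # None if the model never reached production
    monitored: bool

def experiments_per_quarter(start_dates: list[date]) -> dict[tuple[int, int], int]:
    """Count experiments by (year, quarter)."""
    return dict(Counter((d.year, (d.month - 1) // 3 + 1) for d in start_dates))

def leading_indicators(models: list[ModelRecord], experiment_starts: list[date]) -> dict:
    shipped = [m for m in models if m.first_deploy is not None]
    lead_times = [(m.first_deploy - m.idea_date).days for m in shipped]
    return {
        "experiments_per_quarter": experiments_per_quarter(experiment_starts),
        "median_days_idea_to_production": median(lead_times) if lead_times else None,
        "pct_production_models_monitored": (
            100 * sum(m.monitored for m in shipped) / len(shipped) if shipped else None
        ),
    }

models = [
    ModelRecord("search-ranking", date(2024, 1, 10), date(2024, 3, 10), monitored=True),
    ModelRecord("lead-scoring", date(2024, 2, 1), date(2024, 2, 29), monitored=False),
    ModelRecord("churn-risk", date(2024, 3, 1), None, monitored=False),
]
starts = [date(2024, 1, 5), date(2024, 2, 9), date(2024, 4, 2)]
print(leading_indicators(models, starts))
```

Using the median rather than the mean for idea-to-production time keeps one long-stalled model from hiding steady improvement elsewhere.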

Over time, these connect to business outcomes like conversion rate, churn, or cost to serve. But in the first 6–12 months of AI process automation or predictive analytics development, capability-building metrics are better predictors of long-term success.

What to Track at Team vs Organization Level

You’ll also want to separate team-level metrics from organization-level ones. At the team level, track things like cycle time for AI features, deployment frequency for models, and experiment win rate. These help teams tune their own workflows and identify bottlenecks.
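The team-level metrics above can be computed from a simple monthly snapshot per squad, which also makes side-by-side comparison easy. In the sketch below, the `SquadMonth` fields, the definition of a "won" experiment, and flagging the squad with the longest median cycle time as the first place to look for bottlenecks are all assumptions for illustration.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class SquadMonth:
    # Hypothetical monthly snapshot for one squad.
    squad: str
    cycle_times_days: list[float]  # idea-to-release time for each AI feature shipped
    model_deployments: int         # production model releases this month
    experiments_run: int
    experiments_won: int           # experiments where the variant beat control

def team_metrics(s: SquadMonth) -> dict:
    return {
        "median_cycle_time_days": median(s.cycle_times_days) if s.cycle_times_days else None,
        "model_deployments": s.model_deployments,
        "experiment_win_rate": s.experiments_won / s.experiments_run if s.experiments_run else None,
    }

def steering_view(snapshots: list[SquadMonth]):
    """Per-squad metrics plus the squad with the longest median cycle time."""
    rows = {s.squad: team_metrics(s) for s in snapshots}
    timed = [name for name, r in rows.items() if r["median_cycle_time_days"] is not None]
    slowest = max(timed, key=lambda name: rows[name]["median_cycle_time_days"], default=None)
    return rows, slowest

rows, slowest = steering_view([
    SquadMonth("search", [10, 20, 30], model_deployments=4, experiments_run=5, experiments_won=2),
    SquadMonth("pricing", [5, 7], model_deployments=8, experiments_run=4, experiments_won=1),
])
for squad, metrics in rows.items():
    print(squad, metrics)
print("first place to look for bottlenecks:", slowest)
```

A low win rate is not automatically bad: a squad running bolder experiments may win less often but learn more, so these numbers are prompts for conversation rather than a ranking.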

At the organizational level, track AI feature adoption, incident rates, and compliance exceptions. These metrics tell you whether your organizational design and governance are scaling. They’re also key inputs to leadership decisions about where to place more AI team augmentation resources.

For example, a monthly steering meeting might review the metrics that signal successful AI team augmentation, comparing squads on experiment velocity, model uptime, and business impact. This creates a feedback loop where structure and process improve based on data, not anecdote. Stakeholder alignment then becomes a matter of reading the same dashboard, not debating opinions.

How Startups vs Enterprises Should Approach AI Team Augmentation

Startups: Bias for Embedded, Multi-Hat Roles

Startups face a different set of constraints. They don’t have the luxury of big central teams or heavy governance, but they often have cleaner architectures and faster decision-making. For them, the right AI/ML team structure and augmentation strategy is usually lightweight and embedded.

That means hiring or augmenting with multi-hat ML engineers who can handle data, modeling, and integration, working directly inside product engineering teams. Governance is light-touch but explicit, and experimentation culture is strong. The focus is on learning loops: ship small AI-powered improvements, measure, iterate.

What startups should avoid is overbuilding a central AI function too early. A big AI group without clear product hooks becomes a cost center searching for a problem. For startups, the best structure is the one that keeps AI as close as possible to users and outcomes.

Enterprises: Design for Governance and Scale

Enterprises, by contrast, must design for scale, regulation, and coordination across many teams. Here, the best AI team augmentation model for enterprise product teams is usually hybrid: strong central platform and governance, plus embedded AI capability in key product lines.

A central AI center of excellence defines standards, manages shared infrastructure, and works with risk, legal, and compliance. Product-aligned squads then consume these capabilities to drive enterprise AI use cases: personalization, fraud detection, forecasting, and more. This is classic enterprise AI implementation.

Authoritative best-practice guides on AI governance, such as those from the OECD and NIST, emphasize clear roles, risk assessments, and lifecycle management (NIST AI RMF). Augmentation partners that understand this will help you build governance into the structure, not bolt it on later.

Choosing an AI Augmentation Partner That Fixes Structure, Not Just Headcount

Questions to Qualify Vendors

When evaluating AI team augmentation services, your goal is to separate vendors who sell bodies from partners who design systems. The simplest way is to ask better questions.

Here’s a due diligence list:

- How do you assess our structural readiness before placing AI talent?

- What does your consulting process for assessing team structure readiness look like in practice?

- How do you integrate with existing teams, rituals, and codebases?

- Can you share examples of how you’ve adapted AI staffing models to different organizations?

- What metrics do you track to know if augmentation is working?

- How do you handle knowledge transfer and avoid long-term vendor lock-in?

- What’s your approach to governance and compliance when AI features are high-risk?

- How do you manage change for product managers and engineers who are new to AI?

- Can you support both AI specialists and related workflow automation work?

- What happens if we discover structural issues mid-engagement—how do you respond?

If a vendor only talks about hourly rates and resumes, they’re not prepared to help you redesign the system. And that’s the system your AI talent will live in.

How Buzzi.ai’s Augmentation Model Reduces Risk

At Buzzi.ai, we’ve built our model around structure-first augmentation. We start with a readiness and integration assessment, not a staffing proposal. That lets us see how to integrate AI specialists into existing software development teams without breaking what already works.

From there, we embed AI agents and specialists into your workflows—not beside them. Because we also build AI agent development and integration solutions, voice bots, and workflow automation, we think in systems: data flows, decision points, user journeys. Augmentation is one tool in that broader toolkit.

For one client with a stalled recommendation project, this approach meant starting by clarifying ownership, cleaning core datasets, and standing up minimal MLOps. Only then did we place an ML engineer alongside the feature team. Within a few months, they had models in production, an adapted process, and a template for other teams to follow: proof that AI team augmentation services for product engineering teams work when the structure is ready.

If you’re considering AI consulting services, the most valuable step you can take is to insist on this kind of structural lens. It turns augmentation from a gamble into a compounding capability.

Conclusion: Fix the System Before You Add Talent

When AI team augmentation fails, it’s tempting to blame the talent. But the deeper issue is almost always structural: team topology, data and platform maturity, and cultural readiness. Treating AI as “just more headcount” guarantees stranded prototypes and cynical teams.

If you focus first on readiness across team design, platform, and culture, you create an environment where augmented AI talent can actually ship. Clear roles, adapted workflows, and embedded governance make AI talent integration sustainable. That’s the core answer to why AI team augmentation fails and how to fix team structure: fix the system, then add the specialists.

The leaders who win with AI will be the ones who treat augmentation as a structural design problem, not a purchasing decision. If you’re about to sign a staffing contract, consider pausing and starting instead with a short, focused structural assessment. We’d be glad to run an AI discovery and structural readiness assessment with you—or simply use the ideas here to map your own gaps, then augment with confidence.

FAQ

What is AI team augmentation and how is it different from traditional software staff augmentation?

AI team augmentation means adding AI-focused roles—like ML engineers, data scientists, and MLOps specialists—to extend your product capabilities, not just your capacity. Unlike traditional software staff augmentation, these roles introduce new workflows around data, experimentation, and model lifecycle. You’re adding a new capability layer that must be integrated into product and engineering structures, not just more people to the same backlog.

Why do AI team augmentation initiatives fail even when the AI talent is strong?

They usually fail because the surrounding system—team topology, data platform, and governance—isn’t ready to support AI work. Strong specialists end up blocked by missing data pipelines, unclear ownership, or processes that can’t handle probabilistic outcomes. Without structural readiness, even top-tier talent will produce impressive prototypes that never reach or survive in production.

How can I assess whether my current product and engineering structure is ready for AI team augmentation?

Start by examining three dimensions: team topology, platform/data, and culture. Ask whether product, engineering, and data roles make joint decisions; whether you have basic shared data infrastructure and MLOps; and whether your teams already practice experimentation and product discovery. A focused AI readiness assessment—like Buzzi.ai’s AI discovery and structural readiness assessment—can turn these questions into a concrete, prioritized roadmap.

Which AI and ML roles are essential in an AI-enabled product team, and how should they work with existing developers?

Core roles usually include a machine learning engineer, a data scientist (for more complex problems), and someone responsible for MLOps and infrastructure. They should work directly with product managers, backend/frontend engineers, and designers in a cross-functional squad. The key is clear responsibility boundaries and shared ownership of outcomes, so AI work is part of the main product flow, not an external service.

What integration patterns for AI specialists work best in real product organizations?

Three patterns consistently work: embedded AI specialists in product squads, a central AI enablement team, and a hybrid hub-and-spoke model. Embedded patterns maximize speed and product alignment, while central or hybrid models suit enterprises that need shared platforms and governance. The wrong pattern is an isolated “AI lab” disconnected from real roadmaps and decision-making.

How should agile practices and development workflows change when AI work is involved?

Agile practices need to make room for uncertainty and learning. That often means introducing a dual-track approach: an experimentation track for model exploration and a production track for integration and hardening. Sprints should include experimentation tasks with learning-focused outcomes, and your pipelines must extend beyond code to include model versioning, evaluation, and monitoring.

What data, platform, and MLOps prerequisites should be in place before investing in AI team augmentation?

At a minimum, you need clear data sources and ownership, a shared environment for experimentation, and basic MLOps capabilities like reproducible training and simple deployments. You don’t need a perfect platform, but you do need to avoid ad hoc CSVs and one-off scripts as your main data pipeline. As readiness grows, you can invest in more advanced CI/CD for models, automated retraining, and integrated governance checks.

What metrics and leading indicators should we track to know if AI team augmentation is succeeding?

Track both capability-building and business outcomes. Leading indicators include experiment velocity, time from idea to first model in production, and the percentage of models with proper monitoring. Over time, connect these to business metrics like conversion, churn, or cost to serve, but avoid judging early-stage efforts purely on lagging ROI.

How should startups versus enterprises think differently about AI team structures and augmentation?

Startups should favor lightweight, embedded structures with multi-hat ML engineers working directly in product teams, optimizing for speed and learning. Enterprises need hybrid structures with a central platform and governance layer plus embedded AI capability in key product lines. The structural constraints are different, but the principle is the same: design the system around AI work before you increase headcount.

What questions should I ask an AI augmentation vendor to ensure they help with structural readiness, not just staffing?

Ask how they assess your current team and platform, how they integrate with existing squads and rituals, and which metrics they use to measure success. Probe their approach to governance, knowledge transfer, and change management for non-AI stakeholders. If they can’t speak concretely about structure, they’re likely selling staff, not a sustainable AI capability.