Stop Failing at AI for Predictive Maintenance: Fix the Data First

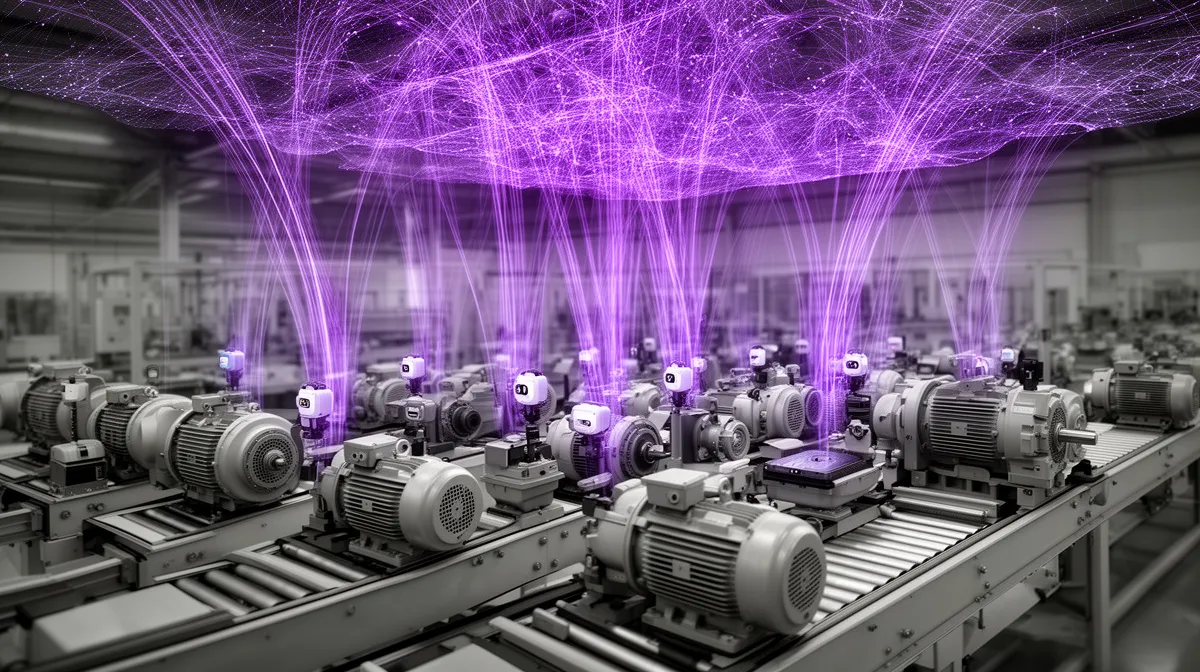

Learn how AI for predictive maintenance succeeds only when sensor data is complete and reliable. Discover a staged, data-first roadmap manufacturers can use.

Most AI for predictive maintenance projects don’t fail because the data science is weak. They fail because sensor data in real plants is incomplete, noisy, and too inconsistent to trust for day-to-day decisions.

If you run a brownfield manufacturing plant, you’ve probably seen this movie. A vendor runs a slick demo on a beautifully instrumented pilot line, the predictive maintenance AI looks magical, and then everything collapses when you try to roll it out across older lines with spotty sensors and messy industrial IoT data.

Dashboards that show current vibration or temperature are useful, but they’re not the same as a robust system that can reliably forecast failures with enough lead time to act. The uncomfortable truth is that predictive maintenance AI lives or dies on sensor data quality, coverage, and history—not on the latest algorithm hype.

In this article, we’ll walk through a data-realistic playbook for AI for predictive maintenance in brownfield manufacturing plants. You’ll learn how to assess data readiness, design a pragmatic sensor strategy, and stage AI deployment so that each step delivers value using the sensor data you actually have. We’ll also show how we at Buzzi.ai start with brutal data reality—auditing sensor coverage and data quality—before we ever talk about models.

Why AI for Predictive Maintenance Fails in Real Plants

The Algorithm Isn’t Your Main Problem—Data Is

Most vendor demos for predictive maintenance AI are built on carefully curated datasets. Every sensor is in the right place, sampling at a perfect rate, with clean labels for every failure event. That world is the exception, not the rule.

In real plants, sensor streams are full of gaps, noise, and misaligned timestamps. A vibration sensor might mysteriously go flat every weekend. A temperature sensor might drift slowly over months. Historian outages and PLC reboots create holes that failure prediction models interpret as real behavior.

The result is fragile models. They look great in a POC where the data set is cherry-picked, but they break when exposed to the full reality of your industrial IoT data. We’ve seen a manufacturer get stellar results predicting bearing failures on one well-instrumented line—only to watch the model fail on other lines where sensors were installed later, tags were named differently, and large chunks of history were missing.

This isn’t an isolated story. Industry analyses from firms like McKinsey repeatedly find that data foundations, not AI algorithms, are the biggest barrier to scaling predictive maintenance. If your sensor data quality is weak, even the best failure prediction models and anomaly detection techniques will underperform.

Common Data Pitfalls in Manufacturing Environments

Walk through almost any brownfield manufacturing plant and you’ll see the same patterns. The equipment may be different, but the data problems rhyme.

Typical issues include:

- Sensor coverage gaps on critical components (e.g., bearings on only one end of a motor, or no vibration sensors on key pumps).

- Irregular logging frequency, where some tags are logged every second, others every 10 seconds, and some only on state change.

- Manual overrides and local PLC hacks that never make it into the historian or CMMS.

- PLC tag changes that silently break historian logging, leaving months of missing data.

- Historian downtime or storage limits that truncate old data just when you need historical failure data the most.

- Fragmented records spread across multiple historians, PLCs, spreadsheets, and maintenance systems.

- No consistent standard for tag names, units, or sampling rates—classic weak data governance in manufacturing.

Each of these might seem like a small nuisance on its own. Together, they make it extremely hard to build a reliable picture of asset health monitoring across your plant, let alone train accurate predictive maintenance AI models.

Greenfield Myths vs. Brownfield Reality

Many success stories for AI for predictive maintenance quietly assume a greenfield environment: brand-new equipment, fully instrumented, with standardized sensors and a modern historian from day one. It’s a nice fantasy.

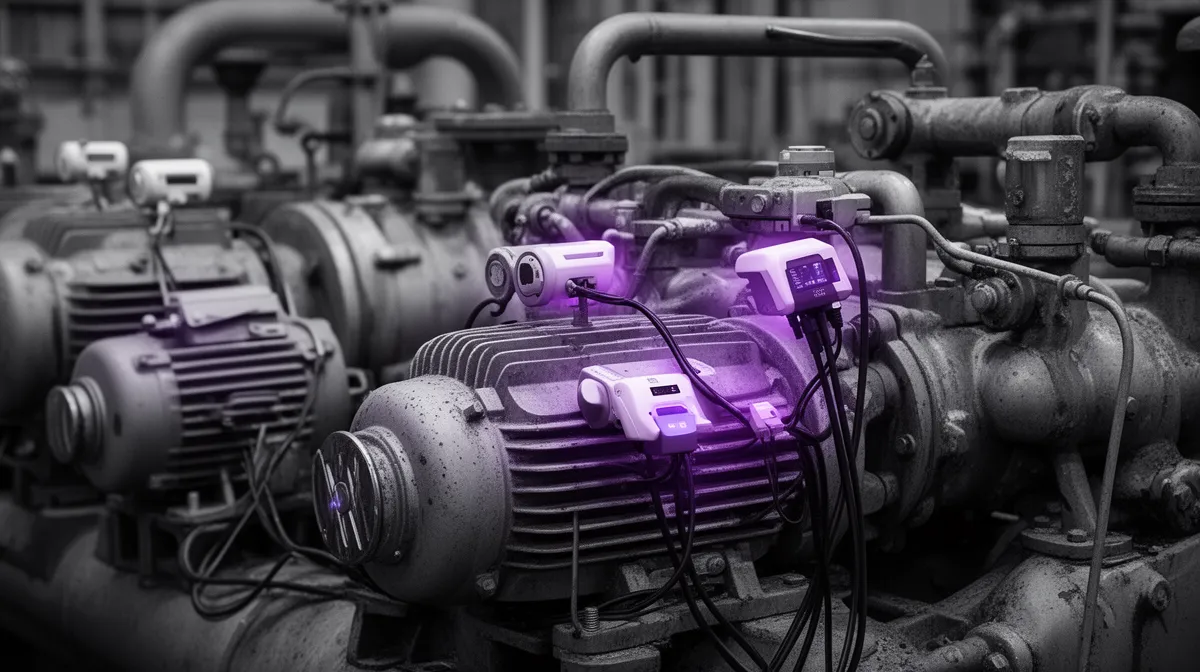

The reality in most brownfield manufacturing plants is very different. You’re dealing with legacy equipment monitoring, partial retrofits, limited documentation, and sensors added over years by different vendors and internal teams. Some assets are well-covered; others run blind.

Trying to apply a greenfield playbook to this environment is a recipe for frustration. Instead, you need a predictive maintenance strategy that starts from the data you actually have, accepts its limitations, and then charts a stepwise path forward. That path may begin with simple condition monitoring and anomaly detection on a subset of assets, then progress to more advanced models as you close coverage gaps and improve data quality.

What Data AI for Predictive Maintenance Really Needs

Core Data Types: Signals, Events, and Context

Effective AI for predictive maintenance is built on three pillars of data: signals, events, and context. Signals are your condition monitoring streams—vibration sensors, temperature sensors, current, pressure, flow, and other physical measurements. Events capture what actually happened: failures, trips, maintenance work orders, inspections, and root cause analysis outcomes.

Context is everything around those signals and events: operating mode, throughput, product recipes, ambient conditions, and shift patterns. Failure prediction models need to know not just what the machine “felt,” but what the outcome was and under what conditions it was running. This is how models distinguish between “high vibration because we’re at max throughput” and “high vibration because something is breaking.”

For example, imagine three assets: a pump, a motor, and a compressor. For each, your signal layer might include vibration (overall and banded), bearing and winding temperatures, motor current, and discharge pressure. Your event layer would include unplanned stops, component replacements, and detailed maintenance work orders. Context could include flow rate, suction/discharge temperatures, valve positions, and the specific product being run. Together, these layers form a usable foundation for predictive maintenance AI.

Real-time streaming data from sensors usually lives in PLCs, edge devices, or historians, while slower-moving data like CMMS logs or ERP orders may update hourly or daily. A good data architecture for industrial IoT data makes it possible to line up these different time scales so you can look back and say, “What did the machine look like in the hours and days before this failure?”
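
To make that look-back question concrete, here is a minimal sketch in plain Python. The readings, the failure timestamp, and the `window_before` helper are all hypothetical; in a real plant the readings would come from your historian and the event from your CMMS.

```python
from datetime import datetime, timedelta

# Hypothetical hourly bearing-temperature readings: (timestamp, degC).
readings = [(datetime(2024, 3, 1, h), 60.0 + 0.5 * h) for h in range(24)]

# Hypothetical failure time taken from a CMMS work order.
failure_time = datetime(2024, 3, 1, 20, 30)


def window_before(readings, event_time, lookback):
    """Return readings with timestamps in [event_time - lookback, event_time)."""
    start = event_time - lookback
    return [(ts, value) for ts, value in readings if start <= ts < event_time]


# Everything the sensor reported in the six hours before the failure.
pre_failure = window_before(readings, failure_time, timedelta(hours=6))
for ts, value in pre_failure:
    print(ts.isoformat(), value)
```

The same helper works for slower data sources, as long as every source is converted to a common clock before you slice it.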

Condition Monitoring vs. Predictive Maintenance AI

It’s important to distinguish between basic condition monitoring and true predictive maintenance AI. Condition monitoring typically uses thresholds: if vibration exceeds X or temperature exceeds Y, raise an alarm. Many plants already have this type of monitoring, even if it’s just OEM-provided software on a local workstation.

Predictive maintenance AI is different. Instead of just checking whether a current reading is above a fixed limit, it learns patterns over time—how vibration signatures evolve as bearings wear, how temperature profiles change when lubrication degrades, and how combinations of signals foreshadow specific failure modes. This is where failure prediction models and advanced anomaly detection start to shine.

Consider a machine where current condition monitoring triggers an alarm when bearing temperature exceeds 90°C. That’s useful but reactive; you’re already close to trouble. A predictive model, trained on months of historical failure data, might detect a subtle increase in vibration at a particular frequency band combined with a slight rise in temperature during certain loads—giving you days of warning before that 90°C threshold is ever crossed.
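
The gap between the two approaches shows up even in a toy example. The sketch below is not a trained predictive model; it swaps in a simple least-squares trend check to show how a rising pattern can be flagged while a fixed 90°C limit stays silent. The daily temperatures and the 0.3°C-per-day trend limit are assumptions for illustration.

```python
def slope_per_step(values):
    """Least-squares slope of the values against their index."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


# Hypothetical daily bearing temperatures (degC) over one week.
temps = [70.0, 70.5, 71.0, 71.5, 72.0, 72.5, 73.0]

THRESHOLD_DEGC = 90.0   # fixed alarm limit from the example above
TREND_LIMIT = 0.3       # assumed limit, in degC per day

threshold_alarm = any(t > THRESHOLD_DEGC for t in temps)
trend_alarm = slope_per_step(temps) > TREND_LIMIT

print("threshold alarm:", threshold_alarm)
print("trend alarm:", trend_alarm)
```

Here the threshold alarm never fires, while the trend check does. A real model would look at several signals and operating context together, but the principle is the same.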

Realistic Minimum Viable Data Sets

So what data is needed for AI-based predictive maintenance to be practical? There’s no single answer, but there are realistic ranges. For rotating equipment like motors, pumps, and fans, a common starting point is months of 1–10 Hz vibration and temperature data, plus several failure or near-failure events per asset class.

Conveyors may need lower sampling rates but benefit from additional context like start/stop cycles, load, and jam events. Compressors may justify higher-frequency vibration on specific components but fewer contextual tags. Think of these as guidelines, not rigid rules: the right mix depends on criticality, failure modes, and how stable the process is.

If you don’t yet have much historical failure data, you’re not blocked. You can still start with anomaly detection and rule-based models while you build up history. The key is to be explicit about your sensor coverage gaps and known data quality issues, and to design models that can tolerate those realities. Academic and industrial surveys, such as those summarized on ScienceDirect’s predictive maintenance overview, emphasize that “perfect” data is rare; the goal is to know your limitations and work within them.

For further guidance, standards like ISO 10816 for vibration evaluation (now being progressively replaced by the ISO 20816 series) provide reference points for measurement and interpretation. They don’t replace a plant-specific strategy, but they are useful anchors when designing minimum viable data sets for condition monitoring and predictive maintenance AI.

Run a Predictive Maintenance Data Readiness Assessment

Map Assets, Sensors, and Failure Modes

The fastest way to stop guessing about AI readiness is to run a structured data readiness assessment. Start by mapping your assets: build or refine an asset hierarchy that reflects lines, systems, and critical equipment. Then, for each critical asset, list the sensors you already have and the known failure modes you care about.

Next, prioritize assets by business impact—downtime cost, safety risk, quality impact, and regulatory implications. A small auxiliary pump on a non-critical system shouldn’t have the same priority as the main compressor on your bottleneck line. This exercise often reveals that a handful of assets drive a disproportionate share of lost production and maintenance spend.

From here, you can build a simple asset–sensor–failure matrix for one production line. Rows are assets, columns are key failure modes, and cells capture what condition monitoring signals you have (and don’t have) for detecting those failures. Suddenly it becomes clear where AI for predictive maintenance can realistically start, and where sensor gaps must be closed before advanced models make sense.
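
A spreadsheet is enough for this matrix, but it can also be sketched in a few lines of Python. The asset names, failure modes, and signal lists below are hypothetical placeholders; your reliability engineers would supply the real ones.

```python
# Hypothetical: which signals can reveal each failure mode.
signals_for_failure = {
    "bearing wear": {"vibration", "bearing_temp"},
    "winding overheating": {"winding_temp", "motor_current"},
    "cavitation": {"vibration", "discharge_pressure"},
}

# Hypothetical: sensors already installed on each asset of one line.
installed = {
    "pump_P101": {"discharge_pressure", "motor_current"},
    "motor_M101": {"vibration", "bearing_temp", "motor_current"},
}

# Hypothetical: failure modes you care about per asset.
relevant = {
    "pump_P101": ["bearing wear", "cavitation"],
    "motor_M101": ["bearing wear", "winding overheating"],
}


def coverage_matrix(installed, relevant, signals_for_failure):
    """For each (asset, failure mode) cell, list the signals present and missing."""
    matrix = {}
    for asset, modes in relevant.items():
        for mode in modes:
            needed = signals_for_failure[mode]
            have = needed & installed[asset]
            matrix[(asset, mode)] = {
                "have": sorted(have),
                "missing": sorted(needed - have),
            }
    return matrix


matrix = coverage_matrix(installed, relevant, signals_for_failure)
for (asset, mode), cell in matrix.items():
    print(f"{asset:12} {mode:22} have={cell['have']} missing={cell['missing']}")
```

Cells with an empty `missing` list are candidates for early modeling; cells with an empty `have` list are sensor gaps to close first.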

If you prefer a partner for this process, Buzzi.ai’s AI Discovery and data readiness assessment is designed to walk your team through exactly this mapping, grounded in hard numbers instead of wishful thinking.

Audit Data Quality: Completeness, Noise, and Bias

Once you know what should be measured, you need to understand the quality of what is actually measured. A basic sensor data quality audit starts with completeness: what percentage of time do you have valid readings for your key tags? A simple plot of missing data over time for a critical vibration sensor can be surprisingly revealing.
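
As a rough illustration, completeness can be computed as the share of expected sampling slots that contain at least one reading. The `completeness` function and the simulated outage below are assumptions for the sketch, not a standard metric definition.

```python
from datetime import datetime, timedelta


def completeness(timestamps, start, end, expected_interval):
    """Fraction of expected sampling slots in [start, end) with at least one reading."""
    n_slots = (end - start) // expected_interval
    filled = {
        (ts - start) // expected_interval
        for ts in timestamps
        if start <= ts < end
    }
    return len(filled) / n_slots


start = datetime(2024, 3, 1, 0, 0)
end = start + timedelta(hours=1)

# One reading per minute, with a simulated 15-minute historian outage.
timestamps = [
    start + timedelta(minutes=m) for m in range(60) if not 20 <= m < 35
]

ratio = completeness(timestamps, start, end, timedelta(minutes=1))
print(f"{ratio:.0%} complete")  # 75% complete
```

Running this per tag and per day gives you the missing-data plot described above.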

Next, check timestamp consistency and unit consistency. Are all sensors on a line synchronized to the same clock, or do some drift by minutes or hours? Are temperatures always in the same unit? Do ranges make physical sense, or are there clear outliers and flat-lines suggesting sensor failures or historian issues?
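
Two of these checks, physical range and flat-lines, are simple enough to sketch directly. The limits, the minimum run length, and the sample readings are hypothetical and would need tuning for each sensor type.

```python
def out_of_range(values, low, high):
    """Indices of readings outside the physically plausible range [low, high]."""
    return [i for i, v in enumerate(values) if not (low <= v <= high)]


def flatline_runs(values, min_length, tolerance=0.0):
    """(start, end) index pairs, end exclusive, of runs of at least min_length
    samples in which each reading differs from the previous one by at most
    tolerance."""
    runs = []
    start = 0
    for i in range(1, len(values) + 1):
        if i == len(values) or abs(values[i] - values[i - 1]) > tolerance:
            if i - start >= min_length:
                runs.append((start, i))
            start = i
    return runs


# Hypothetical temperature readings in degC: a stuck value, then a spike.
values = [71.2, 71.4, 71.3, 71.3, 71.3, 71.3, 71.3, 250.0, 71.5]

print(out_of_range(values, low=-40.0, high=150.0))  # [7]
print(flatline_runs(values, min_length=4))          # [(2, 7)]
```

A flat-line is not always a fault (a machine at rest can read a constant value), so results like these are prompts for a closer look rather than verdicts.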

Then, look for bias in your industrial IoT data. For example, perhaps your compressor vibration data mostly comes from normal production hours, while many failures actually happen during night shifts or weekend changeovers. In that case, your anomaly detection or prediction models will be overconfident on the data they’ve seen and blind to the conditions that really matter. Robust predictive maintenance AI software bakes in automated sensor data quality checks—missing-data alerts, drift detection, timestamp validation—so these issues are surfaced early instead of sabotaging models later.

Evaluate Historical Failures and Labels

The final piece of the assessment is your event history. You need reliable labels: clear, consistent recording of failures, maintenance work orders, and root cause analysis outcomes. Many plants discover that CMMS codes like “noisy,” “stopped,” or “won’t start” are too vague to be useful without additional context.

One manufacturer we worked with had years of CMMS logs but little structure. By pairing those logs with operator notes and historian data, we helped them cluster events into a few dominant failure modes for a critical motor class. That transformed messy text into usable labels for supervised models and more focused asset performance management.

As a rule of thumb, you need dozens of well-labeled failures (or near-failures) per asset family before supervised prediction becomes reliable. Below that, anomaly detection and rules are usually more appropriate. A good data readiness assessment will make this explicit, so you don’t overpromise what AI for predictive maintenance can deliver on day one.
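
One way to make this explicit is to count usable labels per asset family and let the count suggest the approach. In the sketch below, the work orders are invented, the vague codes are the examples mentioned above, and the cutoff of 24 is only one assumed reading of “dozens”; pick a number that fits your own experience.

```python
from collections import Counter

# Codes too vague to serve as failure labels (examples from the text above).
VAGUE_CODES = {"noisy", "stopped", "won't start"}

# Assumption: one possible interpretation of "dozens" of labeled failures.
MIN_LABELS_FOR_SUPERVISED = 24

# Hypothetical CMMS extract: (asset family, failure code).
work_orders = (
    [("motor", "bearing failure")] * 30
    + [("motor", "noisy")] * 12
    + [("pump", "seal leak")] * 5
    + [("pump", "stopped")] * 9
)


def usable_label_counts(work_orders):
    """Count work orders per asset family, ignoring vague failure codes."""
    return Counter(
        family for family, code in work_orders if code not in VAGUE_CODES
    )


def recommended_approach(n_labels):
    """Suggest a modeling approach from the number of usable labels."""
    if n_labels >= MIN_LABELS_FOR_SUPERVISED:
        return "supervised prediction"
    return "anomaly detection and rules"


counts = usable_label_counts(work_orders)
plan = {family: recommended_approach(n) for family, n in counts.items()}
print(plan)
```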

Design a Sensor and Data Strategy for Brownfield Plants

Prioritize Sensors by Business Value, Not Technology Hype

In brownfield manufacturing plants, you’ll never have infinite budget or downtime to install sensors everywhere. That’s why your sensor strategy should be driven by business value, not by the coolest new industrial gadget.

Start by ranking equipment based on downtime cost, safety implications, and maintenance spend. You might discover that one compressor line, feeding your entire facility, is worth far more attention than several auxiliary systems combined. This is where a focused predictive maintenance strategy pays off.

From there, choose a small set of high-impact assets for initial upgrades. For example, a plant might compare three lines and decide to fully instrument the most critical compressor line first—adding vibration sensors to key bearings, temperature sensors to hotspots, and current monitoring for motors. This targeted approach to AI for predictive maintenance yields measurable downtime reduction without spreading your budget too thin.
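
The ranking itself can be a few lines of arithmetic. The figures and the scoring rule below are assumptions for illustration: estimated annual loss is downtime cost plus maintenance spend, and safety-critical assets are placed first regardless of cost.

```python
# Hypothetical figures for three candidate lines; replace with your own.
assets = [
    {"name": "auxiliary pumps", "downtime_cost_per_hour": 500,
     "downtime_hours_per_year": 30, "maintenance_spend": 20_000,
     "safety_critical": False},
    {"name": "compressor line", "downtime_cost_per_hour": 12_000,
     "downtime_hours_per_year": 40, "maintenance_spend": 150_000,
     "safety_critical": True},
    {"name": "packaging line", "downtime_cost_per_hour": 3_000,
     "downtime_hours_per_year": 60, "maintenance_spend": 50_000,
     "safety_critical": False},
]


def annual_loss(asset):
    """Estimated yearly loss: downtime cost plus maintenance spend."""
    downtime = asset["downtime_cost_per_hour"] * asset["downtime_hours_per_year"]
    return downtime + asset["maintenance_spend"]


def rank_assets(assets):
    """Safety-critical assets first, then by estimated annual loss, highest first."""
    return sorted(assets, key=lambda a: (not a["safety_critical"], -annual_loss(a)))


for asset in rank_assets(assets):
    print(f"{asset['name']:16} {annual_loss(asset):>9,}")
```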

Sensor Planning for Legacy Equipment and Coverage Gaps

Legacy equipment monitoring doesn’t require tearing everything out and starting over. In many cases, you can add clamp-on vibration sensors, non-invasive temperature sensors, or current transformers to existing hardware with minimal disruption. Wireless sensors with battery power and mesh networking can reach places where wiring would be painful or unsafe.

Where full coverage is unrealistic, focus on leading indicators. For example, you might not be able to instrument every bearing inside a hot, hazardous enclosure, but you can monitor upstream motor current, casing temperature, or downstream pressure to detect developing issues. The goal is to close the most important sensor coverage gaps that limit predictive maintenance on your legacy equipment.

Real-world case studies, such as Shell’s description of using predictive maintenance in brownfield environments (Shell predictive maintenance case study), show that pragmatic retrofits often outperform ambitious but fragile greenfield-style plans.

Decide What Lives at the Edge, in the Historian, and in the Cloud

Once you know what to measure, you need to decide where that data should live. High-frequency vibration might be captured and preprocessed on an edge device, with only summary statistics stored in the historian. Lower-frequency condition monitoring signals may go straight to the historian, while long-term archives and AI model training happen in the cloud.

A practical pattern is to capture raw data at the edge for short windows—say, several days—and continuously compute features like RMS vibration, kurtosis, or band energy. Those aggregates are then pushed to the historian or cloud, dramatically reducing bandwidth while preserving predictive value. This also supports more resilient AI model deployment: if the cloud link drops, edge devices can still do basic anomaly detection locally.
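
For a sense of what those features are, here is a minimal standard-library sketch of RMS, kurtosis, and band energy computed on one window of samples. The synthetic 50 Hz signal, the 1 kHz sample rate, and the band limits are assumptions; a production edge device would more likely use an FFT library than the naive transform shown here.

```python
import cmath
import math


def rms(samples):
    """Root-mean-square amplitude of the window."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def kurtosis(samples):
    """Non-excess kurtosis (fourth standardized moment); about 3 for Gaussian noise."""
    n = len(samples)
    mean = sum(samples) / n
    m2 = sum((x - mean) ** 2 for x in samples) / n
    m4 = sum((x - mean) ** 4 for x in samples) / n
    return m4 / (m2 * m2)


def band_energy(samples, sample_rate_hz, f_low, f_high):
    """Sum of squared DFT magnitudes for bins with f_low <= frequency <= f_high.

    Uses a naive O(n^2) DFT, which is fine for short windows only.
    """
    n = len(samples)
    energy = 0.0
    for k in range(n // 2 + 1):
        freq = k * sample_rate_hz / n
        if f_low <= freq <= f_high:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(samples)
            )
            energy += abs(coeff) ** 2
    return energy


# Synthetic window: 200 samples of a 50 Hz sine, amplitude 2, sampled at 1 kHz.
SAMPLE_RATE_HZ = 1000
window = [2.0 * math.sin(2 * math.pi * 50 * i / SAMPLE_RATE_HZ) for i in range(200)]

features = {
    "rms": rms(window),
    "kurtosis": kurtosis(window),
    "energy_40_60_hz": band_energy(window, SAMPLE_RATE_HZ, 40, 60),
    "energy_100_200_hz": band_energy(window, SAMPLE_RATE_HZ, 100, 200),
}
print(features)
```

Sending four numbers per window instead of 200 raw samples is where the bandwidth saving comes from.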

Whatever architecture you choose, enforce consistent naming conventions and metadata across PLCs, historians, and cloud stores. Without good data governance in manufacturing—standardized tag names, units, and sampling rates—you’ll struggle to scale from one pilot line to an enterprise-wide predictive maintenance AI solution. Cloud and IIoT providers like AWS share best practices for this layered approach (AWS IoT predictive maintenance solution), which can be adapted to your environment.
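
A naming convention is only useful if it is checked automatically. The sketch below validates tags against a made-up `LINE.ASSET.MEASUREMENT.UNIT` convention; the pattern, the unit list, and the example tags are all hypothetical, and your own standard will differ.

```python
import re

# Hypothetical convention: L<2 digits>.<ASSET><3 digits>.<MEASUREMENT>.<unit>
TAG_PATTERN = re.compile(
    r"^(?P<line>L\d{2})"
    r"\.(?P<asset>[A-Z]+\d{3})"
    r"\.(?P<measurement>[A-Z_]+)"
    r"\.(?P<unit>degC|mm_s|A|bar)$"
)


def parse_tag(tag):
    """Return the tag's parts as a dict, or None if it breaks the convention."""
    match = TAG_PATTERN.match(tag)
    return match.groupdict() if match else None


tags = [
    "L01.PUMP101.BEARING_TEMP.degC",
    "L01.MOTOR101.VIBRATION_RMS.mm_s",
    "Line1_pump_temp",              # legacy name with no unit or structure
]

non_compliant = [tag for tag in tags if parse_tag(tag) is None]
print(non_compliant)  # ['Line1_pump_temp']
```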

Stage AI Deployment to Match Your Data Maturity

Stage 1: Rules and Smart Alerts on Existing Data

If you’re wondering how to implement AI for predictive maintenance with limited sensor data, Stage 1 is your answer: start with rules and smart alerts on what you already collect. Many plants have decades of tribal knowledge about “normal” versus “worrying” readings that never made it into a consistent rule set.

Codify that knowledge into centralized alarm logic. For example, on a bottling line, you might define explicit thresholds for conveyor motor current, gearbox temperature, and cycle time variation based on historical ranges. Even simple condition monitoring, when standardized and surfaced through clear alerts, reduces time-to-detection for obvious issues.
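
Centralized alarm logic can start as a short, reviewable list of rules. The tag names and limits below are hypothetical; in practice they would come from your historical ranges and from the people who know the line.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    tag: str
    low: float
    high: float
    message: str


# Hypothetical limits for a bottling line conveyor.
RULES = [
    Rule("conveyor_motor_current_A", 2.0, 12.0, "motor current outside normal range"),
    Rule("gearbox_temp_degC", 10.0, 80.0, "gearbox temperature outside normal range"),
    Rule("cycle_time_variation_pct", 0.0, 5.0, "cycle time variation too high"),
]


def evaluate(rules, latest):
    """Return alert messages for out-of-range or missing readings."""
    alerts = []
    for rule in rules:
        value = latest.get(rule.tag)
        if value is None:
            # A missing reading is itself worth surfacing.
            alerts.append(f"{rule.tag}: no reading available")
        elif not (rule.low <= value <= rule.high):
            alerts.append(f"{rule.tag}={value}: {rule.message}")
    return alerts


latest = {"conveyor_motor_current_A": 13.4, "gearbox_temp_degC": 65.0}
alerts = evaluate(RULES, latest)
for alert in alerts:
    print(alert)
```

Keeping the rules as data rather than scattered PLC logic makes them easy to audit and to route into work orders later.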

Stage 1 is as much about data hygiene as it is about AI. You use this phase to clean tag lists, fix obvious sensor data quality problems, and start routing alerts into standard maintenance work orders instead of ad-hoc emails or phone calls. Think of it as building the nervous system that later stages of AI will plug into.

Stage 2: Unsupervised Anomaly Detection

Once your signals are reasonably clean and your alerts are flowing into workflows, you can add unsupervised anomaly detection. Here, models learn what “normal” looks like from historical data, without needing labeled failures. When current behavior deviates from that learned baseline, they raise a flag.

This works best on assets with stable operating patterns and enough “normal” history: compressors, chillers, pumps, and some production lines. For example, a compressed-air system might show remarkably consistent pressure, flow, and motor current profiles at given loads. An anomaly detection model can spot subtle leaks, fouling, or mechanical issues long before thresholds are crossed.

Tuning sensitivity is crucial. Too sensitive, and you create alert fatigue; too conservative, and you miss early warnings. Integrate anomaly alerts into existing asset health monitoring dashboards and maintenance scheduling optimization processes, so planners can quickly decide whether to inspect, adjust, or ignore.
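
The sensitivity trade-off is easy to see with a very simple baseline. The sketch below uses a z-score against the mean and standard deviation of “normal” history, which is only one of many unsupervised approaches and far simpler than what you would deploy; the motor-current values and thresholds are made up.

```python
import statistics


def fit_baseline(normal_values):
    """Learn 'normal' as the mean and sample standard deviation of history."""
    return statistics.fmean(normal_values), statistics.stdev(normal_values)


def anomaly_flags(values, baseline, z_threshold):
    """Flag readings that sit more than z_threshold standard deviations from the mean."""
    mean, std = baseline
    return [abs(v - mean) / std > z_threshold for v in values]


# Hypothetical motor current (A) during known-good operation at one load.
normal_history = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.1, 9.9]
baseline = fit_baseline(normal_history)

new_readings = [10.1, 10.3, 10.6]

conservative = anomaly_flags(new_readings, baseline, z_threshold=3.0)
sensitive = anomaly_flags(new_readings, baseline, z_threshold=2.0)

print("z > 3:", conservative)
print("z > 2:", sensitive)
```

With the looser threshold the second reading is flagged as well. Whether that extra alert is an early warning or noise is exactly the call your planners have to make.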

Stage 3: Supervised Failure Prediction Models

When you finally accumulate enough well-labeled failures and near-failures for specific asset classes, you can graduate to supervised failure prediction models. These models don’t just say “this looks weird”; they estimate the probability and timing of specific failure modes.

On a motor fleet, for instance, you might build a model that predicts bearing failure within the next seven days based on patterns in vibration, temperature, and load. With sufficient history, it can provide remaining useful life estimates and confidence scores, enabling more precise maintenance scheduling optimization.
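
Before any supervised model can be trained, each observation needs a label that matches the question being asked. For “failure within the next seven days,” a minimal labeling sketch looks like this; the dates and the `label_windows` helper are hypothetical.

```python
from datetime import datetime, timedelta


def label_windows(sample_times, failure_times, horizon):
    """Label each sample 1 if a failure occurs in (t, t + horizon], else 0."""
    return [
        int(any(t < f <= t + horizon for f in failure_times))
        for t in sample_times
    ]


# Hypothetical daily feature snapshots for one motor, March 1 to March 14.
sample_times = [datetime(2024, 3, day) for day in range(1, 15)]

# Hypothetical bearing failure recorded in the CMMS.
failure_times = [datetime(2024, 3, 10, 12, 0)]

labels = label_windows(sample_times, failure_times, timedelta(days=7))
for t, label in zip(sample_times, labels):
    print(t.date(), label)
```

Snapshots from March 4 through March 10 get a positive label; everything earlier, and everything after the failure, is labeled 0. Vague or misdated failure records shift these labels, which is why label quality matters so much at this stage.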

Supervised models require careful AI model deployment and monitoring. As equipment ages, recipes change, or operators adjust settings, models can drift. Continuous retraining, validation, and performance tracking are essential to avoid silent degradation in prediction quality.

Integrate AI into CMMS and Daily Maintenance Workflows

The smartest predictive insights are useless if they live in yet another dashboard no one checks. To deliver real AI predictive maintenance solutions for manufacturing, insights must show up where work gets done: inside your CMMS, EAM, or maintenance planning tools.

That means predictive alerts should auto-create maintenance work orders, suggest inspection tasks, or adjust asset criticality scores. For example, if the predictive model flags elevated risk of failure on a key compressor, the system might generate a high-priority work order for a detailed inspection in the next 48 hours. Guidance from asset management providers like IBM emphasizes exactly this kind of integration between AI signals and maintenance workflows.
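
What such a hand-off might look like is sketched below. This is not any real CMMS API: the field names, the 0.7 risk threshold, and the `build_work_order` helper are invented for illustration, and the 48-hour due date follows the example above.

```python
from datetime import datetime, timedelta


def build_work_order(asset_id, failure_mode, probability, raised_at,
                     risk_threshold=0.7):
    """Build a work-order payload for a high-risk prediction, or None if below threshold.

    All field names are hypothetical; map them to your CMMS schema.
    """
    if probability < risk_threshold:
        return None
    return {
        "asset_id": asset_id,
        "priority": "high",
        "task": f"Inspect for {failure_mode}",
        "due_by": (raised_at + timedelta(hours=48)).isoformat(),
        # Evidence helps planners understand why the alert was raised.
        "evidence": {"failure_probability": probability},
    }


order = build_work_order(
    asset_id="COMP-001",
    failure_mode="bearing failure",
    probability=0.82,
    raised_at=datetime(2024, 3, 1, 8, 0),
)
print(order)
```

Including the supporting evidence in the payload gives technicians something to confirm or refute, which is how feedback gets back to the model.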

This is not just a technical challenge; it’s change management. Planners, technicians, and reliability engineers need to understand why an alert was generated, what data supports it, and how to give feedback. A useful analogy is AI for customer service: the models only work when tightly integrated into existing workflows, with humans in the loop refining and trusting recommendations over time.

How Buzzi.ai De-Risks Predictive Maintenance AI

Data-First Discovery and Readiness Assessment

Most AI predictive maintenance vendors show up with a generic model and a slide deck full of perfect-case studies. At Buzzi.ai, we start by assuming your data is messy and incomplete, because in brownfield manufacturing plants, it usually is.

Every engagement begins with a structured predictive maintenance data readiness assessment. We map sensor coverage, profile sensor data quality, and align everything with your most business-critical assets and KPIs: downtime reduction, maintenance cost per unit, safety incidents, and quality losses. This turns an abstract Industry 4.0 initiative into a concrete, staged roadmap.

In one engagement, a manufacturer wanted end-to-end supervised prediction on all lines. Our assessment showed that only one high-value line had enough historical failure data, while others had major coverage gaps. Instead of overpromising, we recommended starting with anomaly detection on that line and planning gradual sensor upgrades elsewhere. That is what a data readiness assessment is for: setting a realistic scope before anyone commits to a model.

Built-In Sensor Data Quality Checks and Robust Modeling

Buzzi.ai’s platform is built with noisy, incomplete data in mind. Incoming sensor streams pass through automated sensor data quality checks: missing-data alerts, drift detection, timestamp validation, and sanity checks against physical constraints. If something looks wrong in your industrial IoT data, we flag it before it poisons your models.

On the modeling side, we use approaches that can handle imperfect inputs: ensembles that combine multiple signals, uncertainty estimation to avoid overconfident predictions, and fallback rules when data is insufficient. For legacy equipment monitoring, we often blend anomaly detection with traditional engineering rules to create an industrial AI predictive maintenance solution for legacy equipment that’s robust instead of brittle.

Because we’re an AI predictive maintenance vendor that handles poor data quality by design, you don’t have to pretend your plant looks like a greenfield showcase. We meet your data where it is and improve it step by step.

From Pilot to Scaled Deployment, Aligned to ROI

Buzzi.ai’s deployment approach mirrors the staged roadmap we outlined earlier. We typically start with rules and smart alerts on existing data, move to anomaly detection as your data stabilizes, and then layer in supervised prediction where historical failure data supports it. Each stage is tied to clear KPIs like downtime reduction, MTBF improvements, and maintenance cost savings.

For a multi-plant manufacturer, that might look like: a pilot on one or two high-impact asset classes, a checkpoint to validate ROI, then gradual rollout to similar assets and plants. As data governance in manufacturing improves and your teams gain confidence, we scale both the models and the organizational usage.

If you’re looking for the best AI platform for predictive maintenance in brownfield plants, the deciding factor shouldn’t be the fanciest algorithm demo. It should be which partner can help you turn messy reality into a practical roadmap. Our predictive analytics and forecasting services are built exactly for that: enterprise AI solutions that respect constraints and scale with your data maturity.

Conclusion: Fix the Data, Then Scale the AI

AI for predictive maintenance doesn’t live or die on model architecture; it lives or dies on sensor data availability, quality, and consistency. Without trustworthy data, even world-class algorithms will underperform or fail in production.

The right move for manufacturers is to start with a clear data readiness assessment, map sensors to critical assets and failure modes, and close the highest-value gaps first. From there, a staged roadmap—rules, anomaly detection, then supervised prediction—lets you deliver tangible ROI at each step while your data matures.

If you’re tired of gambling on optimistic POCs, it’s time to flip the script. Instead of starting with a model, start with a brutally honest look at your data and workflows. Then, use a partner like Buzzi.ai that leads with data reality and structured assessment to design a predictive maintenance AI strategy that your plant—and your balance sheet—can actually sustain.

Ready to take the next step? Begin with a focused data readiness assessment on one or two high-value assets, and turn AI for predictive maintenance from a risky experiment into a disciplined investment.

FAQ: AI for Predictive Maintenance & Data

Why do so many AI for predictive maintenance projects fail in real manufacturing plants?

Most projects fail because the underlying sensor data is incomplete, inconsistent, or poorly governed, not because the algorithms are weak. Brownfield plants often have sensor coverage gaps, historian outages, and fragmented records across PLCs, historians, and CMMS. When predictive models trained on clean pilot data meet this messy reality, their performance drops, trust erodes, and the initiative stalls.

What types of sensor data are truly required for effective predictive maintenance AI?

At a minimum, you need condition monitoring signals (e.g., vibration, temperature, current) plus event data (failures, maintenance work orders) and context (operating mode, throughput, product recipes). AI for predictive maintenance depends on the combination of “what the machine felt,” “what happened,” and “under what conditions.” The exact mix varies by asset type, but these three pillars are consistent across most use cases.

How can I tell if my plant has enough data to start an AI-based predictive maintenance project?

The most reliable approach is a structured predictive maintenance data readiness assessment. You map critical assets and failure modes, audit sensor coverage and sensor data quality, and review years of historical failure data. If you have clean signals but few labeled failures, you can start with anomaly detection; if you have rich history and clear labels, you may be ready for supervised failure prediction models.

What is a realistic minimum history and sampling rate for training models on rotating equipment?

For many motors, pumps, and fans, months of vibration and temperature data sampled in the 1–10 Hz range is a practical starting point. Higher sampling rates may be justified for critical machines or specific failure modes that manifest at higher frequencies. Just as important as sampling rate is having several well-labeled failures or near-failures per asset family, so models can learn reliable patterns instead of overfitting to one-off events.

How should brownfield plants with legacy equipment prioritize where to add new sensors?

Start from business value, not from the technology itself. Rank assets by downtime cost, safety risk, and maintenance spend, then focus sensor retrofits on the few asset classes that drive most losses. For legacy equipment monitoring, low-cost wireless vibration sensors, temperature sensors, and current transformers can close critical sensor coverage gaps without large capital projects.

What is the difference between condition monitoring dashboards and AI-driven predictive maintenance?

Condition monitoring dashboards typically show current values and simple threshold alarms: if a reading exceeds a limit, you get an alert. AI-driven predictive maintenance looks at historical patterns, correlations, and context to detect subtle degradation before limits are breached. The former tells you when something is clearly wrong right now; the latter aims to tell you that something will likely go wrong soon, giving you time to plan intervention.

How can manufacturers handle noisy, missing, and inconsistent sensor data when building predictive models?

The key is to treat data quality as a first-class problem. Implement automated checks for missing data, timestamp drift, unit inconsistencies, and physically impossible values, and feed those results back to maintenance and controls teams. Platforms like Buzzi.ai embed these checks directly into predictive maintenance AI workflows, so bad data gets flagged and handled instead of silently corrupting models.

What does a practical predictive maintenance data readiness assessment look like?

In practice, it involves three main activities: mapping assets and failure modes, auditing sensor coverage and data quality, and reviewing historical failures and maintenance work orders. The deliverable is a prioritized list of where AI for predictive maintenance can start now, where you should use anomaly detection versus supervised models, and which sensor upgrades will unlock the most value. Buzzi.ai’s structured AI Discovery and data readiness assessment service is built specifically to deliver this kind of roadmap.

How can we roll out predictive maintenance AI in stages to match our current data maturity?

A staged roadmap typically starts with rules and smart alerts on existing condition monitoring data, then moves to unsupervised anomaly detection once signals are clean and stable. As you accumulate reliable labels for failures and near-failures, you can introduce supervised failure prediction models for high-value asset classes. At each stage, the goal is to deliver incremental ROI while also improving data quality, governance, and workflow integration.

How does Buzzi.ai’s approach to predictive maintenance reduce the risk of failed pilots and wasted investment?

Buzzi.ai reduces risk by starting with a brutally honest assessment of your sensor data and failure history instead of promising magic models on day one. We align each phase of deployment—rules, anomaly detection, supervised prediction—with clear KPIs like downtime reduction and maintenance cost savings. By focusing on brownfield manufacturing plants and building in robust sensor data quality checks, we turn predictive maintenance AI from a high-stakes bet into a disciplined, staged investment.