Choose an AI‑Native Software Development Firm That Actually Delivers

Learn how to choose an AI‑native software development firm, spot superficial AI vendors, and match your project’s risk and complexity to the right partner.

Most “AI software development firms” are just traditional dev shops with a model glued on top. For AI‑heavy products, that’s the fastest path to a flashy demo that collapses in production. If you’ve ever watched a proof‑of‑concept impress leadership and then quietly die in the wild, you’ve felt this gap firsthand.

The hard part isn’t deciding you want AI. It’s figuring out which AI software development firm is genuinely built for AI‑native development—and which one will treat AI as an add‑on widget. The websites all look the same, the pitch decks are full of the same logos, and everyone claims to do “enterprise AI solutions” and “custom AI solutions.”

In this guide, we’ll give you a practical way to tell them apart. You’ll learn how to choose an AI software development firm based on how it actually builds, deploys, and operates AI systems, not how it markets itself. We’ll look at methodology, team structure, and architecture as the three big signals, then wrap with a vendor maturity scorecard and concrete questions you can use in your next RFP.

We’ll also show how we at Buzzi.ai approach AI‑native development for enterprises—AI agents, voice, and workflow automation—so you can benchmark us against anyone else you’re considering. Use this as a field manual, not a brochure.

What Makes an AI‑Native Software Development Firm Different

Under the same label “AI software development firm,” you’ll find everything from classic web agencies to deeply specialized ML shops. For AI‑centric products, that distinction isn’t academic; it’s the difference between a resilient system and an expensive toy. So we’ll start by putting some structure on the landscape.

AI‑Native vs “AI‑Augmented” vs Traditional: A Simple Spectrum

Think of vendors on a spectrum with three broad types. On one end, there’s the traditional software house: excellent at CRUD apps, APIs, dashboards, and mobile front‑ends, but with no real AI capabilities beyond calling an API. On the other end is the AI‑native software development firm, where models, data, and experimentation sit at the center of how the organization works.

In the middle are AI‑augmented firms. These are traditional shops that have bolted on a data science team, signed up with an LLM provider, or partnered with an AI consultancy. They can often deliver light recommendation features, analytics, or simple chatbot flows—solid work, as long as AI isn’t the beating heart of the product.

The easiest way to tell where a firm sits is to ask how they’d approach the same problem. Take an intelligent support assistant:

- A traditional firm will design a ticketing UI, some basic search, and maybe a rules‑based FAQ bot. AI is a plugin, if it appears at all.

- An AI‑augmented firm will keep that core design but add intent detection, simple classification, or LLM‑based answers wired straight into the app.

- An AI‑native software development firm will start from data flows, retrieval quality, and feedback signals. They’ll design the assistant as a model‑centric system with guardrails, evaluation frameworks, and model lifecycle management built in.

Marketing pages rarely admit this spectrum. Everyone claims to do everything. Your job is to identify whether you need traditional, AI‑augmented, or genuinely AI‑native capabilities for the project in front of you.

Why Orientation Matters for AI‑Heavy Products

Orientation determines where a firm puts its time, talent, and budget. In traditional feature work, most effort goes into requirements, UI, APIs, and tests; the “smart” part (if any) is a call to an external service. In true AI‑native development, the bottlenecks are different: data quality, model selection, ML experimentation, evaluation frameworks, and ongoing improvement.

Consider a fraud detection or recommendation engine. A traditional vendor might hard‑code rules, ship a version‑one model, and move on when accuracy looks acceptable in a test set. An AI‑native shop will obsess over data coverage, feedback loops, and how the model behaves in production under drift, adversarial behavior, and new segments.

This isn’t theoretical. The State of AI Report has repeatedly shown that a large share of AI projects stall or fail to reach production value, often because teams underestimate deployment, monitoring, and operations. An AI‑native consulting partner internalizes this: success is measured not by launching a feature, but by maintaining and improving impact over time.

That’s why, for AI‑heavy products, the right AI implementation strategy is inseparable from the partner’s orientation. AI‑native firms are better at reliability, iteration speed, and compounding model performance, because their organizations are built around those outcomes.

How AI‑Native Methodology Changes the Software Lifecycle

If you only look at slideware, most firms will say they’re “agile” and follow some flavor of scrum. The real differences show up when you examine the software development lifecycle for AI work: what gets planned, what gets measured, and what happens after v1 ships.

From Feature‑First to Data‑ and Experiment‑First

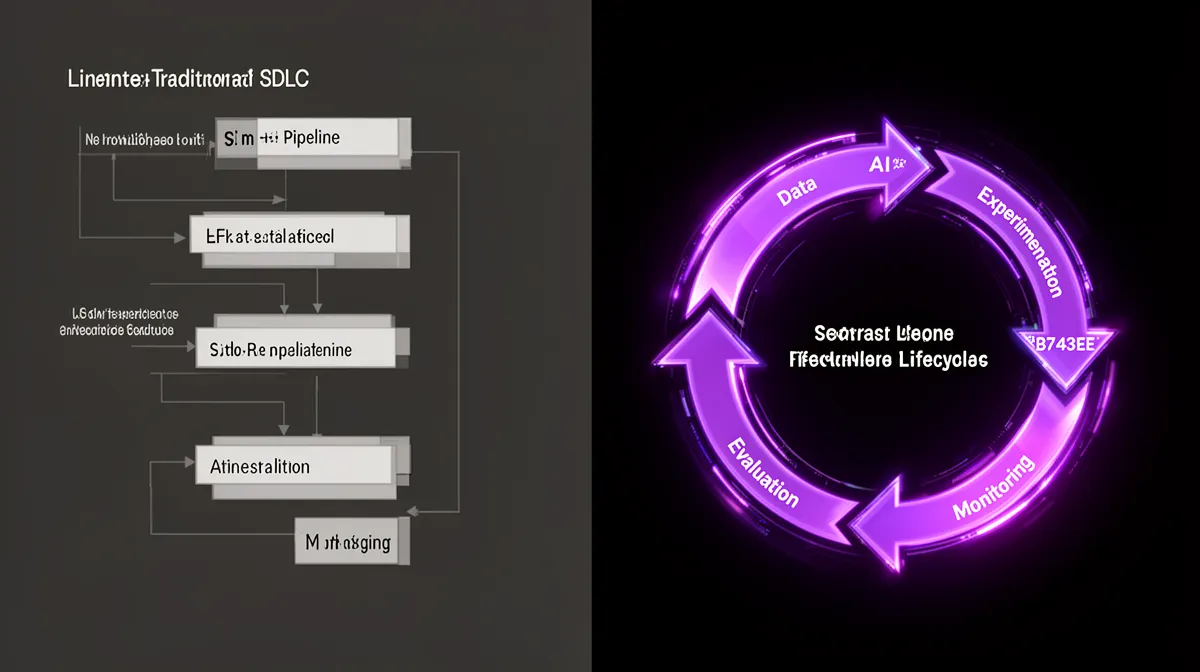

Traditional SDLC flows in a straight line: requirements → design → build → test → deploy. It assumes that if you implement the spec correctly, the system will behave predictably. That mental model breaks down when you’re dealing with probabilistic models and constantly changing data.

In an AI‑native lifecycle, the loop looks more like: data → experiment → evaluate → integrate → monitor → repeat. Instead of obsessing over feature completeness, teams obsess over the quality and coverage of data, the strength of evaluation frameworks, and the outcomes of ML experimentation. This is classic data‑centric development: improving the data often yields bigger gains than endlessly tweaking model architectures.

For example, we’ve seen projects where relabeling 10% of edge‑case data increased accuracy more than three new model variants combined. In an AI‑native pipeline, that kind of discovery is expected and budgeted for; it’s not an unplanned detour that derails the roadmap.

Diagrammed, the difference is stark: the traditional loop ends at deployment, while the AI‑native loop treats deployment as the beginning of a continuous feedback and learning cycle.

Agile for AI Projects: What Actually Changes

“We do agile” doesn’t mean much for AI unless the process bakes in AI‑specific checkpoints. A serious AI‑native firm treats sprints as a balance of product work, data work, modeling, and evaluation—not just tickets in Jira labeled “build model.”

In practice, that means sprint backlogs include items like “define experiment to compare two RAG strategies,” “improve labeling rules for complaints in Spanish,” “design offline evaluation harness,” and “implement A/B test for new ranking model.” The sprint cadence for AI projects also includes gates for data readiness, model performance, and AI governance reviews covering bias, privacy, and compliance.

Models are treated as evolving hypotheses, not fixed features. A good AI product discovery phase will explicitly identify which decisions are high‑uncertainty and require experimentation. From there, the team runs controlled trials with clear success metrics before rolling out changes widely.
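A controlled trial needs a decision rule agreed before the results come in. The sketch below assumes a binary success metric (for example, whether a support ticket was resolved without escalation) and uses a standard two‑proportion z‑test; the function names and the `alpha` and `min_lift` defaults are illustrative assumptions, not a prescribed standard.

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: variants A and B share the same success rate."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both variants all-success or all-failure: no evidence either way
    z = (p_b - p_a) / se
    # Standard normal CDF via math.erf, so no SciPy dependency is needed.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


def should_ship(success_a: int, n_a: int, success_b: int, n_b: int,
                alpha: float = 0.05, min_lift: float = 0.02) -> bool:
    """Ship variant B only if it is significantly better AND the lift clears a practical bar."""
    z, p_value = two_proportion_z_test(success_a, n_a, success_b, n_b)
    lift = success_b / n_b - success_a / n_a
    return z > 0 and p_value < alpha and lift >= min_lift
```

Pairing statistical significance with a minimum practical lift keeps a team from rolling out changes that are real but too small to matter.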

So while the labels (sprints, standups, retros) might look familiar, what’s happening inside them—especially around experimentation and responsible AI—is quite different.

Handling Uncertainty: Probabilistic Outputs and Guardrails

Unlike deterministic code, AI models produce probabilistic outputs. An AI‑native development methodology confronts that head‑on. Instead of pretending models are always right, AI‑native firms design thresholds, fallback paths, and human‑in‑the‑loop flows from day one.

Take an LLM‑based customer support assistant. An AI‑native firm will design confidence scoring, clear escalation rules, and safe defaults. If model confidence drops below a threshold, the system might fall back to a retrieval‑only answer, offer options instead of assertions, or route the conversation to a human agent—while logging the case for future training.
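The escalation logic described above can be sketched as a simple banded router. This is a minimal illustration, assuming an upstream component already produces a calibrated confidence score between 0 and 1; `SupportRouter`, the two thresholds, and the response strings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical thresholds: real values should come from offline evaluation on your own data.
ANSWER_THRESHOLD = 0.80
RETRIEVAL_THRESHOLD = 0.50


@dataclass
class Decision:
    action: str  # "answer", "retrieval_only", or "escalate"
    text: str


@dataclass
class SupportRouter:
    # Low-confidence cases land here so they can be reviewed, labeled, and reused for training.
    review_queue: List[dict] = field(default_factory=list)

    def route(self, query: str, draft_answer: str, confidence: float,
              retrieved_snippet: Optional[str] = None) -> Decision:
        if confidence >= ANSWER_THRESHOLD:
            return Decision("answer", draft_answer)
        self.review_queue.append({"query": query, "confidence": confidence})
        if confidence >= RETRIEVAL_THRESHOLD and retrieved_snippet:
            # Middle band: show retrieved source text instead of a generated assertion.
            return Decision("retrieval_only", f"Here is what our help center says: {retrieved_snippet}")
        # Lowest band, or nothing to ground on: hand off to a person.
        return Decision("escalate", "Let me connect you with a human agent.")
```

The thresholds are the main design lever: they should be set from offline evaluation and revisited as monitoring data accumulates.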

This is where model monitoring and model observability come in. A responsible AI‑native approach plans dashboards, alerts, drift detection, and user override metrics into the lifecycle, instead of scrambling to add them after an incident. Google’s paper “Hidden Technical Debt in Machine Learning Systems” describes how production ML systems accumulate hidden maintenance costs, and it lists monitoring and configuration among the recurring sources of that debt.
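Drift detection is one of the monitoring pieces that is easy to plan upfront. One common technique is the population stability index (PSI), which compares the distribution of a feature or model score in live traffic against a training‑time baseline. The sketch below assumes a single numeric feature and equal‑width bins; the 0.25 alert threshold is a widely used rule of thumb, not a universal constant, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], lo: float, width: float, bins: int, eps: float) -> List[float]:
    counts = [0] * bins
    for v in values:
        idx = int((v - lo) / width)
        counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into the edge bins
    # A small floor avoids log(0) when a bin is empty.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], live: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (live_share - base_share) * ln(live_share / base_share)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # equal-width bins fitted to the training baseline
    base = _bin_shares(baseline, lo, width, bins, eps)
    cur = _bin_shares(live, lo, width, bins, eps)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


def drift_alert(psi: float, threshold: float = 0.25) -> bool:
    # 0.1 and 0.25 are common rules of thumb, not universal constants; tune per feature.
    return psi >= threshold
```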

Handled well, uncertainty becomes manageable risk instead of a lurking source of reputational damage.

The Team Structure of a Serious AI Software Development Firm

Process only works if the right people are in the room. The org chart of a truly AI‑native software development firm looks very different from a traditional dev shop, even one that claims to have “some data science.” This is where you start to see whether you’re talking to a vendor or a partner.

Core Roles Beyond “Developers and QA”

In a mature machine learning engineering team, you’ll see a set of distinct but tightly aligned roles. At minimum, expect specialized data engineers, ML engineers, MLOps engineers, LLM/prompt engineers, AI product managers, and some form of AI governance or ethics advisor. These aren’t luxury hires; they’re prerequisites for running production AI systems.

Data engineers own pipelines, data quality, and transformations built for model training and inference, which is very different from generic data engineering inside a BI project. ML engineers design and implement models, from classic ML to LLM application development. MLOps engineers make the AI pipeline, from training to ML model deployment, reliable, observable, and repeatable. Prompt engineers or LLM specialists design prompts, tools, and guardrails, extending prompt engineering into real application logic.

On top of this, AI product managers connect business outcomes to model objectives, deciding when “good enough” is actually good enough. Cross‑functional squads form around initiatives—say, an enterprise‑grade AI assistant—with product, data, model, and platform expertise working as one unit.

A sample squad for a predictive analytics or AI assistant project might include: 1 AI PM, 1–2 ML engineers, 1 data engineer, 1 MLOps engineer, 1 LLM/prompt specialist, and 1–2 application engineers. If your prospective partner can’t describe squads at this level of clarity, it’s a red flag.

Dedicated MLOps and Model Lifecycle Ownership

A defining trait of an AI software development firm with a dedicated MLOps team is that someone explicitly owns the model lifecycle. MLOps isn’t a side hobby of an overworked ML engineer; it’s a discipline responsible for deployment, monitoring, rollbacks, and continuous improvement.

Think of MLOps as DevOps plus model‑specific concerns: versioning datasets and models, managing experiment tracking, creating reproducible training runs, and handling canary releases and rollbacks for new models. This is where model lifecycle management becomes concrete policy instead of a buzzword.
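Reproducible training runs start with knowing exactly which data, configuration, and code produced a model. A minimal sketch, assuming training rows are JSON‑serializable dicts and that a code version string (such as a Git commit hash) is available; `dataset_fingerprint` and `run_id` are hypothetical helper names.

```python
import hashlib
import json
from typing import Any, Dict, Iterable


def dataset_fingerprint(rows: Iterable[Dict[str, Any]]) -> str:
    """Content hash of a dataset: identical rows in identical order give an identical hash."""
    h = hashlib.sha256()
    for row in rows:
        # sort_keys makes the hash independent of key order within each row.
        h.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()


def run_id(dataset_hash: str, config: Dict[str, Any], code_version: str) -> str:
    """Short, deterministic ID tying a training run to its exact data, config, and code."""
    payload = json.dumps({"data": dataset_hash, "config": config, "code": code_version}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
```

Row order affects the fingerprint in this sketch, so a real pipeline would either fix the order or hash a canonical, sorted export.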

A typical workflow might look like this: ML engineers push experiments into a tracking system; successful candidates are promoted into a model registry with metadata and evaluation results; MLOps engineers deploy them behind stable APIs; model monitoring feeds performance, drift, and error signals back into the backlog. When something goes wrong, there’s a clear on‑call path and rollback playbook.
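The registry step in that workflow can be illustrated with an in‑memory model. Real tools such as MLflow provide model registries; the class below is not their API but a hypothetical sketch of a promotion gate and rollback, assuming a single headline metric (`f1`) decides promotion.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: Dict[str, float]
    run_id: str  # links back to the experiment that produced this model


@dataclass
class ModelRegistry:
    gate_metric: str = "f1"
    gate_value: float = 0.80
    versions: Dict[int, ModelVersion] = field(default_factory=dict)
    history: List[int] = field(default_factory=list)  # production versions, oldest first

    def register(self, mv: ModelVersion) -> None:
        self.versions[mv.version] = mv

    @property
    def production(self) -> Optional[ModelVersion]:
        return self.versions[self.history[-1]] if self.history else None

    def promote(self, version: int) -> bool:
        score = self.versions[version].metrics.get(self.gate_metric, float("-inf"))
        if score < self.gate_value:
            return False  # evaluation gate: the candidate stays registered but is not served
        current = self.production
        if current and score <= current.metrics.get(self.gate_metric, float("-inf")):
            return False  # a candidate must beat what is already in production
        self.history.append(version)
        return True

    def rollback(self) -> Optional[ModelVersion]:
        """Revert to the previous production version; the first version is never popped."""
        if len(self.history) > 1:
            self.history.pop()
        return self.production
```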

Without this, AI features become brittle one‑offs. With it, they become living systems that can improve over time without breaking the product.

How Buzzi.ai Organizes Teams for AI‑Heavy Work

At Buzzi.ai, we structure around three pillars: AI agents, data, and MLOps. Our pods are built for AI‑heavy initiatives from day one, not retrofitted later. For example, a pod working on workflow and process automation with AI will include both model experts and process engineers who understand the operational realities of your business.

For AI voice bots for WhatsApp or phone support, we bring together speech specialists, LLM and AI agent development experts, and engineers who understand latency, telephony integration, and multilingual UX. These are not projects you can safely hand to a generic dev team with a few prompt engineers on the side.

Because our teams are built this way, we can act as an AI consulting partner as well as a delivery arm, advising on custom AI solutions and long‑term operations, not just building an MVP. The result is faster iteration, safer releases, and systems that keep getting better after go‑live, not worse.

Architecture Choices in an AI‑Native Software Development Firm

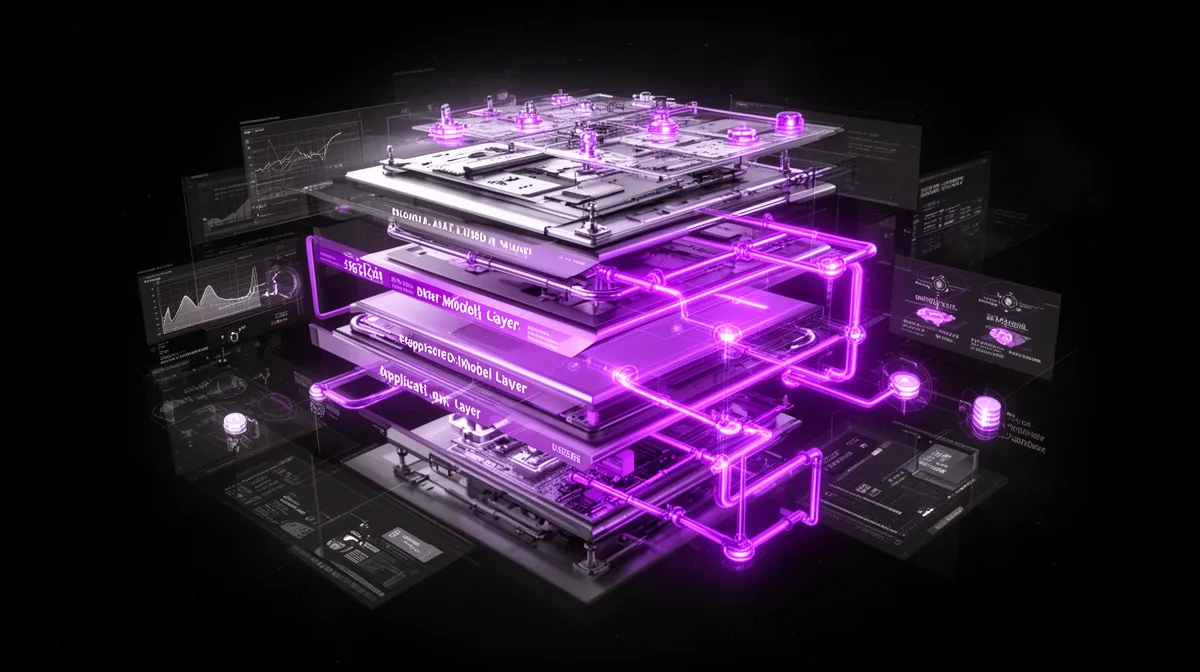

Even with the right people and process, architecture decisions can make or break AI projects. An AI‑native software development firm for enterprises doesn’t just sprinkle AI on an existing stack; it designs the stack around data and models from the start.

From Monoliths to AI‑Centric Architectures

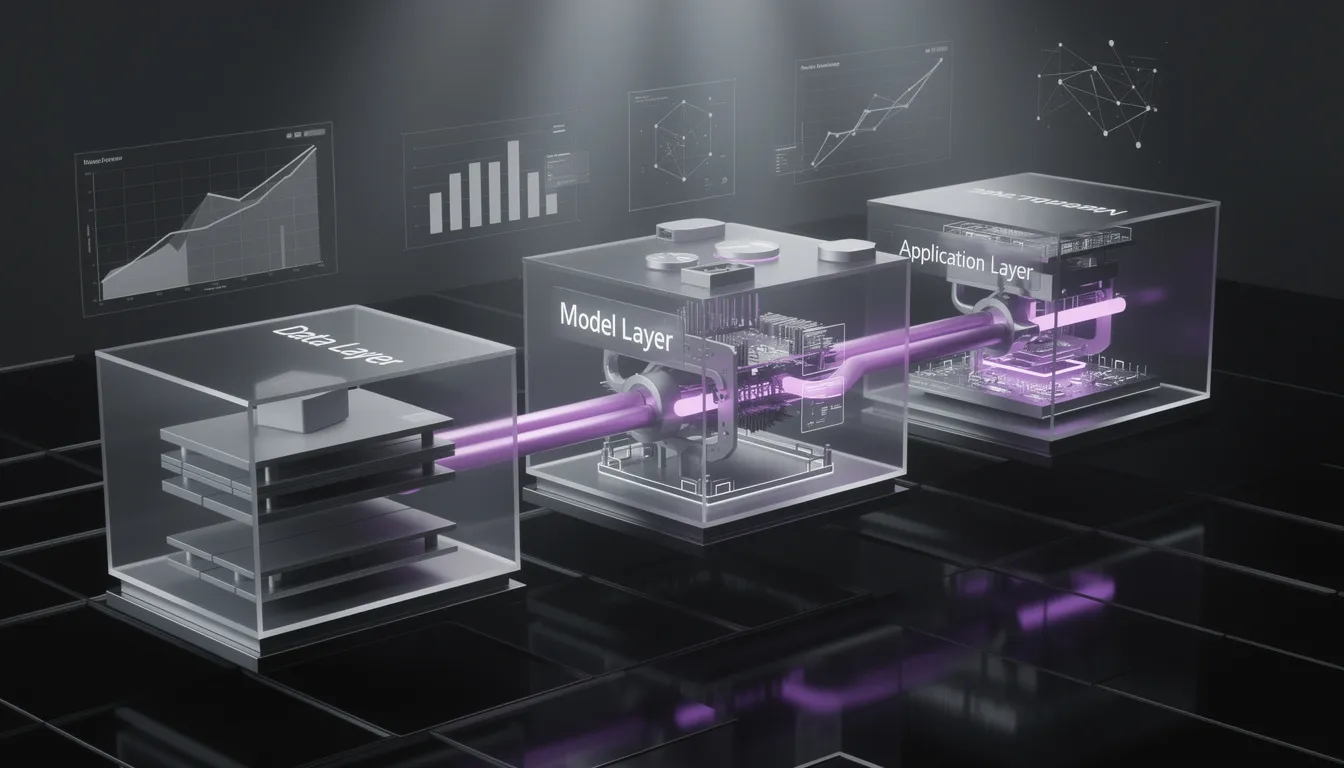

AI‑native firms favor architectures that cleanly separate model services from application logic. Instead of burying model calls deep inside controllers or UI code, they expose models through well‑defined ML APIs or microservices. This lets teams deploy and iterate on models without breaking the app every time the model changes.
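Decoupling can be expressed as an interface that application code depends on, with concrete models behind it. The sketch below keeps everything in‑process for brevity; in production, the implementation behind the interface would typically be a call to a separate model service. All class names are hypothetical.

```python
from typing import Dict, List, Protocol


class Recommender(Protocol):
    """The only contract application code sees; any model that satisfies it can be swapped in."""
    version: str

    def recommend(self, user_id: str, k: int) -> List[str]: ...


class PopularityRecommender:
    version = "popularity-v1"

    def __init__(self, ranked_items: List[str]) -> None:
        self.ranked_items = ranked_items

    def recommend(self, user_id: str, k: int) -> List[str]:
        return self.ranked_items[:k]  # same list for everyone: a simple baseline


class PrecomputedRecommender:
    """Serves per-user lists produced by, e.g., a nightly batch job; falls back for unknown users."""
    version = "precomputed-v2"

    def __init__(self, per_user: Dict[str, List[str]], fallback: Recommender) -> None:
        self.per_user = per_user
        self.fallback = fallback

    def recommend(self, user_id: str, k: int) -> List[str]:
        items = self.per_user.get(user_id)
        return items[:k] if items else self.fallback.recommend(user_id, k)


class ProductPage:
    """Application layer: depends on the Recommender interface, never on a concrete model."""

    def __init__(self, recommender: Recommender) -> None:
        self.recommender = recommender

    def render(self, user_id: str) -> Dict[str, object]:
        items = self.recommender.recommend(user_id, k=3)
        return {"items": items, "model_version": self.recommender.version}
```

Swapping `PopularityRecommender` for `PrecomputedRecommender` changes nothing in `ProductPage`, which is the property the paragraph above describes.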

Under the hood, you’ll often see streaming or batch pipelines depending on the use case, feature stores to standardize model inputs, and vector databases for semantic retrieval. These are the building blocks of AI pipelines in modern production systems.

Consider a recommendation engine: an AI‑native architecture will have a data ingestion and feature engineering layer, a model serving layer with versioning and A/B routing, and an application layer that consumes recommendations via an API. If you decide to upgrade or replace the model, the surrounding system barely notices.
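The A/B routing in the serving layer usually relies on deterministic assignment, so a given user sees the same model variant on every request. A minimal sketch, assuming user IDs are stable strings and variant weights are configured per experiment; the function name and variant names are illustrative.

```python
import hashlib
from typing import Dict


def assign_variant(user_id: str, experiment: str, weights: Dict[str, float]) -> str:
    """Deterministically map a user to a model variant, e.g. {"ranker-v1": 0.9, "ranker-v2": 0.1}."""
    # Salting with the experiment name gives each experiment an independent split.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    point = int(digest[:8], 16) / 0xFFFFFFFF  # stable value in [0, 1] for this user
    total = sum(weights.values())
    cumulative = 0.0
    variants = sorted(weights)  # fixed order so the mapping doesn't depend on dict ordering
    for variant in variants:
        cumulative += weights[variant] / total
        if point <= cumulative:
            return variant
    return variants[-1]  # guards against floating-point shortfall at the top of the range
```

Replacing the model then means adding a variant and shifting weights in configuration, while the application keeps calling the same API.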

This decoupling is exactly what keeps you from being trapped in legacy models or brittle integrations two years down the line.

LLM and Agent‑Centric Design Patterns

With LLMs, architecture choices become even more critical. Mature firms use patterns like retrieval‑augmented generation (RAG), tool‑using agents, and workflow‑embedded assistants, not just “send prompt, show output.” A good AI software development firm for enterprises will walk you through these patterns and their trade‑offs.

For an enterprise knowledge assistant, that might mean an ingestion pipeline into a vector store, a RAG layer to ground the LLM in your own documents, and an orchestration layer that manages tools and policies. This is the core of robust LLM application development, including AI for customer service.
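To make the RAG layer concrete, here is a deliberately small sketch. It assumes a toy bag‑of‑words embedding and an in‑memory list standing in for a real embedding model and vector database, and it stops at building the grounded prompt rather than calling any specific LLM API. All names are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy stand-in for a real embedding model: a bag-of-words term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


@dataclass
class VectorStore:
    docs: List[Tuple[str, Counter]] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.docs.append((text, embed(text)))  # the ingestion step

    def search(self, query: str, k: int = 2, min_score: float = 0.1) -> List[str]:
        q = embed(query)
        scored = sorted(((cosine(q, v), t) for t, v in self.docs), reverse=True)
        return [t for s, t in scored[:k] if s >= min_score]


def build_grounded_prompt(question: str, passages: List[str]) -> str:
    if not passages:
        return ""  # nothing to ground on: the caller should fall back or escalate instead
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the numbered sources below and cite them. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
```

The empty‑prompt return is a design choice: when retrieval finds nothing relevant, the caller can fall back or escalate instead of letting the model answer ungrounded.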

There are also sourcing decisions: do you call OpenAI’s API, fine‑tune a foundation model on a cloud platform, or host your own? An AI‑native firm will discuss latency, cost, data privacy, and vendor lock‑in with you, not just pick whatever is trendy. Resources like the LLM design pattern guides from leading labs can be a useful reference during these conversations.

What you’re looking for is a partner who talks in terms of design patterns, failure modes, and evolution paths—not just model names.

Built‑In Observability, Governance, and Safety Layers

In an AI‑native stack, observability extends beyond CPUs and response times. You get metrics on model accuracy, drift, bias indicators, and user override rates. This is real model observability, not just log aggregation.
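Model‑level observability can start from the same event stream the application already logs. This sketch assumes each prediction event records the model version, whether a user overrode the output, and a ground‑truth label when one becomes available; the thresholds, field names, and `model_health` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PredictionEvent:
    model_version: str
    prediction: str
    user_overrode: bool
    label: Optional[str] = None  # ground truth, when it arrives later


def model_health(events: List[PredictionEvent], max_override_rate: float = 0.15,
                 min_accuracy: float = 0.85) -> Dict[str, Dict[str, object]]:
    """Per-model-version override rate, accuracy on labeled events, and triggered alerts."""
    by_version: Dict[str, List[PredictionEvent]] = {}
    for e in events:
        by_version.setdefault(e.model_version, []).append(e)
    report: Dict[str, Dict[str, object]] = {}
    for version, evs in by_version.items():
        override_rate = sum(e.user_overrode for e in evs) / len(evs)
        labeled = [e for e in evs if e.label is not None]
        accuracy = sum(e.prediction == e.label for e in labeled) / len(labeled) if labeled else None
        alerts = []
        if override_rate > max_override_rate:
            alerts.append("override_rate")
        if accuracy is not None and accuracy < min_accuracy:
            alerts.append("accuracy")
        report[version] = {"override_rate": override_rate, "accuracy": accuracy, "alerts": alerts}
    return report
```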

Governance is similarly baked in. That means audit trails for model changes and prompt templates, approval flows for high‑impact updates, and access controls for who can deploy or query specific models. The goal is to implement AI governance and responsible AI practices as part of the architecture, not as a compliance afterthought.
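An audit trail is most useful when it is tamper‑evident. The sketch below chains each entry to the previous one with a SHA‑256 hash and refuses high‑impact changes without a second approver. It is a hypothetical illustration covering audit and approval only; access control would sit in a separate layer.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional

FIELDS = ("actor", "action", "target", "approved_by", "prev")
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, object]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    entries: List[Dict[str, object]] = field(default_factory=list)

    def append(self, actor: str, action: str, target: str,
               approved_by: Optional[str] = None, high_impact: bool = False) -> Dict[str, object]:
        # Approval flow: high-impact changes (e.g. deploying a model) need a different approver.
        if high_impact and (approved_by is None or approved_by == actor):
            raise PermissionError("high-impact changes need approval from a second person")
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "target": target, "approved_by": approved_by, "prev": prev}
        entry = dict(body, hash=_digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for e in self.entries:
            body = {k: e[k] for k in FIELDS}
            if e["prev"] != prev or e["hash"] != _digest(body):
                return False
            prev = e["hash"]
        return True
```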

Research from organizations like the Google Responsible AI team highlights how governance and observability prevent real‑world failures—from biased lending models to unsafe content generation. AI‑native firms internalize this, designing safe failure modes and clear escalation paths.

If a potential partner can’t show you how their logging, tracing, and alerting capture model behavior and data issues—not just server errors—you’re looking at a risk, not just a vendor.

A Practical AI Vendor Maturity Framework You Can Use

By now, we’ve covered methodology, team, and architecture in isolation. To make this usable in the real world, let’s translate it into a simple AI maturity assessment you can apply to any AI software development firm during selection.

Four Dimensions of AI Vendor Maturity

We recommend evaluating vendors across four dimensions: Methodology, Team, Architecture, and Governance. Think of it as a lightweight technical due diligence checklist for AI vendors rather than a heavyweight audit.

On Methodology, basic means “we do standard agile and sometimes train models.” Intermediate means they have a repeatable software development lifecycle for AI with data and experiment loops. Advanced means an explicit AI‑native lifecycle with continuous evaluation, model monitoring, and feedback integration.

On Team, basic is “developers and one data scientist.” Intermediate adds data engineering and some MLOps. Advanced means a dedicated MLOps team, specialized AI roles, and cross‑functional squads.

On Architecture, basic vendors embed model calls in app logic. Intermediate ones separate model services and use some modern AI architecture patterns. Advanced vendors design full production AI systems with feature stores, vector search, and abstraction layers that let the system evolve over time.

On Governance, basic vendors mention security and compliance but have no explicit strategy for AI‑specific risk. Intermediate vendors have informal practices for bias checks and approvals. Advanced vendors have codified responsible AI and AI governance policies with tooling support and regular reviews.
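The four dimensions translate naturally into a simple scorecard. This is a sketch under stated assumptions: levels map to 1 through 3, dimensions are equally weighted unless you pass weights, and the weakest dimension is reported separately because a single basic rating (often governance) can matter more than a good average. `assess_vendor` is a hypothetical name.

```python
from typing import Dict, Optional

LEVELS = {"basic": 1, "intermediate": 2, "advanced": 3}
DIMENSIONS = ("methodology", "team", "architecture", "governance")


def assess_vendor(ratings: Dict[str, str],
                  weights: Optional[Dict[str, float]] = None) -> Dict[str, object]:
    """Weighted average maturity (1 to 3) plus the weakest dimension and its level."""
    missing = [d for d in DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    weights = weights or {d: 1.0 for d in DIMENSIONS}
    total_weight = sum(weights[d] for d in DIMENSIONS)
    score = sum(LEVELS[ratings[d]] * weights[d] for d in DIMENSIONS) / total_weight
    weakest = min(DIMENSIONS, key=lambda d: LEVELS[ratings[d]])  # ties go to the first listed
    return {"score": round(score, 2), "weakest": weakest, "floor": ratings[weakest]}
```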

Questions to Ask an AI Software Development Firm Before Hiring

To turn this into action, here are concrete questions, aligned to the four dimensions, that you can ask in interviews or RFPs before hiring an AI software development firm for an AI‑heavy project.

- Methodology
  - “Walk me through your end‑to‑end process for an AI project, from discovery to post‑launch.” (Listen for data work, experimentation, and monitoring, not just coding.)
  - “How do you decide when a model is good enough to ship?” (You want specific metrics and evaluation frameworks, not hand‑waving.)
  - “How do you handle failed experiments?” (Mature firms talk about learning and iteration, not blame.)

- Team
  - “Who would be on the core team for our project, and what are their roles?” (Look for data engineering, ML, MLOps, and product, not just generic engineers.)
  - “Who owns model lifecycle management after go‑live?” (There should be a clear answer, not finger‑pointing.)

- Architecture
  - “Can you show an example of your AI architecture for a similar project?” (You’re checking for decoupled models, pipelines, and observability.)
  - “How do you design for switching or upgrading models or LLM providers over time?” (You want plans to avoid lock‑in.)

- Governance & Responsible AI
  - “What is your approach to responsible AI and AI governance in client projects?” (Look for policies, not platitudes.)
  - “How do you monitor and mitigate hallucinations and unsafe outputs in LLM systems?” (Expect specifics on guardrails, RAG, and human‑in‑the‑loop review.)
  - “Show me an example of your experiment tracking and model monitoring setup.” (Screenshots of real dashboards, with the practices behind them explained, are good signs.)

Strong answers will be concrete, with examples and trade‑offs. Weak answers will lean on tool names (“we use Kubernetes”) without explaining the underlying practices.

Matching Your Project’s Risk and Complexity to the Right Partner

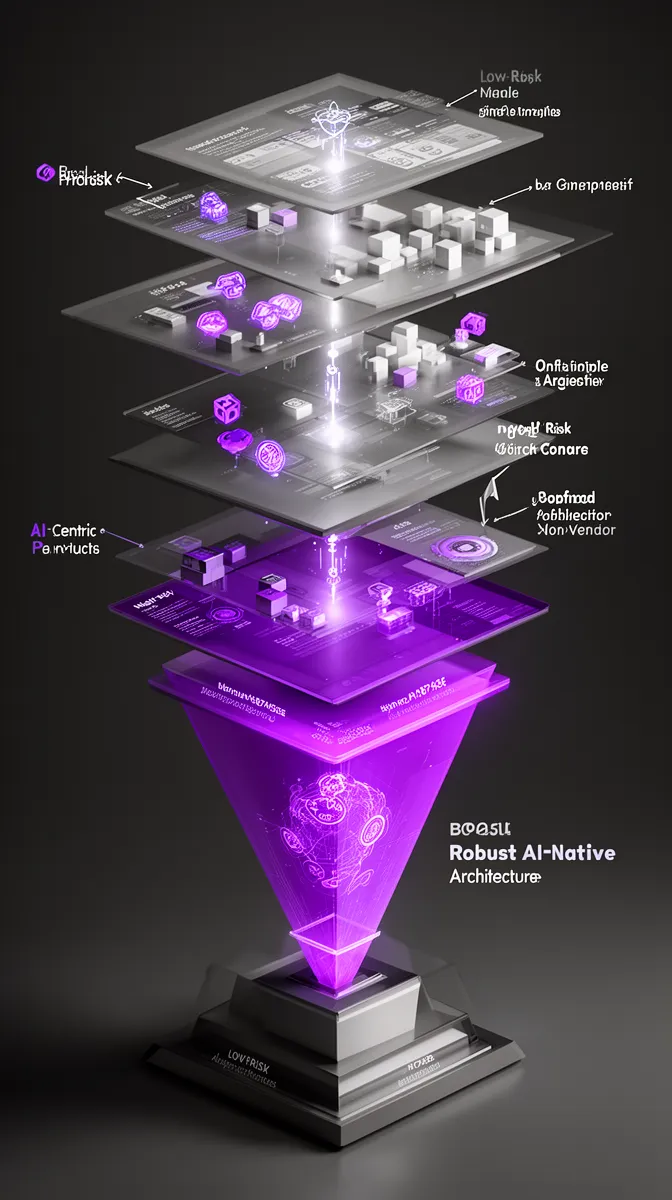

Not every project needs the most mature AI‑native firm you can find. Some work is straightforward enough that a traditional or AI‑augmented shop can do just fine. The key is to match partner type to project profile.

For low‑risk, adjacent analytics (dashboards, basic forecasts, simple personalization), a solid traditional dev shop with some AI augmentation can be enough. Here, the AI implementation strategy is mostly about integration and UX. If things go slightly wrong, the downside is limited.

For medium‑risk decision support (pricing suggestions, churn predictions, internal knowledge assistants), you want at least an AI‑augmented partner with good data and MLOps practices. Failure here means lost revenue or productivity, so the solution needs more robust engineering and operations behind it.

For high‑risk or AI‑centric products (customer‑facing AI agents, fraud detection, medical triage, financial decisions), you should insist on a truly AI‑native software development firm. This is where choosing the right partner is existential to the product’s success. If you’re building something in this category, treat selection like choosing a long‑term technology co‑founder, not a short‑term vendor.
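The three tiers above can be encoded as a rough screening rule. The attribute names and the rule that the most demanding attribute wins are hypothetical simplifications; a real risk classification should also weigh regulation, data sensitivity, and scale.

```python
# Partner guidance per risk tier, paraphrasing the three tiers described above.
PARTNER_FOR_TIER = {
    "low": "traditional or AI-augmented shop",
    "medium": "AI-augmented partner with solid data and MLOps practices",
    "high": "AI-native firm",
}


def risk_tier(ai_talks_to_customers: bool, high_stakes_or_regulated: bool,
              ai_drives_decisions: bool) -> str:
    """Hypothetical heuristic: the most demanding attribute decides the tier."""
    if high_stakes_or_regulated or ai_talks_to_customers:
        return "high"  # e.g. customer-facing agents, fraud detection, medical triage
    if ai_drives_decisions:
        return "medium"  # e.g. pricing suggestions, churn predictions, internal assistants
    return "low"  # e.g. dashboards, basic forecasts


def recommended_partner(ai_talks_to_customers: bool, high_stakes_or_regulated: bool,
                        ai_drives_decisions: bool) -> str:
    tier = risk_tier(ai_talks_to_customers, high_stakes_or_regulated, ai_drives_decisions)
    return PARTNER_FOR_TIER[tier]
```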

Common Failure Modes When You Pick the Wrong AI Partner

What happens when you mismatch project and partner? Industry case studies and news headlines offer plenty of examples, and the patterns repeat. Understanding them will sharpen your sense of risk.

Surface‑Level AI Features That Don’t Survive Real Usage

A common failure looks like this: a vendor ships an impressive demo of a chatbot or recommendation system. It works nicely in a pilot, on a curated dataset, under light load. Leadership is excited. Then real customers start using it.

Over time, inputs shift, new edge cases appear, and model drift eats away at performance. There’s no robust model monitoring, no clear owner for retraining, and no MLOps pipeline to roll out improvements safely. The system degrades in the background until one day a high‑profile failure makes people nervous.

Without a strong AI architecture and production‑grade operating practices, the easiest response is to quietly turn the AI off and go back to rules or humans. The project is declared “a useful learning exercise,” which is usually code for “it didn’t survive contact with reality.” Reports like the Landing AI production ML report document how widespread this pattern is.

Vendor Lock‑In, Brittle Integrations, and No Path to Improvement

The second major failure mode is strategic: you ship something that works, but you can’t evolve it. This often comes from tightly coupling app logic to a single proprietary platform or LLM API, with no abstraction layer.

Initially, progress is fast. Over time, though, costs increase, features you need aren’t available, or regulatory changes demand more control and AI governance. You discover that swapping providers would mean rewriting half the system. The lack of observability also means you have no clear sense of model behavior or risk profile.

An AI‑native partner designs for portability and evolution upfront: clean interfaces around models, configuration‑driven routing, modular pipelines. That’s the difference between a proof‑of‑concept and an asset you can build on for years as part of your broader enterprise AI strategy.
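Configuration‑driven routing is one way to keep that portability. A minimal sketch, assuming each provider is wrapped in an adapter with the same prompt‑in, text‑out signature; `LLMRouter`, the provider names, and the config shape are hypothetical, and no real vendor SDK is called.

```python
from typing import Callable, Dict, List

# An adapter wraps one vendor's SDK behind a common signature: prompt in, text out.
Completion = Callable[[str], str]


class LLMRouter:
    def __init__(self, providers: Dict[str, Completion], config: Dict[str, List[str]]) -> None:
        self.providers = providers
        self.config = config  # task name -> ordered provider names, e.g. loaded from a JSON file

    def complete(self, task: str, prompt: str) -> str:
        """Try providers in configured order, falling back on failure."""
        errors = []
        for name in self.config[task]:
            try:
                return self.providers[name](prompt)
            except Exception as exc:  # in production, catch provider-specific errors and log them
                errors.append(f"{name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))
```

Because the routing order lives in configuration, switching or adding a provider becomes a config change plus a new adapter rather than a rewrite.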

How Buzzi.ai Embodies an AI‑Native Software Development Firm

Everything so far has been vendor‑agnostic on purpose. Now let’s make it concrete and show how we at Buzzi.ai apply these principles as an AI software development firm for enterprises. You should use the same criteria on us that you apply to anyone else.

AI‑First Focus: Agents, Voice, and Workflow Automation

We focus on three core areas: custom AI agents, AI voice bots for WhatsApp and telephony, and workflow automation plus predictive analytics. These are all use cases where AI isn’t a sidecar; it’s the engine. That forces us to operate as a truly AI‑native shop, not an AI‑augmented dev house.

Take AI voice bots in emerging markets. Latency, background noise, multilingual support, and fail‑safes all matter. We design end‑to‑end: from data ingestion and training to LLM‑based voice assistant development, call routing, and monitoring. A traditional vendor with a few scripts bolted onto a telephony stack simply can’t manage that complexity reliably.

Similarly, our AI‑native AI agent development services combine orchestration, tool use, retrieval, and governance in one coherent architecture. These aren’t just chatbots—they’re agents embedded into your systems, with clear guardrails and auditability.

Methods, Structures, and Governance Built for AI

Operationally, we run the AI‑native lifecycle described earlier: data‑ and experiment‑first, with model evaluation and monitoring baked in. Our pods include dedicated MLOps engineers, and we treat ML model deployment and lifecycle management as first‑class concerns, not back‑office chores.

On governance, we align with emerging responsible AI and AI governance best practices, building observability and safety layers into every solution. That continues into our support model: post‑go‑live, we run improvement cycles that monitor performance, retrain where needed, and expand capabilities in partnership with you.

We position ourselves as a long‑term AI consulting partner offering enterprise AI solutions, AI automation services, and AI strategy consulting, not just an MVP factory. If you decide to work with us, we expect you to apply the maturity framework in this article to us as rigorously as to anyone else.

Conclusion: Turn AI Buzzwords into a Concrete Buying Decision

The label “AI software development firm” hides a wide range of capabilities and orientations. Once you see the spectrum from traditional to AI‑augmented to AI‑native, it becomes obvious why some projects thrive while others stall.

AI‑native firms differ in methodology (data‑ and experiment‑first), team roles (dedicated MLOps, ML engineering, prompt specialists), and architecture (AI‑centric design, observability, and governance). A structured AI maturity assessment across methodology, team, architecture, and governance turns “gut feel” into a defensible choice.

For low‑risk, peripheral use cases, a traditional or AI‑augmented partner may be enough. But for AI‑centric, high‑stakes, or regulated work, choosing an AI software development firm that is truly AI‑native is critical. That’s where firms like Buzzi.ai can make the difference between a pilot that fizzles and a platform that compounds value.

Use the questions and framework in this guide as your next vendor checklist. And if you’re exploring AI agents, voice, or workflow automation, talk to us at Buzzi.ai about an AI‑native approach to your next initiative—we’re happy to walk through how we’d apply this framework to your specific context.

FAQ

What is an AI‑native software development firm and how is it different from a traditional software company that just adds AI features?

An AI‑native software development firm is built around models, data, and experimentation as first‑class concerns, not as afterthoughts. Traditional firms may bolt on AI via simple API calls or third‑party widgets, but keep a feature‑first mindset. AI‑native teams design lifecycle management, MLOps, observability, and governance into the product from day one so AI remains reliable in production, not just impressive in demos.

How can I tell if an AI software development firm is truly AI‑focused and not just using buzzwords?

Look beyond marketing slides and ask for specifics on their AI methodology, team composition, and architecture. A truly AI‑focused firm will show you how they handle data pipelines, experiment tracking, model monitoring, and responsible AI practices. If they can’t explain who owns models after launch or how they manage drift and hallucinations, they’re likely more buzzword‑driven than AI‑native.

What questions should I ask an AI software development firm before hiring them for an AI‑heavy project?

Ask about their end‑to‑end AI lifecycle, who owns model lifecycle management post‑launch, and how they design for monitoring and governance. Include LLM‑specific questions such as how they mitigate hallucinations, implement guardrails, and handle prompt and context management. The vendor maturity framework in this article includes a full list of questions to ask before hiring, so you can compare partners systematically.

How does an AI‑native development methodology differ from a standard agile or traditional SDLC process?

Standard agile and SDLC are feature‑first and usually end at deployment, assuming deterministic behavior once tests pass. AI‑native development is data‑ and experiment‑first, with continuous loops for data improvement, ML experimentation, evaluation, and model monitoring after launch. It also adds AI‑specific checkpoints (bias reviews, performance gates, and governance controls) that are typically missing from traditional processes.

What specialized roles should a mature AI software development firm have on its team?

A mature AI software development firm will usually include ML engineers, data engineers, dedicated MLOps engineers, LLM/prompt engineers, AI product managers, and governance or ethics advisors. These roles complement application developers and DevOps to form a complete delivery capability. Without them, you’re likely dealing with a traditional team trying to stretch into AI rather than a truly AI‑native organization.

How does an AI‑native orientation change the architecture and technology stack of a project?

AI‑native orientation leads to architectures that decouple model services from application logic, use feature stores and vector databases, and emphasize robust AI pipelines. You’ll also see built‑in model observability, drift detection, and governance tooling for audit trails and access control. This makes it far easier to evolve models, switch LLM providers, and scale reliable production AI systems over time.

When should I choose an AI‑native software development firm instead of a traditional dev shop with AI capabilities?

You should prioritize an AI‑native partner when AI is central to the product or when decisions are high‑stakes: customer‑facing agents, fraud detection, medical or financial decisions, or regulated domains. In these contexts, risks from poor AI architecture, weak governance, or missing MLOps can be existential. For simpler analytics or low‑risk personalization, a competent AI‑augmented shop may be sufficient.

How should a serious AI firm handle data pipelines, MLOps, and model monitoring in production?

A serious AI firm treats data pipelines, MLOps, and monitoring as core infrastructure. That means reproducible training and deployment pipelines, a model registry, experiment tracking, and dashboards tracking performance, drift, and user overrides. If you want to see how we do this in practice, explore Buzzi.ai’s AI‑native AI agent development services and talk with our team about our MLOps stack.

What are common failure modes when companies choose the wrong type of AI development partner?

Common failure modes include impressive demos that degrade in production due to lack of model monitoring and lifecycle management, and brittle systems locked into specific vendors or models. There are also compliance and reputational risks when governance and responsible AI practices are weak. Many publicized AI incidents trace back to these structural issues rather than just “bad models.”

How does Buzzi.ai demonstrate that it is an AI‑native software development firm for enterprises?

Buzzi.ai demonstrates AI‑native maturity through its focus areas (agents, voice, workflow automation), dedicated ML and MLOps roles, and AI‑centric architectures. We run an AI‑native lifecycle with data‑ and experiment‑first practices, model observability, and strong governance. As an AI software development firm for enterprises, we aim to be a long‑term partner for AI automation and strategy, not just a vendor that builds one‑off prototypes.