AI Language Model Training for Enterprises: Stop Pretraining by Default

AI language model training doesn’t always mean pretraining. Use a capability framework to choose prompt engineering, RAG, or fine-tuning for faster ROI.

Most enterprises don’t have an AI language model training problem—they have a capability selection problem. Pretraining from scratch is the most expensive way to discover you only needed better retrieval, evaluation, and governance.

If that sounds a little too blunt, it’s because the default assumption in many boardrooms is still: “We need to train our own model.” In 2026, that phrase is ambiguous to the point of being misleading. Are we talking about building a new foundation model from raw text? Or are we talking about making an existing model behave correctly inside your workflow, under your policies, with your data?

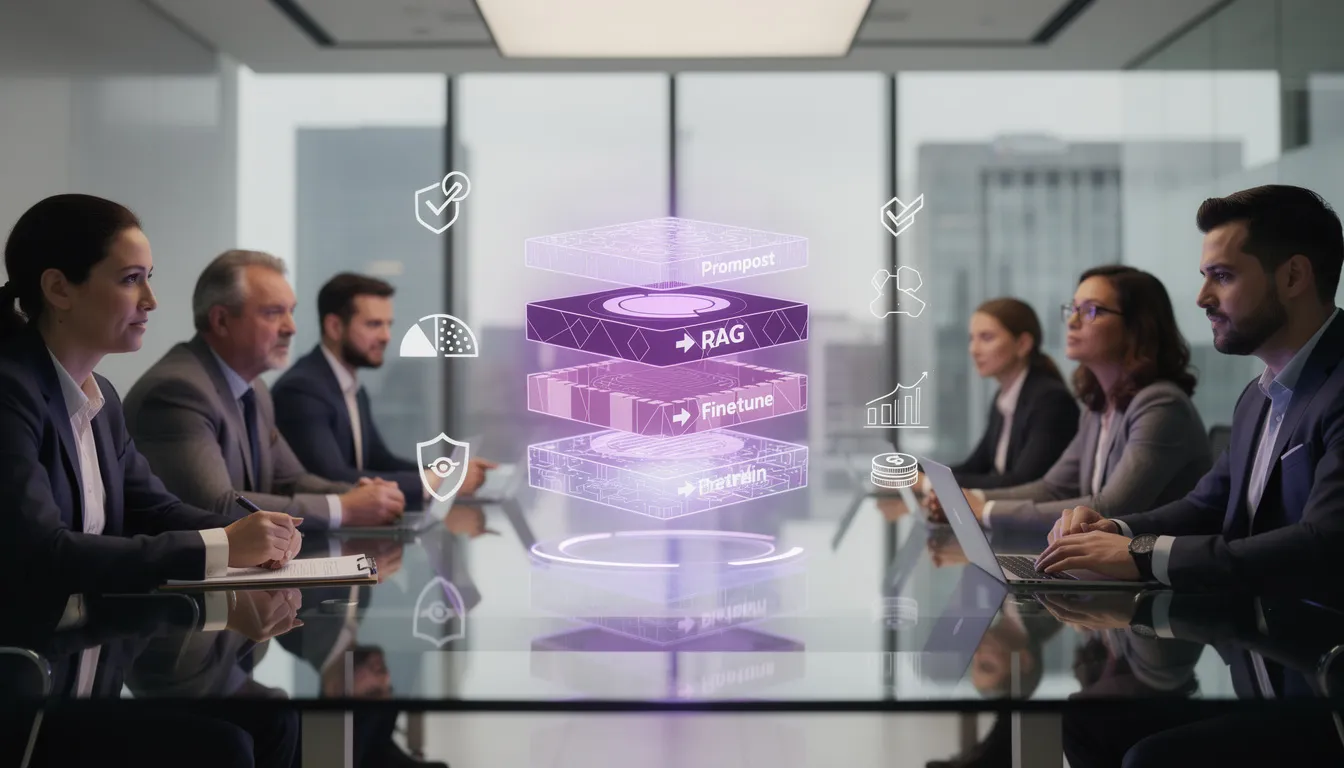

This guide reframes language model training as a spectrum: prompts → retrieval-augmented generation (RAG) → fine-tuning (including PEFT/LoRA) → pre-training. The goal isn’t to pick the most impressive option. The goal is to pick the lowest-cost approach that meets your requirements for accuracy, privacy, latency, and control—and to do it in a way you can evaluate, govern, and roll back.

Along the way, we’ll also cover the part most teams don’t budget for: operational reality. The long-term cost of language model training rarely lives in the training run; it lives in evaluation harnesses, monitoring, incident response, compliance reviews, and the organizational muscle required to ship reliably.

At Buzzi.ai, we build enterprise AI agents and model-adaptation systems—RAG pipelines, fine-tuning workflows, and governance-first deployments—precisely to help teams avoid irreversible bets while still shipping measurable outcomes.

Let’s start by cleaning up the language.

What “AI language model training” means in 2026 (and why it’s misleading)

When someone says “language model training,” they’re often importing assumptions from classic machine learning: collect data, train a model, deploy it, repeat. That mental model worked when the model was the product. With foundation models, the model is a platform—and most enterprise value comes from how you adapt and operate that platform.

In other words, AI language model training is usually shorthand for “making a model useful for us.” But there are multiple ways to do that, and they don’t have the same economics or risk.

Pre-training vs adaptation: two very different jobs

Pre-training is the process of learning general language and world statistics from massive corpora. It’s compute-hungry, data-hungry, and inherently research-like: you don’t fully know what you’ll get until you run the experiment. Pre-training creates foundation models.

Adaptation, by contrast, is everything we do to make a foundation model behave usefully in a particular domain or workflow. Adaptation includes prompt engineering, tool use, retrieval-augmented generation, guardrails, and fine-tuning.

Executives conflate these two jobs for understandable reasons. Vendors talk about “custom models” as a catch-all. And many leaders still remember the era when “training our own model” was the only path to differentiation.

Consider a short vignette. A bank asks for “custom language model training” because its compliance team is terrified of hallucinations. But what they actually need is (1) policy-grounded answers with citations, (2) refusal behavior when the policy doesn’t cover a question, and (3) logging and review workflows. That’s mostly RAG, evaluation, and governance—not pre-training.

A simple mental model: capabilities are cheaper than weights

Enterprise outcomes usually come from capabilities, not from changing weights. Capabilities come from instructions, tools, retrieval, guardrails, and evaluation gates. They’re modular: you can swap a retrieval index, add a validator, or tighten a policy without retraining a model.

Changing weights can be powerful, but it also commits you to a long-lived cost center. Once you introduce a fine-tuned model (or, worse, a fully pre-trained one), you’ve created a new artifact that must be governed, versioned, evaluated, and defended under scrutiny.

An analogy helps. If you want a better driving experience, you don’t build an engine factory; you add software, navigation, and safety systems. Similarly, most enterprises don’t need to build a new foundation model; they need to add retrieval, tool access, and governance around an existing one.

The hidden cost center: operations, not training

The total cost of ownership for language model training is often dominated by operations. Regardless of whether you do prompt engineering, RAG, or fine-tuning, you need a baseline set of ongoing responsibilities:

- Data pipelines: document ingestion, redaction, indexing, lifecycle management

- Evaluation harness: test sets, regression checks, human review loops, quality dashboards

- Monitoring: drift detection, retrieval failures, latency and cost tracking, abuse signals

- Incident response: on-call ownership, escalation paths, rollback playbooks

- Risk and compliance: access control audits, retention policies, red-teaming, approvals

The key point: different approaches shift cost between “training” and “operations.” The mistake is optimizing the one-time build while ignoring the recurring obligation.

The Language Model Capability Strategy Framework (Prompt → RAG → Fine-tune → Pretrain)

Here’s the practical enterprise framework: treat AI language model training as a ladder of increasing commitment. Start at the bottom, climb only when you can prove the lower level can’t meet requirements, and instrument each step so you know what improved—and what it cost.

This is also the best answer to the question that quietly drives most budgets: how to choose between fine-tuning and pre-training a language model. Most of the time, the right move is neither. The right move is to build a system around a foundation model.

Level 1: Prompt engineering (fastest time-to-value)

Prompt engineering works when the task is clear, the output format is constrained, and you can tolerate some variability. It’s the fastest way to ship because it mostly uses what foundation models already know—and relies on your ability to specify what you want.

What prompt engineering actually needs in an enterprise setting is less “clever prompting” and more engineering hygiene:

- Strong instructions and examples (in-context learning)

- Structured outputs (JSON schemas, templates)

- Prompt versioning and change control

- Tool use (calling APIs) so the model doesn’t guess

Typical failure modes include prompt drift across versions, brittle edge cases, and hallucinations when the model lacks grounding. If you see repeated “it sounds confident but it’s wrong,” it’s usually a sign you’ve reached the limits of prompts alone.

Example: customer support triage. If the goal is to classify incoming tickets, draft responses using a strict template, and route to the right team, prompts + tools can get you to production quickly—especially if you validate outputs before they hit customers.
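The “validate before it hits customers” step can be sketched in plain code. This is a minimal sketch, assuming the model has been prompted to return JSON with hypothetical `category` and `draft_reply` fields; the category names and team routing table are illustrative, not a prescribed taxonomy.

```python
import json

# Hypothetical routing table: allowed ticket categories mapped to owning teams.
ROUTES = {
    "billing": "finance-support",
    "outage": "sre-oncall",
    "account_access": "identity-team",
}
REQUIRED_FIELDS = ("category", "draft_reply")


def validate_triage(raw_output: str) -> dict:
    """Parse a model's JSON triage output and decide where it goes.

    Anything malformed or outside the category allowlist is sent to a human
    review queue instead of being routed or shown to a customer.
    """
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return {"route": "human-review", "reason": "invalid JSON"}
    if not isinstance(data, dict):
        return {"route": "human-review", "reason": "not a JSON object"}

    missing = [f for f in REQUIRED_FIELDS if not data.get(f)]
    if missing:
        return {"route": "human-review", "reason": f"missing fields: {missing}"}

    category = data["category"]
    if not isinstance(category, str) or category not in ROUTES:
        return {"route": "human-review", "reason": f"unknown category: {category!r}"}
    return {"route": ROUTES[category], "draft_reply": data["draft_reply"]}


print(validate_triage('{"category": "billing", "draft_reply": "We are checking your invoice."}'))
# {'route': 'finance-support', 'draft_reply': 'We are checking your invoice.'}
print(validate_triage("Sure! The category is billing."))
# {'route': 'human-review', 'reason': 'invalid JSON'}
```

Keeping the validator outside the prompt is the design point: a later prompt change can’t silently loosen the rules about what reaches a customer.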

Level 2: RAG as “knowledge integration,” not a chatbot trick

Retrieval-augmented generation (RAG) is the default for knowledge-heavy enterprises because it turns your internal content into the source of truth. Instead of hoping the model “knows” your policies, you retrieve the relevant policy text at run time and ground the answer in it.

A real RAG pipeline is a system, not a single vector search:

- Document hygiene: ownership, freshness, and canonical sources

- Chunking and embeddings

- Vector databases (plus metadata filters and access control)

- Retrieval + reranking

- Citations and “answerability” thresholds
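Chunking is the least glamorous item in that list and one of the easiest to get wrong. The sketch below splits a document into fixed-size windows with overlap, so text near a boundary appears in both neighboring chunks and a sentence that straddles a boundary is more likely to survive intact in one of them. Counting words instead of model tokens is a simplifying assumption; production pipelines usually chunk by tokens and respect headings or paragraph boundaries.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping windows of `chunk_size` words.

    Consecutive chunks share `overlap` words, so content near a boundary
    appears in both neighboring chunks.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(doc, chunk_size=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```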

Why does RAG often beat fine-tuning for enterprise knowledge? Because policies and facts change, and RAG updates happen in the knowledge base, not in model weights. It also improves auditability: you can show the source text that drove the answer.

Example: internal policy Q&A. Users ask, “Can we reimburse this expense?” The assistant retrieves the relevant section of the expense policy, answers with citations, and refuses when it can’t find coverage. That’s governance-friendly by design.
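The refusal behavior in that example comes from an answerability threshold. The sketch below is deliberately simplified: it scores chunks with bag-of-words cosine similarity instead of embeddings, uses made-up policy snippets and section IDs, and refuses when the best score falls below a hypothetical threshold of 0.2. A real system would use an embedding model, a vector database, and a reranker, and would tune the threshold on a labeled test set.

```python
import math
import re
from collections import Counter

# Hypothetical policy chunks, keyed by a citable section ID.
POLICY_CHUNKS = {
    "expense-4.2": "Client meals are reimbursable up to 75 USD per person with an itemized receipt.",
    "expense-6.1": "Personal entertainment and alcohol purchased alone are not reimbursable.",
    "travel-2.3": "Economy class is required for flights shorter than six hours.",
}


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 2, threshold: float = 0.2) -> dict:
    """Return the top-k section IDs to cite, or a refusal if retrieval is weak."""
    q = bag_of_words(question)
    scored = sorted(
        ((cosine(q, bag_of_words(text)), section) for section, text in POLICY_CHUNKS.items()),
        reverse=True,
    )
    if not scored or scored[0][0] < threshold:
        return {"answerable": False, "citations": []}  # refuse or escalate instead of guessing
    citations = [section for score, section in scored[:k] if score >= threshold]
    return {"answerable": True, "citations": citations}


print(retrieve("Can we reimburse a client meal with a receipt?"))
# {'answerable': True, 'citations': ['expense-4.2']}
print(retrieve("What is the parental leave policy?"))
# {'answerable': False, 'citations': []}
```

Only the cited chunks would then go into the generation prompt, which is what lets the final answer point back to specific policy sections.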

Level 3: Fine-tuning (including PEFT/LoRA) for behavior and style

Fine-tuning is about consistent behavior. Use it when you need the model to reliably follow a specific style, adhere to strict schemas, use domain jargon correctly, or make tool calls with higher precision than prompts alone can achieve.

It helps to distinguish two common fine-tuning goals:

- Instruction tuning: teach consistent task behavior and formatting

- Domain-specific adaptation: nudge the model toward your terminology and patterns

In many enterprise settings, parameter-efficient fine-tuning (PEFT) methods like LoRA adapters are especially attractive. They reduce cost, speed iteration, and make rollbacks easier because you can version adapters rather than replacing an entire model.
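The cost advantage of LoRA follows from simple arithmetic. Instead of updating a full weight matrix W of shape d × k, LoRA freezes W and trains two low-rank factors, B (d × r) and A (r × k), whose product BA (scaled by α/r in the original paper) is added to W. Trainable parameters per adapted matrix therefore drop from d · k to r · (d + k). The sketch below runs that arithmetic for an assumed 4096 × 4096 projection at rank 8; the shapes are illustrative, not tied to a specific model.

```python
def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for one LoRA-adapted d x k matrix: B is d x r, A is r x k."""
    return r * (d + k)


def full_finetune_params(d: int, k: int) -> int:
    """Parameters updated when fine-tuning the same d x k matrix directly."""
    return d * k


d, k, r = 4096, 4096, 8  # assumed projection shape and LoRA rank
lora = lora_trainable_params(d, k, r)
full = full_finetune_params(d, k)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
# full: 16,777,216  lora: 65,536  ratio: 0.3906%
```

Because the adapter is a small, separate artifact, it can be stored, versioned, and swapped independently of the base weights, which is what makes rollback cheap.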

Risks are real: training data can leak sensitive information, overfitting can degrade generality, and evaluation becomes more complex because you can break previously working behaviors. Fine-tuning without a robust eval harness is like updating production code without tests.

Example: structured claim notes generation. If every output must match a schema (fields, codes, mandatory sections), fine-tuning plus schema validators can improve reliability dramatically, reducing downstream manual rework.

Level 4: Pre-training (rare, but real)

Pre-training is a nuclear option. It’s only justified when you have an unusually strong combination of constraints and scale:

- A unique data moat at massive volume

- Extreme latency or on-device constraints that require a specialized model

- Regulatory sovereignty rules that prevent using external foundation models

- Niche language coverage not served by existing models

Even then, pre-training demands organizational readiness: GPU procurement, data licensing and cleaning, a research-caliber team, safety work, and a multi-year roadmap. The economic inflection point is when marginal performance gains justify multi-million-dollar fixed costs.

Thought experiment: a mid-sized enterprise with a few million internal documents. That sounds like a lot until you compare it to the scale required to create a competitive foundation model. Most organizations simply don’t cross the threshold where pre-training is rational.
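The thought experiment is easy to make concrete. The sketch below assumes 3 million documents averaging 1,000 tokens each and applies the compute-optimal rule of thumb from DeepMind’s Chinchilla study (Hoffmann et al., 2022) of roughly 20 training tokens per model parameter. The corpus figures and the 7-billion-parameter target are illustrative assumptions; real token counts depend on the tokenizer and on how much of the corpus survives deduplication and filtering.

```python
def corpus_tokens(num_docs: int, avg_tokens_per_doc: int) -> int:
    return num_docs * avg_tokens_per_doc


def compute_optimal_tokens(num_params: float, tokens_per_param: float = 20.0) -> float:
    """Chinchilla-style rule of thumb: about 20 training tokens per parameter."""
    return num_params * tokens_per_param


internal = corpus_tokens(num_docs=3_000_000, avg_tokens_per_doc=1_000)  # assumed corpus
needed = compute_optimal_tokens(7e9)  # assumed 7B-parameter target model

print(f"internal corpus: {internal / 1e9:.0f}B tokens")
print(f"rule-of-thumb need for 7B params: {needed / 1e9:.0f}B tokens")
print(f"coverage: {internal / needed:.1%}")
# internal corpus: 3B tokens
# rule-of-thumb need for 7B params: 140B tokens
# coverage: 2.1%
```

Many recent open-weight models were trained on far more tokens than this ratio suggests, so the gap is usually wider in practice.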

For more detail on practical fine-tuning workflows, see OpenAI’s fine-tuning guide. And for the underlying idea behind LoRA, the original paper LoRA: Low-Rank Adaptation of Large Language Models is still the clearest reference.

Decision criteria: map enterprise constraints to the right approach

The best approach to enterprise language model training—pretraining vs adaptation—depends less on ideology and more on constraints. You can treat this like an engineering decision, but it’s really an economic one: which path gets you to a measurable outcome with acceptable risk?

This is where a structured capability assessment pays for itself. At Buzzi.ai, our AI discovery and capability assessment is designed to translate business requirements into concrete system choices and an AI implementation roadmap you can actually execute.

Accuracy and auditability: when citations matter more than “smartness”

If your users need to trust answers—legal ops, compliance, HR policy, regulated customer communications—then auditability matters more than “wow.” Prefer RAG with citations, refusal behaviors, and traceable source selection.

If outputs must be deterministic or strictly structured, consider fine-tuning plus constrained decoding and validators. The model can still be “creative” inside a box, but the system enforces the box.

Evaluation also changes depending on what you optimize. For knowledge-heavy systems, the key model evaluation criteria include groundedness (did the answer come from retrieved sources?), factuality checks, and citation correctness. For structured generation, schema tests and regression suites matter more than open-ended benchmarks.
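Citation correctness is one of the easier criteria to automate. The sketch below assumes a hypothetical answer format in which the model tags claims with bracketed section IDs such as `[expense-4.2]`, and checks two things: the answer cites at least one source, and every cited ID was actually retrieved for that request. It does not verify that the cited text supports the claim; that usually needs human review or a separate model-based judge.

```python
import re

# Assumed citation format: a section ID in square brackets, e.g. [expense-4.2].
CITATION_PATTERN = re.compile(r"\[([A-Za-z0-9_.-]+)\]")


def check_citations(answer: str, retrieved_ids: set[str]) -> dict:
    """Flag answers that cite nothing or cite sources that were never retrieved."""
    cited = CITATION_PATTERN.findall(answer)
    unknown = sorted(set(cited) - retrieved_ids)
    return {
        "cited": cited,
        "has_citation": bool(cited),
        "unretrieved_citations": unknown,
        "passes": bool(cited) and not unknown,
    }


retrieved = {"expense-4.2", "expense-6.1"}
print(check_citations("Client meals are reimbursable up to 75 USD [expense-4.2].", retrieved))
print(check_citations("Alcohol is always reimbursable [expense-9.9].", retrieved))
```

Run over a regression set, the `passes` rate becomes a number you can track across prompt, index, and model changes.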

Mini-case: a compliance team rejects an assistant because it can’t justify answers. Adding citations via RAG—and an escalation path when confidence is low—often turns a rejected demo into an approvable system.

Data privacy, governance, and residency

Governance constraints frequently decide your architecture before anyone debates model quality. Where can data go? What logs are allowed? How do you handle PII/PHI? What are your retention and deletion requirements?

Common patterns include private model hosting, VPC deployments, on-prem options, and hybrid retrieval with redaction. In many cases, RAG reduces risk because it can minimize what you put into training data. You can keep embeddings private, apply access controls at retrieval time, and avoid “baking” sensitive content into weights.
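Applying access controls at retrieval time means filtering candidate chunks by the caller’s entitlements before anything reaches the model’s context. A minimal sketch, assuming each chunk carries a hypothetical `allowed_groups` field copied from the source system’s permissions when the document was indexed:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # copied from source-system ACLs at indexing time


def filter_by_access(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep only chunks the user is entitled to see.

    Intended to run before ranking and generation, so restricted text
    never enters the prompt.
    """
    return [c for c in chunks if c.allowed_groups & user_groups]


index = [
    Chunk("hr-salary-bands", "Band 5 salary ranges by region.", frozenset({"hr"})),
    Chunk("it-vpn-runbook", "To reset the VPN client, open settings.", frozenset({"all-staff"})),
]
visible = filter_by_access(index, user_groups={"all-staff", "engineering"})
print([c.doc_id for c in visible])  # ['it-vpn-runbook']
```

The design choice is to enforce permissions in the retrieval layer rather than asking the model to withhold restricted content, because a filter is auditable and a prompt instruction is not.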

Example: in healthcare, you might keep the retrieval layer entirely inside your network and ensure content is de-identified before any fine-tuning. The decision isn’t just “HIPAA compliant or not”; it’s whether your architecture supports governance continuously.

For a governance lens that’s useful beyond vendor marketing, the NIST AI Risk Management Framework is a strong baseline for risk thinking and operational controls.

Latency and cost: the practical SLO view

Latency and cost aren’t abstract; they show up as SLOs. Token costs scale with output length and traffic. Retrieval adds its own latency and infrastructure costs. Fine-tuning adds training cost and can change serving cost—sometimes for the better if you can move to a smaller tuned model.
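Because token costs scale with both traffic and output length, a back-of-envelope model helps before committing to an architecture. The per-token prices below are placeholders, not any vendor’s actual rates; substitute your own contract pricing. Retrieval infrastructure (vector database, reranker) would be a separate line item on top of this.

```python
def monthly_token_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    days: int = 30,
) -> float:
    """Estimate monthly model spend from average tokens per request.

    Retrieved context counts as input tokens, so RAG raises input_tokens;
    strict output templates lower output_tokens.
    """
    per_request = (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k
    return requests_per_day * days * per_request


# Placeholder prices; compare a short prompt with one carrying ~3,000 tokens of retrieved context.
base = monthly_token_cost(10_000, input_tokens=500, output_tokens=300, price_in_per_1k=0.001, price_out_per_1k=0.002)
rag = monthly_token_cost(10_000, input_tokens=3_500, output_tokens=300, price_in_per_1k=0.001, price_out_per_1k=0.002)
print(f"prompt-only: ${base:,.0f}/month, with RAG context: ${rag:,.0f}/month")
# prompt-only: $330/month, with RAG context: $1,230/month
```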

One underappreciated truth: “one big model” is often worse than a system of smaller components. A high-end model might be great for reasoning, but you don’t want it doing everything. Routing, caching, and tool calls can reduce cost and improve responsiveness.
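Routing and caching can be sketched in a few lines. The example below assumes two hypothetical model tiers behind a stand-in `call_model` function and uses a crude heuristic (question length plus a few keywords) to decide when the larger model is worth paying for. Real routers often use a trained classifier or the cheap model’s own confidence; the cache here is exact-match only and would need invalidation whenever prompts or source documents change.

```python
from functools import lru_cache

# Hypothetical tiers; in practice these map to real model deployments.
SMALL_MODEL = "small-fast-model"
LARGE_MODEL = "large-reasoning-model"
REASONING_HINTS = ("why", "compare", "analyze", "trade-off", "forecast")


def choose_model(question: str) -> str:
    """Send short, routine questions to the small tier; escalate the rest."""
    q = question.lower()
    if len(q.split()) > 40 or any(hint in q for hint in REASONING_HINTS):
        return LARGE_MODEL
    return SMALL_MODEL


def call_model(model: str, question: str) -> str:
    # Stand-in for a real API call; returns a tagged string for illustration.
    return f"[{model}] answer to: {question}"


@lru_cache(maxsize=10_000)
def answer(question: str) -> str:
    """Exact-match cache: a repeated question skips the model call entirely."""
    return call_model(choose_model(question), question)


print(answer("How do I reset my VPN password?"))        # routed to the small tier
print(answer("Compare our Q3 churn drivers with Q2."))  # routed to the large tier
answer("How do I reset my VPN password?")               # served from cache
print(answer.cache_info().hits)  # 1
```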

Qualitative comparison: a customer support agent might need fast, cheap, repeatable answers—so a smaller model with RAG and strict guardrails wins. An analyst assistant might need deeper reasoning and longer context—so a larger model with selective retrieval and caching might be worth the spend.

Change rate of knowledge and policy

If your knowledge changes weekly, RAG usually wins. Fine-tuning becomes a treadmill: every update implies new training data, new runs, and new evaluation cycles.

If behavior changes rarely but must be consistent (tone, structure, tool calling), fine-tuning can be worth it. The key is to separate “behavior” from “facts.”

In practice, the best enterprise pattern is often combined: a tuned model for consistent behavior plus RAG pipelines for freshness. Product catalogs change daily; brand voice changes quarterly. Architect accordingly.

Architecture patterns that combine RAG, fine-tuning, and prompts

Enterprises succeed with language model training when they stop treating the LLM as a single monolith and start treating it as one component in a workflow. That is also the cleanest answer to the question of how to implement RAG instead of training a custom language model: you don’t replace “training” with “RAG.” You replace “weights as truth” with “systems as truth.”

Below are three patterns we see repeatedly in successful LLM deployment programs.

The “RAG-first assistant” pattern (most enterprise wins)

This is the default for most internal assistants: user request → route to the right policy/tool → retrieve content → generate with citations → run post-checks → deliver or escalate.

The power comes from guardrails and thresholds:

- Allowlist sources (only approved SOPs, runbooks, policies)

- Answerability scoring (refuse when retrieval is weak)

- Escalation paths (open a ticket, notify a human)

- Post-generation checks (PII redaction, policy compliance)
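A post-generation PII check can start as simple pattern matching. The sketch below redacts email addresses and US-style phone numbers with regular expressions. The patterns are deliberately narrow assumptions: regexes alone miss names, addresses, and free-text identifiers, so production systems typically pair them with a dedicated PII detection service and human review for high-risk workflows.

```python
import re

# Illustrative patterns only; they intentionally cover a narrow set of formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with typed placeholders and report which types were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


clean, found = redact("Contact jane.doe@example.com or 555-867-5309 for access.")
print(clean)  # Contact [EMAIL] or [PHONE] for access.
print(found)  # ['EMAIL', 'PHONE']
```

Returning the detected types, not just the cleaned text, lets the pipeline log that a redaction happened and escalate if a given type should never appear in an answer.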

Example: an IT helpdesk assistant that cites runbooks and opens tickets. When it can’t find relevant guidance, it doesn’t guess—it routes to a human and attaches the retrieved context.

The “tuned core + RAG edge” pattern (behavior + freshness)

Here, fine-tuning handles the “how” while RAG handles the “what.” You fine-tune for tone, structure, and tool calling reliability. You use retrieval-augmented generation for facts and policy references.

To make this safe and maintainable, add evaluation gates: schema validators for structured outputs and groundedness scoring for retrieved claims. And prefer versioned LoRA adapters for rollbacks instead of monolithic model replacements.
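Versioned adapters make rollback a pointer change rather than a redeploy. The registry below is a hypothetical in-memory sketch: it records each adapter version with the score it achieved on the eval suite and refuses to promote a version that misses an assumed gate of 0.9. A real setup would persist this state and load adapter weights through your serving stack.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AdapterRegistry:
    """Hypothetical in-memory registry of LoRA adapter versions for one base model."""

    base_model: str
    min_eval_score: float = 0.9  # assumed promotion gate from the regression suite
    scores: dict[str, float] = field(default_factory=dict)  # version -> eval score
    history: list[str] = field(default_factory=list)  # promotion order; last entry is live

    def register(self, version: str, eval_score: float) -> None:
        self.scores[version] = eval_score

    def promote(self, version: str) -> bool:
        """Make a version live only if it is registered and passed the eval gate."""
        if version not in self.scores or self.scores[version] < self.min_eval_score:
            return False
        self.history.append(version)
        return True

    def rollback(self) -> Optional[str]:
        """Retire the live version; return the one now serving (None means base model only)."""
        if self.history:
            self.history.pop()
        return self.active

    @property
    def active(self) -> Optional[str]:
        return self.history[-1] if self.history else None


registry = AdapterRegistry(base_model="base-llm")  # hypothetical model name
registry.register("claims-v1", eval_score=0.93)
registry.register("claims-v2", eval_score=0.95)
registry.register("claims-v3", eval_score=0.81)
registry.promote("claims-v1")
registry.promote("claims-v2")
print(registry.promote("claims-v3"))  # False: below the eval gate
print(registry.active)                # claims-v2
print(registry.rollback())            # claims-v1
```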

Example: insurance claims summarization. The tuned core produces consistent, regulator-friendly summaries; RAG pulls the specific policy clauses and claim history needed for accuracy.

The “prompt + tools” pattern (when you don’t even need RAG)

Sometimes the cleanest “knowledge base” is already an API: CRM, ERP, ticketing, billing, inventory. In these cases, the model should orchestrate tools, not memorize anything.

This pattern is usually underrated because it’s less flashy. But it’s often the most governable: access control lives in existing systems, and logging is straightforward.

Example: a sales ops assistant pulls pipeline metrics from the CRM, summarizes changes, and drafts follow-ups. The truth lives in the CRM; the LLM just translates and coordinates.
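In the prompt + tools pattern, the model proposes a tool call and ordinary code decides whether to execute it. A minimal dispatcher sketch, assuming the model emits JSON of the form `{"tool": ..., "args": {...}}`; `get_pipeline_summary` and its data are hypothetical stand-ins for a real CRM client, not an actual CRM API.

```python
import json
from typing import Any, Callable


def get_pipeline_summary(region: str, quarter: str) -> dict[str, Any]:
    # Stand-in for a CRM query; a real version would call the CRM with the user's credentials.
    return {"region": region, "quarter": quarter, "open_deals": 42, "weighted_value": 1_250_000}


# Allowlist: only these functions can be invoked, each with a fixed set of argument names.
TOOLS: dict[str, tuple[Callable[..., Any], set[str]]] = {
    "get_pipeline_summary": (get_pipeline_summary, {"region", "quarter"}),
}


def dispatch(model_output: str) -> dict[str, Any]:
    """Validate a model-proposed tool call and execute it only if it is allowed."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return {"ok": False, "error": "tool call is not valid JSON"}
    if not isinstance(call, dict):
        return {"ok": False, "error": "tool call must be a JSON object"}

    name, args = call.get("tool"), call.get("args", {})
    if not isinstance(name, str) or name not in TOOLS:
        return {"ok": False, "error": f"tool not allowed: {name!r}"}
    func, expected_args = TOOLS[name]
    if not isinstance(args, dict) or set(args) != expected_args:
        return {"ok": False, "error": f"unexpected arguments for {name}"}
    return {"ok": True, "result": func(**args)}


print(dispatch('{"tool": "get_pipeline_summary", "args": {"region": "EMEA", "quarter": "Q3"}}'))
print(dispatch('{"tool": "delete_account", "args": {"id": 7}}'))  # rejected: not on the allowlist
```

Because execution goes through the allowlist and the underlying system’s own permissions, the model never gains more access than the tool it calls.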

If you’re building assistants that act inside real enterprise workflows, our AI agent development for enterprise workflows focuses on exactly these patterns: tool orchestration, retrieval, guardrails, and measurable outcomes.

For a practical overview of RAG patterns from a major platform perspective, Microsoft’s guide on using your data with Azure OpenAI is a useful reference—even if you’re not on Azure—because it makes the system boundaries explicit.

Costs and timelines: what enterprises underestimate (and how to budget)

The phrase “AI language model training” tends to anchor budgets on the most visible event: the training run. But the biggest overruns come from what happens before (data readiness, evaluation design) and after (operations, governance, iteration).

To budget well, you want to understand the true cost drivers for each level of the capability ladder—and why they behave differently.

Pre-training cost drivers (and why they don’t scale down)

Pre-training costs don’t scale down gracefully. “We’ll do a small pre-training run” often yields a weak model and still forces you to build most of the same machinery.

Surprising cost buckets include:

- Compute procurement: GPUs, networking, storage, and scheduling

- Data licensing and cleaning: legal review, deduplication, filtering, provenance

- Research iteration: multiple runs to tune architecture and training recipes

- Safety work: red-teaming, alignment, abuse prevention

- Evaluation: broad benchmark coverage plus domain-specific tests

- Model hosting: serving infrastructure and ongoing optimization

This is why the question “When is it worth pre-training a language model for my business?” is usually answered with a follow-up question: are you ready to run an ongoing research and operations program, rather than a one-off project?

Fine-tuning cost drivers (data and evaluation, not just GPUs)

Fine-tuning looks cheaper because it often is. But enterprises still underestimate the cost of high-quality training data and the evaluation harness required to prevent regressions.

Quality dominates quantity. Two hundred high-quality instruction pairs with clear success criteria can outperform 20,000 noisy examples that encode inconsistency and errors. Preference data (what “good” looks like) is especially valuable—and especially hard to produce without domain experts.
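One concrete way noisy data encodes inconsistency is the same instruction paired with different target outputs. The sketch below, over a hypothetical list of instruction/output pairs, drops exact duplicates after light normalization and flags instructions whose targets disagree, so a domain expert can decide which one is correct. It is a first-pass filter, not a substitute for expert review.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(text.lower().split())


def audit_pairs(pairs: list[tuple[str, str]]) -> tuple[list[tuple[str, str]], dict[str, set[str]]]:
    """Return deduplicated pairs plus instructions mapped to conflicting outputs."""
    seen = set()
    unique = []
    outputs_by_instruction = defaultdict(set)
    for instruction, output in pairs:
        key = (normalize(instruction), normalize(output))
        outputs_by_instruction[key[0]].add(key[1])
        if key not in seen:
            seen.add(key)
            unique.append((instruction, output))
    conflicts = {k: v for k, v in outputs_by_instruction.items() if len(v) > 1}
    return unique, conflicts


pairs = [
    ("Classify: invoice overdue", "billing"),
    ("classify:  invoice overdue", "billing"),     # duplicate after normalization
    ("Classify: invoice overdue", "collections"),  # conflicting label
    ("Classify: VPN down", "outage"),
]
unique, conflicts = audit_pairs(pairs)
print(len(unique))                                      # 3
print(sorted(conflicts["classify: invoice overdue"]))   # ['billing', 'collections']
```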

Budget not just for the tuning run, but for:

- Dataset creation and review

- Edge-case collection

- Regression suites tied to your model evaluation criteria

- Monitoring and retraining cadence decisions

RAG cost drivers (content, governance, and retrieval quality)

RAG’s main cost driver is not embeddings. It’s content readiness and governance. “Dump SharePoint into a vector DB” fails because the organization hasn’t agreed on what’s canonical, who owns updates, and what to do with contradictory documents.

Expect work in:

- Document curation and lifecycle management

- Indexing strategy and metadata taxonomy

- Access control and audit logs (data governance)

- Retrieval testing (finding failures before users do)

- Citation UX that makes trust usable
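Retrieval testing can start with a small labeled set: for each realistic question, record which document should come back, then measure how often it appears in the top k results. With one relevant document per query, this hit rate is the same as recall@k. The sketch below assumes you already have a `search` function returning ranked document IDs; the stub index and labeled queries are hypothetical.

```python
from typing import Callable


def hit_rate_at_k(
    labeled: dict[str, str],
    search: Callable[[str], list[str]],
    k: int = 5,
) -> tuple[float, list[str]]:
    """Fraction of queries whose expected doc ID appears in the top-k results.

    Also returns the failing queries, which are the ones worth inspecting first.
    """
    if not labeled:
        raise ValueError("need at least one labeled query")
    failures = [q for q, expected in labeled.items() if expected not in search(q)[:k]]
    return 1 - len(failures) / len(labeled), failures


# Hypothetical stub standing in for the real retriever.
FAKE_INDEX = {
    "how do i reset the vpn": ["it-vpn-runbook", "it-sso-faq"],
    "client dinner limit": ["travel-2.3", "expense-4.2"],
    "laptop refresh policy": ["it-sso-faq"],
}


def fake_search(query: str) -> list[str]:
    return FAKE_INDEX.get(query, [])


labeled_queries = {
    "how do i reset the vpn": "it-vpn-runbook",
    "client dinner limit": "expense-4.2",
    "laptop refresh policy": "it-hardware-policy",
}
score, failures = hit_rate_at_k(labeled_queries, fake_search, k=2)
print(f"hit rate@2: {score:.2f}")  # hit rate@2: 0.67
print(failures)                    # ['laptop refresh policy']
```

Tracking the failure list over time, not just the score, is what turns this into “finding failures before users do.”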

Done well, RAG pipelines are a compounding asset: every improvement to content quality and retrieval improves all downstream assistants.

Vendor and partner evaluation: how to spot real model-training expertise

The market for “custom AI language model services” is noisy, and language makes it worse. Many vendors sell AI language model training as a default deliverable because it sounds like ownership. But the real differentiator is whether they can help you ship and operate a system that stays correct over time.

If you’re considering AI language model consulting for enterprise model adaptation, start with measurement and operations. Then evaluate architecture choices.

Ask for the eval plan before the architecture diagram

A credible partner leads with how you’ll measure success, not with which model they like. They should define success metrics, test sets, and failure conditions before they show you a diagram.

Here are vendor questions that separate demos from delivery:

- What are the SLOs (latency, cost, uptime) for production?

- What are the model evaluation criteria and how will we regress-test changes?

- How do you evaluate groundedness and citation correctness in RAG?

- What data leaves our environment, and what’s logged/retained?

- How do you handle PII/PHI redaction and access control?

- Do you have a red-teaming plan and abuse monitoring?

- What is the rollback strategy (prompts, adapters, model versions)?

- Who owns on-call and incident response after launch?

- How do you monitor retrieval failures and model drift?

- What is the escalation path to humans for low-confidence cases?

Red flag: a vendor shows impressive outputs but can’t explain how they’ll measure and maintain them in production.

For a deeper view on retrieval evaluation as an IR problem (which is what RAG depends on), the BEIR benchmark paper is a useful conceptual anchor.

Demand an adaptation-first recommendation (and justification)

A good partner explicitly justifies why prompts, RAG, and fine-tuning aren’t enough before proposing pre-training. They should tell an economic story: cost, impact, risk, and timeline.

What good looks like: “Given your requirement for traceable answers and weekly policy updates, we recommend RAG-first with citations, plus a small fine-tune for schema adherence. Pre-training won’t improve auditability and would add multi-year fixed costs.”

What bad looks like: “We’ll build you a custom model” with no discussion of evaluation, governance, or change rate of knowledge.

Operational readiness: MLOps and governance are the differentiator

Enterprises rarely fail because the first demo was weak. They fail because nobody owns the system after launch. Look for LLM-focused MLOps capabilities that resemble mature software operations:

- Model and prompt registry

- Prompt versioning and approvals

- Retrieval monitoring and index lifecycle management

- Data retention controls and access logs

- Runbooks for incident response and escalation

Example incident workflow: a user reports an unsafe answer. The team traces the prompt version, retrieved sources, and model version; reproduces the issue; patches the retrieval allowlist or validator; and rolls back the adapter version if needed. This is operational maturity—not “training.”
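That workflow depends on every response being traceable. A minimal sketch of a per-request trace record, with hypothetical field names and version strings: the prompt version, model and adapter versions, and retrieved source IDs are logged together, so an incident can be reproduced exactly. The record stores IDs rather than raw user text, since whatever you log must itself follow your retention and redaction rules.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class RequestTrace:
    """One log record per model response; field names are illustrative."""

    prompt_version: str
    model_version: str
    adapter_version: Optional[str]  # None when serving the base model without an adapter
    retrieved_source_ids: list[str]
    validator_results: dict[str, bool]
    escalated: bool
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


trace = RequestTrace(
    prompt_version="policy-qa@v14",
    model_version="base-llm-2026-01",
    adapter_version="claims-v2",
    retrieved_source_ids=["expense-4.2", "expense-6.1"],
    validator_results={"schema": True, "pii_redaction": True},
    escalated=False,
)
print(json.dumps(asdict(trace), indent=2))
```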

Conclusion

Enterprises talk about AI language model training as if it’s a single decision. In reality, it’s a ladder of commitments. Most of the time, the highest ROI comes from adaptation: prompts for speed, retrieval-augmented generation for knowledge and auditability, and fine-tuning for consistent behavior.

Pre-training is real, but rare. It only makes sense when you have massive unique data, hard constraints, and the maturity to run a multi-year program. For everyone else, the real cost—and the real advantage—lives in evaluation, governance, and operations.

In 2026, winning with language models isn’t about owning weights. It’s about owning a measurable, governable capability that keeps getting better.

If you’re evaluating AI language model training for your organization, start with a capability assessment. We’ll map your requirements to the lowest-cost approach that meets your accuracy, privacy, and latency targets—then ship a pilot you can measure. Get started with our AI discovery and capability assessment.

FAQ

What is AI language model training in an enterprise context?

In an enterprise context, AI language model training usually means making a foundation model useful and safe inside your business, not necessarily building a new model from scratch. That includes prompt engineering, tool use, retrieval-augmented generation, and sometimes fine-tuning. The enterprise requirement is less “make it smart” and more “make it reliable, auditable, and governable in production.”

What’s the difference between pre-training a language model and fine-tuning one?

Pre-training builds general capability by learning from huge corpora and requires substantial compute, data pipelines, and safety work. Fine-tuning adapts an existing foundation model to behave more consistently for your tasks, style, or schema constraints. Practically, pre-training is a research program, while fine-tuning is an adaptation technique—still demanding good data and strong evaluation.

When is it worth pre-training a language model from scratch for my business?

It’s worth considering only if you have a unique data advantage at massive scale, strong sovereignty requirements, or niche language coverage that existing models can’t support. You also need organizational readiness: GPUs, research talent, and a multi-year roadmap. If your primary pain point is correctness on internal policies or fast-changing knowledge, RAG is usually a better first move than pre-training.

How do I choose between prompt engineering, RAG, and fine-tuning?

Start with prompts when tasks are clear and you can validate outputs. Add RAG when accuracy depends on internal knowledge, policies, or documents that must be cited and updated frequently. Use fine-tuning when you need consistent behavior—tone, schema adherence, or reliable tool calling—that prompts can’t deliver, ideally with PEFT/LoRA so you can iterate and roll back safely.

Can RAG replace training a custom language model for knowledge-heavy use cases?

Often, yes. RAG can deliver better factual freshness and auditability than training a custom language model because it grounds answers in retrieved, approved sources at runtime. It also reduces the risk of encoding sensitive content into model weights, since the knowledge stays in governed storage. The trade-off is that you must invest in content hygiene, access control, and retrieval evaluation.

What are the real costs and timelines of pre-training vs fine-tuning vs RAG?

Pre-training has large fixed costs (compute, data licensing/cleaning, safety, evaluation) and typically implies a long timeline with research uncertainty. Fine-tuning can be fast, but the real cost is high-quality examples and an evaluation harness to avoid regressions. RAG often ships quickly, but the durable cost is document curation, governance, and retrieval quality—especially if you want reliable citations in production.

How do data privacy and governance requirements change my LLM strategy?

They influence where data can flow, what can be logged, and what must remain private (including embeddings and retrieved snippets). In many regulated environments, RAG with strict access control reduces risk versus putting sensitive data into tuning sets. If you’re unsure what your constraints imply architecturally, start with a capability assessment like Buzzi.ai’s AI discovery and capability assessment to translate governance into system design.

What metrics should we use to evaluate RAG and fine-tuned models in production?

For RAG, prioritize groundedness (does the answer rely on retrieved sources?), citation correctness, retrieval success rate, and “answerability” thresholds that trigger refusal or escalation. For fine-tuned models, measure schema adherence, tool-call accuracy, and regression performance on a stable test suite. In production, add operational metrics like latency, cost per request, user corrections, and incident rates.

What’s the safest architecture to combine RAG with fine-tuning and tool use?

A strong pattern is “tuned core + RAG edge”: fine-tune for structure, tone, and tool calling; use RAG for policy/fact grounding; and add validators plus refusal/escalation gates. This reduces hallucinations while preserving freshness when documents change. Safety also comes from operational controls: versioning, monitoring retrieval failures, and having rollback playbooks.

How do we evaluate vendors offering custom AI language model training services?

Ask for the evaluation plan before the demo: what are the success metrics, test sets, failure definitions, and rollback strategy? Make them justify why adaptation (prompts/RAG/fine-tuning) isn’t sufficient before proposing pre-training. Finally, assess operational readiness—monitoring, governance, incident response—because that’s what determines whether your assistant survives contact with real users.