Choose an AI Development Company in the USA Built for Compliance

Choosing an AI development company in the USA is a compliance decision, not a geography one. Learn how to vet US AI vendors for real regulatory maturity.

Searching for an AI development company in the USA used to be a procurement task. Now it’s a board-level regulatory compliance decision.

If your AI vendor gets privacy, security, or fairness wrong, it’s not their logo on the subpoena. It’s yours. State privacy regulators, the FTC, sector regulators, and plaintiffs’ lawyers won’t care which US AI vendor wrote the code; they’ll care about the harm, the data misuse, and the missing documentation.

That’s the uncomfortable reality: two vendors can both market “enterprise AI development” in the US, yet only one is truly prepared for CPRA/CCPA, the Colorado Privacy Act, HIPAA, banking guidance, and emerging state AI laws. From the outside, they look similar. From a regulator’s perspective, they’re worlds apart.

In this article, we’ll reframe how you evaluate an AI development company in the USA: not as a local tech shop, but as a joint risk owner. We’ll break down the US regulatory landscape that actually matters, show what compliance-aware AI architecture looks like, and give you a concrete vendor checklist you can paste straight into an RFP.

We’ll also share how we at Buzzi.ai approach regulation-first AI delivery for risk-conscious US enterprises—not as a hard sell, but as a working example of what “compliance-by-design” actually looks like in practice.

Stop Treating “AI Development Company USA” as a Geography Choice

Why location alone won’t save you from US regulators

There’s a comforting myth in enterprise buying: if the vendor is US-based, the compliance risk is lower by default. For AI, that’s dangerously incomplete. A US ZIP code does not guarantee alignment with CPRA, the Colorado Privacy Act, HIPAA, or any other sector rule.

Regulators have been clear. The FTC has warned repeatedly that using AI or algorithms in unfair or deceptive ways will be treated like any other unfair or deceptive practice, regardless of where the underlying technology was built. The California Attorney General has already brought cases on privacy and dark patterns against companies using ostensibly “normal” tech stacks, not shady offshore tools.

In these actions, regulators didn’t ask, “Was this model built in San Francisco or Bangalore?” They asked: Did users understand how their data was being used? Were their rights respected? Were there undisclosed dark patterns, discriminatory outcomes, or data-sharing practices that violated law or promises?

This is the key shift: when you pick a US AI vendor, you’re not buying geography. You’re buying a set of design decisions about data flows, logging, retention, and explainability. Those decisions either make regulator conversations boring or turn them into front-page stories.

Liability is shared. If your AI system mishandles data or produces discriminatory outcomes, you are on the hook, no matter which AI development company wrote the code. You cannot outsource accountability, only execution.

The real decision: compliance maturity, not ZIP code

So what are you really choosing when you pick an AI partner? You’re choosing their compliance maturity: how well their processes, documentation, and governance align with the regulatory world you live in.

A marketing-led vendor sells “Silicon Valley-grade innovation,” glossy demos, and vague promises of “trustworthy AI.” A regulation-first partner behaves more like a hybrid of an AI consulting firm and a GRC function: they design for audits from day one, treat logs and documentation as deliverables, and assume that legal and compliance will eventually read everything.

In a mature partner, governance, risk, and compliance (GRC) isn’t an afterthought. GRC, security, and legal are first-class citizens in project planning, not people you loop in the night before go-live. That mindset directly shapes your architecture: how user data flows, which fields are stored, what’s encrypted, how model decisions are recorded.

Imagine two vendors pitching the same customer-scoring AI. Vendor A leans on “move fast” narratives, downplays risk, and promises they can hook into your data in a week. Vendor B starts with use-case scoping, maps data categories, flags CPRA-sensitive attributes, and proposes approval checkpoints. Both are “US-based.” Only one is building something you’d want to explain to a regulator.

US Regulatory Landscape: What Your AI Partner Actually Needs to Understand

The US doesn’t have a single “AI law” yet, but you already operate under a dense web of federal guidance that acts as a proxy for AI regulation, state privacy laws, and sector rules. Any serious AI development company serving regulated industries in the USA must navigate this landscape as fluently as it navigates model architectures.

Federal expectations: security, fairness, and accountability

At the federal level, regulators are sending consistent signals about algorithmic accountability and responsible machine learning. The FTC’s business guidance on AI and algorithms makes clear that unfair or discriminatory outcomes, opaque practices, and poor data security can all be treated as unfair or deceptive. That applies even if the algorithm is a black-box model supplied by a vendor.

The FTC’s AI guidance emphasizes transparency, data quality, and ongoing monitoring. In parallel, the NIST AI Risk Management Framework provides a de facto playbook for how organizations should think about AI risks across design, development, deployment, and operation.

Sector regulators add their own expectations. Banking regulators (OCC, FDIC, Federal Reserve) lean on model risk management principles akin to SR 11-7: documented model governance, validation, and oversight. Consumer finance regulators like the CFPB focus on fair lending and discrimination. HHS, through its Office for Civil Rights (OCR), focuses on the privacy and security of PHI in health contexts.

For an enterprise-ready partner, these aren’t academic references. They translate into specific design choices: strong access controls, encryption, explainability hooks, and processes for documenting model limitations and monitoring drift. If a potential partner can’t speak concretely about NIST-style risk controls or FTC expectations, they are not yet the AI development partner a regulated business needs.

State privacy laws that bite: CPRA, CCPA, Colorado and beyond

The state layer is where your AI design choices meet hard legal requirements. California’s CCPA and its upgrade, the California Privacy Rights Act (CPRA), have reshaped expectations for CPRA and CCPA compliance: data minimization, purpose limitation, honoring consumer rights, and heightened treatment of sensitive data.

For AI, that means you can’t simply hoover up data “just in case” it’s useful for training. You need clear purposes, retention limits, and the ability to respond to access, deletion, and opt-out requests—even when decisions are made by models, not humans. High-risk profiling or automated decision-making may trigger data protection impact assessment-style documentation, even if not labeled as such.

The Colorado Privacy Act raises the bar further, explicitly calling out profiling and requiring Data Protection Assessments for certain high-risk processing. Other states are adopting similar privacy and AI laws, and more are coming.

Take a customer scoring or risk-rating model. Under CPRA and the Colorado Privacy Act, you may need to document why each data element is necessary, how you mitigate discriminatory outcomes, what opt-outs exist, and how individuals can exercise their rights. An AI partner that can’t explain how their architecture will support this is not ready for your world.

Industry-specific mandates: financial services and healthcare

Regulated industries have even less margin for error. A US AI development company serving financial services must treat model governance as non-negotiable. Banking guidance on model risk management expects clear model inventories, validation, challenge, and controls around use and change.

For a credit risk model, this means documenting data sources, feature engineering, validation results, and fair lending analysis. When examiners ask why a model made a given decision, you need traceability and explainability—not just “the AI said so.” The same applies to fraud models, pricing models, and any system affecting consumer rights or financial soundness.

Healthcare raises its own bar. Any AI handling PHI (e.g., a clinical decision support chatbot triaging symptoms) must operate within HIPAA’s Privacy and Security Rules. That includes secure pipelines, restricted access, logging, and often a BAA with an AI software development firm that actually understands HIPAA-compliant AI design.

The best AI development company for these industries will be able to talk, in concrete terms, about HIPAA-compliant hosting, granular access controls, encryption, logging, and the way BAAs and security policies shape their reference architectures.

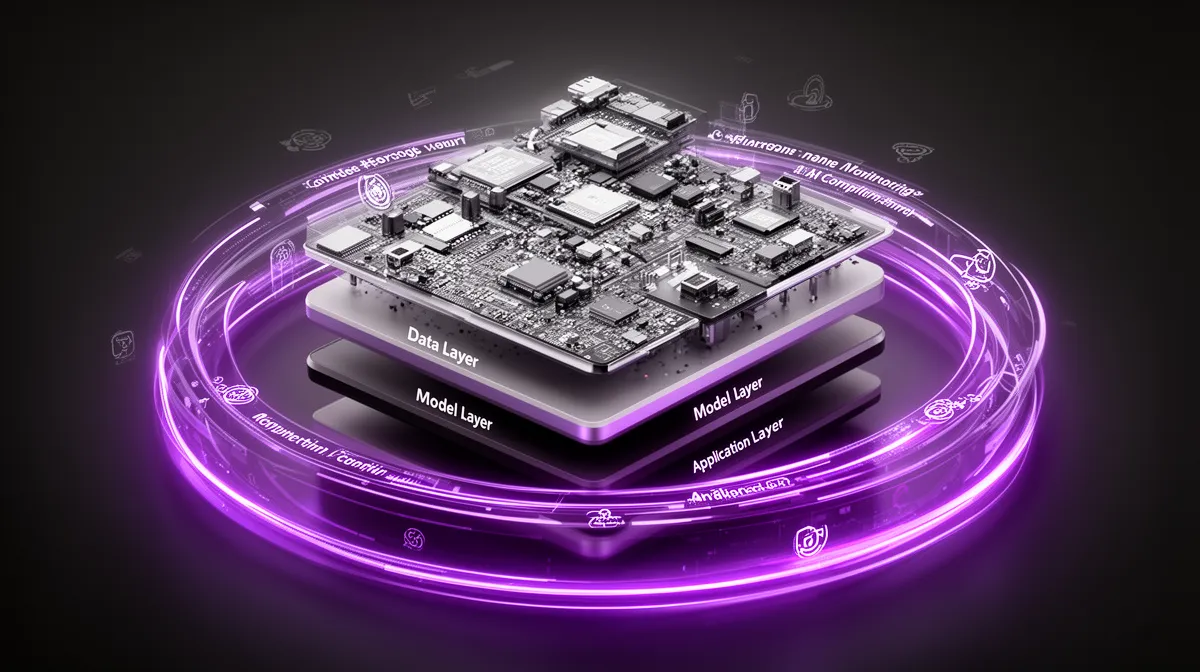

What Compliance-Aware AI Architecture Looks Like in Practice

Compliance isn’t a legal PDF you attach at the end. It’s an architectural property. In mature enterprise AI development, you can see regulatory thinking encoded in data flows, configurations, and monitoring dashboards.

From generic pipelines to governed data flows

Many AI prototypes start life as “quick win” pipelines: dump data into a lake, run feature engineering, train a model, deploy an API. It works for demos, but it’s a compliance nightmare. Personal and sensitive data are often mixed, retention is undefined, and nobody can cleanly answer “where does this field go?”

A compliance-aware pipeline looks different. The first step is data classification: is this personal data, sensitive data, PHI, payment data? What jurisdictional rules apply? Then come minimization and retention controls: stripping unneeded attributes, masking where possible, and defining how long specific tables are kept.
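To make the classification and minimization step concrete, here is a minimal sketch in Python. The field catalog, the `churn_scoring` purpose, and the function name are all hypothetical; in a real project the catalog would come from your data inventory and the approved purposes from legal and compliance.

```python
from enum import Enum


class DataClass(Enum):
    INTERNAL = "internal"
    PERSONAL = "personal"
    SENSITIVE = "sensitive"
    PHI = "phi"


# Hypothetical field catalog: every field must be classified before use.
FIELD_CATALOG = {
    "customer_id": DataClass.PERSONAL,
    "email": DataClass.PERSONAL,
    "zip_code": DataClass.PERSONAL,
    "diagnosis_code": DataClass.PHI,
    "ethnicity": DataClass.SENSITIVE,
    "purchase_total": DataClass.INTERNAL,
}

# Hypothetical purpose allowlist: which fields each declared purpose may use.
PURPOSE_ALLOWLIST = {
    "churn_scoring": {"customer_id", "zip_code", "purchase_total"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only classified fields that are approved for the given purpose."""
    unknown = set(record) - set(FIELD_CATALOG)
    if unknown:
        # Unclassified fields are rejected rather than silently passed through.
        raise ValueError(f"unclassified fields: {sorted(unknown)}")
    allowed = PURPOSE_ALLOWLIST.get(purpose, set())
    return {name: value for name, value in record.items() if name in allowed}
```

The design choice worth copying is the failure mode: a field nobody has classified stops the pipeline instead of flowing into training data by default.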

For many US enterprises, data residency guarantees are critical. You may have contractual or regulatory commitments that training and inference data stay in the US, or even in specific regions. A competent AI development company will architect storage, compute, and logging to respect those constraints end to end.

Finally, logging, access control, and encryption are defaults, not add-ons. Every access to sensitive data is logged. Role-based access control restricts who can view production data and who can deploy models. Encryption at rest and in transit is standard. That’s how enterprise AI development services with data residency guarantees earn their keep.
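One simple way to back a residency commitment with a technical check is to compare a resource inventory against an approved region list. The sketch below assumes a hypothetical inventory format (a list of dicts with `name` and `region` keys) and example region names; how you export that inventory depends on your cloud provider.

```python
# Assumption: example region identifiers; use the ones named in your contract.
ALLOWED_REGIONS = {"us-east-1", "us-west-2"}


def residency_violations(resources: list[dict]) -> list[str]:
    """Return names of resources whose region is missing or not approved."""
    return [
        resource["name"]
        for resource in resources
        if resource.get("region") not in ALLOWED_REGIONS
    ]


# Hypothetical inventory covering storage, logs, and backups.
inventory = [
    {"name": "training-bucket", "region": "us-east-1"},
    {"name": "log-sink", "region": "eu-west-1"},
    {"name": "backup-vault"},  # region unknown, so it is treated as a violation
]
```

Running a check like this on a schedule, and covering logs and backups as well as primary storage, is what turns a contractual promise into something you can evidence.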

Consider a recommendation engine prototype built on raw customer clickstream and purchase data. In its early form, everything is dumped into one big table, with no clear retention policy and ad hoc access. To productionize this under CPRA and banking expectations, a mature partner will separate identifiers, tokenize where possible, lock down access by role, design explicit retention windows, and ensure logs capture every access and transformation.
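As an illustration of two of those steps, the sketch below tokenizes identifiers with a keyed hash and checks rows against explicit retention windows. The table names and retention periods are hypothetical, and the key would live in a managed secret store rather than in code. Note that keyed tokens are pseudonymous, not anonymous, so they usually still count as personal data.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def tokenize(identifier: str, secret_key: bytes) -> str:
    """Deterministic keyed token: joins still work without exposing the raw ID."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical retention windows, defined per table rather than per project.
RETENTION = {
    "clickstream": timedelta(days=90),
    "purchases": timedelta(days=730),
}


def is_expired(table: str, created_at: datetime, now: datetime) -> bool:
    """True when a row has outlived the retention window for its table."""
    return now - created_at > RETENTION[table]


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
old_click = datetime(2025, 1, 1, tzinfo=timezone.utc)
```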

Model governance, audit trails, and explainability

By 2026, a regulator or internal auditor investigating your AI won’t be impressed by “we used a reputable framework.” They’ll want a model governance picture: where the model came from, how it was trained, who approved it, and how it has behaved over time.

An acceptable audit trail includes at least: data sources and versions; feature engineering steps; training configurations and hyperparameters; experiment and training run IDs; model versions; validation results; approvals with timestamps and owners; deployment history; and change logs. Top US AI vendors are already building this into their MLOps practices.
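The list above maps naturally onto a structured record. The following is a rough sketch of what one entry might look like, not a standard schema: the class and field names are our own, and a real MLOps stack would typically store this in a model registry.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Approval:
    owner: str
    role: str
    approved_at: str  # ISO 8601 timestamp


@dataclass(frozen=True)
class ModelAuditRecord:
    model_name: str
    model_version: str
    training_run_id: str
    data_sources: dict        # source name -> dataset version
    feature_steps: tuple      # ordered feature engineering steps
    hyperparameters: dict
    validation_results: dict
    approvals: tuple = ()     # Approval entries

    def fingerprint(self) -> str:
        """Stable hash of the record, useful for detecting later edits."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


# Hypothetical example values.
record = ModelAuditRecord(
    model_name="credit_risk",
    model_version="1.2.0",
    training_run_id="run-001",
    data_sources={"loans": "v7"},
    feature_steps=("impute_missing", "scale_numeric"),
    hyperparameters={"max_depth": 4},
    validation_results={"auc": 0.81},
    approvals=(Approval("j.doe", "model risk", "2025-01-15T10:00:00+00:00"),),
)
```

Storing the fingerprint alongside the deployment history gives an auditor a cheap way to confirm the record they are reading is the one that was approved.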

Operationally, you need logging of model inputs and outputs (appropriately pseudonymized), monitoring for drift and anomalies, and the ability to trace a contested decision back to the model version and dataset that produced it. This is what regulators and risk committees increasingly expect under the umbrella of algorithmic accountability.
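Tracing a contested decision is mostly a matter of recording the right identifiers at inference time. Here is a minimal in-memory sketch; the class names are hypothetical, and a production system would write to durable, append-only storage instead of a dict.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionEvent:
    decision_id: str
    subject_token: str    # pseudonymized subject identifier, never the raw ID
    model_version: str
    dataset_version: str
    inputs: dict
    output: float


class DecisionLog:
    """Records each model decision with the versions that produced it."""

    def __init__(self) -> None:
        self._events: dict[str, DecisionEvent] = {}

    def record(self, subject_token: str, model_version: str,
               dataset_version: str, inputs: dict, output: float) -> str:
        decision_id = str(uuid.uuid4())
        self._events[decision_id] = DecisionEvent(
            decision_id, subject_token, model_version,
            dataset_version, dict(inputs), output,
        )
        return decision_id

    def trace(self, decision_id: str) -> DecisionEvent:
        """Look up the model and dataset versions behind one decision."""
        return self._events[decision_id]
```

Given a decision ID from a complaint or examiner request, `trace` returns the model version and dataset version to pull from the audit trail.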

Explainability ties in directly. Techniques like feature importance, partial dependence, and example-based explanations don’t just help data scientists; they help you answer regulators, auditors, and customers. If your partner can’t show you how their architecture supports explainability, they’re not a credible candidate for work that demands a model audit trail and bias testing.

Bias testing and fairness controls as non-negotiables

Bias is where ethics, law, and math collide. For many high-stakes use cases—hiring, lending, insurance, healthcare triage—US regulators and courts will treat discriminatory outcomes as serious issues, regardless of whether the model “intended” them.

Mature responsible machine learning practices include bias testing as standard. Where lawful and feasible, you segment performance metrics by protected or proxy attributes (e.g., gender, race, age bands) and compare false positive/negative rates, approval rates, and error distributions. You run adverse impact analyses similar to those used in employment and fair lending.
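The comparisons described above can be computed with very little code. The sketch below uses plain Python and hypothetical group labels; it calculates per-group selection rates, the adverse impact ratio (lowest rate divided by highest), and per-group false positive rates. In US employment analysis, a ratio below 0.8 (the "four-fifths" rule of thumb) is a common trigger for closer review, though the right threshold for your use case is a legal question, not a coding one.

```python
from collections import defaultdict


def selection_rates(groups, approved):
    """Share of positive outcomes (e.g., approvals) per group."""
    totals, hits = defaultdict(int), defaultdict(int)
    for group, outcome in zip(groups, approved):
        totals[group] += 1
        hits[group] += int(outcome)
    return {group: hits[group] / totals[group] for group in totals}


def adverse_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else float("nan")


def false_positive_rates(groups, y_true, y_pred):
    """Per-group share of true negatives that the model flagged as positive."""
    negatives, false_pos = defaultdict(int), defaultdict(int)
    for group, truth, pred in zip(groups, y_true, y_pred):
        if truth == 0:
            negatives[group] += 1
            false_pos[group] += int(pred == 1)
    return {group: false_pos[group] / negatives[group] for group in negatives}


# Hypothetical toy data: two groups of four applicants each.
groups = ["a"] * 4 + ["b"] * 4
approved = [1, 1, 1, 0, 1, 0, 0, 0]
rates = selection_rates(groups, approved)
ratio = adverse_impact_ratio(rates)  # 0.25 / 0.75, well below 0.8
```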

When gaps are found, you document remediation: reweighting, alternative modeling approaches, feature reviews, or constrained optimization. And you keep records. If a regulator asks, “What have you done to ensure fairness?”, you can show test methodologies, results, and change histories.

Imagine a candidate-screening chatbot used in hiring. Initial tests show that candidates from certain schools advance at significantly different rates than peers with similar experience. A mature partner identifies this during bias testing, iterates on features and thresholds, and documents the process. That’s the standard you want from the AI development company you trust with your brand.

External research backs this up: many academic and industry papers on algorithmic fairness now outline practical testing regimes and metrics. Your vendor should be fluent in this body of work, not reinventing fairness from scratch.

How Offshore or Low-Maturity AI Vendors Increase US Regulatory Risk

Hidden data access and data residency exposure

Offshore engineering is not inherently bad. But hidden offshore access is a major problem for US enterprises with strict data residency or regulatory commitments. Many nominally “US-based” vendors quietly rely on offshore teams for development, monitoring, or support—often with broad access to production data.

For CPRA/CCPA, this can complicate onward transfer obligations and security representations. For banks and HIPAA-covered entities, it can directly conflict with contractual promises or examiner expectations. The risk isn’t offshore talent; it’s offshore access to live or identifiable customer data without clear controls.

A regulation-aware AI development company will be transparent about locations, subcontractors, and access controls. They’ll be able to show you exactly which roles can see which data, from which countries, under what technical and contractual safeguards. That’s the minimum bar for responsible AI outsourcing and risk management.

Governance shortcuts that come back as fines and headlines

The other risk vector is low governance maturity. Many vendors can produce working models, but without data protection impact assessments (DPIAs), documented model approvals, robust logging, or clear incident response processes. Everything looks fine until a regulator or plaintiff asks, “Show us your documentation.”

Red flags include: no formal data protection impact assessment process for high-risk use cases; model deployment based solely on data scientist judgment; sparse or inconsistent logs; and weak or undefined processes for handling data subject access requests (DSARs) that involve model outputs. When these gaps surface under pressure, companies often have no choice but to pause systems while they scramble to reconstruct history.

By contrast, compliance-focused AI development services in the United States use governance checklists and sign-offs as standard practice. Every material model has a paper trail, a risk assessment, and an owner. That’s what gives your GC and CISO the confidence to support ambitious AI rollouts without fearing unmanageable surprises.

Vendor Evaluation Checklist: Questions to Expose Real Compliance Maturity

Now to the practical part: what questions to ask a US AI development company about compliance. You can’t rely on marketing decks. You need pointed questions that separate true regulatory maturity from buzzword theater.

Data handling, residency, and privacy rights

If you want to know how to choose an AI development company in the USA for CPRA compliance, start with data flows. Where is data stored? Where is it processed? Who can access it, and from where? How are backups, logs, and analytics environments handled?

You also need to check how the architecture will support privacy rights: access, deletion, correction, and opt-out. For AI, this includes being able to locate and, where feasible, remove an individual’s data from training sets or at least ensure it no longer meaningfully affects outcomes going forward.
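One way to make deletion requests tractable in AI workflows is a suppression list keyed on pseudonymized subject tokens. The sketch below is a simplified illustration with hypothetical names: it filters suppressed subjects out of future training sets and flags which existing model versions were trained on their data and may need review or retraining.

```python
def filter_training_rows(rows: list[dict], suppressed: set[str]) -> list[dict]:
    """Drop rows belonging to subjects who have requested deletion."""
    return [row for row in rows if row["subject_token"] not in suppressed]


def models_needing_review(lineage: dict[str, set[str]], suppressed: set[str]) -> list[str]:
    """Model versions whose training data included any suppressed subject."""
    return sorted(
        version for version, subjects in lineage.items() if subjects & suppressed
    )


# Hypothetical data: tokens stand in for pseudonymized customer IDs.
rows = [
    {"subject_token": "tok-1", "spend": 120.0},
    {"subject_token": "tok-2", "spend": 75.5},
    {"subject_token": "tok-3", "spend": 310.0},
]
lineage = {"scoring-1.0": {"tok-1", "tok-2"}, "scoring-1.1": {"tok-3"}}
suppressed = {"tok-2"}
```

This only works if the vendor records training lineage per model version in the first place, which is a useful thing to ask about.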

Here’s a mini-checklist you can paste directly into an RFP for enterprise AI development services with data residency guarantees:

- Describe in detail all locations (countries, regions) where my data will be stored, processed, or accessed—including by subcontractors.

- Explain your approach to data residency. Can you commit contractually that certain data will never leave the US, and how do you enforce this technically?

- How does your architecture support CPRA/CCPA rights (access, deletion, correction, opt-out) in AI workflows? Give concrete examples.

- For high-risk automated decision-making, do you perform and document data protection impact assessments or similar risk assessments? Can you share a sanitized example?

- What is your retention policy for raw data, features, model inputs/outputs, and logs, and how is it enforced?

Security, SOC 2, HIPAA, and sector requirements

Security credentials are easy to put in a slide and harder to implement rigorously. When a vendor claims to be an AI development company with SOC 2 and HIPAA compliance, you need to probe how deep that competence really goes.

For SOC 2, ask what type of report they have (Type I vs Type II), which Trust Services Criteria are covered, and whether your specific AI workloads will fall under the scope of those controls. For HIPAA, ask whether they sign BAAs, how PHI is segregated, and how audit logs are managed.

Consider including questions like:

- Do you have a current SOC 2 Type II report? Which Trust Services Criteria are covered, and can you summarize any relevant findings?

- For HIPAA-compliant AI workloads, will you sign a BAA? How do you ensure PHI is handled separately from non-PHI data in your pipelines?

- How do SOC 2 and HIPAA controls influence your reference architectures for logging, access control, and encryption?

- Describe your key management approach and incident response SLAs for AI systems handling regulated data.

- For a healthcare use case subject to HIPAA, can you walk us through a recent (anonymized) architecture you deployed?

These questions quickly distinguish a real SOC 2 attestation and regulatory rigor from checkbox compliance.

Model governance, bias, and lifecycle management

Next, drill into model governance, bias testing, and lifecycle management. This is where you find out who will own the model after go-live—and whether your vendor is prepared for regulators demanding a model audit trail.

For vendors claiming to be among the top US AI vendors for model audit trails and bias testing, ask:

- Describe your model approval workflow. Who signs off, based on what criteria, and how is this documented?

- How do you version models and datasets? Can we roll back quickly if we detect an issue in production?

- What does your standard model audit trail include (e.g., data sources, features, training runs, validation results, approvals, deployment history)?

- Describe your bias testing methodology. Which metrics do you use, how often do you test, and can you share a sample (redacted) bias report?

- Post go-live, how do you monitor models for drift and degradation? What triggers revalidation or reapproval?

The answers will tell you whether ongoing monitoring is built into their service, or whether they plan to disappear after launch.
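When a vendor describes drift monitoring, it helps to know what a basic check looks like. Below is a minimal sketch of the Population Stability Index (PSI), one widely used drift measure, in plain Python. The bin count and the floor value are our own assumptions, and the alert threshold (values around 0.1 to 0.25 are often quoted as rules of thumb) should be set with your model risk team.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a current sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Values outside the baseline range fall into the edge bins.
            counts[min(max(index, 0), bins - 1)] += 1
        # A small floor avoids taking log(0) for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    base, current = shares(expected), shares(actual)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, current))


# Hypothetical score samples: the current sample has shifted upward.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [0.5 + i / 200 for i in range(100)]
```

A PSI of zero means the two samples fall into the bins identically; larger values mean the production population looks less like the one the model was validated on, which is the kind of signal that should trigger revalidation.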

Team structure, onshore/offshore mix, and contracting

Finally, understand who will actually touch your systems and data. This is where you evaluate whether you’re truly getting an AI development partner with California CPRA/CCPA expertise, or a thin US front end with opaque offshore execution.

Key questions include:

- Where are all members of the delivery team located (by country), and which roles will have access to production or training data?

- Do you use subcontractors? If so, how are they disclosed, bound contractually, and restricted technically?

- In our data processing agreement, can we specify data residency, retention, and deletion obligations—and do you have standard clauses for this?

- Who owns trained models and derived features? Under what conditions can you reuse components trained on our data?

- What audit rights do we have over your controls relevant to our engagement?

Good answers here not only protect you; they make conversations with your board, auditors, and regulators much more straightforward. Benchmark multiple US AI vendors using the same checklist and you’ll quickly see who has built real AI outsourcing discipline and who hasn’t.

Inside a Regulation‑First AI Development Partner (and How Buzzi.ai Works)

Compliance-by-design from discovery to deployment

So what does a regulation-first partner actually look like day to day? At Buzzi.ai, we treat governance, risk, and compliance as the backbone of every project, not a separate workstream. That starts with discovery.

In a typical engagement—say a bank wanting a smarter underwriting assistant or a healthcare provider exploring a triage chatbot—we begin with regulatory scoping, data mapping, and risk classification. Before we talk models, we map use cases against CPRA/CCPA, sector rules, and internal policies. This is where our AI discovery and regulatory scoping services come in.

We then build DPIA/PIA-style assessments and governance checkpoints into the project plan itself. Each stage—data ingestion, feature engineering, training, deployment—has explicit review points for privacy, security, and fairness. This keeps risk, compliance, and security leaders in the loop without forcing them to micromanage every sprint.

The result is that by the time we deploy, the architecture, logs, and documentation are already designed to withstand internal and external scrutiny. That’s how enterprise AI development can move quickly without cutting corners.

Concrete practices that reduce fines, shutdowns, and reputational damage

Regulation-first isn’t a slogan; it’s a set of practices. In our work as a compliance-focused AI solutions provider, we emphasize: data residency guarantees where required, detailed model and data audit trails, standardized bias reports, and regulator-ready documentation packages that clients can share with boards or examiners.

For example, in a financial services project, we helped a client redesign an internal decision-support system that had grown organically. We classified data, introduced stricter access controls, documented model assumptions, and implemented monitoring and bias checks. When an internal audit review came three months later, the client could present a coherent model inventory and governance story instead of scrambling.

This is the core advantage of working with compliance-focused AI development services in the United States: you ship AI products that are ready for real-world scrutiny. For heavily regulated sectors, that’s what makes a partner a candidate for the best AI development company in the USA for regulated industries: not who has the flashiest demo, but who keeps you off the front page for the wrong reasons.

If you want to see how this would look for your specific environment, we can map your regulatory landscape and redesign your AI roadmap around it—combining strategy, architecture, and, where relevant, enterprise AI development and agent implementation.

Conclusion: Treat AI Vendor Choice as a Regulatory Decision

The thread connecting all of this is simple: picking an AI development company in the USA is fundamentally a compliance and governance choice, not just a geography or cost decision. The wrong partner may build fast, but you’ll pay in fines, emergency shutdowns, and reputation damage when regulators eventually knock.

The right partner designs regulatory compliance into the architecture: privacy-by-design, security controls, clear audit trails, and robust bias testing baked into the model lifecycle. They can show you how their approach aligns with CPRA/CCPA, the Colorado Privacy Act, federal expectations, and your sector mandates.

You now have a concrete checklist for exposing real compliance maturity—from data residency and DPIAs to SOC 2, HIPAA, model governance, and offshore access. Share it with your risk, compliance, and security teams; use it to benchmark vendors; and insist on answers that stand up to regulator-level scrutiny.

If you’re ready to reframe AI as a regulated capability rather than a side project, we’d be happy to help. Start with a discovery session to map your specific regulatory landscape and design a compliant AI roadmap tailored to your business and risk profile.

FAQ

How should a US enterprise evaluate an AI development company in the USA beyond location and price?

Look at compliance maturity instead of geography. Ask about data flows, logging, retention, model governance, and how they support CPRA/CCPA rights and sector rules. A mature vendor will show you concrete architectures, processes, and sample documentation, not just assurances that they are “US-based.”

What specific compliance questions should I ask a US-based AI vendor about CPRA and CCPA?

Ask how they implement data minimization, purpose limitation, and sensitive data handling in AI pipelines. Probe how individuals can exercise access, deletion, correction, and opt-out rights when decisions are made by models. Request examples of DPIA-style assessments for automated decision-making and how these impact design choices.

How do I verify that an AI development company truly understands SOC 2 and HIPAA requirements?

Request their latest SOC 2 report (Type II if possible) and ask which Trust Services Criteria are in scope for your project. For HIPAA, confirm whether they sign BAAs, how PHI is segregated, and how access and logging are controlled for regulated data. Ask them to walk you through a recent healthcare or financial architecture and explain where each control lives in the design.

What does a compliance-aware AI architecture look like compared with a standard AI implementation?

Compliance-aware architectures classify data, apply minimization and retention rules, and enforce data residency from the start. They include detailed logging, role-based access, encryption, and model governance features such as versioning, approvals, and audit trails. Standard pipelines often treat these as optional add-ons, which creates significant regulatory and operational risk.

How can I confirm where my AI training and production data are stored and processed (data residency)?

Require a detailed data flow diagram and location inventory as part of the engagement. Insist that the contract and DPA specify approved storage and processing regions, including any subcontractors or cloud services. A strong vendor will also offer technical enforcements and monitoring to ensure data doesn’t silently move outside agreed jurisdictions.

What should an acceptable model audit trail include to satisfy US regulatory expectations?

A robust audit trail documents data sources and versions, feature engineering steps, training configurations, experiment IDs, and validation metrics. It also records approvals, deployment history, change logs, and monitoring results such as drift and bias tests. This gives you the traceability regulators and internal auditors expect for high-impact AI systems.

How should AI vendors document and test for bias to align with emerging US AI legislation?

Vendors should define clear metrics and segment performance across relevant groups where lawful, checking for disparate error rates or outcomes. They should run these tests before deployment and periodically afterward, documenting methods, results, and remediation steps. Look for practices that align with fair lending and EEO-style analyses, which regulators already understand well.

How can offshore or low-maturity AI development partners increase regulatory risk for US companies?

Hidden offshore access to production or training data can undermine data residency commitments and raise security concerns. Low-maturity vendors often lack DPIAs, formal model approvals, and adequate logging, making it hard to respond to DSARs or regulator inquiries. In a crisis, these gaps can force you to pause systems or accept unfavorable settlements.

Which contract and data processing agreement clauses are critical when hiring a US AI development partner?

Focus on clauses covering data residency, retention and deletion, subcontractor disclosures, and breach notification timelines. Clarify model and data ownership, reuse rights, and your audit rights over relevant controls. A good DPA will mirror your regulatory obligations so accountability is clearly shared and enforceable.

How does Buzzi.ai’s regulation-first approach to AI development reduce the risk of fines or shutdowns?

We embed regulatory scoping, DPIA-style assessments, and governance checkpoints into every stage—from discovery through deployment and monitoring. Our architectures prioritize data residency, logging, audit trails, and bias testing, giving clients regulator-ready documentation and clear accountability. You can learn more about this approach in our AI discovery and regulatory scoping services, which help map your regulatory landscape before you scale AI initiatives.