AI Agents for Business That Protect Value, Not Just Automate

Learn where AI agents for business actually add value, where they don’t, and how to design a selective, process-fit deployment roadmap that protects outcomes.

Many companies risk losing more value than they create with AI agents for business. The technology is not the problem; the problem is putting agents in the wrong places. The instinct is to “automate everything” instead of asking a simpler question: where does an AI agent actually fit the process?

AI business agents are not magic workers. They’re software entities that can perceive context, make decisions, and act across your tools. Put them in the right workflows and you unlock meaningful business process automation; put them in the wrong ones and you get slower cycles, frustrated customers, and messy exception queues.

Executives feel real pressure to “do something with AI.” Boards ask about AI deployment strategy; vendors pitch end-to-end automation; competitors brag about headcount savings. In that environment, it’s easy to ship agents into every touchpoint—and quietly degrade both customer experience (CX) and employee experience (EX).

The constraint isn’t just model quality. It’s process-fit: how stable your workflow is, how clear the rules are, how good your data and knowledge are, and how often exceptions occur. This article gives you a practical scorecard and decision framework to decide where agents belong, where humans stay, and where simpler scripts or RPA are a better answer.

We’ll walk through what AI agents for business really are, why blanket rollouts backfire, how to score your processes, and how to design hybrid human–agent workflows that don’t break. At Buzzi.ai, we build tailor-made agents and AI voice bots for WhatsApp with this selective mindset; the playbook here distills what we’ve seen work in the real world.

What Are AI Agents for Business, Really?

Before you can place AI agents for business intelligently, you need a clear mental model of what they are, and what they are not. The label “AI agent” is now slapped on everything from basic scripts to advanced multi-agent systems, which makes serious planning difficult.

From Scripts to Agents: The Capability Shift

In a business context, think of autonomous AI agents as software entities that can perceive context, reason over options, and take actions in your systems to pursue a defined goal. They don’t just answer questions; they log into tools (via APIs), update records, route requests, and trigger workflows. They operate semi-autonomously, within guardrails you set.

Compare that to a script or classic RPA bot. Those tools follow a fixed, deterministic sequence: click here, copy this, paste there. They’re excellent for stable, rules-based workflows—like daily reconciliations or batch data loads—but brittle when anything unexpected happens. This is the essence of task automation vs agents: scripts automate steps, agents pursue outcomes.

Now consider chatbots. Many “AI chatbots” are just decision trees with a conversational UI—great for FAQ flows, but they can’t act across systems. An AI agent for support, by contrast, might read an incoming ticket, retrieve the customer’s account data, check eligibility for a refund, and then trigger the refund workflow in your billing system.
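The refund example below is a minimal Python sketch of “scripts automate steps, agents pursue outcomes,” written as an agent loop. It rests on several assumptions. The function `choose_action` is a hypothetical stand-in for the model’s reasoning step; it uses a few hard-coded rules so the sketch runs. The functions `fetch_account`, `check_eligibility`, and `issue_refund` are hypothetical stubs for CRM and billing APIs. The guardrails are an allow-list of tools and a step budget. If an action falls outside either one, the loop stops and flags the case for a human.

```python
from typing import Any, Callable, Dict, Optional, Set

State = Dict[str, Any]
Tool = Callable[[State], State]


# Hypothetical tools; a real deployment would call CRM and billing APIs here.
def fetch_account(state: State) -> State:
    return {**state, "account": {"order_total": 40.0, "days_since_order": 10}}


def check_eligibility(state: State) -> State:
    account = state["account"]
    # Hypothetical refund policy: within 30 days and at most 100.00.
    ok = account["days_since_order"] <= 30 and account["order_total"] <= 100.0
    return {**state, "eligible": ok}


def issue_refund(state: State) -> State:
    return {**state, "refunded": state["account"]["order_total"]}


TOOLS: Dict[str, Tool] = {
    "fetch_account": fetch_account,
    "check_eligibility": check_eligibility,
    "issue_refund": issue_refund,
}


def choose_action(state: State) -> Optional[str]:
    """Stand-in for the model: pick the next tool, or None when done."""
    if "account" not in state:
        return "fetch_account"
    if "eligible" not in state:
        return "check_eligibility"
    if state["eligible"] and "refunded" not in state:
        return "issue_refund"
    return None  # goal reached, or nothing more the agent may do


def run_agent(state: State, allowed: Set[str], max_steps: int = 5) -> State:
    """Decide, check guardrails, act; stop when done or out of budget."""
    for _ in range(max_steps):
        action = choose_action(state)
        if action is None:
            return state
        if action not in allowed:  # guardrail: tool not permitted
            return {**state, "escalate": f"needs human: {action}"}
        state = TOOLS[action](state)
    return {**state, "escalate": "step budget exhausted"}
```

When every tool is allowed, the loop fetches the account, checks eligibility, and issues the refund. If `issue_refund` is removed from `allowed`, the same ticket is escalated instead. In a real agent, the model rather than fixed rules would choose the next step, and that is why the guardrails matter.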

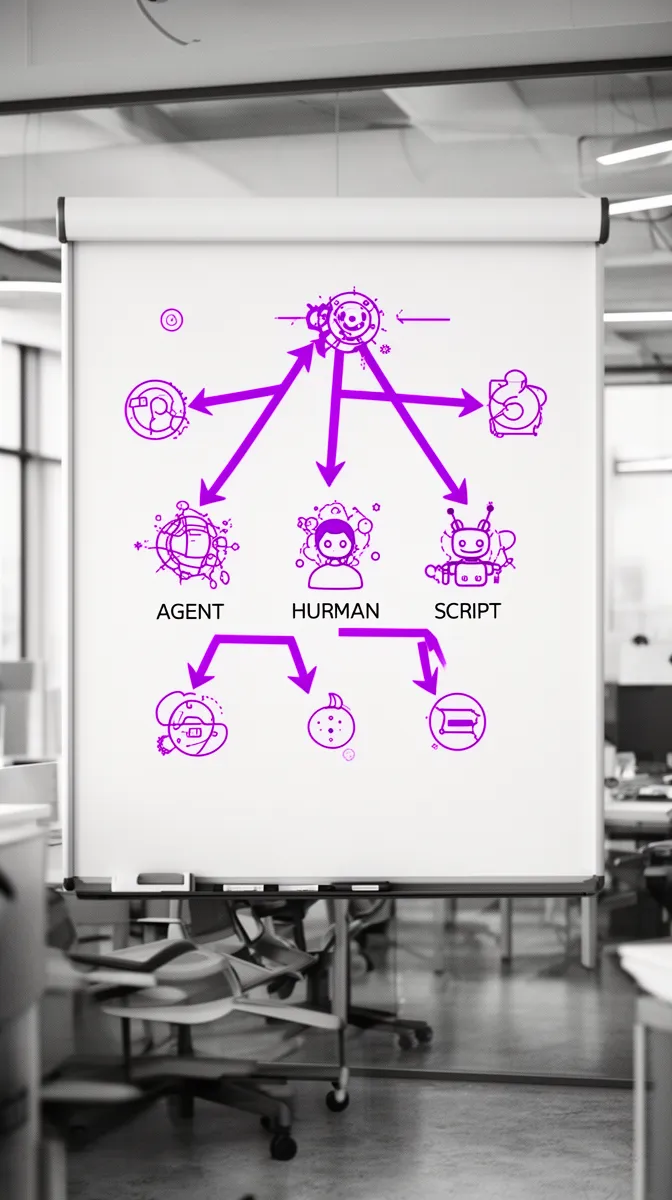

Architecture adds more nuance. In multi-agent systems, you might orchestrate a group of specialized agents—one for classification, one for research, one for action execution—through a central brain or workflow orchestration layer. But it’s important to see these as implementation details, not value guarantees. Multi-agent complexity doesn’t replace the need for good business process design.

Industry analysts like McKinsey describe this shift as a move from rules-based automation to learning-based, context-aware automation that can handle messier knowledge work. The opportunity is real. The challenge is directing that capability only where your processes can support it.

Where Agents Fit in the Automation Spectrum

It helps to place AI business agents on a spectrum alongside other tools. Imagine a line that goes: SOPs → scripts/RPA → APIs → decision engines → AI agents → humans.

At the left, you have documented Standard Operating Procedures (SOPs) where humans follow step-by-step instructions. Move right and you get scripts/RPA and API integrations—classic business process automation that excels in highly structured environments. Decision engines add codified rules and scoring; they’re deterministic but more expressive.

AI agents live between decision engines and humans. They shine where tasks require context, flexible choices, and navigation across imperfect systems—exactly where rules-based workflows fall apart. They’re powerful for intelligent workflow automation but still bounded by the quality of your processes and data.

Humans remain at the far right: they handle high ambiguity, novel problems, and emotionally sensitive situations. The key shift is to treat AI agents for business as one tool in your business process design toolkit, not a universal upgrade for everything humans or scripts do today.

The Hidden Risk: Indiscriminate AI Agent Rollout

Once leaders see what AI agents can do in a demo, the temptation is obvious: “Let’s roll this out across all our customer interactions.” That’s where your AI deployment strategy can quietly turn into a liability.

Why Blanket Agent Strategies Destroy Value

Boards ask pointed questions about AI, vendors promise end-to-end automation, and the media celebrates headcount reductions. Under that pressure, many organizations launch “agent everywhere” initiatives as part of sweeping digital transformation programs. It feels bold; in practice, it often increases friction.

Misplaced agents slow things down. Put an agent into a process with high ambiguity and weak data, and you’ll see more back-and-forth, more manual exception handling, and more “sorry, I didn’t understand that” loops. Customers get frustrated when agents fumble edge cases; employees get frustrated when they must constantly rescue the system.

There’s also hidden operational complexity. Each new agent requires monitoring, change management, compliance review, and support. When you scatter dozens of agents across poorly understood workflows, process governance becomes a nightmare: no one owns the end-to-end journey, and fixes are band-aids, not structural.

We’ve seen this pattern echoed in external case studies. For example, high-profile chatbot rollouts covered in outlets like the Wall Street Journal show that over-automated support can tank NPS when complex exceptions are mishandled. The technology isn’t the problem; putting it in the wrong jobs is.

3 Common Failure Patterns in Agent Deployments

When you look across failed agent projects, the same three patterns recur.

Failure pattern 1: Agents in high-stakes, low-data, ambiguous decisions. Think complex underwriting decisions, nuanced HR actions, or sensitive dispute resolutions. In one bank, an AI agent was allowed to send preliminary loan denial emails without full policy awareness; the backlash from customers and regulators forced a rollback within weeks.

Failure pattern 2: Agents in processes with undefined or outdated SOPs. If you don’t have current Standard Operating Procedures, your process variability is already high for humans. Dropping an agent into that chaos just amplifies inconsistency. The agent learns from messy precedent, bakes in contradictory patterns, and generates outcomes no one can defend.

Failure pattern 3: Agents added on top of broken processes. Instead of fixing root causes—unclear ownership, duplicate systems, ad-hoc workarounds—teams add AI as a quick fix. You get automation of chaos: the agent faithfully executes a bad flow faster, creating more rework and support tickets downstream. Research contrasting RPA and AI-based automation, such as studies summarized by Deloitte, consistently warns against automating before standardizing.

These patterns are your early warning system for where not to use AI agents in business. When you see them, your priority should be process governance and redesign, not more automation.

A Process-Fit Scorecard for AI Agents in Business Workflows

To move from vibes to rigor, you need a structured way to evaluate where AI agents for business actually fit. That’s the role of a process-fit scorecard: a simple, repeatable lens you can apply across workflows before committing to automation.

The Four Dimensions of Process Fit

We recommend assessing each candidate workflow along four dimensions: process stability, rule clarity, data/knowledge quality, and exception rate. Together, these give you a practical process assessment for AI agent implementation.

Process stability asks: how often do the steps change? A monthly tweak is fine; weekly reinvention is a red flag. Rule clarity asks whether the decisions in the process can be expressed as policies or guidelines that a system can learn or reference.

Data and knowledge quality focuses on completeness, consistency, and accessibility. Are the required inputs available in digital systems? Do you have up-to-date SOPs and a maintained knowledge base? Poor data quality and patchy knowledge management are the fastest way to sabotage an agent.

Exception rate measures how frequently non-standard scenarios appear. If more than, say, 30–40% of cases require human judgment beyond documented rules, your process variability is high and automation should be cautious.

To make this actionable, score each dimension from 1 to 5:

- 1 = very weak (e.g., unstable, unclear, low-quality, or exception-heavy)

- 3 = moderate (some issues, but manageable)

- 5 = strong (stable, clear, high-quality, low exceptions)

In general, AI agents for business workflows perform best when at least three of these four dimensions score 4 or 5. If two or more dimensions score 1–2, treat the process as high-risk for agents. In that case, consider simpler process automation or human-only improvements first.
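The rule of thumb above can be written as a small check. This is an illustrative sketch, not a standard. `ProcessFit`, `dimension_check`, and the middle label “needs closer review” are hypothetical names; that label covers the cases the rule does not decide. Exception rate is scored so that 5 means few exceptions, which matches the scale above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProcessFit:
    """Scores 1-5 per dimension; for exception_rate, 5 means few exceptions."""
    stability: int
    rule_clarity: int
    data_quality: int
    exception_rate: int

    def values(self) -> List[int]:
        vals = [self.stability, self.rule_clarity, self.data_quality, self.exception_rate]
        for v in vals:
            if not 1 <= v <= 5:
                raise ValueError(f"each dimension must be scored 1-5, got {v}")
        return vals


def dimension_check(fit: ProcessFit) -> str:
    vals = fit.values()
    strong = sum(v >= 4 for v in vals)  # dimensions scoring 4 or 5
    weak = sum(v <= 2 for v in vals)    # dimensions scoring 1 or 2
    if weak >= 2:
        return "high-risk for agents"
    if strong >= 3:
        return "good agent fit"
    return "needs closer review"  # hypothetical label for the in-between cases
```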

Build a Simple Process-Fit Scorecard

Combine those dimensions into a single total out of 20. There are four criteria at 1–5 points each, so totals range from 4 to 20. This total becomes a lightweight decision aid for AI agent adoption in your business.

Use these thresholds:

- 16–20: Strong candidate for agent-first automation.

- 11–15: Hybrid agent + human; consider agents for sub-steps.

- 6–10: Look at scripts/RPA or improving the process before adding agents.

- 4–5: Keep human-led for now; focus on clarifying rules and data.
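As a sketch, the banding can be written as a small Python function. `fit_recommendation` is a hypothetical name, and the band labels paraphrase the list above. Each criterion scores at least 1, so the lowest possible total is 4.

```python
from typing import Tuple


def fit_recommendation(stability: int, rule_clarity: int,
                       data_quality: int, exception_rate: int) -> Tuple[int, str]:
    """Sum four 1-5 scores and map the total to the bands listed above."""
    scores = (stability, rule_clarity, data_quality, exception_rate)
    if any(not 1 <= s <= 5 for s in scores):
        raise ValueError("each dimension must be scored 1-5")
    total = sum(scores)  # ranges from 4 to 20
    if total >= 16:
        rec = "agent-first automation"
    elif total >= 11:
        rec = "hybrid agent + human"
    elif total >= 6:
        rec = "scripts/RPA or fix the process first"
    else:
        rec = "keep human-led for now"
    return total, rec
```

For the support-triage example later in this section, `fit_recommendation(4, 4, 4, 3)` returns `(15, 'hybrid agent + human')`.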

Apply the scorecard step by step: map the workflow, identify decision points, then rate each dimension. This gives you a quantitative starting point for discussing where AI agents fit in your process automation strategy and how value will be realized.

Consider two example workflows.

Inbound support triage. Steps are relatively stable, rules are moderately clear (based on categories and SLAs), data comes from digital tickets, and exception rates are manageable. You might score: stability 4, rule clarity 4, data quality 4, exception rate 3 = 15/20. That suggests a hybrid model where an agent classifies, routes, and answers simple questions—great for operational efficiency—while humans handle complex issues.

Complex pricing approvals. Steps change often, rules live in emails and slide decks, data is scattered, and every large deal is “special.” You might score: stability 2, rule clarity 2, data quality 2, exception rate 1 = 7/20. That points away from agents; better choices are standardizing policies, tightening governance, and perhaps using AI only as a drafting assistant, not an autonomous agent.

By starting with 5–10 candidate processes and ranking them by score, you create a rational, defensible roadmap instead of an AI hype wishlist.
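Once each process has four scores, ranking the candidate list is simple. The sketch below assumes a hypothetical `shortlist` helper and a `min_total` cutoff of 11, the bottom of the hybrid band. The two examples use the scores from the text. The IT helpdesk and invoice processing scores are placeholders for illustration, not measurements.

```python
from typing import Dict, List, Tuple

Scores = Tuple[int, int, int, int]  # stability, rule clarity, data quality, exception rate


def shortlist(candidates: Dict[str, Scores], top_n: int = 10,
              min_total: int = 11) -> List[Tuple[str, int]]:
    """Rank processes by total fit score; keep those at or above min_total."""
    ranked = sorted(
        ((name, sum(scores)) for name, scores in candidates.items()),
        key=lambda item: (-item[1], item[0]),  # highest total first, then by name
    )
    return [(name, total) for name, total in ranked if total >= min_total][:top_n]


candidates = {
    "inbound support triage": (4, 4, 4, 3),     # example from the text
    "complex pricing approvals": (2, 2, 2, 1),  # example from the text
    "internal IT helpdesk": (4, 4, 3, 3),       # illustrative placeholder
    "invoice processing": (5, 4, 4, 4),         # illustrative placeholder
}
```

Here `shortlist(candidates)` keeps invoice processing (17), support triage (15), and the IT helpdesk (14). It drops pricing approvals (7).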

A Decision Framework: Where Agents, Humans, and Scripts Each Win

The scorecard tells you which processes look promising. You still need a clear AI agent deployment framework to decide who does what inside each workflow: agents, humans, or scripts.

Designing the Decision Tree for Agent Placement

Think of this as a verbal decision tree you can sketch on a whiteboard. At each branch, you choose among AI agents, traditional automation, and human work.

Step 1: Process criticality. Ask: if this process fails, what’s the impact—minor annoyance, revenue impact, or regulatory risk? For high-stakes flows, default to human-first with agent assistance; for moderate-stakes, agent-first can be acceptable within guardrails.

Step 2: Data and knowledge readiness. Use your earlier assessment. If data and SOPs are weak, choose “fix process before automating.” If they’re solid, proceed to the next branch.

Step 3: Exception rate. If exceptions are rare, scripts or RPA may be enough; they’re cheaper and simpler. If exceptions are moderate and well-understood, agents are attractive because they can route edge cases to humans while automating the rest.

Step 4: User experience sensitivity. For customer-facing steps with high emotional stakes—like complaint handling—bias towards human-first or tight human-in-the-loop. For back-office data shuffling, scripts and agents can dominate.
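The four steps can be sketched as a single function. This is one possible reading of the tree, and the labels are hypothetical. `stakes` and `exceptions` are coarse categories you would assign during process mapping. The steps above do not cover a high exception rate, so that branch defaults to human-first as an assumption.

```python
from typing import Literal

Stakes = Literal["low", "moderate", "high"]
Exceptions = Literal["rare", "moderate", "high"]


def place_step(stakes: Stakes, data_ready: bool,
               exceptions: Exceptions, ux_sensitive: bool) -> str:
    # Step 1: process criticality.
    if stakes == "high":
        return "human-first, agent assists"
    # Step 2: data and knowledge readiness.
    if not data_ready:
        return "fix process before automating"
    # Step 3: exception rate.
    if exceptions == "rare":
        choice = "script/RPA"
    elif exceptions == "moderate":
        choice = "agent-first, escalate edge cases to humans"
    else:
        return "human-first"  # assumption: this branch is not spelled out in the text
    # Step 4: user experience sensitivity.
    if ux_sensitive:
        return "human-first or tight human-in-the-loop"
    return choice
```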

Walk through a customer onboarding journey. Scripts and APIs can validate IDs and sync records between systems. An AI agent might handle routine “what’s the status of my application?” messages and guide users through document uploads. A human handles edge cases, fraud concerns, or customers who need nuanced explanations. One process, three different tools—each in its best-fit role for business process design and customer journey automation.

Concrete Examples: Where Each Approach Excels

To make this concrete, it helps to group workflows by their natural bias.

Agent-first processes. These include support triage, repetitive email responses, FAQ-style policy questions, simple order changes, and many other customer service workflows. Here, context matters but policies are clear and data is digital. Agents can read incoming content, consult knowledge bases, and act in systems.

Script/RPA-first processes. Think deterministic data transfers between systems, nightly batch jobs, or ledger reconciliations. Once your business rules are defined, classic automation beats agents on cost and predictability. This is traditional business process automation at its best.

Human-first processes. These include complex negotiations, bespoke contract structuring, sensitive HR conversations, high-stakes exceptions, and novel problem-solving. Here, task automation vs agents is the wrong framing; the right framing is “How do we augment humans?” AI can draft emails, summarize context, or propose options, but decisions stay with people.

Consider a side-by-side example. In customer service, an AI agent can authenticate a user, pull their recent orders, answer shipping questions, and process a simple refund. In finance operations, posting 10,000 invoices into an ERP each night is better handled by RPA with strict validations. The art of your AI deployment strategy is to mix these so the end-to-end journey flows smoothly.

Where AI Agents Consistently Work—and Where They Don’t

Once you apply the scorecard and decision tree, clear patterns emerge about when you should use AI agents in your business and when to avoid them. This is where selective deployment turns into a competitive advantage.

High-Fit Use Cases for AI Business Agents

High-fit processes have four traits: high volume, moderate complexity, clear policies, and digital data sources. They’re important enough to matter but not so ambiguous that each case is bespoke. This is where AI agents for business typically deliver their strongest ROI.

Common examples include:

- Inbound support classification and routing. An agent reads incoming tickets or messages, detects intent, and sends them to the right queue. This is exactly the pattern behind many successful AI agents for support ticket routing and triage.

- Proactive CX notifications. Agents watch for key events (shipment delays, payment issues) and reach out with personalized updates.

- Internal knowledge assistants. Employees ask, “What’s the SOP for X?” and an agent pulls the latest policy, citing sources.

- Lead qualification and enrichment. Agents research inbound leads, enrich CRM records, and propose next-best actions.

- Routine status updates across channels. Agents answer “Where’s my order?” or “Has my request been approved?” on web, email, and WhatsApp.

At Buzzi.ai, for example, we’ve seen strong results with AI voice bots on WhatsApp in emerging markets. These are AI business agents tuned to specific CX flows, such as order status, appointment reminders, and simple changes, where policies are clear and customer expectations are transactional. They slot neatly into customer service workflows and broader customer journey automation.

Red Flag Processes for Agents

Conversely, some patterns consistently correlate with failure and frustration. These are your “where not to use AI agents” zones.

Red flags include very high exception rates, poorly documented policies, fragmented or low-quality data, heavy regulatory subjectivity, and strong emotional sensitivity. When multiple of these show up together, treat the process as human-first and use AI only as an assistant.

Examples:

- Termination decisions. Firing someone is not something you want an agent to trigger, even with a checklist.

- Nuanced legal interpretations. Contract language often hinges on subtle, context-specific tradeoffs; AI can draft, but lawyers must decide.

- Bespoke enterprise contract negotiation. Every large customer has unique constraints; process variability is the point.

- Complex medical triage without clinician oversight. Mistakes here are life-and-death, not just operational.

Picture a legal contract review agent suggesting risky clauses to speed deals up. Without strong human review, the organization could take on hidden liabilities. In these domains, sound exception handling and responsible AI principles point the same way: keep humans firmly in control, and let AI support research and drafting, not autonomous decisions.

Designing Hybrid Human–Agent Workflows That Don’t Break

Pure automation is rarely optimal. The most effective AI agents for business live inside deliberately hybrid journeys, where humans and agents collaborate and each does what they do best.

Orchestrating Hand-offs and Escalations

The foundation is solid human-in-the-loop design. That means clear escalation triggers, visible state, and reversible actions. Agents should know exactly when to stop and ask for help rather than bluffing their way through.

Define escalation rules around uncertainty levels, sentiment, and policy boundaries. When the model’s confidence is low, or the user expresses frustration, or a decision bumps into a sensitive rule, the agent should route to humans with full context. That’s where an agent orchestration layer or broader workflow orchestration system matters: it manages routing, logging, and multi-agent coordination in one place.

Imagine a support workflow. An agent answers simple questions, processes basic changes, and gathers details for more complex issues. When it detects anger or policy ambiguity, it escalates to a human and passes along the conversation, the ticket history, and its own suggested resolution. Measured on outcomes like resolution time, accuracy, and satisfaction, these hybrid designs often outperform either humans or agents working alone.
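The sketch below shows these escalation triggers and the hand-off packet. `CONFIDENCE_FLOOR` and `SENTIMENT_FLOOR` are hypothetical threshold values. The sketch also assumes that upstream components already produce a confidence score and a sentiment score for each turn. In practice, both signals need calibration against pilot data.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

# Hypothetical thresholds; tune them from pilot data.
CONFIDENCE_FLOOR = 0.7
SENTIMENT_FLOOR = -0.4


@dataclass
class Turn:
    confidence: float              # assumed 0-1 confidence in the agent's answer
    sentiment: float               # assumed -1 (angry) to 1 (happy) score
    touches_sensitive_policy: bool
    conversation: List[str] = field(default_factory=list)
    suggested_resolution: Optional[str] = None


def escalation_reasons(turn: Turn) -> List[str]:
    """Collect every trigger that fires, not just the first."""
    reasons = []
    if turn.confidence < CONFIDENCE_FLOOR:
        reasons.append("low confidence")
    if turn.sentiment < SENTIMENT_FLOOR:
        reasons.append("user frustration")
    if turn.touches_sensitive_policy:
        reasons.append("policy boundary")
    return reasons


def route(turn: Turn, ticket_history: List[str]) -> Dict[str, Any]:
    """Keep the turn with the agent, or hand off to a human with full context."""
    reasons = escalation_reasons(turn)
    if not reasons:
        return {"owner": "agent"}
    return {
        "owner": "human",
        "reasons": reasons,
        "conversation": list(turn.conversation),
        "ticket_history": list(ticket_history),
        "suggested_resolution": turn.suggested_resolution,
    }
```

The function returns every triggered reason rather than only the first. That gives both the human reviewer and your monitoring the full picture of why the agent stepped back.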

Protecting CX and EX in Agent-Augmented Journeys

Hybrid design is ultimately about protecting customer and employee experience. Customers should always know they can reach a human; employees should feel supported, not policed, by automation.

On the UX side, be explicit about what the agent can and cannot do. Give users fast, visible ways to transfer to a human, and provide transparent status updates (“I’ve handed you to our billing specialist; they can see everything we discussed”). For employees, design internal tools where agents handle low-value tasks—summaries, drafts, lookups—so people can focus on judgment and relationship work.

Industry reports on human-in-the-loop best practices, such as those from Gartner, consistently find that hybrid journeys outperform pure automation on KPIs like resolution time, accuracy, and satisfaction. This is where AI agents shine: as force multipliers inside well-governed systems. When you’re ready to go deeper, partnering with specialists for AI agent development services helps encode these patterns from day one.

Data, Knowledge, and Governance: Preconditions for Reliable Agents

Even the best AI agent deployment framework will fail without solid data, knowledge, and governance foundations. Agents are amplifiers: they multiply the quality of what you feed them, good or bad.

Get the Data and Knowledge House in Order First

Start by clarifying the minimum readiness requirements for your AI implementation roadmap. Systems must be accessible via APIs or integration layers; core entities need consistent schemas; SOPs and policies must be up to date; and you should have structured knowledge bases to support retrieval-augmented generation (RAG).

If your data quality is poor—duplicate records, missing fields, conflicting sources—agents will make wrong decisions and give inconsistent answers. Weak knowledge management means they hallucinate policies or quote outdated guidance. This is where standard operating procedures and curated knowledge hubs pay off.

Techniques like process mining and process mapping can help. Process mining tools, described in research from IEEE and by vendors like Celonis, analyze event logs to reveal how work actually flows, not how it’s documented. That gives you a map of data touchpoints and bottlenecks before you deploy agents. Companies that clean up their flows and knowledge first tend to see higher accuracy and fewer escalations later.
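The minimal Python sketch below shows the core idea behind process mining. It rebuilds each case’s path from an event log of (case ID, activity, timestamp) records. It then counts path variants and directly-follows pairs. This is a toy illustration of the technique, not any vendor’s API, and the log is made-up example data.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, str, int]  # (case_id, activity, timestamp)


def traces(events: Iterable[Event]) -> Dict[str, List[str]]:
    """Group events by case and order each case's activities by time."""
    by_case: Dict[str, List[Tuple[int, str]]] = defaultdict(list)
    for case_id, activity, ts in events:
        by_case[case_id].append((ts, activity))
    return {case: [act for _, act in sorted(evs)] for case, evs in by_case.items()}


def variants(events: Iterable[Event]) -> Counter:
    """Count how many cases follow each distinct path."""
    return Counter(tuple(path) for path in traces(events).values())


def directly_follows(events: Iterable[Event]) -> Counter:
    """Count how often activity a is immediately followed by activity b."""
    dfg: Counter = Counter()
    for path in traces(events).values():
        for a, b in zip(path, path[1:]):
            dfg[(a, b)] += 1
    return dfg


# Made-up example log: three support cases.
log = [
    ("1", "receive", 1), ("1", "classify", 2), ("1", "resolve", 3),
    ("2", "receive", 1), ("2", "classify", 2), ("2", "reassign", 3),
    ("2", "classify", 4), ("2", "resolve", 5),
    ("3", "receive", 1), ("3", "classify", 2), ("3", "resolve", 3),
]
```

Two of the three cases follow the happy path. The `reassign` to `classify` pair exposes a rework loop, which is exactly the kind of bouncing between teams you want to find before placing an agent.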

Governance, Monitoring, and Guardrails for Business Agents

Next comes governance. Each agent should have a clear business owner, defined responsibilities, and a change management process for its policies and logic. That’s the heart of sound process governance and AI governance.

Monitoring must go beyond uptime. Track accuracy, exception rates, user satisfaction, and any domain-specific risks like bias or unfair treatment in finance or healthcare. Maintain audit logs of decisions, especially for high-stakes processes, and document runbooks for how to respond when metrics drift.
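Drift checks like the runbook trigger described above can start simply. The sketch below compares a current window of metrics with a baseline. It flags any metric that has worsened by more than an absolute tolerance. The metric names, the 0–1 scales, and the 0.05 tolerance are all assumptions to adapt to your own KPIs.

```python
from typing import Dict, List

# True if a higher value is better for that metric (assumed metric set).
HIGHER_IS_BETTER = {"accuracy": True, "csat": True, "exception_rate": False}


def drift_alerts(baseline: Dict[str, float], current: Dict[str, float],
                 tolerance: float = 0.05) -> List[str]:
    """Flag metrics that have worsened by more than `tolerance` (absolute)."""
    alerts = []
    for metric, higher_better in HIGHER_IS_BETTER.items():
        delta = current[metric] - baseline[metric]
        worsened_by = -delta if higher_better else delta
        if worsened_by > tolerance:
            alerts.append(f"{metric}: {baseline[metric]:.2f} -> {current[metric]:.2f}")
    return alerts
```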

Industry studies from Gartner and IDC on AI project failure rates highlight governance gaps as a key cause of value leakage. To avoid those traps, treat agents like new team members: onboard them, set clear policies, regularly review performance, and document decisions. For a deeper dive into this mindset, see resources like an enterprise AI governance playbook tailored to your sector.

A Phased AI Agent Implementation Roadmap for Mid-Sized Enterprises

With all the pieces on the table, how do you actually roll out AI agents for business process automation in a way that protects value? The answer is a phased roadmap that treats automation as a product, not a one-off project.

Phase 1: Discover, Map, and Score Processes

Start with discovery. Identify your top 20–30 processes by volume, cost, and customer impact. This list is the raw material for your AI agent process assessment and broader business process design.

Use process mapping to document current flows: who does what, in which systems, with what inputs and outputs. Basic time-and-motion analysis—how long each step takes, how often work bounces between teams—will highlight where process automation could yield big gains.

Then apply your process-fit scorecard. For each workflow, rate stability, rule clarity, data/knowledge quality, and exception rate to generate a total score out of 20. Shortlist the 5–10 highest-scoring workflows as pilot candidates. Typical candidates include support triage, invoice processing, internal IT helpdesk, and lead qualification. This kind of work sits squarely in the sweet spot for deploying AI agents in business processes.

Phase 2 and 3: Pilot, Scale, and Institutionalize

Phase 2 is all about piloting. Choose 1–3 high-fit workflows and design hybrid human–agent models explicitly. Define KPIs upfront: handle time, accuracy, NPS, cost per transaction, and exception rate. This is where you prove KPI improvement and tangible value realization.

Run limited pilots with clear success criteria and feedback loops. Treat each agent like a product: gather user feedback, iterate prompts and guardrails, adjust routing rules, and refine the orchestration. As you move into Phase 3 (Scale), expand to adjacent processes, integrate agents into existing workflow and ticketing systems, and formalize an internal playbook for future deployments.

A realistic AI implementation roadmap for a mid-sized enterprise spans 6–12 months: months 0–2 for discovery and scoring, 3–6 for pilots, and 7–12 for scaling successful patterns. Change management is continuous throughout. Your teams need to understand how their roles evolve, not vanish. If you want a structured partner for this journey, Buzzi.ai’s AI discovery and process assessment services are designed exactly for this phase.

Conclusion: Treat AI Agents as Precision Tools, Not Blanket Automation

AI agents for business are powerful, but power cuts both ways. Used indiscriminately, they amplify broken processes, confuse customers, and create governance headaches. Used selectively, they unlock scalable, intelligent workflow automation that compounds over time.

The difference comes down to process-fit. By evaluating stability, rule clarity, data/knowledge quality, and exception rate, you can make disciplined choices about where agents belong, where scripts or RPA dominate, and where humans must stay in control. A simple scorecard and decision tree turn “AI strategy” from buzzword to operating practice.

The most resilient organizations won’t be those with the most automation. They’ll be the ones with the best-designed hybrid human–agent systems, underpinned by strong data and governance. If you’re ready to audit one or two critical workflows and see what’s truly high-fit for agents, we’d be glad to help design a tailored discovery and deployment plan through our AI agent development services.

FAQ

What are AI agents for business and how do they differ from chatbots or RPA?

AI agents for business are software entities that can understand context, make decisions, and take actions across your systems to achieve a goal. Unlike simple chatbots, they aren’t limited to answering questions; they can update records, trigger workflows, and coordinate with other tools. Compared to RPA, which follows fixed scripts, agents can handle more variability and use knowledge bases to adapt within defined boundaries.

How can I quickly tell if a process is a good candidate for AI agents?

Look for four traits: stable steps, clear rules or policies, high-quality digital data, and a manageable exception rate. If at least three of these are strong, the process is likely a good candidate for agent-first or hybrid automation. If rules are fuzzy, data is messy, and exceptions dominate, focus on clarifying and standardizing before introducing agents.

What process characteristics signal that AI agents will likely fail or add friction?

Red flags include very high process variability, missing or outdated SOPs, fragmented or low-quality data, and heavy reliance on tacit knowledge. When combined with high emotional or regulatory stakes, agents can easily generate inconsistent or risky outcomes. In these cases, AI should remain an assistant for research and drafting, not an autonomous decision-maker.

How do I build a practical decision tree for AI agent adoption in my organization?

Start from process criticality, then branch on data and knowledge readiness, exception rate, and UX sensitivity. At each node, decide whether the step should be agent-first, script/RPA-first, human-first, or postponed until the process is fixed. Sketching this decision tree for a few key journeys creates a reusable pattern for evaluating future automation opportunities.

Which business workflows typically see the best ROI from AI agents?

Workflows like support triage, repetitive email responses, lead qualification, simple order changes, and routine status updates often deliver strong ROI. They combine high volume with moderate complexity and clear rules, making them ideal for selective automation. Customer service channels—including WhatsApp voice bots in emerging markets—are particularly fertile ground when designed with a process-fit lens.

Where should I avoid using AI agents and keep humans in control?

Avoid deploying autonomous agents in workflows involving terminations, complex legal interpretations, bespoke enterprise contract negotiations, and high-risk medical or financial decisions. These flows tend to have high exception rates, ambiguous policies, and serious downside risk. AI can still help by drafting, summarizing, or researching, but final judgment should remain firmly with experienced humans.

How do I design hybrid workflows where AI agents and humans collaborate effectively?

Define clear hand-off rules based on uncertainty, sentiment, and policy boundaries, and ensure agents pass full context when escalating. Make it easy for customers and employees to reach a human at any time, and be transparent about what the agent can and cannot do. Over time, refine these rules based on performance data so that agents and humans each handle the work they’re best suited for.

What KPIs should I track to measure AI agent performance in production?

Core KPIs include handle time, first-contact resolution, accuracy, exception rate, and user satisfaction (e.g., NPS or CSAT). You should also monitor escalation patterns, error types, and any domain-specific risk indicators like compliance flags. Together, these metrics show whether agents are actually improving outcomes or just shifting work around.

What data and knowledge foundations do I need before deploying AI agents?

You need accessible systems with consistent schemas, up-to-date SOPs and policies, and curated knowledge bases for the agent to reference. Investing in process mapping and data quality improvements upfront reduces downstream errors and escalations. In many organizations, this “prep work” is where most of the long-term reliability gains come from.

How can Buzzi.ai help my company design a selective, high-fit AI agent strategy?

Buzzi.ai specializes in building tailor-made AI agents and voice bots that are tightly aligned with your actual workflows and constraints. We help you discover and score candidate processes, design hybrid human–agent journeys, and implement the governance and data foundations required for safe scaling. To explore a structured approach, you can start with our AI discovery and process assessment and then move into full agent development and deployment.